Virtual dressing image generation method and system

A virtual dressing image technology, applied in the field of virtual dressing images, that addresses problems of 3D clothing modeling such as technical barriers, high computing costs, and long production cycles. It achieves optimized fitting images, a small amount of computation, and reduced storage space and network transfer time.

Examples

Embodiment 1

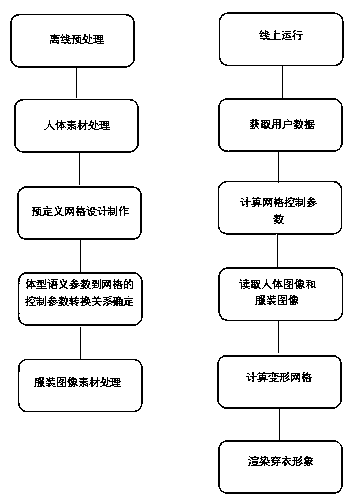

[0061] This embodiment of the present invention provides offline preprocessing, which includes:

[0062] Standard human body material processing;

[0063] Predefined grid design and production;

[0064] Determination of the conversion relationship between body shape semantic parameters and grid control parameters;

[0065] Clothing image material processing.
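This excerpt does not specify the conversion relationship named in [0064]. As a minimal sketch, assuming the relationship is linear (an assumption, not stated in the source), a conversion from semantic body-shape offsets to grid control parameters might look like the following. The parameter names, the `WEIGHTS` table, and the function `semantic_to_control` are all hypothetical.

```python
# Hypothetical sketch: the linear form, parameter names, and weights are
# illustrative assumptions, not taken from the patent text.

# Each grid control parameter is modeled as a weighted sum of semantic
# body-shape offsets, measured relative to the standard human body.
WEIGHTS = {
    "waist_scale_x": {"waist_cm": 0.010, "weight_kg": 0.002},
    "hip_scale_x": {"hip_cm": 0.010, "weight_kg": 0.001},
    "torso_scale_y": {"height_cm": 0.005},
}


def semantic_to_control(semantic_offsets):
    """Map semantic offsets to control parameters (1.0 means no deformation)."""
    control = {}
    for name, row in WEIGHTS.items():
        delta = sum(w * semantic_offsets.get(key, 0.0) for key, w in row.items())
        control[name] = 1.0 + delta
    return control


# A body whose waist is 10 cm larger and weight 5 kg higher than the standard.
params = semantic_to_control({"waist_cm": 10.0, "weight_kg": 5.0})
```

Because such a table would be fixed during offline preprocessing, the online step reduces to a few multiplications per control parameter.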

[0066] In a specific embodiment, the shape and skin of a standard human body are provided, and the shape of the standard human body is consistent with the contour projected under the parameters of the camera used to photograph models wearing the clothing.

[0067] In a specific embodiment, the standard human body is captured with a perspective close to that of the human eye: a camera lens with a focal length of 35 mm, 50 mm, or 85 mm is used, and the camera is placed 2 to 4 meters from the model.
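To give a feel for these numbers, the sketch below estimates how much of the scene's height fits in the frame for each focal length and distance. It uses the pinhole approximation and assumes a full-frame 36 mm x 24 mm sensor held in portrait orientation; the source gives no sensor size, so that value and the function name `vertical_coverage_m` are assumptions.

```python
# Assumption: a full-frame 36 mm x 24 mm sensor in portrait orientation.
# The source states only the focal lengths and the shooting distance.
SENSOR_LONG_MM = 36.0


def vertical_coverage_m(focal_mm, distance_m, sensor_mm=SENSOR_LONG_MM):
    """Scene height covered at a given distance (pinhole approximation)."""
    return distance_m * sensor_mm / focal_mm


for focal in (35.0, 50.0, 85.0):
    for distance in (2.0, 4.0):
        height = vertical_coverage_m(focal, distance)
        print(f"{focal:.0f} mm at {distance:.0f} m covers {height:.2f} m")
```

Under these assumptions, a 50 mm lens at 4 m covers about 2.88 m of height, while an 85 mm lens at 2 m covers only about 0.85 m, so a full-length shot with the longer lens would need the far end of the distance range or more.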

[0068] In a specific embodiment, the human body skin is processed according to the skin of a live model, and the resu...

example

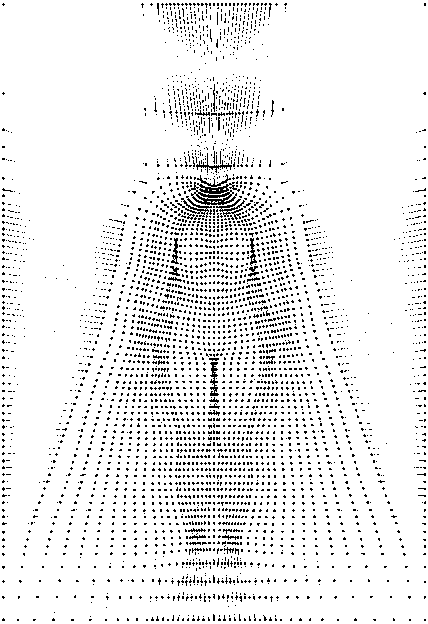

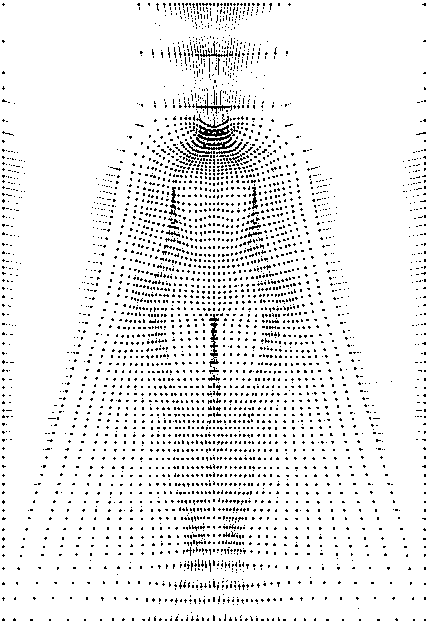

[0099] In a specific embodiment, Figure 8 shows the deformed mesh under the control parameters.

[0100] In a specific embodiment, the dressed image is rendered: the deformed dressed image is generated from the grid data and textures obtained above, either through GPU rendering or through CPU pixel calculation.
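The patent text does not detail the CPU pixel calculation. One common way to do it, sketched here under assumptions, is to rasterize each grid triangle: the deformed vertex positions decide which output pixels are covered, and the fixed texture coordinates decide which texel each pixel receives. The function names, the data layout, and the nearest-neighbor sampling are illustrative choices, not the patent's method.

```python
# Hypothetical sketch of CPU pixel calculation; names and data layout are
# assumptions for illustration only.

def edge(ax, ay, bx, by, px, py):
    """Signed area term used to build barycentric coordinates."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def draw_triangle(out, texture, pts, uvs):
    """Rasterize one triangle. pts are deformed pixel positions; uvs are the
    fixed texture coordinates in [0, 1] for the same three vertices."""
    (x0, y0), (x1, y1), (x2, y2) = pts
    area = edge(x0, y0, x1, y1, x2, y2)
    if area == 0:
        return  # degenerate triangle, nothing to draw
    th, tw = len(texture), len(texture[0])
    min_x = max(int(min(x0, x1, x2)), 0)
    max_x = min(int(max(x0, x1, x2)) + 1, len(out[0]))
    min_y = max(int(min(y0, y1, y2)), 0)
    max_y = min(int(max(y0, y1, y2)) + 1, len(out))
    for y in range(min_y, max_y):
        for x in range(min_x, max_x):
            px, py = x + 0.5, y + 0.5  # sample at the pixel center
            w0 = edge(x1, y1, x2, y2, px, py) / area
            w1 = edge(x2, y2, x0, y0, px, py) / area
            w2 = 1.0 - w0 - w1
            if w0 < 0 or w1 < 0 or w2 < 0:
                continue  # pixel center lies outside the triangle
            u = w0 * uvs[0][0] + w1 * uvs[1][0] + w2 * uvs[2][0]
            v = w0 * uvs[0][1] + w1 * uvs[1][1] + w2 * uvs[2][1]
            tx = min(int(u * tw), tw - 1)  # nearest-neighbor texel lookup
            ty = min(int(v * th), th - 1)
            out[y][x] = texture[ty][tx]


def render(texture, vertices, uvs, triangles, width, height, background=0):
    """Draw every triangle of the deformed grid into a new image."""
    out = [[background] * width for _ in range(height)]
    for i, j, k in triangles:
        draw_triangle(out, texture,
                      [vertices[i], vertices[j], vertices[k]],
                      [uvs[i], uvs[j], uvs[k]])
    return out


# A 2x2 texture on one quad whose deformed shape is stretched to 4x2 pixels.
texture = [[1, 2], [3, 4]]
uvs = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
deformed = [(0.0, 0.0), (4.0, 0.0), (4.0, 2.0), (0.0, 2.0)]
image = render(texture, deformed, uvs, [(0, 1, 2), (0, 2, 3)], 4, 2)
# image == [[1, 1, 2, 2], [3, 3, 4, 4]]
```

A GPU performs the same interpolation in hardware when it draws textured triangles, which is why the grid data and the texture can be handed to either path unchanged.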

[0101] In a specific embodiment, after the dressed image is generated, the user can readjust the body shape parameters. In that case the texture does not need to change; only the deformation grid needs to be recalculated. The user can also change the clothing, in which case only the texture needs to be replaced.
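The separation described in [0101] can be illustrated with a small state object that keeps the deformed grid and the garment texture independent. This is a sketch only: the class `DressingSession`, the stand-in `deform_grid` function, and the file names are hypothetical and not taken from the patent.

```python
# Hypothetical sketch: class, function, and file names are illustrative
# assumptions, not part of the patent text.

def deform_grid(base_grid, scale_x):
    """Stand-in deformation: scale each vertex horizontally about x = 0."""
    return [(x * scale_x, y) for x, y in base_grid]


class DressingSession:
    def __init__(self, base_grid, texture):
        self.base_grid = base_grid
        self.texture = texture
        self.grid = deform_grid(base_grid, 1.0)
        self.grid_updates = 0
        self.texture_swaps = 0

    def set_body_shape(self, scale_x):
        """Body shape changed: recompute the grid, keep the texture."""
        self.grid = deform_grid(self.base_grid, scale_x)
        self.grid_updates += 1

    def set_garment(self, texture):
        """Garment changed: swap the texture, keep the deformed grid."""
        self.texture = texture
        self.texture_swaps += 1


session = DressingSession([(0.0, 0.0), (1.0, 0.0), (1.0, 1.0)], "shirt.png")
session.set_body_shape(1.2)       # only the grid is recomputed
grid_after_reshape = session.grid
session.set_garment("coat.png")   # only the texture is replaced
```

After both calls, the grid object produced by the body-shape change is still the one in use, so a garment swap costs no deformation work.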

[0102] In a specific embodiment, Figures 9, 10, 11, and 12 compare two different garments before and after deformation.

Embodiment 2

[0104] This embodiment of the present invention provides an offline preprocessing unit, which includes:

[0105] A standard human body material processing module;

[0106] A predefined grid design and production module;

[0107] A module for determining the conversion relationship between body shape semantic parameters and grid control parameters;

[0108] A garment image material processing module.

[0109] In a specific embodiment, a standard human body shape and skin module is provided, and the standard human body shape is consistent with the contour projected under the parameters of the camera used to photograph models wearing the clothing.

[0110] In a specific embodiment, the standard human body is captured with a perspective close to that of the human eye: a camera lens with a focal length of 35 mm, 50 mm, or 85 mm is used, and the camera is placed 2 to 4 meters from the model.

[0111] In a specific embodiment, the human body skin module in this implementation is obtained according to t...
