A low-bit efficient deep convolutional neural network hardware acceleration design method, module and system based on logarithmic quantization

A deep convolutional neural network technology, applied in the field of artificial neural network hardware implementation, that addresses problems such as high computational complexity, large area and energy consumption, and high multiplier hardware complexity, with the effects of reducing hardware complexity, simplifying the design method, and reducing repeated reads.


Embodiment Construction

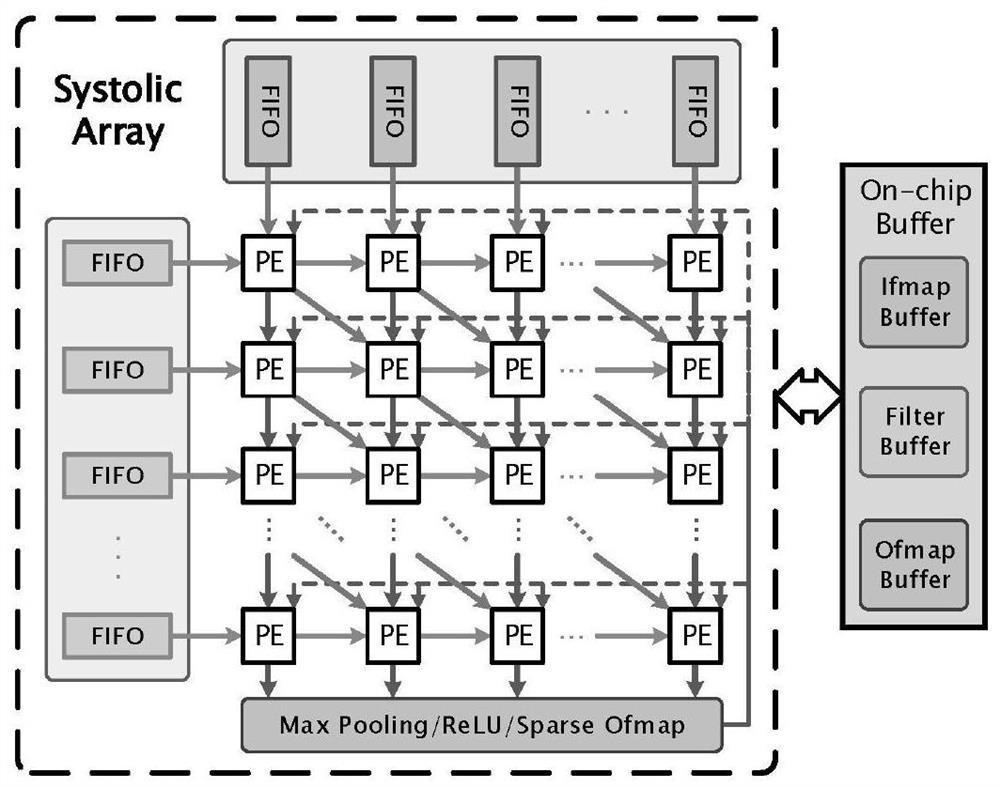

[0028] The technical solution of the present invention is further described below with reference to specific embodiments.

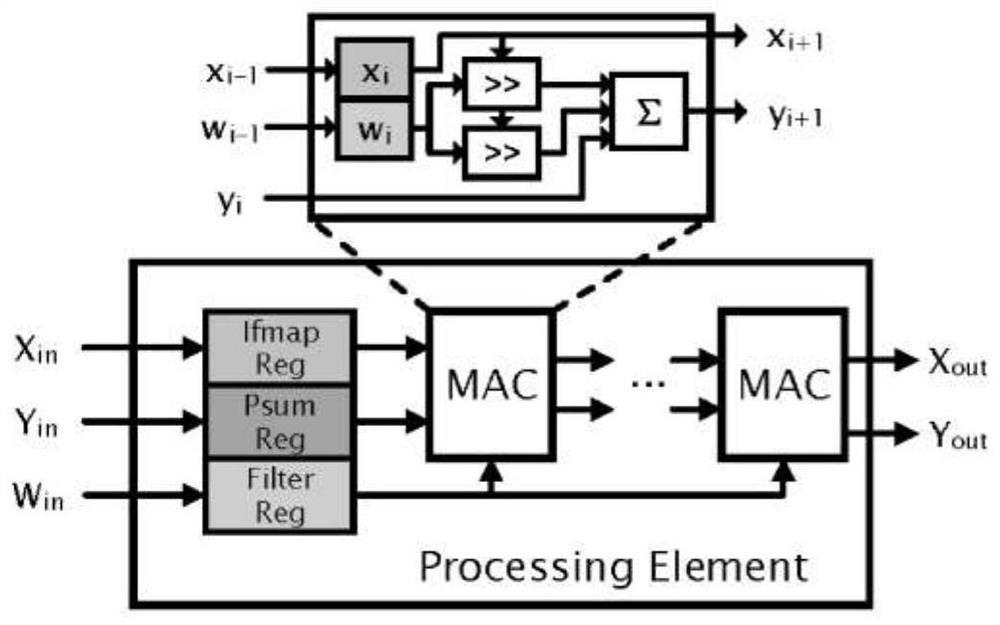

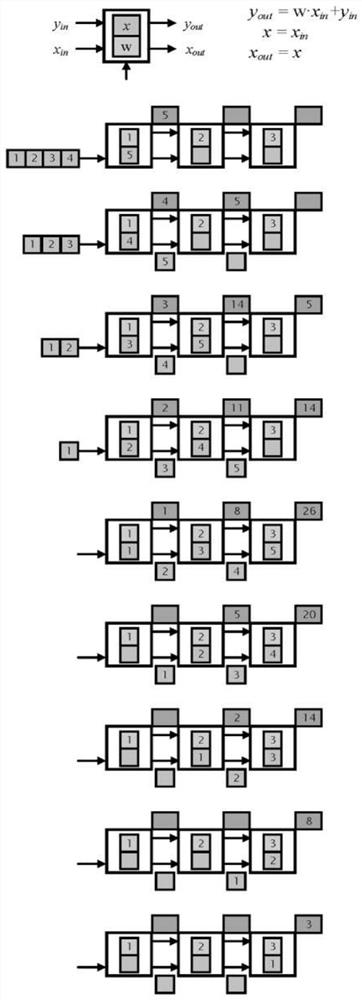

[0029] This specific embodiment discloses a low-bit high-efficiency deep convolutional neural network hardware acceleration design method based on logarithmic quantization, including the following steps:

[0030] S1: Implement low-bit, high-precision non-uniform fixed-point quantization in the logarithmic domain, and use multiple quantization codebooks to quantize the full-precision pre-trained neural network model;

[0031] S2: Control the quantization range by introducing offset shift parameters. For extremely low-bit non-uniform quantization, an algorithm that adaptively searches for the optimal quantization strategy compensates for the quantization error.
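Steps S1 and S2 can be illustrated with a minimal Python sketch. It is not the patented algorithm: the function names `log_quantize` and `best_shift`, the squared-error criterion, the candidate shift range, and the separate handling of zero are all assumptions made for illustration, and the sketch uses a single codebook rather than the multiple codebooks named in S1. Each weight is mapped to a signed power of two whose exponent is clamped to a window ending at the shift parameter, and the shift with the lowest total error is selected.

```python
import math

def log_quantize(w, bits, shift):
    """Quantize one weight to sign * 2**e, with e limited to a window ending at `shift`.

    Hypothetical helper: one bit is spent on the sign, the rest on the exponent.
    """
    if w == 0.0:
        return 0.0  # assumption: zero is handled outside the exponent codebook
    levels = 2 ** (bits - 1)          # number of representable exponents
    e_max = shift                     # the shift parameter sets the top of the range
    e_min = shift - (levels - 1)
    e = round(math.log2(abs(w)))      # nearest exponent in the log domain
    e = max(e_min, min(e_max, e))     # clamp to the representable window
    return math.copysign(2.0 ** e, w)

def best_shift(weights, bits, candidates):
    """Pick the candidate shift with the lowest total squared quantization error."""
    def err(shift):
        return sum((w - log_quantize(w, bits, shift)) ** 2 for w in weights)
    return min(candidates, key=err)

# Illustrative weights, not taken from the source.
weights = [0.9, -0.45, 0.12, -0.03, 0.0]
shift = best_shift(weights, bits=3, candidates=range(-4, 3))
quantized = [log_quantize(w, 3, shift) for w in weights]
print(shift, quantized)  # 0 [1.0, -0.5, 0.125, -0.125, 0.0]
```

Because every nonzero quantized value is a power of two, multiplying an activation by such a weight reduces to a bit shift in fixed-point hardware, which is the usual motivation for logarithmic quantization when multiplier complexity is a concern.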

[0032] Usually, to simplify the hardware, uniform quantization to fixed-point numbers of a given bit width is applied to full-precision floating-p...

