Joint optimization method for adaptive video stabilization based on spatio-temporal consistency and feature-center EMD

A joint-optimization video stabilization technique, applicable to color TV components, TV system components, televisions, and similar fields, that suppresses jitter components while preserving motion trends.

Embodiment Construction

[0055] The present invention will be described in detail below in conjunction with the accompanying drawings and embodiments.

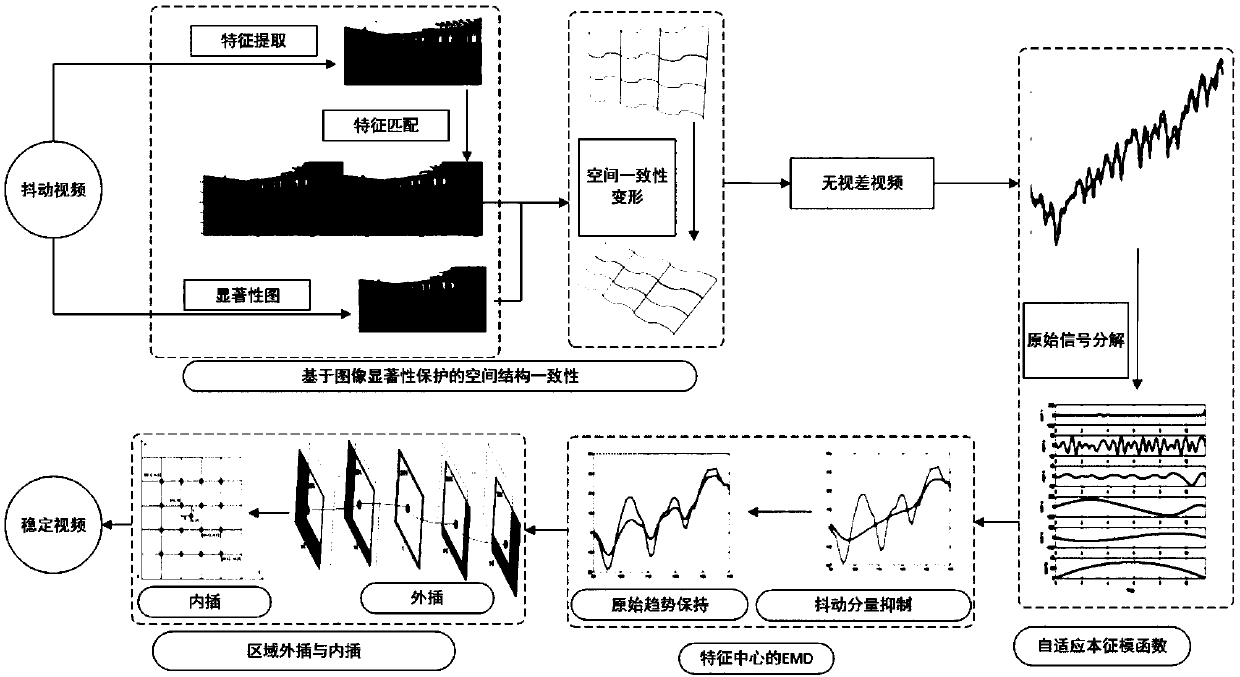

[0056] As shown in Figure 1, the steps of the present invention are:

[0057] (1) Extract image features with the SIFT method, perform feature matching to obtain saliency vectors, and use these as the reference for spatially consistent deformation.
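The feature-matching part of step (1) can be illustrated with a minimal sketch. It assumes the SIFT descriptors of two frames have already been extracted and are available as plain sequences of numbers. The nearest-neighbour ratio test and the `ratio=0.75` threshold are assumptions commonly paired with SIFT, not details stated in this description, and the function name `match_descriptors` is hypothetical.

```python
import math


def match_descriptors(desc_a, desc_b, ratio=0.75):
    """Return (i, j) index pairs where desc_a[i] is matched to desc_b[j].

    desc_a, desc_b: lists of equal-length numeric descriptors.
    ratio: assumed ratio-test threshold (not taken from the patent text).
    """
    matches = []
    for i, da in enumerate(desc_a):
        # Euclidean distance from this descriptor to every candidate.
        dists = sorted((math.dist(da, db), j) for j, db in enumerate(desc_b))
        if len(dists) < 2:
            continue  # the ratio test needs a best and a second-best candidate
        (best, j), (second, _) = dists[0], dists[1]
        # Keep the match only if it is clearly better than the runner-up.
        if best < ratio * second:
            matches.append((i, j))
    return matches
```

Ambiguous features, whose two closest candidates are almost equally distant, are discarded, so only distinctive correspondences are passed on to the deformation stage.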

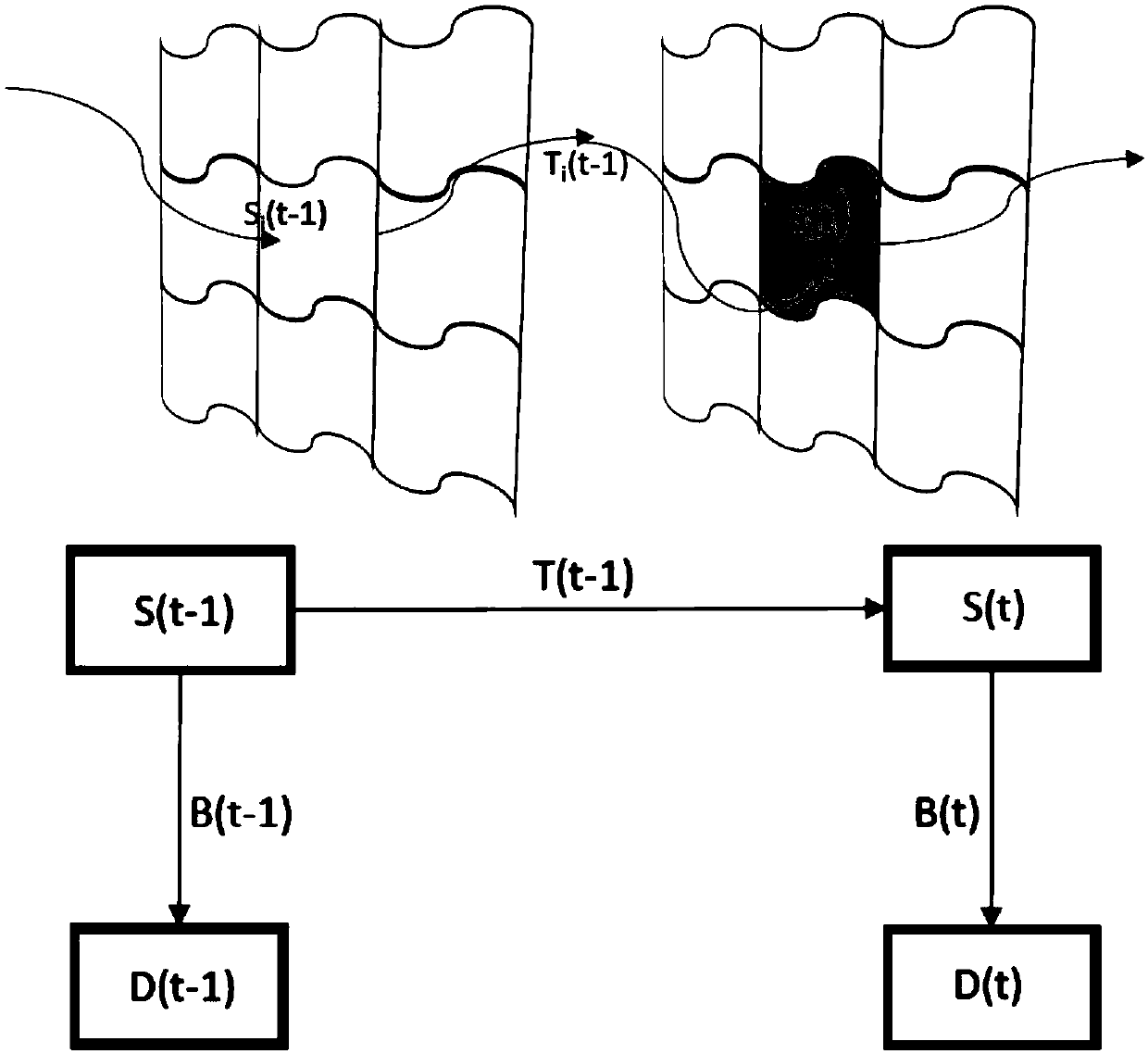

[0058] (2) Starting from the viewpoint position, deform each image frame obtained in step (1), reacquire the feature set, construct a SIFT-based spatial structure matrix, and extract rotation, translation, and scaling information to construct the original motion signal. A new motion signal is then obtained with the adaptive intrinsic mode function optimization algorithm.
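One way to extract rotation, translation, and scaling information from matched feature positions is a least-squares similarity fit, sketched below. This is an illustrative assumption, not the spatial structure matrix construction of the invention. The function name `estimate_similarity` is hypothetical, and points are treated as complex numbers so that the mapping reads `q = a * p + b`, with `a` encoding scale and rotation and `b` the translation.

```python
import cmath


def estimate_similarity(src, dst):
    """Least-squares rotation, scale and translation mapping src onto dst.

    src, dst: equal-length lists of (x, y) positions of matched features.
    Returns (angle_in_radians, scale, (tx, ty)).
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched point pairs")
    p = [complex(x, y) for x, y in src]
    q = [complex(x, y) for x, y in dst]
    p_mean = sum(p) / len(p)
    q_mean = sum(q) / len(q)
    # Closed-form solution of min sum |a * p_i + b - q_i|^2 over complex a, b.
    num = sum((pi - p_mean).conjugate() * (qi - q_mean) for pi, qi in zip(p, q))
    den = sum(abs(pi - p_mean) ** 2 for pi in p)
    if den == 0:
        raise ValueError("source points are all identical")
    a = num / den
    b = q_mean - a * p_mean
    return cmath.phase(a), abs(a), (b.real, b.imag)
```

Applying such a fit to each pair of consecutive frames gives one angle, one scale, and one translation per frame pair. Collected over time, these values form a per-parameter sequence that could serve as the kind of original motion signal the step describes.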

[0059] (3) The adaptive motion signal calculated in step (2) is used as a new input signal. According to the feature center algorithm, the motion trend of the original signal is protected as much as possible while the jitter ...
