Systems and methods for block-sparse recurrent neural networks

A neural network model and implementation method, applied in the field of machine learning systems, that can address problems of sparse formats such as irregularity, the inability to utilize array data paths, and ineffective utilization of hardware resources.
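As a rough illustration of why block structure helps, the sketch below zeroes whole blocks of a weight matrix rather than individual weights, so the surviving nonzeros form dense tiles that regular array hardware can process. The pruning criterion (largest magnitude in a block compared against a threshold), the function name `block_prune`, and the toy matrix are assumptions made for illustration and are not taken from this document.

```python
def block_prune(weights, block_rows, block_cols, threshold):
    """Zero every block whose largest absolute weight is below `threshold`.

    `weights` is a list of equal-length rows. The max-magnitude criterion
    and all names here are illustrative assumptions, not the patented method.
    Returns the pruned copy and the (block_row, block_col) indices kept.
    """
    n_rows, n_cols = len(weights), len(weights[0])
    pruned = [row[:] for row in weights]  # copy so the input is left untouched
    kept_blocks = []
    for r0 in range(0, n_rows, block_rows):
        for c0 in range(0, n_cols, block_cols):
            rows = range(r0, min(r0 + block_rows, n_rows))
            cols = range(c0, min(c0 + block_cols, n_cols))
            block_max = max(abs(weights[r][c]) for r in rows for c in cols)
            if block_max < threshold:
                # Drop the whole block at once, keeping the sparsity regular.
                for r in rows:
                    for c in cols:
                        pruned[r][c] = 0.0
            else:
                kept_blocks.append((r0 // block_rows, c0 // block_cols))
    return pruned, kept_blocks


# Hypothetical 4x4 weight matrix pruned in 2x2 blocks.
W = [
    [0.9, -0.1, 0.02, 0.01],
    [0.2, 0.4, -0.03, 0.00],
    [0.01, 0.02, 0.7, -0.6],
    [-0.04, 0.03, 0.5, 0.8],
]
pruned, kept = block_prune(W, block_rows=2, block_cols=2, threshold=0.1)
```

Only the block indices in `kept` and their dense contents would need to be stored, which is what distinguishes this layout from an irregular per-element sparse format.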


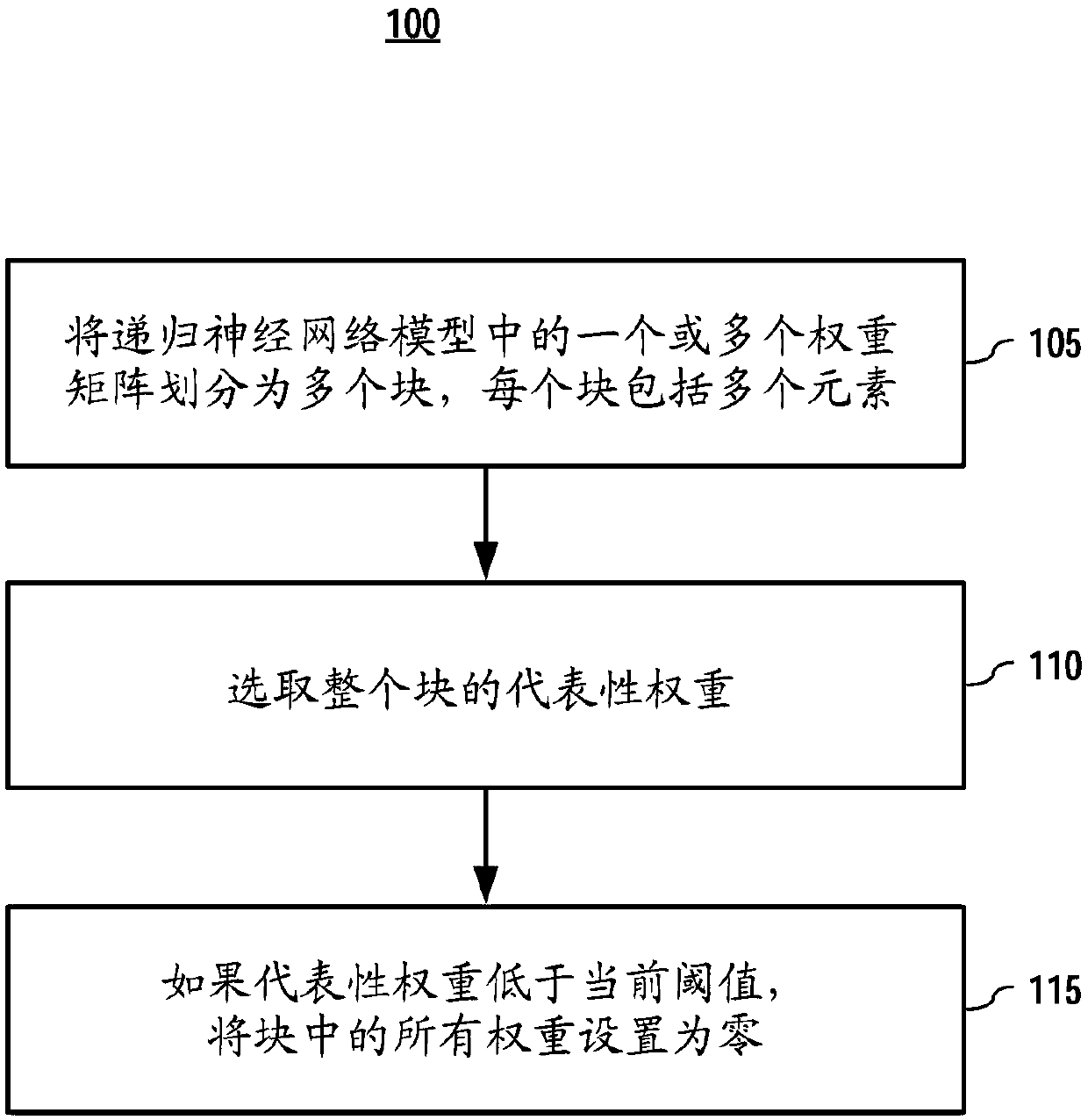

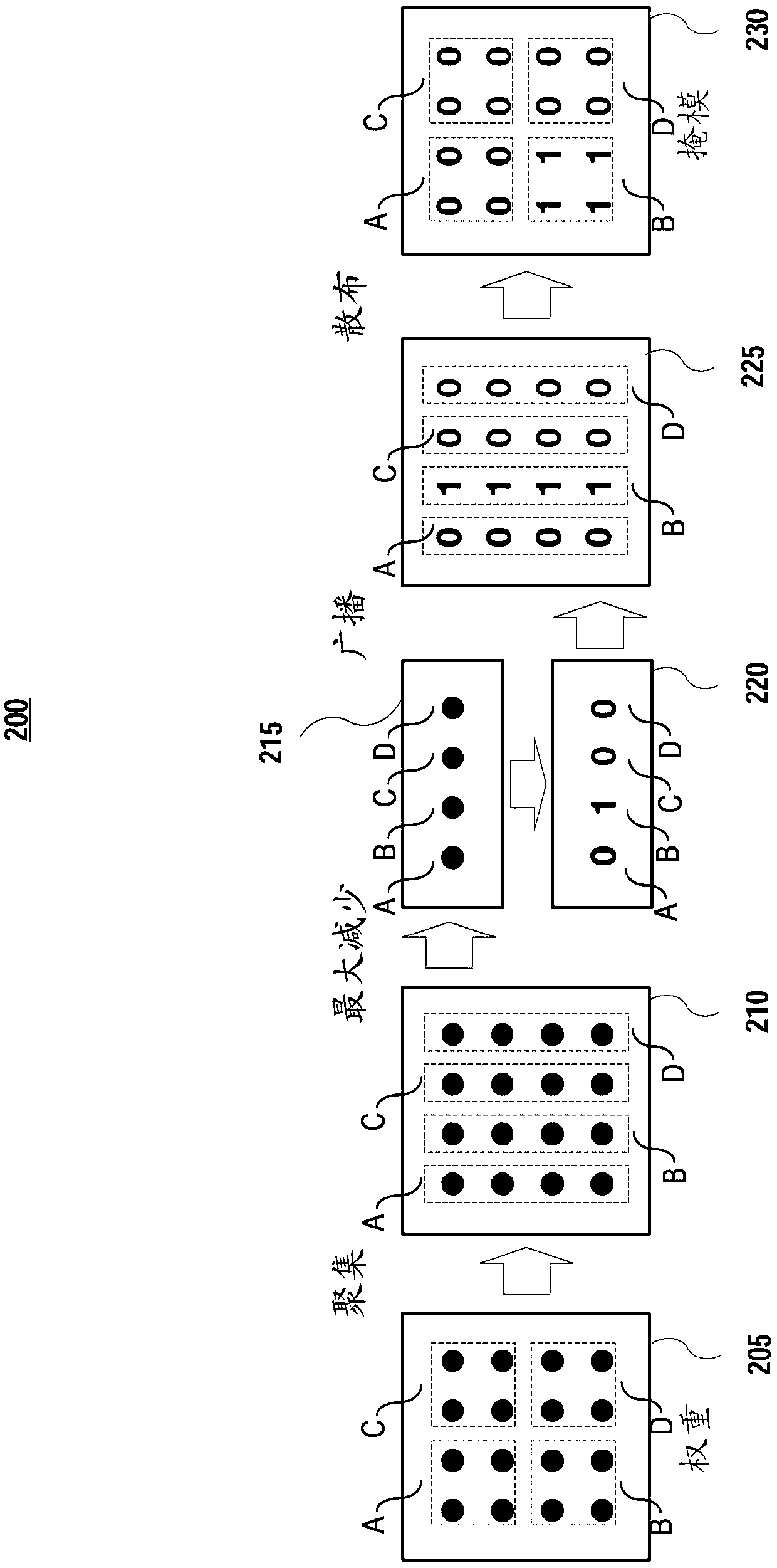

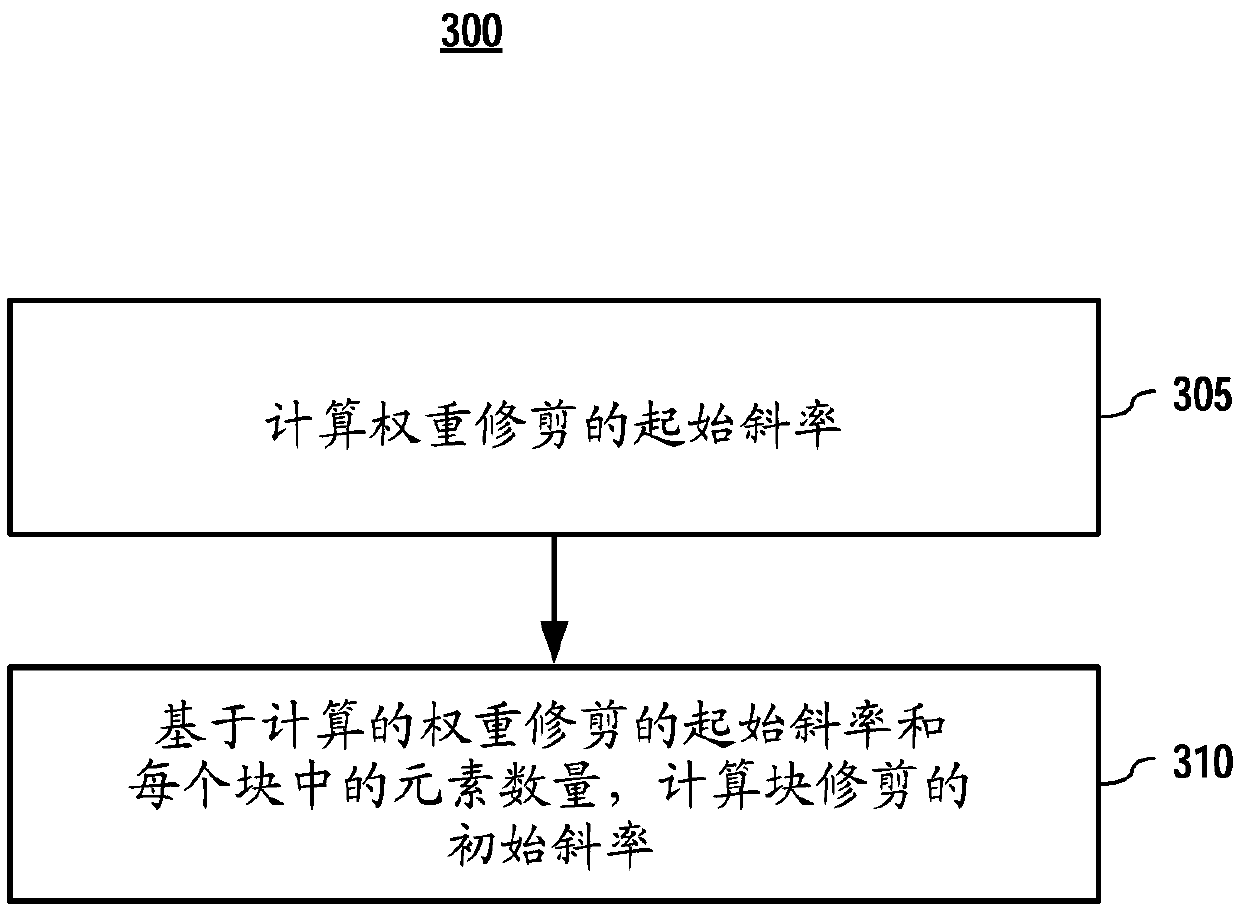


Embodiment approach

[0132] In embodiments, aspects of this patent document may relate to, may comprise, or may be implemented on one or more information handling systems / computing systems. A computing system may include any tool or aggregation of tools operable to compute, calculate, determine, classify, process, transmit, receive, retrieve, create, route, switch, store, display, communicate, authenticate, detect, record, reproduce, or otherwise utilize information, intelligence, or data of any kind. For example, a computing system can be or include a personal computer (e.g., a laptop), a tablet computer, a phablet, a personal digital assistant (PDA), a smart phone, a smart watch, a smart bag, a server (e.g., a blade server or rack server), a network storage device, a camera, or any other suitable device, and may vary in size, shape, performance, functionality, and price. A computing system may include random access memory (RAM), one or more processing resources (such as a central p...
