A parallel deep learning scheduling training method and system based on a container

A deep learning and training method technology, applied in the field of cloud computing and deep learning, can solve problems such as inability to isolate Task resources, inconvenient training tasks and logs, and a large amount of upper-level development, so as to improve resource utilization and computing resource utilization rate, increase the effect of scheduling tasks

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

Method used

Image

Examples

Embodiment 1

[0053] The container-based parallel deep learning scheduling training method of the present invention, the method is to use the Kubernetes container to realize the configuration and scheduling of the computing resources of the task, provide ResourceQuota, LimitRanger multiple resource management mechanisms, through the pod nodes in the container cluster Communication to achieve resource isolation between tasks; the same training node starts training pods and lifecycle management pods at the same time, and LCM performs unified resource job scheduling. The microservice architecture itself is deployed as a POD, relying on the latest version of Kubernetes to effectively mobilize GPUs When the K8S job crashes due to any failure reasons in OS, docker or machine failure, restart the microservice architecture and report the health of the microservice architecture; the training work is arranged in FIFO order by default, and LCM supports job priority. For each training task, LCM uses on-...

Embodiment 2

[0057] The container-based parallel deep learning scheduling training method of the present invention, the specific steps of the method are as follows:

[0058] S1. Pre-install the Kubernetes container (above 1.3) on the host machine, designate one pod as a scheduling node, one pod as a monitoring node, and n pods as task nodes;

[0059] S2. The scheduling node is responsible for submitting job tasks, and specifies a task node to perform a round of iterations through the scheduling algorithm; the scheduling algorithm is as follows:

[0060] (1) When the threshold is exceeded, the newly assigned computing node will transfer the computing task, and at this time there will be a spare task node (Pod);

[0061] (2), based on the resource (GPU) size occupied by the spare task node, set the weight (weight),

[0062] (3), the greater the resources occupied, the greater the weight;

[0063] (4) When a new node needs to be allocated when the threshold value is exceeded again, it is pr...

Embodiment 3

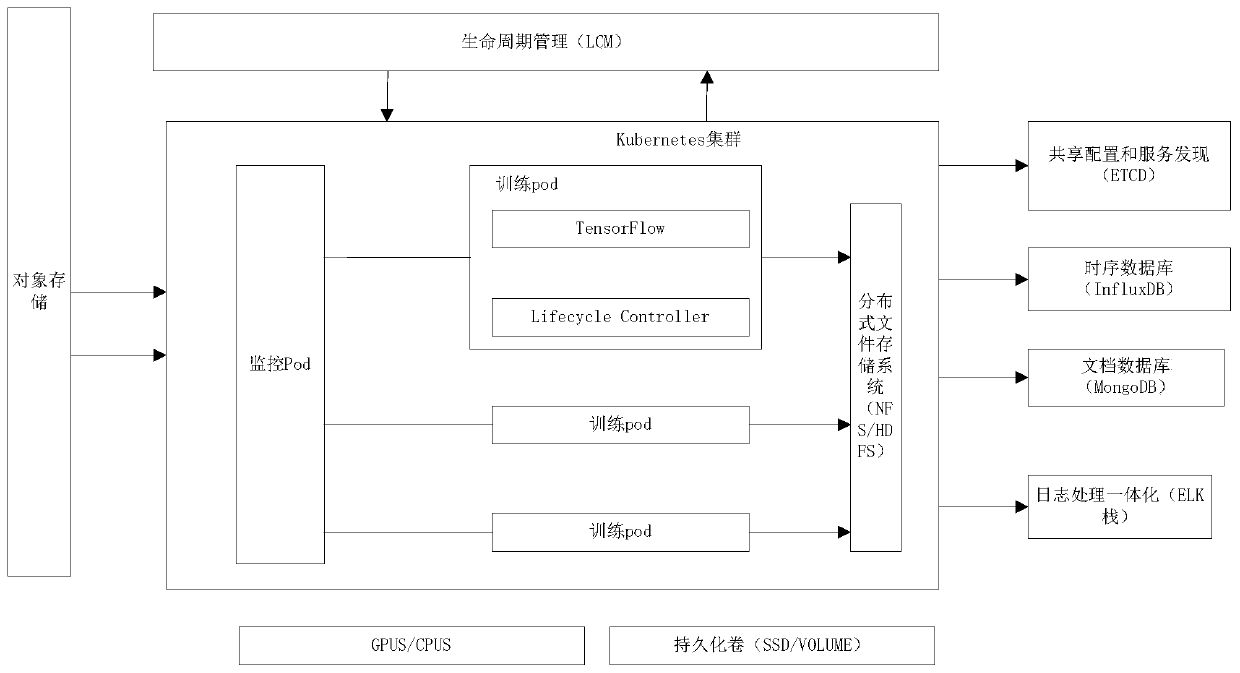

[0077] as attached figure 1 As shown, the container-based parallel deep learning scheduling training system of the present invention includes microservice architecture, learning training (DL), container cluster management and life cycle management (LCM);

[0078] Among them, the microservice architecture is used to reduce the coupling between components, keep each component single and as stateless as possible, isolate each other, and allow each component to be independently developed, tested, deployed, scaled and upgraded; and through dynamic registration RESTAPI service instance to achieve load balancing;

[0079] Learning and training (DL) is composed of a single learning node (Learning Pod) in the kubernetes container using GPU, and the user code instantiates the framework kubernetes service; usually, learning and training jobs use several GPUs / CPUs or are synchronized by several learning nodes in the Centralized parameter service is used on MPI; users submit training task...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com