Mobile robot visual following method based on deep reinforcement learning

The technology relates to mobile robots and reinforcement learning and is applied in the field of intelligent robots. It addresses problems such as high hardware and design costs, increased system cost, and impaired system performance. Its stated effects are good tolerance of lighting changes, a higher level of intelligence, and a reduced possibility of such problems.


Embodiment Construction

[0046] The software environment of this embodiment is Ubuntu 14.04. The mobile robot is a TurtleBot2, and its input sensor is a monocular color camera with a resolution of 640×480.

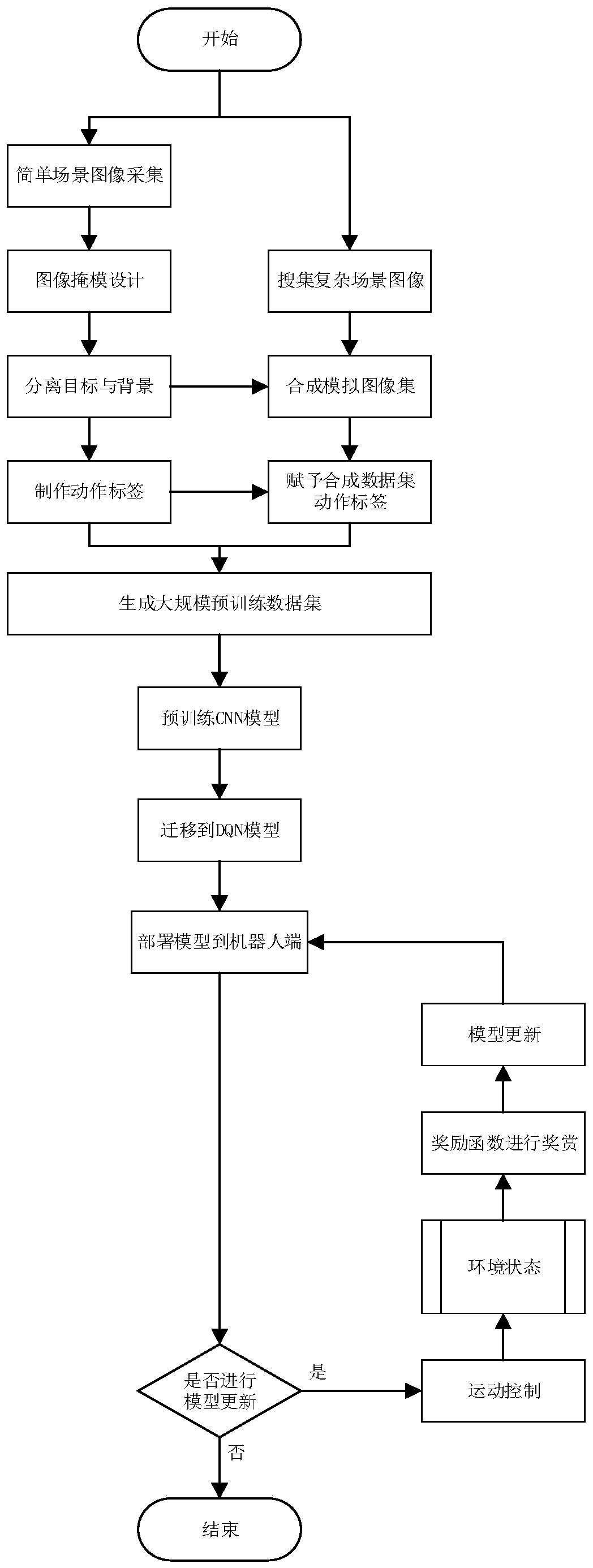

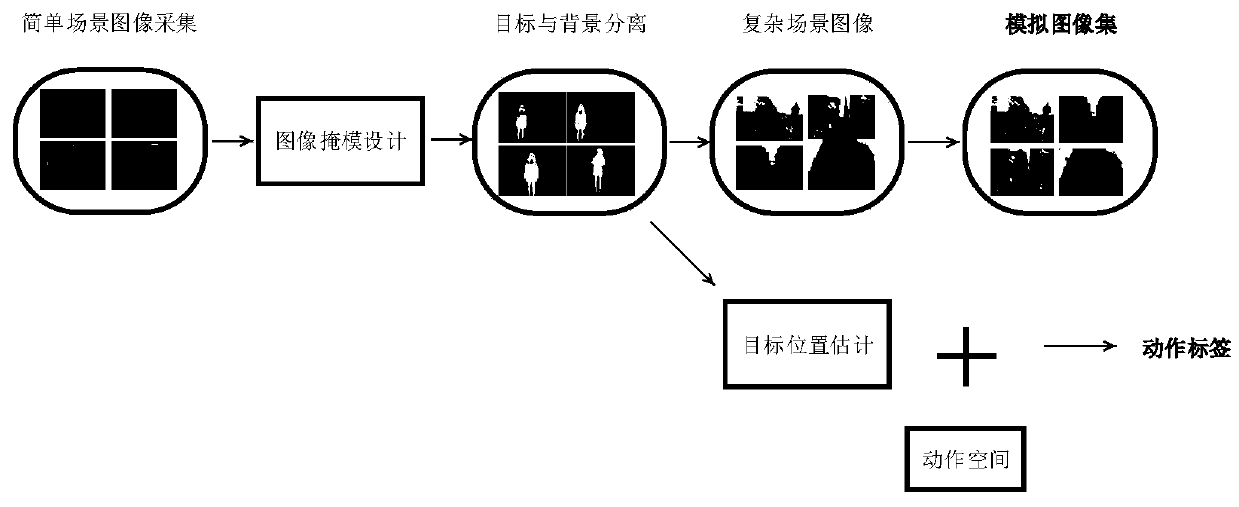

[0047] Step 1: Dataset automatic construction process

[0048] In the present invention, the direction-control model of the following robot is trained with supervision: its input is the image from the following robot's camera view, and its output is the action the robot should take at the current moment. Building the dataset therefore has two parts: acquiring the input field-of-view images and labeling the output actions.
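The supervised input/output pairing described above can be sketched as a minimal Python data structure. This is an illustration under assumptions only: the three-way `Action` set (`TURN_LEFT`, `GO_STRAIGHT`, `TURN_RIGHT`), the `Sample` record, and the `build_dataset` helper are hypothetical names, and the source does not say how actions are discretized or how images are stored.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List

# Hypothetical discrete action set: the source only says the output is
# "the action the robot should take at the current moment".
class Action(IntEnum):
    TURN_LEFT = 0
    GO_STRAIGHT = 1
    TURN_RIGHT = 2

# Camera resolution stated in the embodiment (640x480 monocular color camera).
IMAGE_WIDTH, IMAGE_HEIGHT = 640, 480

@dataclass(frozen=True)
class Sample:
    """One supervised training pair: field-of-view image in, action label out."""
    image_path: str   # hypothetical: where the captured view image is stored
    action: Action    # label produced by the labeling step

def build_dataset(image_paths: List[str], labels: List[Action]) -> List[Sample]:
    """Pair each acquired image with exactly one action label."""
    if len(image_paths) != len(labels):
        raise ValueError("every image needs exactly one action label")
    return [Sample(path, action) for path, action in zip(image_paths, labels)]
```

Keeping acquisition (the image paths) and labeling (the actions) as two separate lists mirrors the two-part construction process; the helper only checks that they line up one-to-one.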

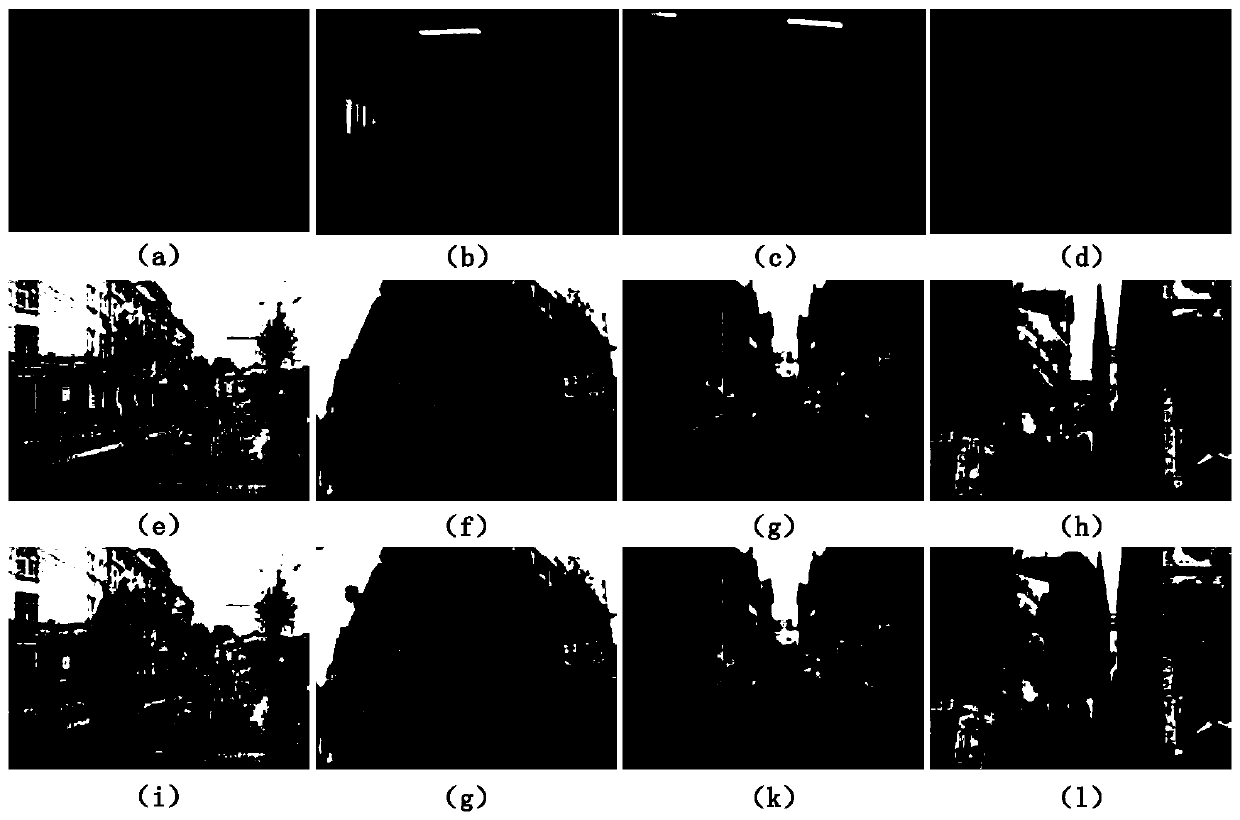

[0049] Prepare a simple scene in which the followed target is relatively easy to distinguish from the background. In this simple scene, capture, from the following robot's field of view, multiple images of the target person at different positions within that view. Download a certain numb...
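Because the simple scene makes the target easy to separate from the background, one plausible way to locate the target in each captured image, and to derive an action label from its position, is sketched below. Everything here is an assumption for illustration: the per-pixel background-color difference test, the `threshold` value, the split of the image width into thirds, the `"search"` label, and the helper names `target_center_x` and `label_action`. The source text is truncated before it describes the actual labeling rule.

```python
from typing import Optional, Sequence, Tuple

Pixel = Tuple[int, int, int]          # (R, G, B)
Image = Sequence[Sequence[Pixel]]     # rows of pixels

def target_center_x(image: Image, background: Pixel, threshold: int = 60) -> Optional[float]:
    """Mean column index of pixels whose color differs clearly from the background.

    Assumes a uniform, known background color (the "simple scene");
    returns None if no pixel exceeds the threshold.
    """
    total, count = 0, 0
    for row in image:
        for x, (r, g, b) in enumerate(row):
            diff = abs(r - background[0]) + abs(g - background[1]) + abs(b - background[2])
            if diff > threshold:
                total += x
                count += 1
    return total / count if count else None

def label_action(center_x: Optional[float], width: int) -> str:
    """Hypothetical rule: turn toward the target until it sits in the middle third."""
    if center_x is None:
        return "search"          # hypothetical label for "target not visible"
    if center_x < width / 3:
        return "turn_left"
    if center_x > 2 * width / 3:
        return "turn_right"
    return "go_straight"
```

For a 640-pixel-wide frame, a target centered at column 100 would be labeled `turn_left` under these assumed thresholds, since 100 is left of 640 / 3.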


© 2025 PatSnap. All rights reserved.