Video behavior recognition method based on a temporal causal convolutional network

A video behavior recognition method based on a temporal causal convolutional network, applied in the field of video behavior recognition. It addresses the problems that existing methods cannot be applied to real-time video streams and incur high computational cost, and it achieves reduced computational cost, high computational efficiency, and reduced model capacity.
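Applicability to real-time streams follows from causality: if each output frame depends only on the current and earlier frames, the model can produce a result as each frame arrives while keeping only a short window of recent frames, rather than re-processing a whole clip. The NumPy sketch below illustrates this property under simplifying assumptions: a single temporal kernel shared across channels, per-frame feature vectors instead of full feature maps, and the hypothetical names `causal_conv_offline` and `StreamingCausalConv`. It is not the patented network, only a check that frame-by-frame processing reproduces the offline causal result.

```python
import numpy as np
from collections import deque


def causal_conv_offline(x, w):
    """Causal temporal convolution over a whole clip (illustrative only).

    x: (T, C) one feature vector per frame.
    w: (K,) hypothetical temporal taps shared across channels; w[-1] weights the current frame.
    Output frame t uses input frames t-K+1 .. t, with zeros before the first frame.
    """
    k = len(w)
    padded = np.concatenate([np.zeros((k - 1, x.shape[1])), x], axis=0)  # pad the past only
    return np.stack([np.tensordot(w, padded[t:t + k], axes=1) for t in range(x.shape[0])])


class StreamingCausalConv:
    """Same operation applied one frame at a time, keeping a window of the last K frames."""

    def __init__(self, w, channels):
        self.w = np.asarray(w, dtype=float)
        k = len(self.w)
        # Pre-filled with zeros to mirror the left zero padding of the offline version.
        self.buffer = deque([np.zeros(channels) for _ in range(k)], maxlen=k)

    def step(self, frame):
        self.buffer.append(np.asarray(frame, dtype=float))  # the oldest frame drops out
        window = np.stack(self.buffer)                       # (K, C), oldest first
        return np.tensordot(self.w, window, axes=1)          # fixed cost per frame


rng = np.random.default_rng(0)
clip = rng.standard_normal((10, 4))
w = np.array([0.2, 0.3, 0.5])
offline = causal_conv_offline(clip, w)
stream = StreamingCausalConv(w, channels=4)
online = np.stack([stream.step(f) for f in clip])
print(np.allclose(offline, online))  # True
```

Because no output ever waits for future frames, the streaming version does a constant amount of work per incoming frame, which is the property an online recognizer needs.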

Embodiment Construction

[0029] The present invention is further described below with reference to the accompanying drawings and embodiments.

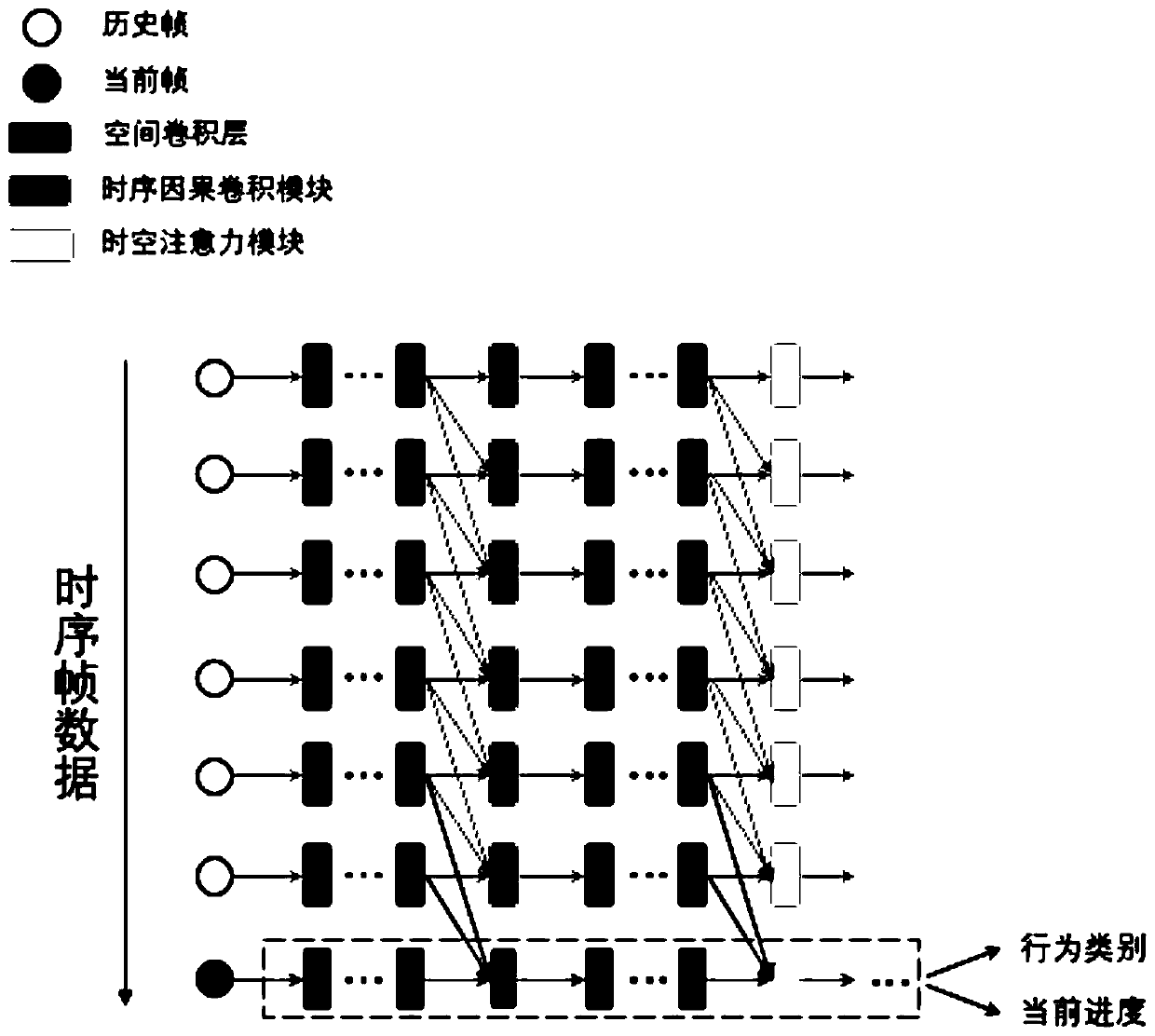

[0030] Figure 1 shows the temporal causal three-dimensional convolutional neural network processing system for online behavior recognition according to the present invention. The system comprises an input stream of video frames, a spatial convolution layer, basic network modules that combine temporal causal convolution with a causal self-attention mechanism, a behavior classifier, and a progress regressor.
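The excerpt names a causal self-attention mechanism but does not give its formulation. One common way to make self-attention causal is to mask every attention score that points to a later frame, so frame t attends only to frames 0..t. The sketch below assumes single-head scaled dot-product attention over per-frame feature vectors (spatial dimensions already pooled) with hypothetical projection matrices `wq`, `wk`, `wv`; whether the invention uses this exact form is an assumption.

```python
import numpy as np


def causal_self_attention(x, wq, wk, wv):
    """Single-head self-attention over time where frame t attends only to frames 0..t.

    x: (T, D) per-frame features; wq, wk, wv: (D, D) hypothetical projection matrices.
    Returns the attended features (T, D) and the attention weights (T, T).
    """
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(q.shape[1])               # (T, T) frame-to-frame similarity
    t = x.shape[0]
    future = np.triu(np.ones((t, t), dtype=bool), k=1)   # True above the diagonal = future frames
    scores = np.where(future, -np.inf, scores)           # forbid attending to the future
    scores -= scores.max(axis=1, keepdims=True)          # stable softmax; diagonal is always finite
    weights = np.exp(scores)                             # exp(-inf) = 0 for masked entries
    weights /= weights.sum(axis=1, keepdims=True)
    return weights @ v, weights


rng = np.random.default_rng(1)
T, D = 6, 8
x = rng.standard_normal((T, D))
wq, wk, wv = (rng.standard_normal((D, D)) for _ in range(3))
out, attn = causal_self_attention(x, wq, wk, wv)
print(out.shape)                          # (6, 8)
print(np.allclose(np.triu(attn, 1), 0))   # True: no weight on future frames
```

With the mask in place, features computed for earlier frames never change when a new frame arrives, so they can be cached during online inference.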

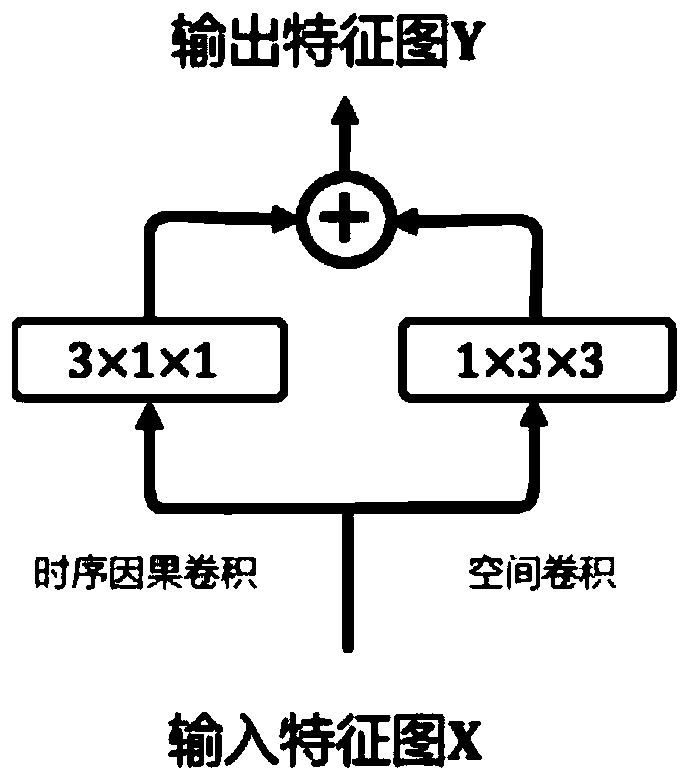

[0031] Figure 2 shows the module of the present invention that fuses temporal causal convolution with spatial convolution to model short-term spatiotemporal features. The input feature map X is passed through two branches, a temporal causal convolution with a 3×1×1 kernel and a spatial convolution with a 1×3×3 kernel, yielding two feature maps whose elements are added to obta...
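A minimal NumPy sketch of the two-branch module as far as the text describes it: a 3×1×1 (time × height × width) temporal causal convolution and a 1×3×3 spatial convolution applied to the same input X, with the outputs summed element-wise. Details not stated in the excerpt are assumptions: the (C, T, H, W) layout, no bias or activation, causality obtained by zero-padding two frames on the left of the time axis, and "same" zero padding in the spatial branch. The function names are hypothetical, and since the source is truncated after the addition, the sketch stops at the sum.

```python
import numpy as np


def temporal_causal_conv(x, w):
    """3x1x1 causal convolution along time.

    x: (C_in, T, H, W); w: (C_out, C_in, 3) with w[..., 2] weighting the current frame.
    Output frame t uses input frames t-2, t-1, t (zeros before the first frame).
    """
    _, t, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (2, 0), (0, 0), (0, 0)))  # pad the past only
    out = np.zeros((w.shape[0], t, h, wd))
    for k in range(3):
        # xp[:, k:k+t] aligns input frame t+k-2 with output frame t.
        out += np.einsum("oc,cthw->othw", w[:, :, k], xp[:, k:k + t])
    return out


def spatial_conv(x, w):
    """1x3x3 convolution applied to each frame independently, 'same' zero padding.

    x: (C_in, T, H, W); w: (C_out, C_in, 3, 3).
    """
    _, t, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], t, h, wd))
    for i in range(3):
        for j in range(3):
            out += np.einsum("oc,cthw->othw", w[:, :, i, j], xp[:, :, i:i + h, j:j + wd])
    return out


def fusion_block(x, w_t, w_s):
    """Two parallel branches on the same input, summed element-wise."""
    return temporal_causal_conv(x, w_t) + spatial_conv(x, w_s)


rng = np.random.default_rng(2)
x = rng.standard_normal((4, 5, 6, 6))   # 4 channels, 5 frames, 6x6 feature maps
w_t = rng.standard_normal((4, 4, 3))
w_s = rng.standard_normal((4, 4, 3, 3))
y = fusion_block(x, w_t, w_s)
print(y.shape)  # (4, 5, 6, 6)
```

Splitting a full 3×3×3 kernel into a 3×1×1 temporal branch and a 1×3×3 spatial branch needs 3 + 9 = 12 weights per input/output channel pair instead of 27, which is consistent with the reduced computation and model capacity the summary claims.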
