Parallel decoding using autoregressive machine learning models

An autoregressive model technology, applied in machine learning, computing models, ensemble learning, and related fields, that addresses the problem of quality deterioration and improves generation speed.

Embodiment Construction

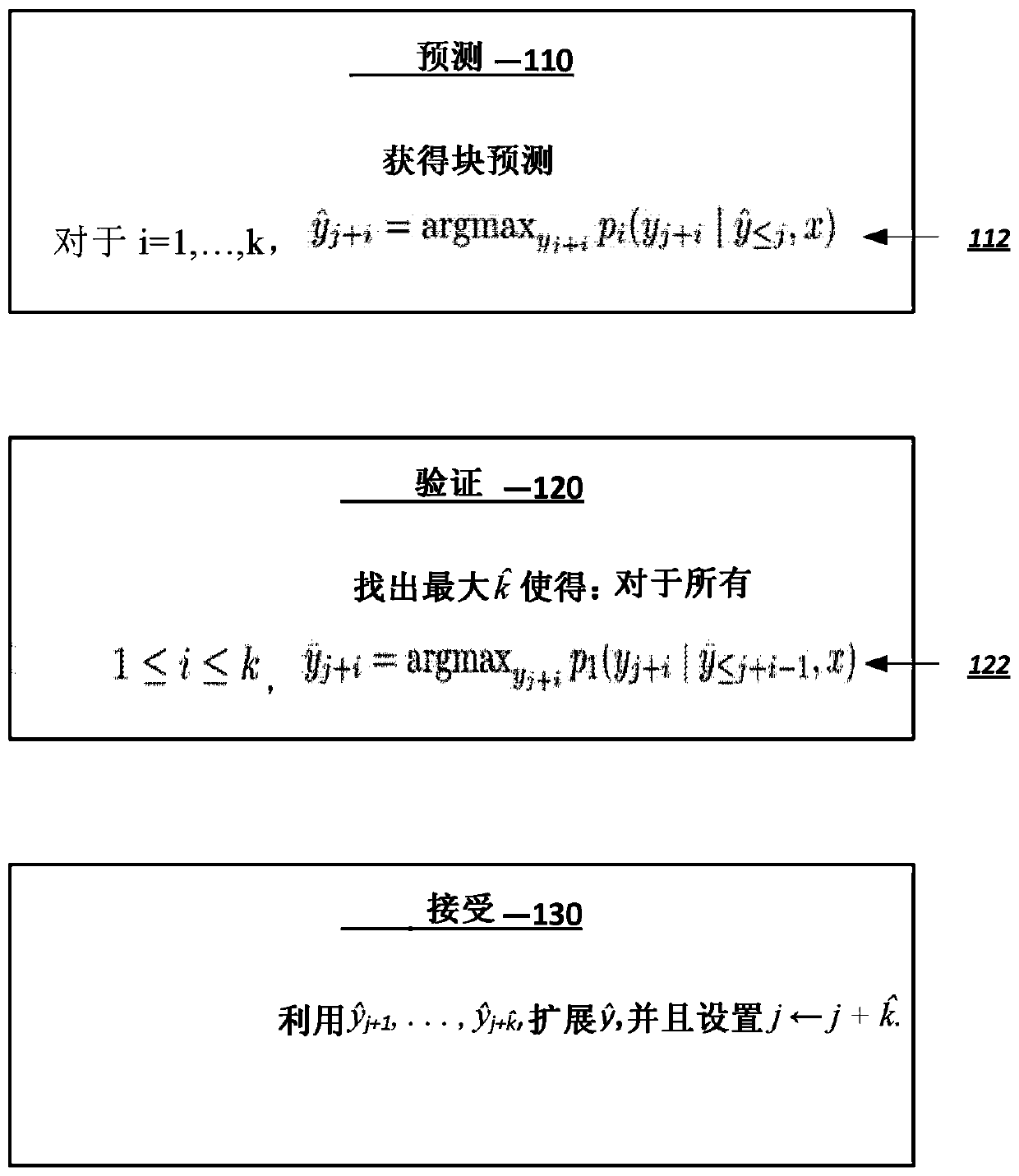

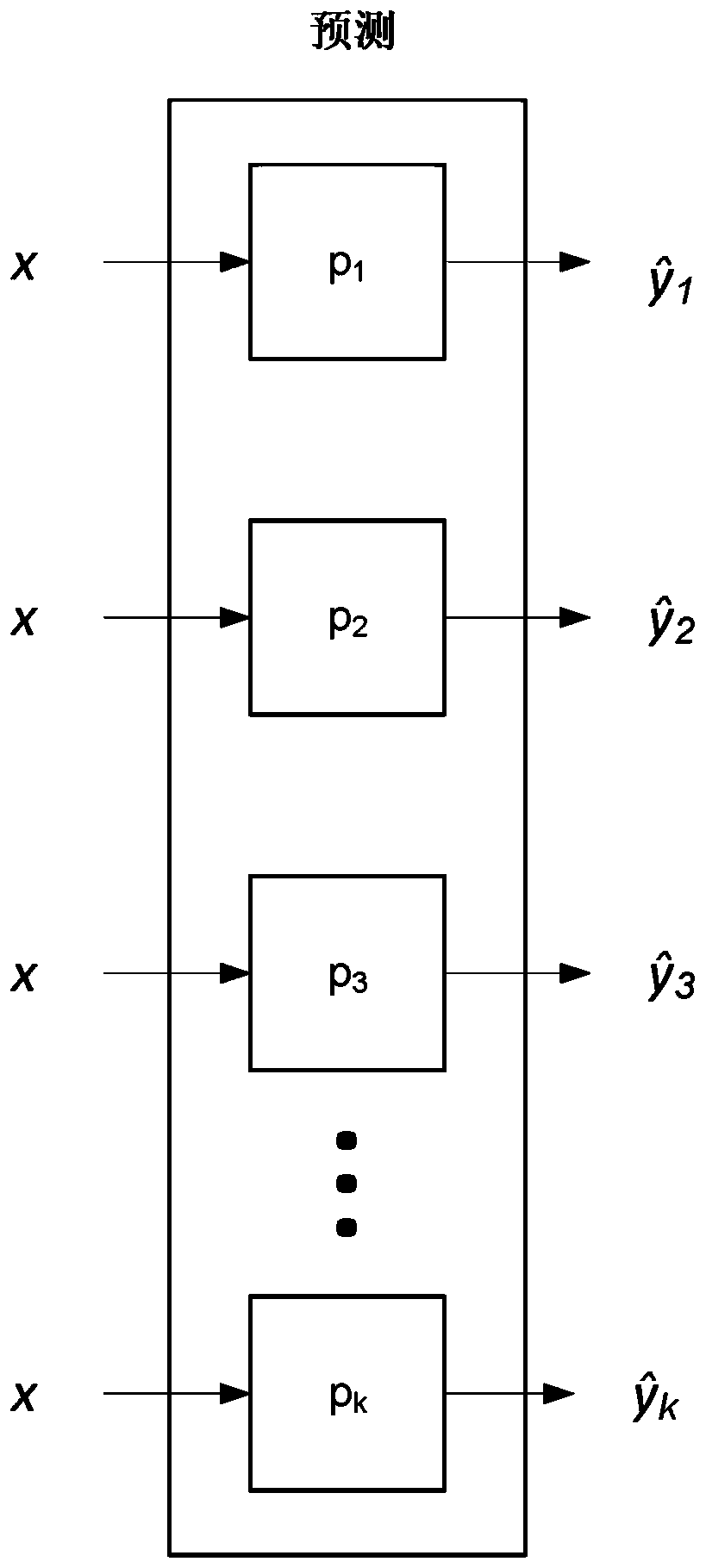

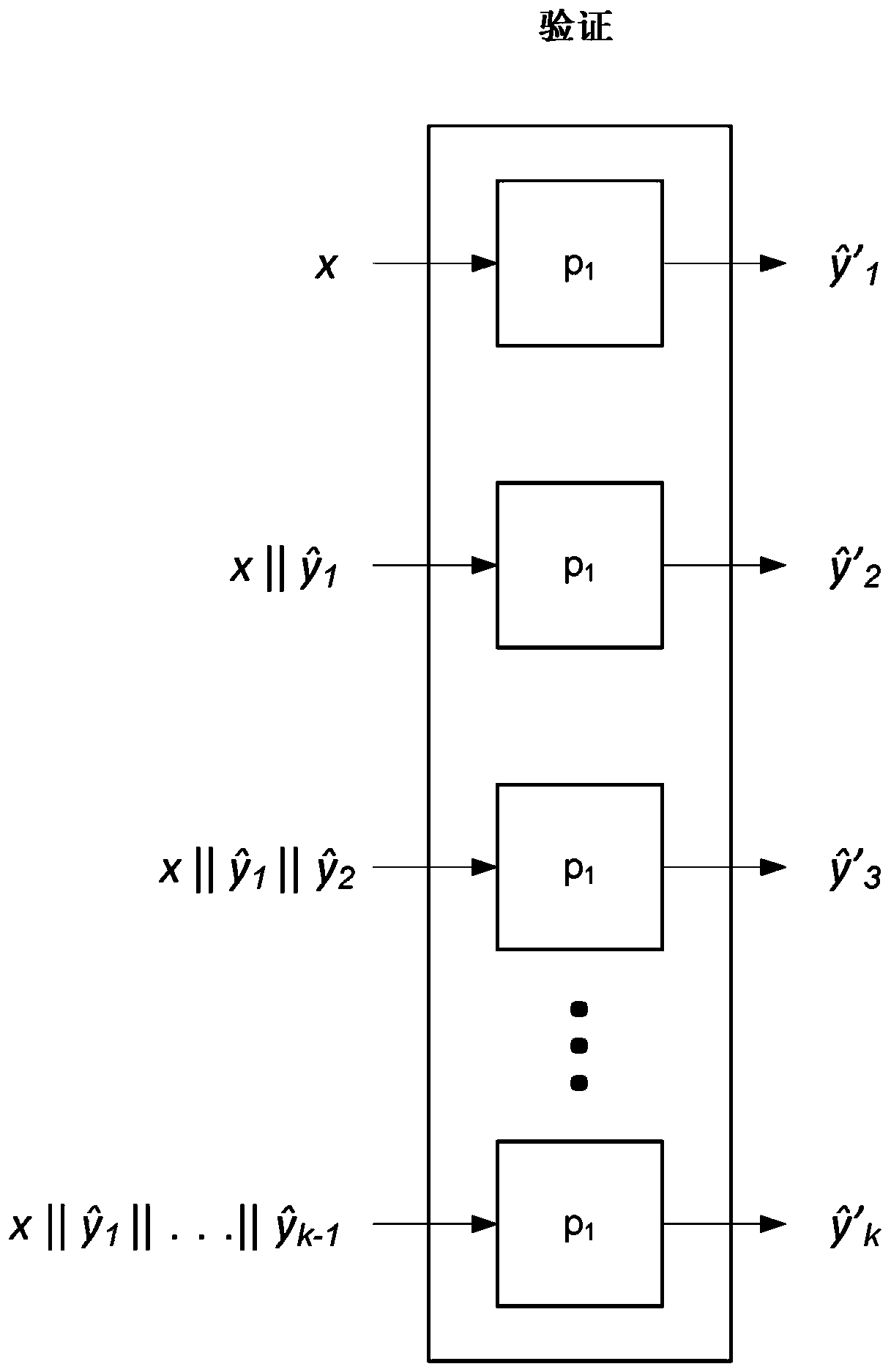

[0022] In sequence-to-sequence problems, an input sequence $(x_1, \ldots, x_n)$ is given, and the goal is to predict the corresponding output sequence $(y_1, \ldots, y_m)$. In machine translation, these sequences may be source and target sentences; in image super-resolution, they may be low-resolution and high-resolution images.

[0023] Suppose the system has learned an autoregressive scoring model $p(y \mid x)$ that factorizes from left to right:

[0024]
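
The equation for this paragraph is not reproduced in the text. As a sketch only, assuming the conventional chain-rule form of a left-to-right factorization and the index convention suggested by the decoding description in [0025] (with $j$ starting at 0), it would read as follows. The notation $y_{\le j}$ for the first $j$ output tokens is an assumption, not taken from the source.

$$
p(y \mid x) = \prod_{j=0}^{m-1} p\left(y_{j+1} \mid y_{\le j},\, x\right)
\quad\Longleftrightarrow\quad
\log p(y \mid x) = \sum_{j=0}^{m-1} \log p\left(y_{j+1} \mid y_{\le j},\, x\right)
$$

Each factor scores the next token given the input and everything generated so far, which is why standard decoding must proceed one token at a time.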

[0025] Given an input $x$, the system can use the model to predict the output by greedy decoding, as described below. Starting from $j = 0$, the system iteratively extends the prediction with the highest-scoring token and sets $j \leftarrow j + 1$ until a termination condition is met. For language generation problems, the system usually stops once a special end-of-sequence token has been generated. For image generation problems, the system simply decodes for a fixed num...
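
The greedy decoding loop described in [0025] can be illustrated with a minimal Python sketch. Everything named here is hypothetical and chosen for illustration: the `ScoreFn` interface, the `EOS` marker string, the `max_steps` cap, and the toy `toy_copy_model` that stands in for a learned scoring model. It is not the patent's implementation.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical interface: given the input x and the prefix decoded so far,
# return a score for every candidate next token.
ScoreFn = Callable[[Sequence[str], Sequence[str]], Dict[str, float]]

EOS = "<eos>"  # assumed end-of-sequence marker; the source does not name one


def greedy_decode(score_fn: ScoreFn, x: Sequence[str], max_steps: int = 50) -> List[str]:
    """Extend the prediction one highest-scoring token at a time."""
    y_hat: List[str] = []
    j = 0
    while j < max_steps:                  # fixed step budget (e.g. image generation)
        scores = score_fn(x, y_hat)       # scores for position j + 1
        next_token = max(scores, key=scores.get)
        y_hat.append(next_token)
        j += 1                            # j <- j + 1
        if next_token == EOS:             # termination for language generation
            break
    return y_hat


def toy_copy_model(x: Sequence[str], prefix: Sequence[str]) -> Dict[str, float]:
    """Toy stand-in for a learned model: prefers copying x, then emitting EOS."""
    target = x[len(prefix)] if len(prefix) < len(x) else EOS
    vocab = set(x) | {EOS}
    return {tok: (1.0 if tok == target else 0.0) for tok in vocab}


result = greedy_decode(toy_copy_model, ["a", "b", "c"])
```

Because each step calls the model once and waits for its result before choosing the next token, the number of sequential model calls equals the output length. This sequential cost is what parallel decoding aims to reduce.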
