Target detection method based on global and local information fusion

A target detection technology that fuses global and local information, applied in computer parts, character and pattern recognition, instruments, etc. It can solve problems such as ignoring scene-level information.

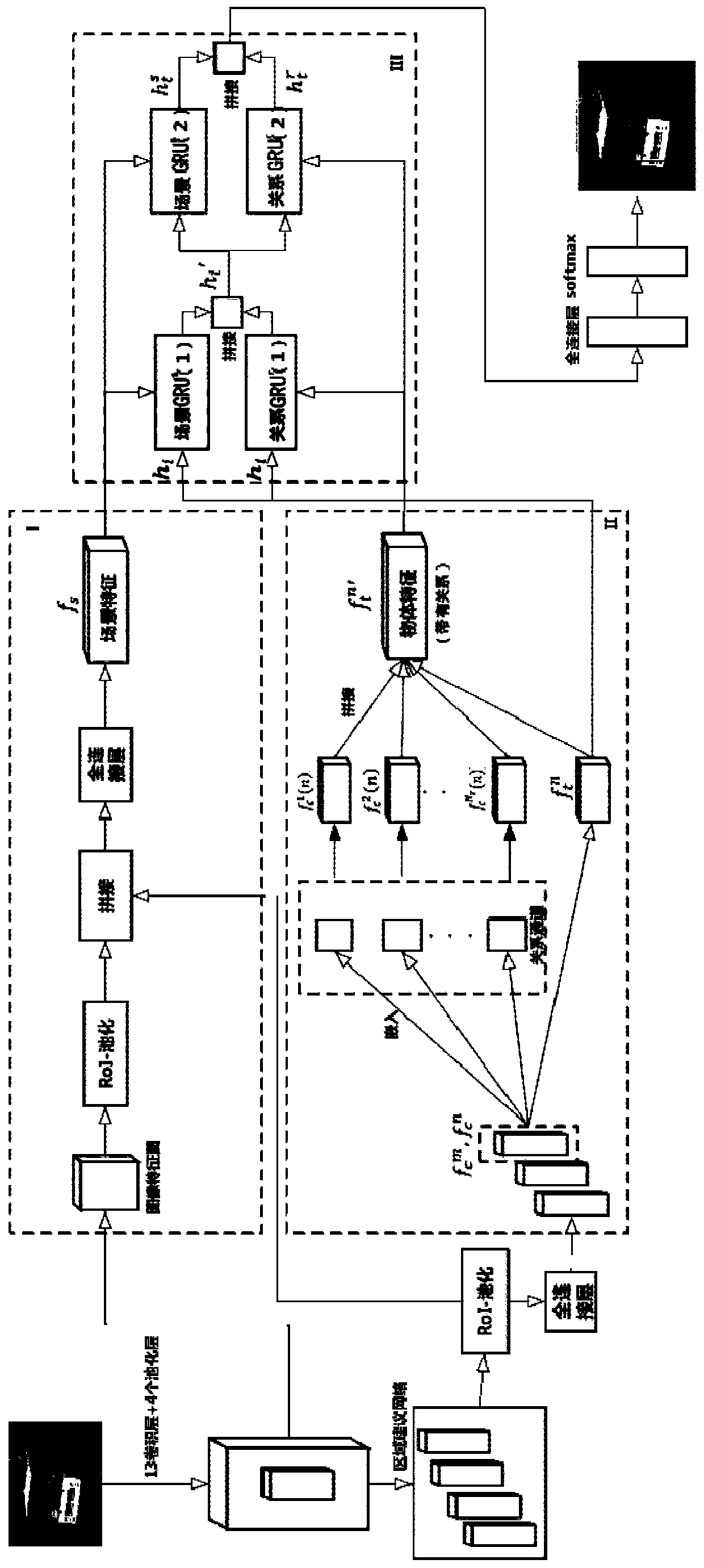

Experiment example

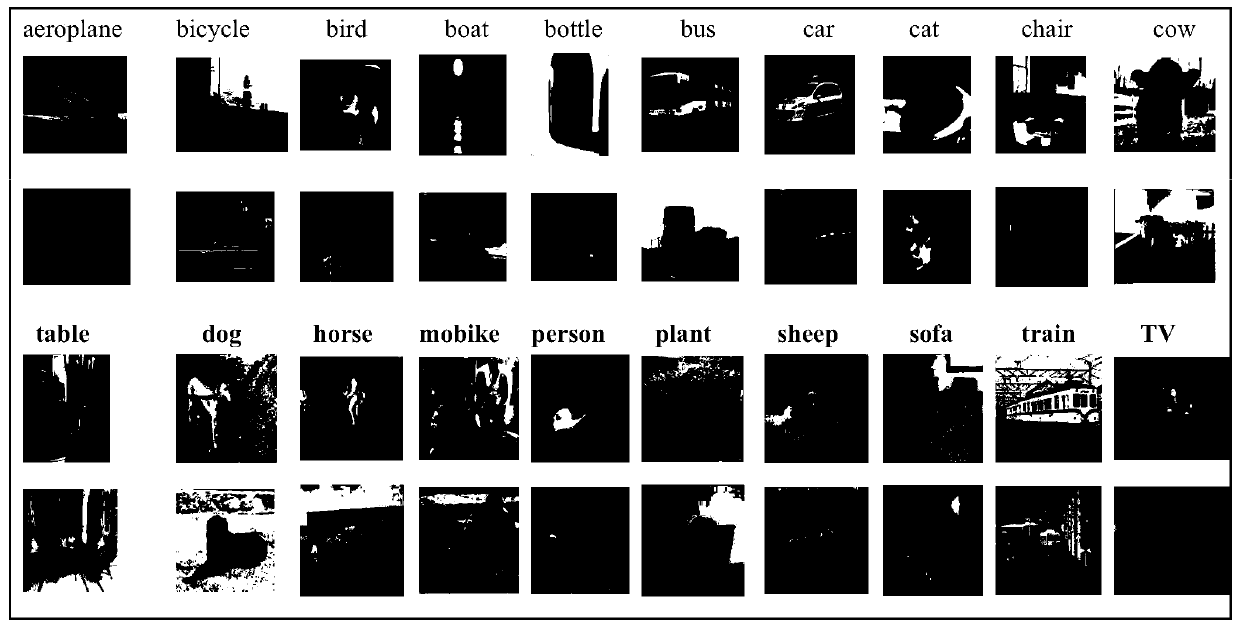

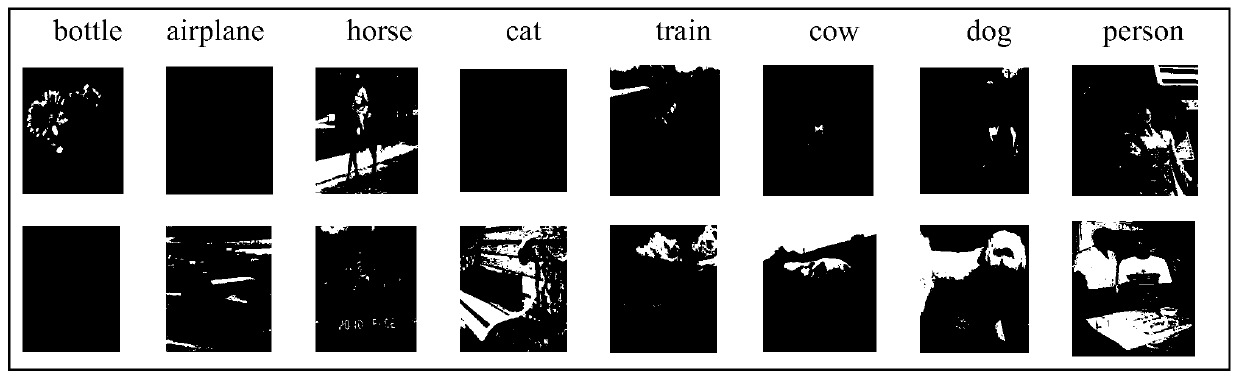

[0099] To evaluate the proposed method effectively and systematically, a large number of target detection experiments were carried out on two standard databases, PASCAL VOC and MS COCO 2014. PASCAL VOC comprises two datasets, VOC 2007 and VOC 2012. The PASCAL VOC 2007 dataset contains 9,963 annotated images divided into three parts (train / val / test), with a total of 24,640 annotated objects. The train / val / test splits of the VOC 2012 dataset contain all corresponding images from 2008 to 2011, and train+val has 11,540 images with a total of 27,450 objects. Compared with the PASCAL VOC dataset, MS COCO 2014 contains natural images and images of common everyday objects, and consists of two parts: train / minival. The image backgrounds in this database are relatively complex, the number of targets is large, and the targets are smaller, so the task on the MS COCO 2014 dataset is more difficult and challenging. Figure 1 and Figure 2 show partial images in the t...
