Sign language translation method based on BP neural network

A sign language translation method based on a BP neural network, applied in the field of sign language translation. It addresses problems such as the limited social circle of deaf-mute people and the high price of existing equipment, and its effects are overcoming communication barriers, low hardware cost, and high translation accuracy.

Embodiment Construction

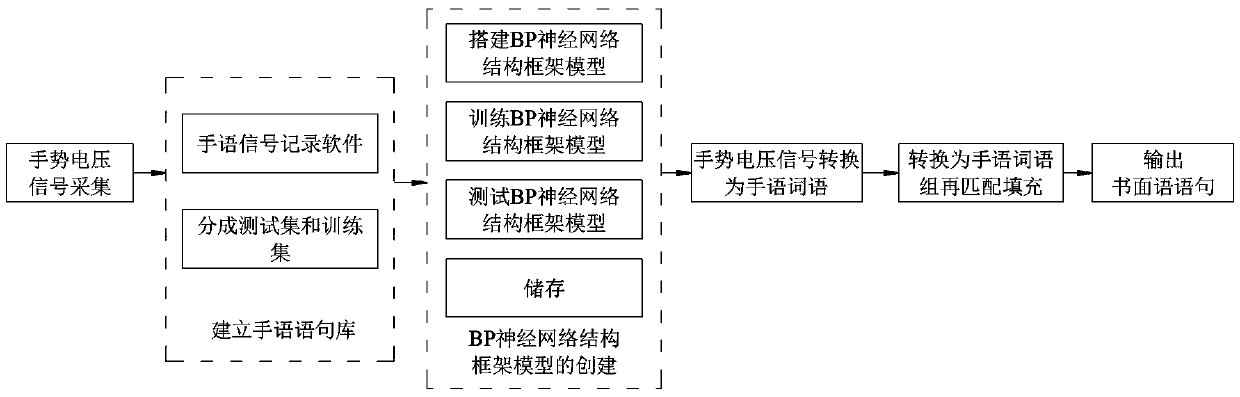

[0027] As shown in attached Figure 1, the present invention is a sign language translation method based on a BP neural network, comprising the following steps:

[0028] Step 1: The Raspberry Pi 3B single-board computer collects the gesture voltage signals through the flexible sensor and the acceleration sensor on the wearable data glove, filters and amplifies them, and transmits them to memory through its integrated Bluetooth module.
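The excerpt does not say whether the filtering and amplification in Step 1 happen in analog hardware or in software. If they were done in software, a minimal sketch might smooth each sensor channel with a trailing moving-average filter and then scale it by a fixed gain. The window length `WINDOW`, the gain `GAIN`, and all function names here are hypothetical; the source specifies neither the filter type nor its parameters.

```python
from typing import List, Sequence

# Hypothetical parameters: the source does not state the filter type,
# window length, or amplification gain applied to the glove signals.
WINDOW = 5
GAIN = 2.0

def moving_average(samples: Sequence[float], window: int = WINDOW) -> List[float]:
    """Smooth one sensor channel with a trailing moving-average filter."""
    if window < 1:
        raise ValueError("window must be >= 1")
    out: List[float] = []
    running = 0.0
    for i, x in enumerate(samples):
        running += x
        if i >= window:
            running -= samples[i - window]  # drop the sample leaving the window
        out.append(running / min(i + 1, window))  # shorter window at the start
    return out

def condition_channel(samples: Sequence[float], window: int = WINDOW,
                      gain: float = GAIN) -> List[float]:
    """Filter, then amplify (scale) one channel of raw voltages."""
    return [gain * v for v in moving_average(samples, window)]

def condition_frame(channels: Sequence[Sequence[float]]) -> List[List[float]]:
    """Condition every channel, e.g. each flex sensor and accelerometer axis."""
    return [condition_channel(ch) for ch in channels]
```

For example, `condition_channel([1.0, 1.0, 1.0, 4.0], window=2, gain=2.0)` returns `[2.0, 2.0, 2.0, 5.0]`: the step to 4.0 is halved by the two-sample average before the gain is applied.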

[0029] Step 2: Use the signal screening program to compile the sign language words and commonly used sign language sentences corresponding to each group of signals into the sign language library, producing a sign language sentence library, and divide the repeatedly collected gesture voltage signals and their corresponding sign language words into a training set and a test set at a ratio of 7:3.
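Step 2 can be sketched as grouping repeated recordings by sign word and then splitting them 7:3. This sketch splits each word's recordings separately (a stratified split) so that every word appears in both sets; that choice, the fixed shuffle seed, and the names `build_sign_library` and `split_per_word` are assumptions, not details from the source.

```python
import random
from typing import Dict, List, Sequence, Tuple

Sample = Tuple[List[float], str]  # (gesture voltage feature vector, sign word)

def build_sign_library(samples: Sequence[Sample]) -> Dict[str, List[List[float]]]:
    """Group repeated recordings under the sign word they correspond to."""
    library: Dict[str, List[List[float]]] = {}
    for features, word in samples:
        library.setdefault(word, []).append(features)
    return library

def split_per_word(library: Dict[str, List[List[float]]], train_ratio: float = 0.7,
                   seed: int = 0) -> Tuple[List[Sample], List[Sample]]:
    """Split each word's recordings train_ratio : (1 - train_ratio), 7:3 by default."""
    rng = random.Random(seed)
    train: List[Sample] = []
    test: List[Sample] = []
    for word in sorted(library):  # sorted so the split is reproducible
        recordings = list(library[word])
        rng.shuffle(recordings)
        cut = round(len(recordings) * train_ratio)
        train += [(r, word) for r in recordings[:cut]]
        test += [(r, word) for r in recordings[cut:]]
    return train, test
```

With 10 recordings per word, each word contributes 7 recordings to the training set and 3 to the test set.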

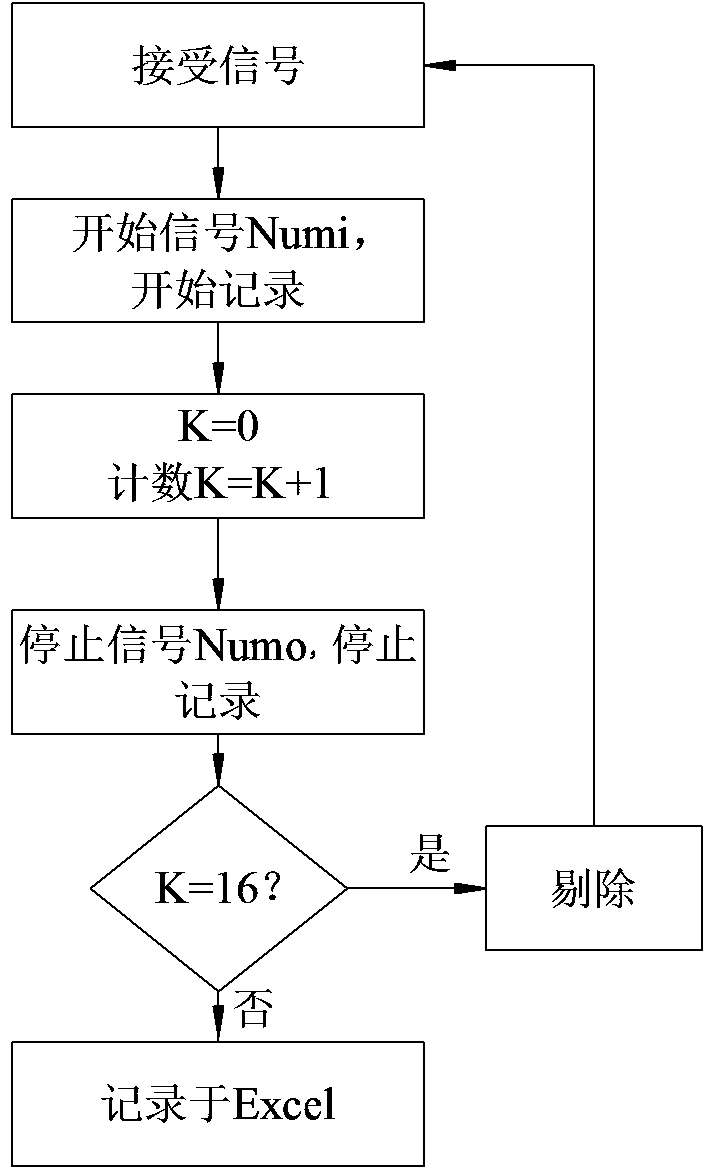

[0030] Step 2.1: Write sign language library recording software in C#, which can record each received gesture vo...
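The excerpt breaks off before it describes the network itself. As a minimal sketch, assuming a single hidden layer, sigmoid activations, squared-error loss, and per-sample gradient descent (none of which the excerpt specifies), a BP (error backpropagation) network could be trained on the training set from Step 2 and scored on the test set as follows. `BPNetwork`, `train_network`, and `accuracy` are hypothetical names.

```python
import math
import random
from typing import List, Sequence, Tuple

def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

class BPNetwork:
    """One-hidden-layer feedforward network trained by error backpropagation.

    Layer sizes, learning rate and sigmoid activations are illustrative
    assumptions; the source excerpt does not specify the architecture.
    """

    def __init__(self, n_in: int, n_hidden: int, n_out: int, lr: float = 0.5, seed: int = 0):
        rng = random.Random(seed)
        self.lr = lr
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_out)]
        self.b2 = [0.0] * n_out

    def forward(self, x: Sequence[float]) -> Tuple[List[float], List[float]]:
        """Return hidden-layer and output-layer activations."""
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(self.w1, self.b1)]
        y = [sigmoid(sum(w * hj for w, hj in zip(row, h)) + b) for row, b in zip(self.w2, self.b2)]
        return h, y

    def train_step(self, x: Sequence[float], target: Sequence[float]) -> float:
        """One gradient-descent update on a single sample; returns its squared error."""
        h, y = self.forward(x)
        # Output deltas for E = 0.5 * sum((y - t)^2) with sigmoid outputs.
        d_out = [(yk - tk) * yk * (1.0 - yk) for yk, tk in zip(y, target)]
        # Hidden deltas: propagate output deltas back through w2 (before it is updated).
        d_hid = [hj * (1.0 - hj) * sum(d_out[k] * self.w2[k][j] for k in range(len(d_out)))
                 for j, hj in enumerate(h)]
        for k, dk in enumerate(d_out):
            for j, hj in enumerate(h):
                self.w2[k][j] -= self.lr * dk * hj
            self.b2[k] -= self.lr * dk
        for j, dj in enumerate(d_hid):
            for i, xi in enumerate(x):
                self.w1[j][i] -= self.lr * dj * xi
            self.b1[j] -= self.lr * dj
        return 0.5 * sum((yk - tk) ** 2 for yk, tk in zip(y, target))

    def predict(self, x: Sequence[float]) -> int:
        """Index of the output unit with the largest activation."""
        _, y = self.forward(x)
        return max(range(len(y)), key=lambda k: y[k])

Labeled = List[Tuple[List[float], int]]  # (feature vector, class index)

def train_network(net: BPNetwork, data: Labeled, n_classes: int,
                  epochs: int = 500, seed: int = 0) -> None:
    """Per-sample (online) training with one-hot targets, reshuffled every epoch."""
    rng = random.Random(seed)
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, label in data:
            target = [1.0 if k == label else 0.0 for k in range(n_classes)]
            net.train_step(x, target)

def accuracy(net: BPNetwork, data: Labeled) -> float:
    """Fraction of samples whose predicted class matches the label."""
    return sum(net.predict(x) == label for x, label in data) / len(data)
```

In this sketch, sign words would first be mapped to integer class indices (for example, by position in a sorted word list), and raw voltages are assumed to be scaled to roughly [0, 1] beforehand, since sigmoid units saturate on large inputs.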
