Resource management and job scheduling method and system combining pull mode and push mode

A resource management and job scheduling technology, applied in resource allocation, electrical digital data processing, multi-programming devices, and related fields. It addresses problems such as reduced system operating efficiency and scalability, performance bottlenecks in the central scheduler, and increasing heterogeneity of system resources. Its effects are improved resource utilization, a reduced bottleneck effect, and better scalability.

Embodiment Construction

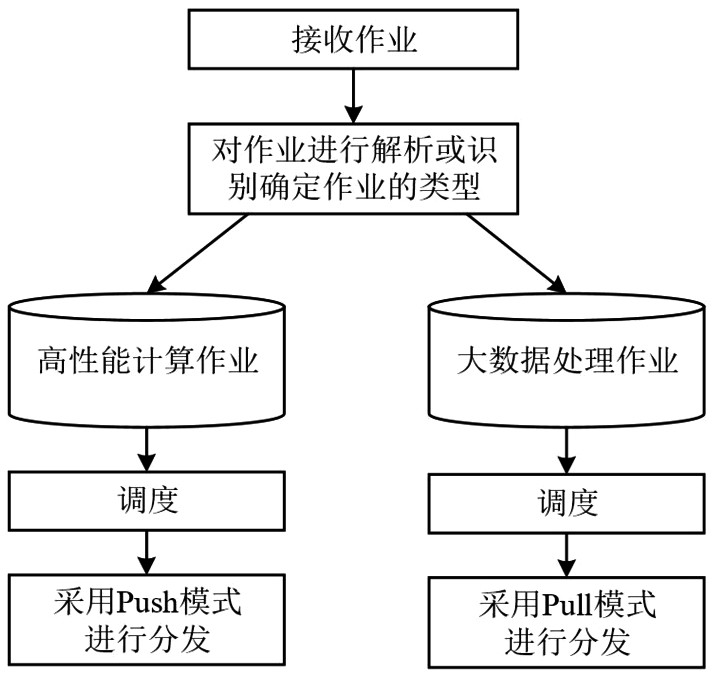

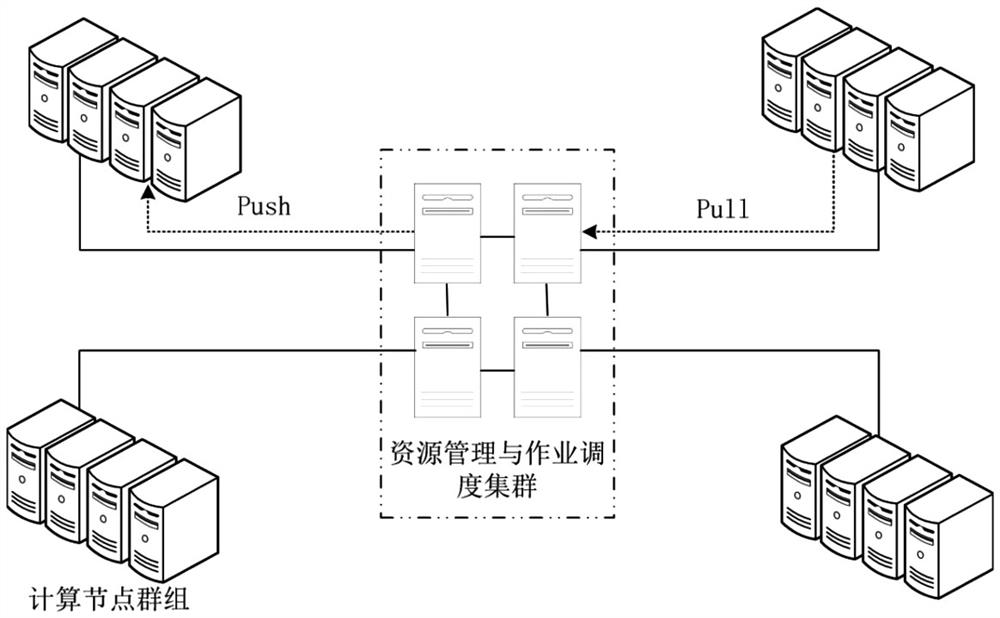

[0043] As shown in Figure 1, the implementation steps of the resource management and job scheduling method combining the Pull mode and the Push mode in this embodiment include:

[0044] 1) Receive jobs;

[0045] 2) Parse or identify the job and determine its type: high-performance computing job or big data processing job. Note that jobs of different types can be stored together or separately as needed, in whatever storage structure is required, such as a queue or a linked list;

[0046] 3) Schedule the different types of jobs separately. For high-performance computing jobs selected by scheduling, distribute in Push mode: assign computing nodes to the job and push it to the assigned computing nodes for execution. For big data processing jobs selected by scheduling, distribute in Pull mode: wait for the job request...
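Steps 1) to 3) can be illustrated with a minimal Python sketch. It is not the patented implementation: the names `Job`, `JobType`, `Scheduler`, `push_hpc`, and `handle_pull_request` are hypothetical, the first-in-first-out ordering and one-job-per-node policy are assumptions, and because the description of the Pull mode is truncated above, the sketch simply assumes that an idle computing node sends a request and receives one big data processing job in reply.

```python
from collections import deque
from dataclasses import dataclass, field
from enum import Enum


class JobType(Enum):
    HPC = "hpc"            # high-performance computing job
    BIG_DATA = "big_data"  # big data processing job


@dataclass
class Job:
    job_id: str
    job_type: JobType
    nodes_needed: int = 1  # only used for HPC jobs in this sketch


@dataclass
class Scheduler:
    free_nodes: set
    # Assumption: the two job types are stored in separate FIFO queues.
    hpc_queue: deque = field(default_factory=deque)
    big_data_queue: deque = field(default_factory=deque)
    running: dict = field(default_factory=dict)  # job_id -> list of node names

    def receive(self, job):
        """Steps 1 and 2: accept a job and store it according to its type."""
        if job.job_type is JobType.HPC:
            self.hpc_queue.append(job)
        else:
            self.big_data_queue.append(job)

    def push_hpc(self):
        """Step 3, Push mode: assign nodes to queued HPC jobs and push them out."""
        dispatched = []
        while self.hpc_queue and self.hpc_queue[0].nodes_needed <= len(self.free_nodes):
            job = self.hpc_queue.popleft()
            nodes = sorted(self.free_nodes)[: job.nodes_needed]
            self.free_nodes.difference_update(nodes)
            self.running[job.job_id] = nodes
            dispatched.append(job.job_id)
        return dispatched

    def handle_pull_request(self, node):
        """Step 3, Pull mode (assumed): an idle node asks for a big data job."""
        if node not in self.free_nodes or not self.big_data_queue:
            return None
        job = self.big_data_queue.popleft()
        self.free_nodes.discard(node)
        self.running[job.job_id] = [node]
        return job
```

In this sketch the scheduler decides placement only for high-performance computing jobs; for big data processing jobs it just answers requests, so the placement work is spread across the requesting nodes.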
