Medical image automatic segmentation method based on multi-path attention fusion

An automatic segmentation method for medical images, applicable to image analysis, neural learning methods, image enhancement, and related fields. It addresses the difficulty of preserving spatial information: the encoder loses spatial information, which degrades the segmentation result. The stated effects are improved feature quality, a larger number of training images, and good accuracy.

Examples

Embodiment 1

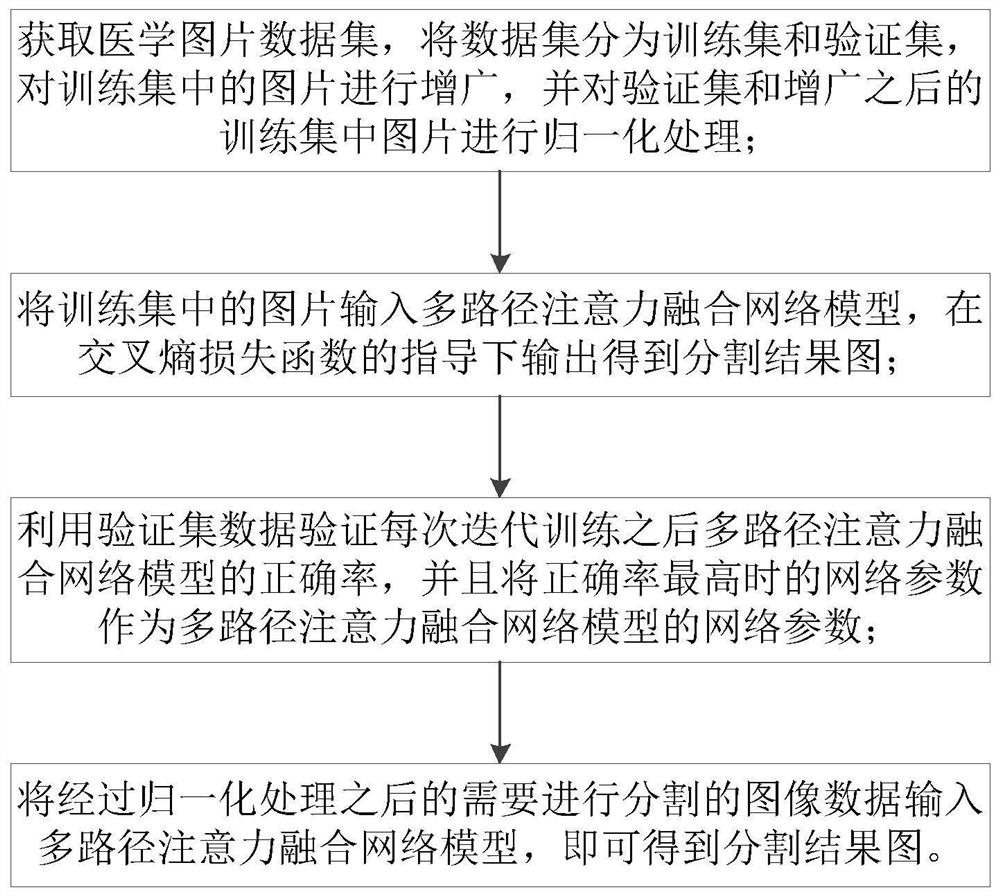

[0059] The pictures in the medical image data set are divided into a training set and a validation set. The training set is used to train the model, and the validation set is used to tune the model against its evaluation metrics. Because sufficient training samples are hard to obtain for medical image segmentation, the present invention augments the pictures in the training set. The augmentation operations include:

[0060] Rotate the pictures in the training set by 10°, 20°, -10° and -20°, and save the rotated pictures;

[0061] Flip the pictures in the training set vertically and horizontally, and save the flipped pictures;

[0062] Perform elastic transformation on the pictures in the training set, and save the pictures after the elastic transformation;

[0063] Apply zoom in the (20%, 80%) range to the pictures in the training set, and save the zoomed pictures;

[0064] The pictures in the training set and the pictures in the training set processed...
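
The rotation and flip steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the patent's implementation: images are lists of pixel rows, rotation uses nearest-neighbor sampling about the image center, the sign convention of the angle is arbitrary, and the same transform is applied to the label map so that picture and label stay aligned. The function names (`flip_lr`, `flip_ud`, `rotate`, `augment`) are hypothetical, and the elastic transformation and zoom steps are omitted.

```python
import math

# Rotation angles in degrees, as listed in paragraph [0060].
ROTATION_ANGLES = (10, 20, -10, -20)


def flip_lr(img):
    """Left-right flip: reverse the pixels inside every row."""
    return [row[::-1] for row in img]


def flip_ud(img):
    """Upside-down flip: reverse the order of the rows."""
    return [row[:] for row in img[::-1]]


def rotate(img, degrees, fill=0):
    """Rotate about the image center using nearest-neighbor sampling.

    The output keeps the input size; pixels that map outside the
    source image are set to `fill` (an assumption, not from the source).
    """
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    theta = math.radians(degrees)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Inverse mapping: locate the source pixel for each output pixel.
            dx, dy = x - cx, y - cy
            sx = int(round(cos_t * dx + sin_t * dy + cx))
            sy = int(round(-sin_t * dx + cos_t * dy + cy))
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = img[sy][sx]
    return out


def augment(image, mask):
    """Return the original (image, mask) pair plus rotated and flipped copies."""
    pairs = [(image, mask)]
    for angle in ROTATION_ANGLES:
        pairs.append((rotate(image, angle), rotate(mask, angle)))
    pairs.append((flip_ud(image), flip_ud(mask)))
    pairs.append((flip_lr(image), flip_lr(mask)))
    return pairs
```

With these assumptions each training picture yields seven (picture, label) pairs: the original, four rotations, and two flips. Nearest-neighbor sampling is chosen here because it never blends label values, which matters for the segmentation label maps.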

Embodiment 2

[0083] The segmentation method of Embodiment 1 is used. This embodiment is implemented with the open-source deep learning libraries Keras and TensorFlow and trained on an NVIDIA GeForce RTX 2080 Ti GPU, using the Adam optimization algorithm with the learning rate set to 0.0001. The data sets are the ISIC 2018 skin cancer lesion segmentation data set and the LUNA lung CT data set.
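
To make the optimizer setting concrete, the following is a minimal pure-Python sketch of one Adam update with the stated learning rate of 0.0001. Only the learning rate comes from the text; the values of `beta1`, `beta2`, and `eps` are commonly used defaults and are assumptions here, and `adam_step` is a hypothetical helper rather than part of Keras or TensorFlow.

```python
import math


def adam_step(params, grads, m, v, t, lr=1e-4, beta1=0.9, beta2=0.999, eps=1e-7):
    """Apply one Adam update to a flat list of parameters.

    params, grads, m, v are lists of floats of equal length;
    t is the 1-based step counter used for bias correction.
    Returns the updated (params, m, v).
    """
    new_params, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        # Exponential moving averages of the gradient and its square.
        m_i = beta1 * m_i + (1 - beta1) * g
        v_i = beta2 * v_i + (1 - beta2) * g * g
        # Bias correction for the zero-initialized averages.
        m_hat = m_i / (1 - beta1 ** t)
        v_hat = v_i / (1 - beta2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_params, new_m, new_v
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so each parameter moves by roughly the learning rate. In Keras, the same setting would typically be expressed by passing `Adam(learning_rate=0.0001)` to `model.compile`.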

[0084] One data set of this embodiment is provided by the 2018 Skin Cancer Lesion Segmentation Challenge. It contains a total of 2954 skin cancer lesion pictures, each 700×900 in size with a corresponding segmentation label map. 1815 pictures are used as the training set, 59 pictures as the validation set, and the remaining 520 pictures as the test set. To facilitate network training, all pictures are resized to 256×256. The data in the test set is shown in Figure 4, where the first row is the original image data, the second row is the label of...

Embodiment 3

[0090] The segmentation method of Embodiment 1 is used. Unlike Embodiment 2, this embodiment uses the LUNA data set, which is provided by the 2017 Kaggle lung nodule competition. It contains a total of 730 pictures and 730 corresponding segmentation label maps. Each picture is 512×512 pixels. 70% of the pictures are used as the training set, 10% as the validation set, and the remaining 20% as the test set.

[0091] Because the amount of data is small, rotation, flipping, and elastic transformation are used to augment the training data set so that the network achieves good robustness and segmentation accuracy.
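
The 70% / 10% / 20% partition can be sketched as follows. This is an illustration under assumptions: the source does not say whether the split is random, so the seeded shuffle is an assumption, and `split_dataset` is a hypothetical helper. Integer arithmetic is used for the split sizes to avoid floating-point rounding at the boundaries.

```python
import random


def split_dataset(items, train_pct=70, val_pct=10, seed=0):
    """Shuffle items and split them into train, validation, and test lists.

    The test set receives whatever remains after the train and
    validation portions are taken.
    """
    items = list(items)
    random.Random(seed).shuffle(items)  # seeded so the split is repeatable
    n_train = len(items) * train_pct // 100
    n_val = len(items) * val_pct // 100
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test
```

For 730 pictures this yields 511 training, 73 validation, and 146 test pictures. Picture and label map should be split together, for example by splitting a list of shared file indices.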

[0092] Four evaluation metrics are used: F1-score, Accuracy, Sensitivity and Specificity. The larger each metric, the more accurate the segmentation. As can be seen from Table 2, the experimental results on the LUNA data set show that compared with U-Net, R2-Unet, BCD-Net and U-Net++, the method of the ...
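
The four metrics can be computed from pixel-wise confusion counts. The sketch below uses the standard definitions for binary masks and assumes the predicted and ground-truth masks are flattened to equal-length sequences of 0/1 values. The function names are hypothetical, and returning 0.0 for an empty denominator is a convention chosen here, not something the source specifies.

```python
def confusion_counts(pred, truth):
    """Count true/false positives and negatives over paired binary pixels."""
    tp = fp = fn = tn = 0
    for p, t in zip(pred, truth):
        if p and t:
            tp += 1
        elif p and not t:
            fp += 1
        elif not p and t:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def _ratio(num, den):
    """Safe division: return 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def segmentation_metrics(pred, truth):
    """Return F1-score, accuracy, sensitivity, and specificity as a dict."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    return {
        "f1": _ratio(2 * tp, 2 * tp + fp + fn),
        "accuracy": _ratio(tp + tn, tp + fp + fn + tn),
        "sensitivity": _ratio(tp, tp + fn),  # recall on lesion pixels
        "specificity": _ratio(tn, tn + fp),  # recall on background pixels
    }
```

Sensitivity and specificity are reported alongside accuracy because lesion pixels are usually a small fraction of the image, so accuracy alone can look high even when much of the lesion is missed.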
