Self-adaptive reinforced fusion real-time instance segmentation method based on camera and lidar

A lidar and camera technology applied in image analysis, image data processing, character and pattern recognition, etc. It addresses the inability to adaptively learn the complementary features of different modalities, the limited perception of the external environment by a single-modality sensor, and low target positioning accuracy. Its stated effects are avoided computation overhead, high positioning accuracy, and good adaptability.

Embodiment Construction

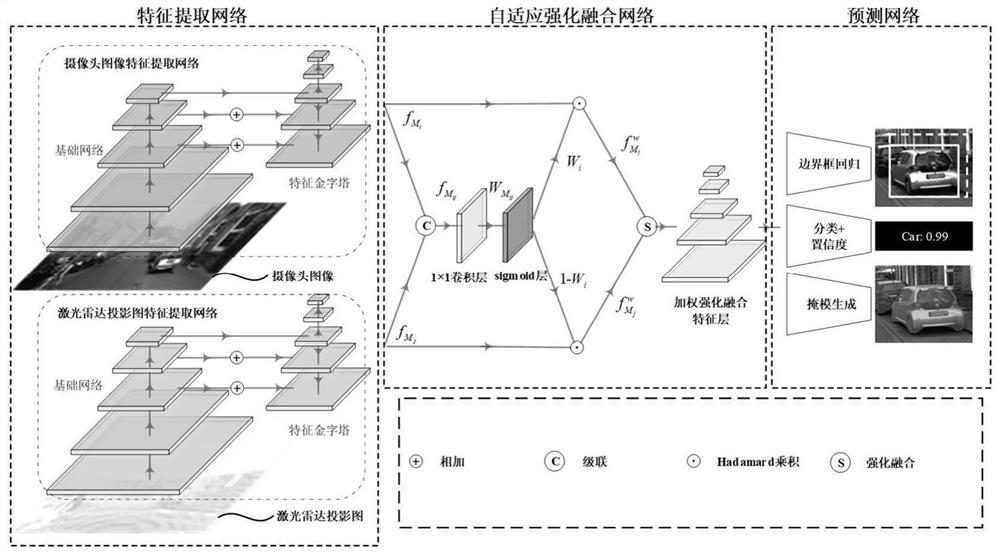

[0048] As shown in Figure 1, the self-adaptive reinforced fusion real-time instance segmentation method based on camera and lidar of the present invention comprises the following steps:

[0049] S10, feature extraction: use a convolutional neural network to extract multi-scale high-level semantic features from the spatiotemporally consistent camera image and lidar point cloud projection map;

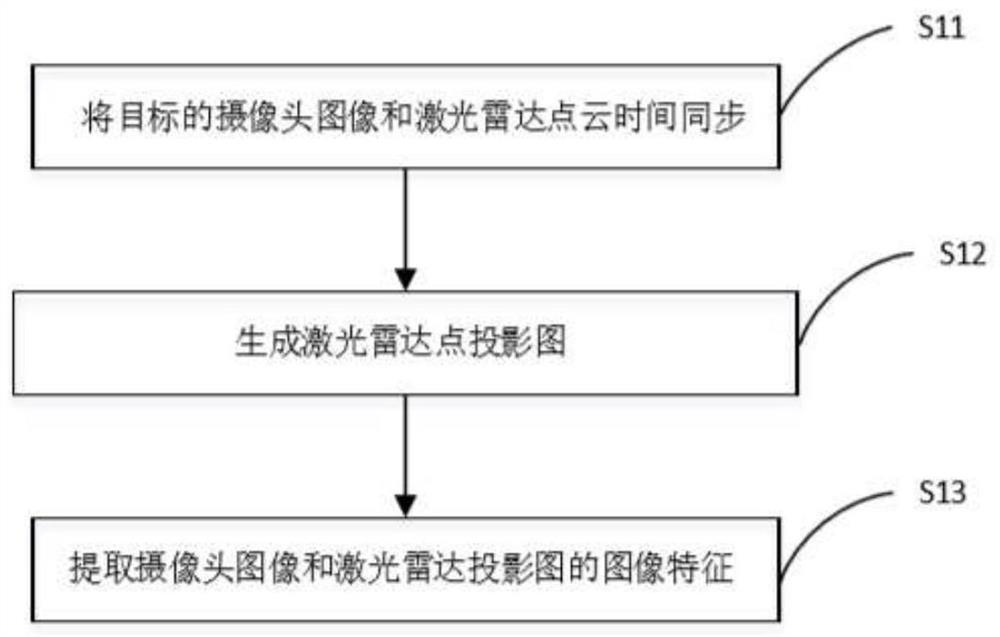

[0050] With reference to Figure 2, the feature extraction step includes:

[0051] S11, camera and lidar time synchronization:

[0052] A time synchronization algorithm finds the camera image frame closest in time to the current lidar frame, yielding a time-synchronized pair of lidar point cloud frame and camera image frame.
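The nearest-frame matching in S11 can be sketched as follows. This is a minimal illustration, not the patent's synchronization algorithm, which the text does not spell out. It assumes camera timestamps are sorted in ascending order and share a clock and unit with the lidar timestamp. The function name `nearest_camera_frame` and the `max_gap` rejection threshold are hypothetical.

```python
from bisect import bisect_left


def nearest_camera_frame(camera_stamps, lidar_stamp, max_gap=None):
    """Return the index of the camera timestamp closest to lidar_stamp.

    camera_stamps: ascending list of timestamps (assumed same clock and unit
    as lidar_stamp). max_gap is a hypothetical tolerance: if the best match
    is further away than this, no frame is returned.
    """
    if not camera_stamps:
        return None
    # Binary search for the insertion point, then compare the two neighbours.
    pos = bisect_left(camera_stamps, lidar_stamp)
    candidates = [i for i in (pos - 1, pos) if 0 <= i < len(camera_stamps)]
    # On an exact tie, min() keeps the earlier frame.
    best = min(candidates, key=lambda i: abs(camera_stamps[i] - lidar_stamp))
    if max_gap is not None and abs(camera_stamps[best] - lidar_stamp) > max_gap:
        return None
    return best
```

Calling `nearest_camera_frame(stamps, t)` once per incoming lidar frame gives the index of the camera frame to pair with it. Binary search keeps each lookup logarithmic in the number of buffered camera frames, which suits a real-time pipeline.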

[0053] S12, generating a lidar point cloud projection map:

[0054] According to the extrinsic parameter matrix $M_e$ from the lidar coordinate system to the camera coordinate system, and the intrinsic parameter matrix $M_i$ of the camera, a point (X Y Z) o...
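The source text is cut off at this point, so the patent's exact projection formula is not visible here. As a rough illustration only, the sketch below applies the standard pinhole camera model. It assumes the extrinsic matrix is a 3x4 `[R | t]` matrix and the intrinsic matrix is a 3x3 matrix. The function name `project_lidar_point`, the parameter names `m_e` and `m_i`, and the choice to drop points behind the camera are all assumptions, not taken from the patent.

```python
def project_lidar_point(point, m_e, m_i):
    """Project one lidar point (X, Y, Z) to pixel coordinates.

    m_e: assumed 3x4 extrinsic matrix [R | t], lidar frame -> camera frame.
    m_i: assumed 3x3 intrinsic matrix of the camera.
    Returns (u, v, depth), or None if the point is not in front of the camera.
    """
    x, y, z = point
    homogeneous = (x, y, z, 1.0)
    # Lidar frame -> camera frame.
    cam = [sum(row[k] * homogeneous[k] for k in range(4)) for row in m_e]
    if cam[2] <= 0:
        return None  # behind or on the image plane: cannot be projected
    # Camera frame -> homogeneous pixel coordinates.
    uvw = [sum(row[k] * cam[k] for k in range(3)) for row in m_i]
    # Perspective division; depth is kept so it can fill the projection map.
    return uvw[0] / uvw[2], uvw[1] / uvw[2], cam[2]
```

Under these assumptions, running the function over every point of a synchronized lidar frame and writing the depth (or another per-point attribute) at each in-bounds `(u, v)` would give a sparse projection map aligned with the camera image.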
