Visual navigation method for moving robot

A visual navigation method for mobile robots, classified under neural learning methods, instruments, computer components, etc. It addresses problems such as low precision, growing cumulative error in inertial navigation, and sensitivity to light sources.

Embodiment Construction

[0089] The present invention will be further described below in conjunction with the accompanying drawings and specific embodiments.

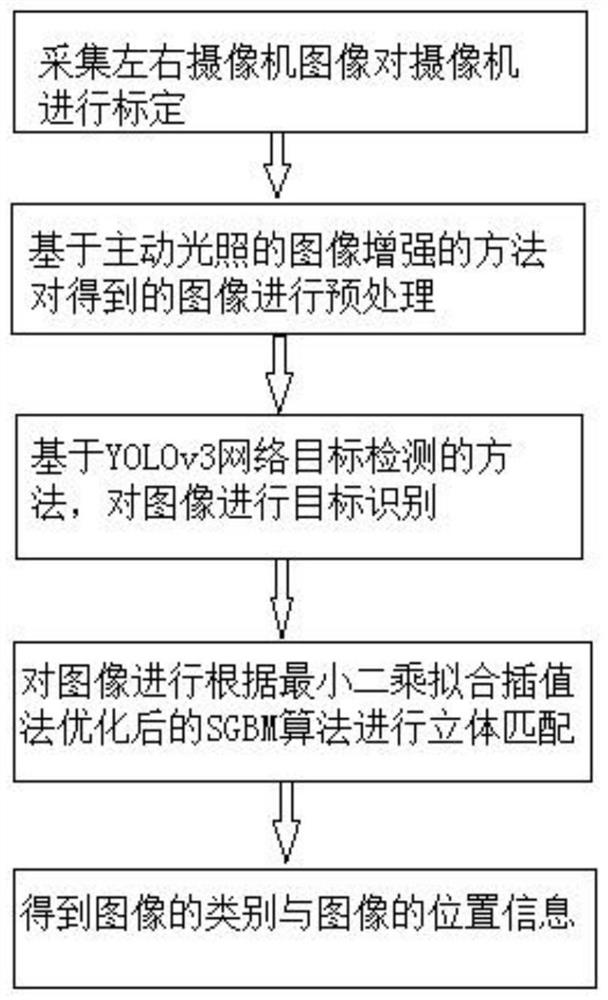

[0090] As shown in Figure 1, the method of the invention comprises the following steps:

[0091] 1. Calibrate the camera using Zhang’s planar calibration method to obtain the camera’s intrinsic parameter matrix, distortion coefficient matrix, essential matrix, fundamental matrix, rotation matrix, and translation matrix; correct the camera for distortion; then capture and store video;
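To make the role of the calibrated parameters concrete, the following minimal sketch shows how an intrinsic parameter matrix and radial distortion coefficients map a 3D point in camera coordinates to a pixel. It does not perform Zhang's calibration itself. The function name `project_point`, the parameter names `fx`, `fy`, `cx`, `cy`, `k1`, `k2`, and the two-term radial distortion model are assumptions for illustration; the excerpt does not state which distortion model the patent uses.

```python
def project_point(point_cam, fx, fy, cx, cy, k1=0.0, k2=0.0):
    """Project a 3D point given in camera coordinates to pixel coordinates.

    Assumes a pinhole model with intrinsic matrix
    K = [[fx, 0, cx], [0, fy, cy], [0, 0, 1]] and two radial
    distortion terms (k1, k2). This model is an illustrative assumption.
    """
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")

    # Normalized image coordinates (perspective division).
    xn, yn = x / z, y / z

    # Radial distortion scales the normalized coordinates by a
    # polynomial in the squared distance from the optical axis.
    r2 = xn * xn + yn * yn
    factor = 1.0 + k1 * r2 + k2 * r2 * r2
    xd, yd = xn * factor, yn * factor

    # Apply the intrinsic matrix to obtain pixel coordinates.
    return fx * xd + cx, fy * yd + cy


if __name__ == "__main__":
    # A point on the optical axis lands on the principal point.
    print(project_point((0.0, 0.0, 1.0), 500.0, 500.0, 320.0, 240.0))
```

Correcting the camera for distortion amounts to inverting the distortion step of this mapping for every pixel, which calibration libraries usually do with a precomputed lookup map.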

[0092] 2. Process the video to obtain frame images, and preprocess the obtained images using an image enhancement method based on active lighting, including the following steps:

[0093] ① Use the depth of field to divide the image into foreground and background regions;

[0094] ② On the basis of the depth of field, separate the object from the background according to their gradient information;

[0095] ③ Select the pixels at infinity that have lo...
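Steps ① and ② above can be sketched as follows. This is a rough illustration under stated assumptions, not the patent's procedure: it assumes a per-pixel depth map is already available, uses a fixed depth threshold for the foreground/background split, and treats foreground pixels with a strong intensity gradient as a stand-in for object boundaries. The function names and both thresholds are hypothetical.

```python
import math


def split_by_depth(depth, threshold):
    """Return a mask where True marks foreground (depth below threshold)."""
    return [[d < threshold for d in row] for row in depth]


def gradient_magnitude(img):
    """Gradient magnitude from clamped finite differences.

    Differences are taken between the neighbors on either side of a
    pixel (clamped at the borders) and are not divided by the step size.
    """
    h, w = len(img), len(img[0])
    grad = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            gx = img[r][min(c + 1, w - 1)] - img[r][max(c - 1, 0)]
            gy = img[min(r + 1, h - 1)][c] - img[max(r - 1, 0)][c]
            grad[r][c] = math.hypot(gx, gy)
    return grad


def foreground_edges(depth, img, depth_threshold, grad_threshold):
    """Mark foreground pixels whose gradient magnitude is large.

    Combining the two cues with a logical AND is an assumption made
    for this sketch.
    """
    fg = split_by_depth(depth, depth_threshold)
    grad = gradient_magnitude(img)
    return [
        [fg[r][c] and grad[r][c] >= grad_threshold for c in range(len(img[0]))]
        for r in range(len(img))
    ]


if __name__ == "__main__":
    depth = [[1, 1, 9], [1, 1, 9]]        # small values are near the camera
    img = [[10, 10, 200], [10, 10, 200]]  # grayscale intensities
    print(foreground_edges(depth, img, depth_threshold=5, grad_threshold=50))
```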
