Method and system for achieving short-distance character extraction of mobile terminal

A text extraction technology for mobile terminals, applied in neural learning methods, character recognition, character and pattern recognition, and related fields. It addresses the problem that the efficiency and accuracy of text information extraction cannot both be achieved, with the effects of a good user experience, an expanded image range, and improved efficiency and accuracy.

Examples

Embodiment 1

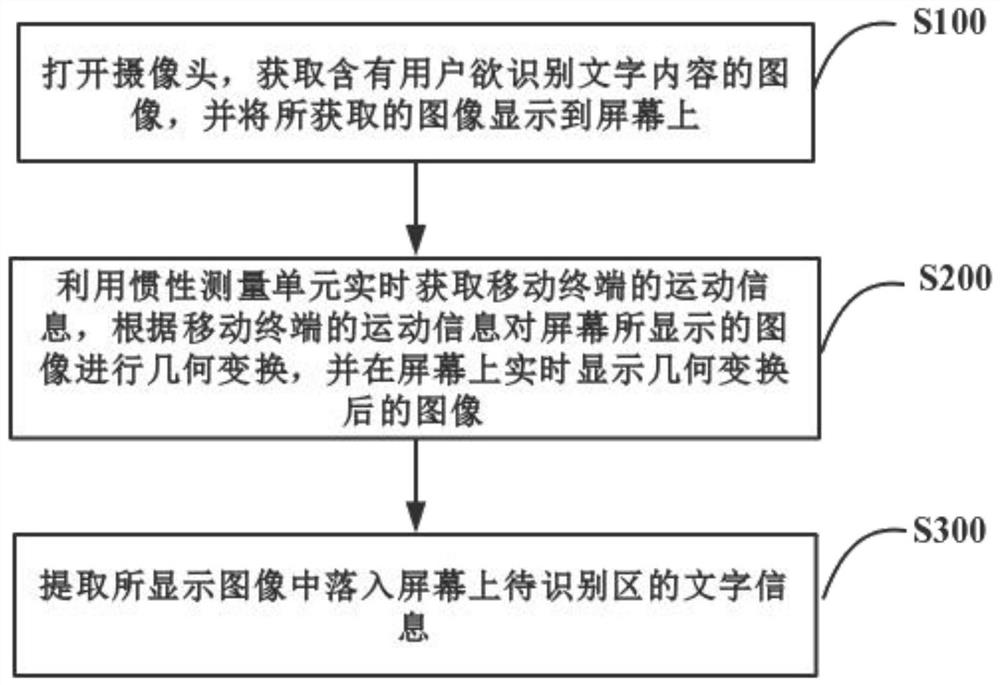

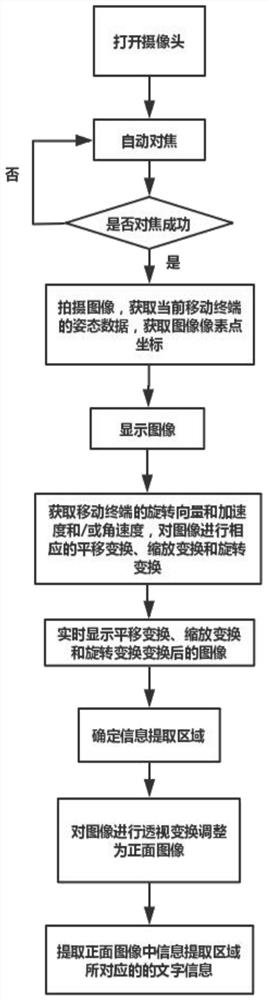

[0114] As shown in Figure 2, a method by which a mobile terminal performs short-distance text extraction includes:

[0115] Step S100, specifically:

[0116] Turn on the camera and the auto-focus function of the mobile terminal, and check whether the image currently captured by the camera is in focus. If focusing succeeds, control the camera to automatically capture a clear image; if it does not, refocus until a clear image is captured. Display the acquired image on the screen;
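
The focus-check-and-retry loop in this step can be sketched as follows. This is a minimal illustration, not the patent's implementation: the source does not say how focus is checked, so the variance-of-Laplacian sharpness measure, the `threshold` value, and the `max_attempts` cap are all assumptions, and `grab_frame` and `refocus` are hypothetical callables standing in for the camera interface.

```python
from typing import Callable, List, Optional

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def laplacian_variance(img: Image) -> float:
    """Variance of a 4-neighbour Laplacian; higher values suggest a sharper image."""
    height, width = len(img), len(img[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            lap = (img[y - 1][x] + img[y + 1][x]
                   + img[y][x - 1] + img[y][x + 1]
                   - 4 * img[y][x])
            responses.append(lap)
    if not responses:
        return 0.0
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def capture_clear_image(
    grab_frame: Callable[[], Image],   # hypothetical: returns the current camera frame
    refocus: Callable[[], None],       # hypothetical: triggers another auto-focus pass
    threshold: float = 100.0,          # assumed sharpness cut-off, needs tuning per device
    max_attempts: int = 10,            # assumed cap; the source retries until success
) -> Optional[Image]:
    """Return the first frame judged sharp, refocusing after each blurry frame."""
    for _ in range(max_attempts):
        frame = grab_frame()
        if laplacian_variance(frame) >= threshold:
            return frame
        refocus()
    return None  # no sharp frame within the attempt budget
```

The returned frame is the one that would then be displayed on the screen. On a real device the platform's own auto-focus state would normally replace the sharpness heuristic.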

[0117] While acquiring the image, use the inertial measurement unit to acquire the attitude data of the current mobile terminal;
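
One way to associate attitude data with an image captured at the same moment is to tag each frame with the inertial sample nearest to it in time. The sketch below assumes this nearest-timestamp pairing; the `ImuSample` and `CapturedFrame` types, the `tag_frame_with_attitude` function, and the (roll, pitch, yaw) attitude layout are hypothetical and do not come from the source.

```python
from dataclasses import dataclass
from typing import Any, Sequence, Tuple

Attitude = Tuple[float, float, float]  # assumed (roll, pitch, yaw) in radians


@dataclass
class ImuSample:
    timestamp: float   # seconds
    attitude: Attitude


@dataclass
class CapturedFrame:
    timestamp: float   # seconds, same clock as the IMU samples
    image: Any         # image payload, opaque to this sketch
    attitude: Attitude


def tag_frame_with_attitude(
    image: Any, frame_time: float, imu_samples: Sequence[ImuSample]
) -> CapturedFrame:
    """Attach the attitude of the IMU sample closest in time to the frame."""
    if not imu_samples:
        raise ValueError("no IMU samples available for this frame")
    nearest = min(imu_samples, key=lambda s: abs(s.timestamp - frame_time))
    return CapturedFrame(frame_time, image, nearest.attitude)
```

Because inertial sensors usually sample faster than the camera, nearest-sample pairing is often adequate; interpolating between the two neighbouring samples would be a refinement.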

[0118] Step S200, specifically:

[0119] Use the inertial measurement unit to obtain, in real time, the rotation vector r of the current mobile terminal and the acceleration a and/or angular velocity w of the current mobile terminal; according to the acquired rotation vector r of the current mobile terminal and the acceleration a and / or an...
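
For readers unfamiliar with rotation vectors, the sketch below shows how a rotation vector r can be turned into a 3x3 rotation matrix with Rodrigues' formula. The source text is truncated and does not say how r is used or which convention it follows, so this is purely illustrative: it assumes the axis-angle convention in which the direction of r is the rotation axis and its length is the angle in radians. Some mobile sensor APIs instead scale the axis by sin(θ/2), which would need a different conversion. The function name is hypothetical.

```python
import math
from typing import List, Sequence


def rotation_vector_to_matrix(r: Sequence[float]) -> List[List[float]]:
    """Rodrigues' formula, assuming |r| is the rotation angle in radians."""
    theta = math.sqrt(sum(component * component for component in r))
    if theta < 1e-12:
        # Negligible rotation: return the identity matrix.
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = r[0] / theta, r[1] / theta, r[2] / theta  # unit rotation axis
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    one_minus_cos = 1.0 - cos_t
    return [
        [cos_t + kx * kx * one_minus_cos,
         kx * ky * one_minus_cos - kz * sin_t,
         kx * kz * one_minus_cos + ky * sin_t],
        [ky * kx * one_minus_cos + kz * sin_t,
         cos_t + ky * ky * one_minus_cos,
         ky * kz * one_minus_cos - kx * sin_t],
        [kz * kx * one_minus_cos - ky * sin_t,
         kz * ky * one_minus_cos + kx * sin_t,
         cos_t + kz * kz * one_minus_cos],
    ]
```

Under the stated convention, a rotation vector of (0, 0, π/2) yields a quarter turn about the z axis.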

Embodiment 2

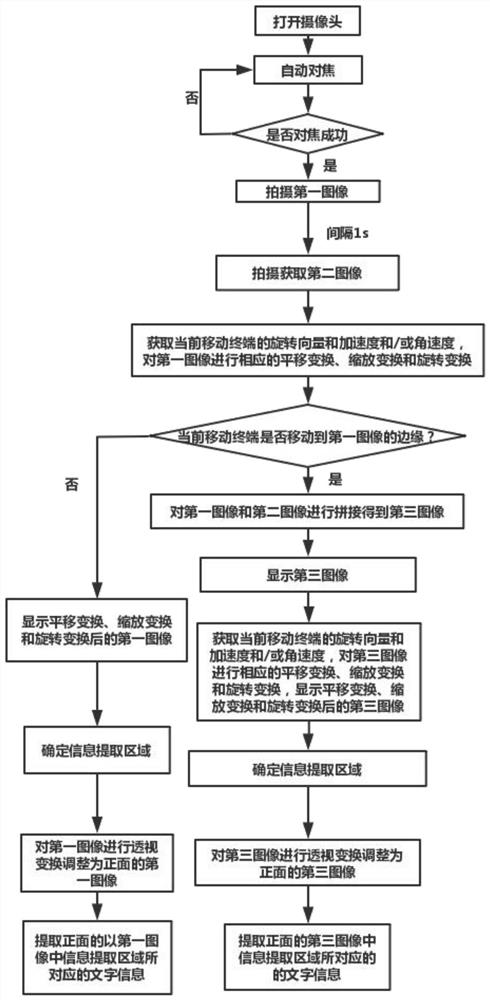

[0125] As shown in Figure 3, a method by which a mobile terminal performs short-distance text extraction includes:

[0126] Step S100, specifically:

[0127] Turn on the camera and the auto-focus function of the mobile terminal, and check whether the image currently captured by the camera is in focus. If focusing succeeds, control the camera to automatically capture a clear image; if it does not, refocus until a clear image is captured;

[0128] Take a first, clearly focused image; while taking it, use the inertial measurement unit to obtain the attitude data of the current mobile terminal, and display the first image on the screen;

[0129] When the inertial measurement unit senses that the mobile terminal is moving, the camera is controlled to collect a second image adjacent to the first image within the image collection period, wherein the first image and the second image have an overlapping portion;

[0130] Step S200, specif...
