Robot man-machine interaction method

A robot human-computer interaction method in the fields of human-computer interaction and robotics, applied to language interaction, radar obstacle avoidance, deep learning, and active visual perception of robots. It addresses the problem that knowledge question answering for robots has received little research and made little progress, with the effect of improving the robot's intelligence and navigation accuracy.

Embodiment Construction

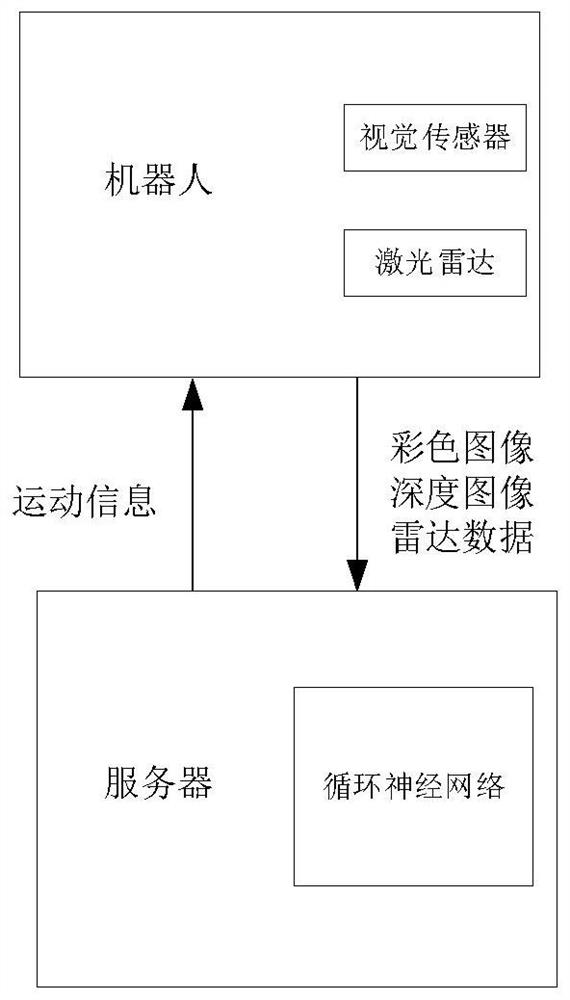

[0017] The robot human-computer interaction method proposed by the present invention includes:

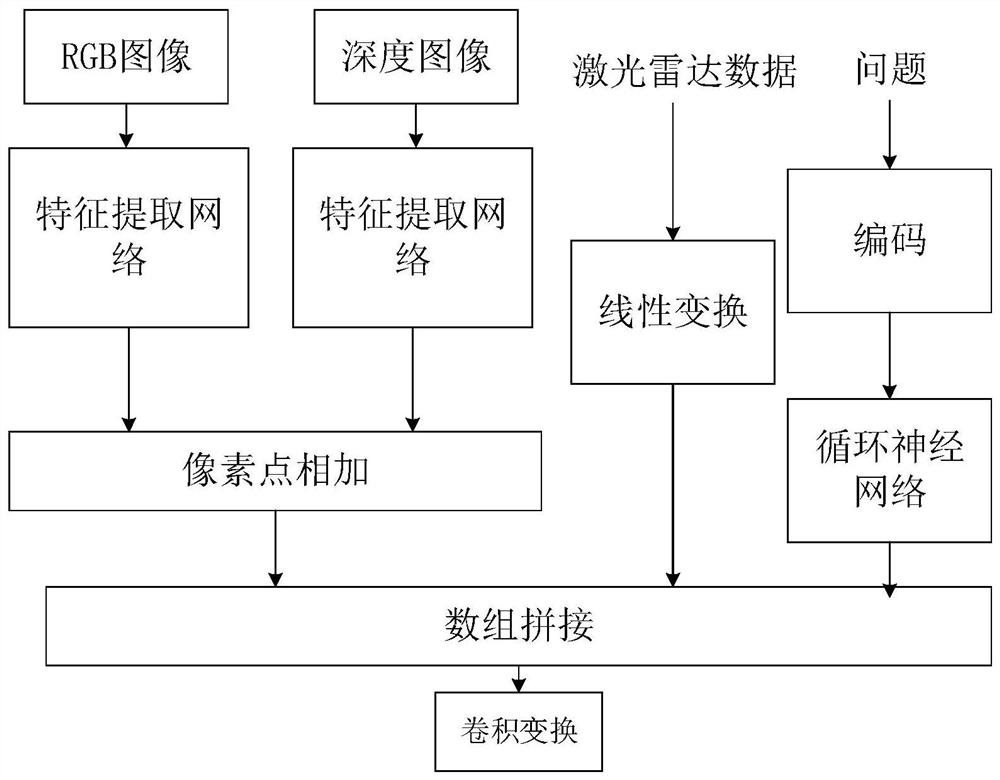

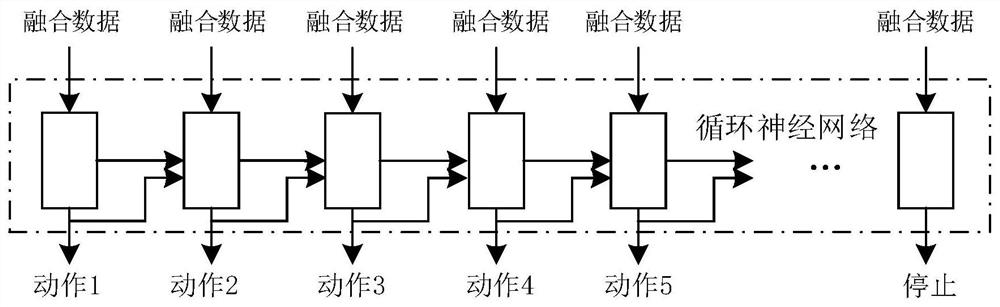

[0018] Capture an RGB image and a depth map of the environment, and detect obstacle information to obtain a lidar data array; normalize the acquired data; construct a question encoding network for human-computer interaction to encode the question; construct an image feature extraction network that extracts the RGB image and depth image information into a feature matrix; splice the lidar data, the question encoding and the feature matrix to obtain a feature fusion matrix; apply a convolutional network to the feature fusion matrix to obtain a data fusion matrix representing the surrounding environment; and train a recurrent neural network as the navigator, which takes the data fusion matrix as input and outputs one of the actions "forward, left, right, stop" to control the direction of the robot's movement.
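The data flow of paragraph [0018] can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the question encoding and image features are assumed to be already computed as plain vectors, the convolutional fusion step is omitted, min-max scaling stands in for the unspecified normalization, and the navigator is an untrained Elman-style recurrent cell with random weights. All names (`normalize`, `fuse`, `Navigator`) are hypothetical.

```python
import math
import random

ACTIONS = ["forward", "left", "right", "stop"]


def normalize(values):
    """Min-max scale readings to [0, 1]; a constant input maps to zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def fuse(lidar, question_code, image_features):
    """Splice the three modalities into one flat feature vector."""
    return normalize(lidar) + list(question_code) + list(image_features)


def make_matrix(rows, cols, rng):
    """Small random weight matrix (placeholder for trained weights)."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Matrix-vector product on nested lists."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class Navigator:
    """Elman-style recurrent cell; weights are random, so actions are arbitrary."""

    def __init__(self, input_size, hidden_size=8, seed=0):
        rng = random.Random(seed)
        self.w_in = make_matrix(hidden_size, input_size, rng)
        self.w_rec = make_matrix(hidden_size, hidden_size, rng)
        self.w_out = make_matrix(len(ACTIONS), hidden_size, rng)
        self.hidden = [0.0] * hidden_size

    def step(self, fused):
        """Update the hidden state from one fused observation and pick an action."""
        from_input = matvec(self.w_in, fused)
        from_state = matvec(self.w_rec, self.hidden)
        self.hidden = [math.tanh(a + b) for a, b in zip(from_input, from_state)]
        scores = matvec(self.w_out, self.hidden)
        return ACTIONS[scores.index(max(scores))]
```

A call such as `Navigator(input_size=len(fused)).step(fused)` returns one of the four action strings; because the hidden state is carried between calls, successive observations influence later decisions, which is the role the text assigns to the recurrent navigator.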

[0019] Introduce an embodiment of the inventive method bel...
