Lane line detection method and system

A lane line detection technology, applied in the field of lane line detection methods and systems. It addresses the problems of severe performance degradation, inefficient encoders, and a number of lane lines that is not fixed, and it achieves richer semantic features, improved positioning accuracy, and richer global semantic information.

Examples

Embodiment 1

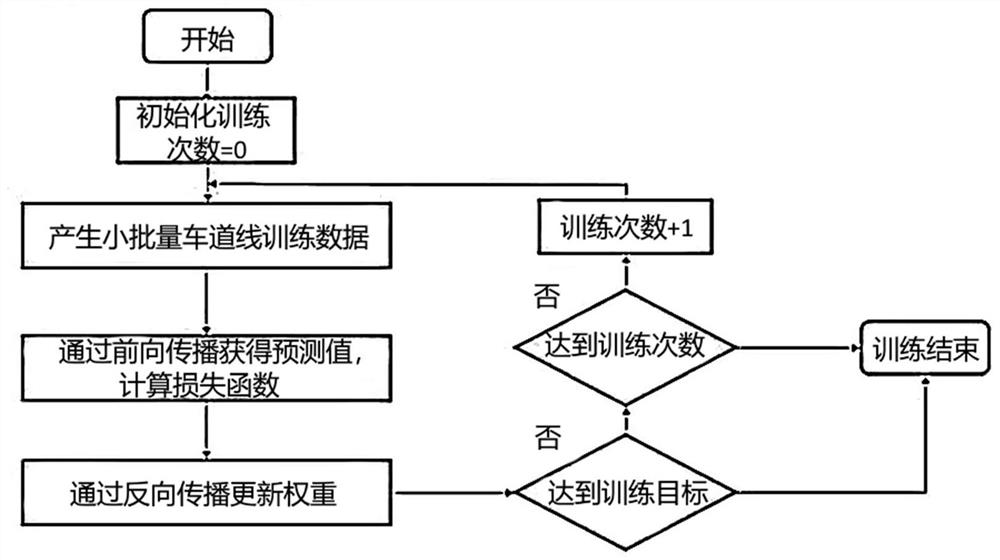

[0148] Embodiment 1 provides a lane line detection method comprising the following steps:

[0149] Build a convolutional neural network model;

[0150] Construct the training phase and the inference phase from the network model;

[0151] Adjust the preprocessing used in the training phase;

[0152] Obtain the predicted lane lines through the inference phase.

Embodiment 2

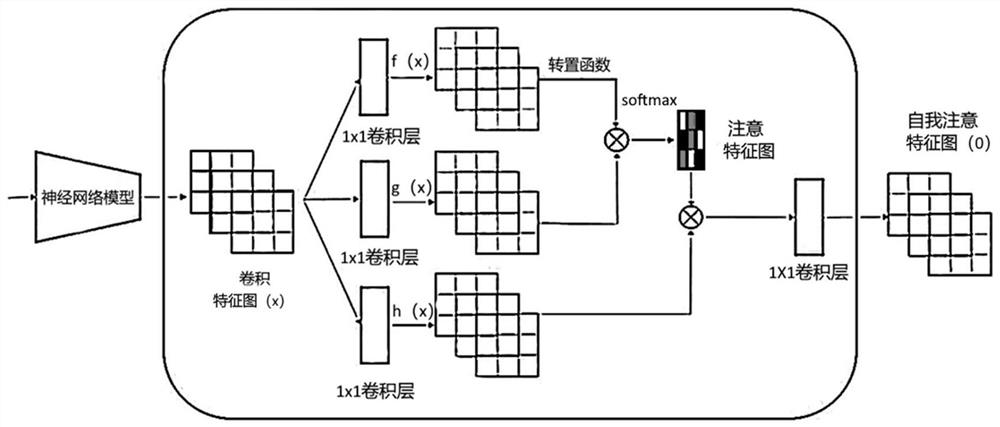

[0154] Building on Embodiment 1, the neural network model is constructed as follows:

[0155] The neural network model performs feature extraction to produce the convolutional feature map;

[0156] The convolutional feature map is fed into three branches, each consisting of a 1x1 convolutional layer;

[0157] Each 1x1 convolutional layer compresses the number of channels of the feature map;

[0158] The compressed output of the first branch is transposed and matrix-multiplied with the compressed output of the second branch to obtain the attention feature map;

[0159] The attention feature map is passed through softmax to obtain the normalized attention feature map;

[0160] The compressed output of the third branch is matrix-multiplied with the normalized attention feature map to obtain a weighted attention feature map;

[0161] A 1x1 convolution increases the number of channels of the weighted attention feature map, and the result is output as the self-attention feature map.
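The steps in [0155] to [0161] can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the helper names `conv1x1` and `self_attention`, the weight names, the omission of bias terms, the random weights, and the choice to apply softmax over the last axis are all assumptions, since the text above does not specify them. The reduced channel count used in the usage lines is likewise illustrative.

```python
import numpy as np

def conv1x1(x, w):
    """1x1 convolution without bias: x is (N, C_in, H, W), w is (C_out, C_in)."""
    return np.einsum("oc,nchw->nohw", w, x)

def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(x, w1, w2, w3, w_out):
    """Hypothetical sketch of the three-branch attention block.

    x:     (N, C, H, W) convolutional feature map
    w1-w3: (C_red, C) weights of the three compressing 1x1 convolutions
    w_out: (C_out, C_red) weights of the final 1x1 convolution
    """
    n, _, h, w = x.shape
    # Three branches: compress channels, then flatten the spatial axes.
    b1 = conv1x1(x, w1).reshape(n, -1, h * w)   # (N, C_red, H*W)
    b2 = conv1x1(x, w2).reshape(n, -1, h * w)   # (N, C_red, H*W)
    b3 = conv1x1(x, w3).reshape(n, -1, h * w)   # (N, C_red, H*W)
    # Transpose the first branch and multiply with the second branch.
    attn = np.matmul(b1.transpose(0, 2, 1), b2)  # (N, H*W, H*W)
    # Normalize; the softmax axis is an assumption.
    attn = softmax(attn, axis=-1)
    # Weight the third branch with the normalized attention map.
    weighted = np.matmul(b3, attn)               # (N, C_red, H*W)
    # Raise the channel count again with a 1x1 convolution.
    out = conv1x1(weighted.reshape(n, -1, h, w), w_out)
    return out, attn

# Usage with random data: C = 16 compressed to 2 channels.
rng = np.random.default_rng(0)
x = rng.standard_normal((2, 16, 4, 5))
w1, w2, w3 = (rng.standard_normal((2, 16)) for _ in range(3))
w_out = rng.standard_normal((16, 2))
out, attn = self_attention(x, w1, w2, w3, w_out)
print(out.shape, attn.shape)  # (2, 16, 4, 5) (2, 20, 20)
```

Note that the attention map has H*W rows and H*W columns per sample, so its memory footprint grows quadratically with the spatial size of the feature map.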

Embodiment 3

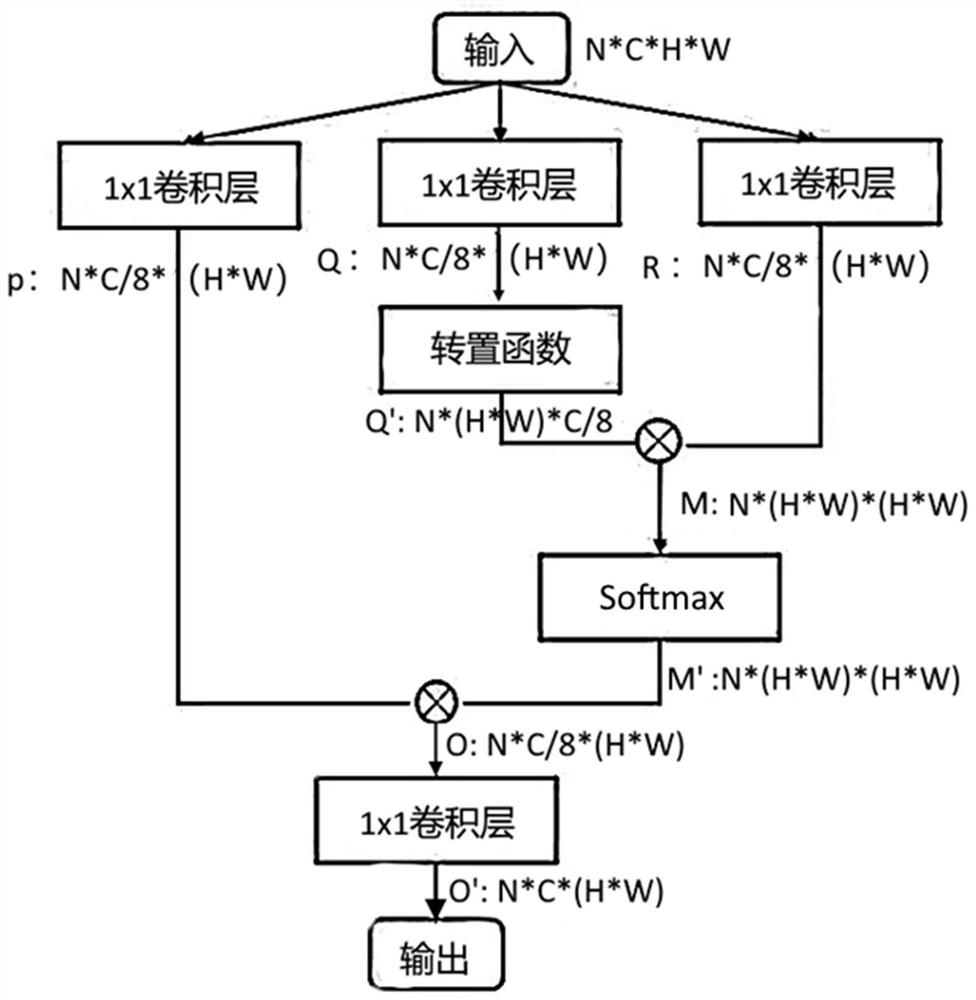

[0163] Building on Embodiment 2, the attention structure is derived from the neural network model and is specified as follows:

[0164] Assume that the feature map produced by the backbone network has size N*C*H*W;

[0165] Each of the three branches then applies a 1x1 convolution to compress the number of channels of the feature map;

[0166] Each compressed feature map has size N*(C/8)*(H*W);

[0167] The feature map Q of the second branch is then transposed to obtain Q', whose size is N*(H*W)*(C/8);

[0168] Q' is matrix-multiplied with R to obtain M, and M is normalized with softmax to obtain M', whose size is N*(H*W)*(H*W);

[0169] M' characterizes the extracted global semantic information. P is then matrix-multiplied with M' to obtain a feature map O whose size is N*(C/8)*(H*W);

[0170] Finally, the 1X1...
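The shape bookkeeping in [0164] to [0169] can be summarized compactly as below. This is a restatement under assumptions: X is a name introduced here for the backbone feature map, the transpose is taken per sample over the last two axes, and the text names Q as the second branch without stating which of the remaining branches P and R are. The step in [0170] is truncated in the source and is not reconstructed.

```latex
\begin{aligned}
X &\in \mathbb{R}^{N \times C \times H \times W} \\
P,\ Q,\ R &\in \mathbb{R}^{N \times \frac{C}{8} \times HW} \\
Q' &= Q^{\top} \in \mathbb{R}^{N \times HW \times \frac{C}{8}} \\
M &= Q' R \in \mathbb{R}^{N \times HW \times HW}, \qquad M' = \operatorname{softmax}(M) \\
O &= P M' \in \mathbb{R}^{N \times \frac{C}{8} \times HW}
\end{aligned}
```

The inner dimensions agree at each product: C/8 contracts in Q'R, and H*W contracts in PM'.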
