Multi-task chapter-level event extraction method based on multi-headed self-attention mechanism

An event extraction technique using attention, applicable to neural learning methods, natural language data processing, and biological neural network models. It addresses the problems that existing approaches do not fully consider contextual relationships in the text, ignore relationships between different sentences, and cannot extract across clauses, and it aims to achieve good recognition and extraction performance.

Embodiment Construction

[0051] It should be understood that the specific embodiments described here are only used to explain the present invention, not to limit the present invention.

[0052] For those skilled in the art, it is understandable that some well-known structures and descriptions thereof may be omitted in the drawings.

[0053] To better illustrate this embodiment, its technical solution is described clearly and completely below with reference to the accompanying drawings.

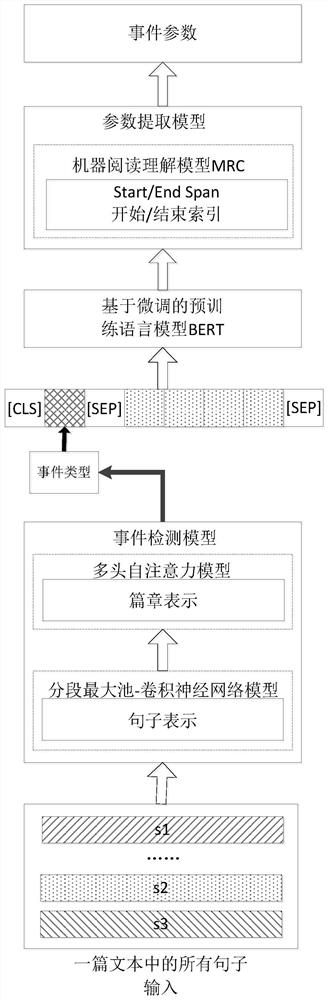

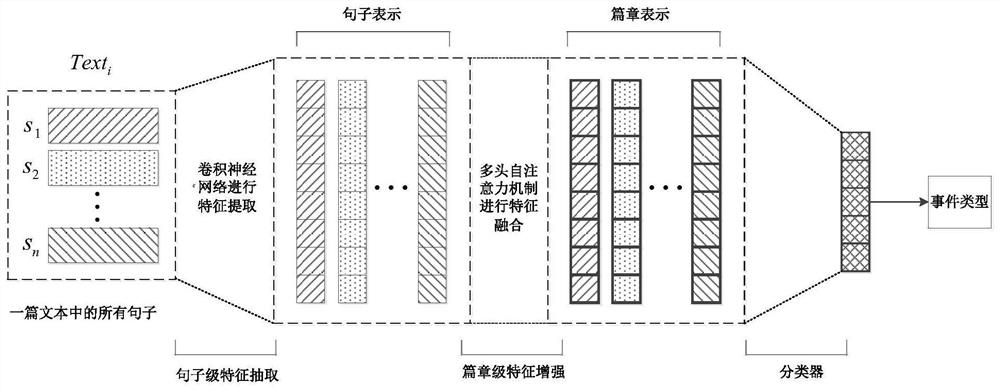

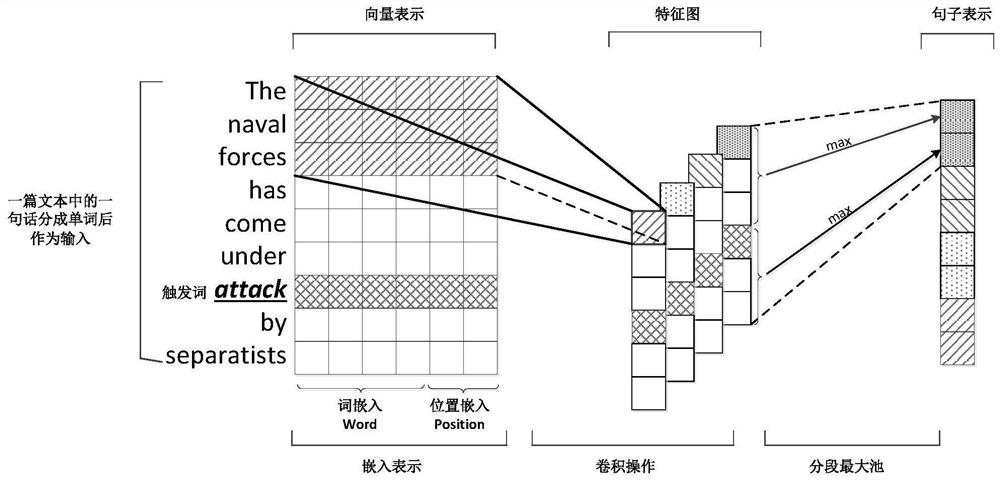

[0054] As shown in Figures 1 and 2, this embodiment provides a multi-task chapter-level event extraction method based on a pre-trained language model, which specifically includes the following steps:

[0055] Step 101: following a scheme designed by experts, general-domain event types are divided into 5 categories (Action, Change, Possession, Scenario, Sentiment) and 168 subcategories (such as Attack, Bringing, Cost, Departing....
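The two-level event type scheme in Step 101 can be sketched as a pair of label indexes, as a classifier would typically consume them. This is a minimal illustration under stated assumptions, not the patent's implementation: the names `CATEGORIES`, `KNOWN_SUBCATEGORIES`, `build_label_index`, and `schema_is_complete` are hypothetical. Only the four subcategories named in the text are listed, and no subcategory is assigned to a parent category, because the source does not give that mapping.

```python
# The five top-level categories named in Step 101.
CATEGORIES = ["Action", "Change", "Possession", "Scenario", "Sentiment"]

# Only four of the 168 subcategories are named in the text; the others are
# deliberately left out rather than guessed.
KNOWN_SUBCATEGORIES = ["Attack", "Bringing", "Cost", "Departing"]
EXPECTED_SUBCATEGORY_COUNT = 168


def build_label_index(labels):
    """Map each label to a stable integer id, rejecting duplicates."""
    if len(set(labels)) != len(labels):
        raise ValueError("duplicate label in schema")
    return {label: i for i, label in enumerate(labels)}


def schema_is_complete(sub_index, expected=EXPECTED_SUBCATEGORY_COUNT):
    """Return True only when every expected subcategory has been listed."""
    return len(sub_index) == expected


category_to_id = build_label_index(CATEGORIES)
subcategory_to_id = build_label_index(KNOWN_SUBCATEGORIES)
```

Keeping the two indexes separate avoids inventing a category-to-subcategory assignment; `schema_is_complete` stays false until the full list of 168 subcategories is supplied.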
