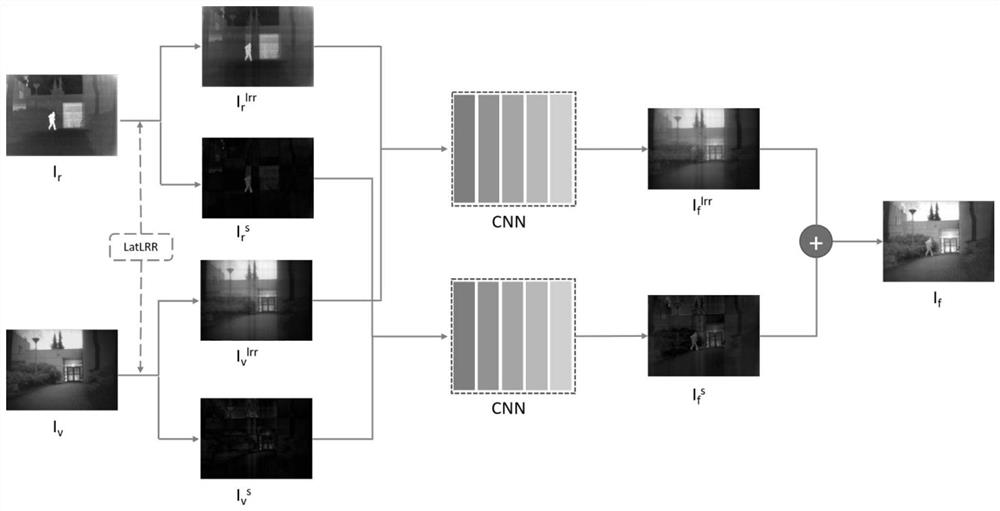

Infrared and visible light image fusion method combining latent low-rank representation and convolutional neural network

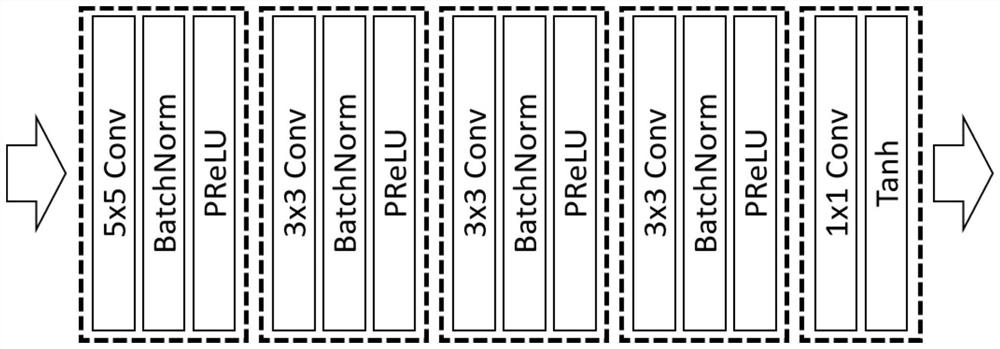

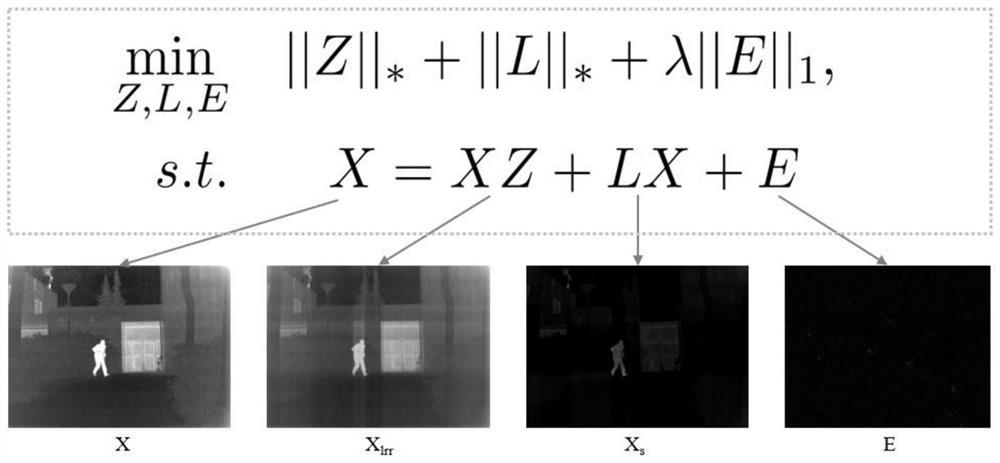

This technology applies a convolutional neural network and low-rank representation, and falls under neural learning methods, biological neural network models, neural architectures, and related fields. It addresses problems such as the inability to fully utilize image features, the difficulty of the model, and the difficulty of implementing the method. Its stated effects are guaranteed rationality and reversibility, prominent targets, and rich detail information.


Embodiment Construction

[0049] To make the object, technical solution, and advantages of the present invention clearer, the invention is described in further detail below with reference to the accompanying drawings and embodiments. The invention provides a method for fusing infrared and visible light images that combines latent low-rank representation and a convolutional neural network, comprising the following steps:

[0050] Step 1) Select an infrared and visible light fusion dataset, and expand it to serve as the training set of the neural network.

[0051] The infrared and visible light fusion dataset is TNO, available at https://figshare.com/articles/TNO_Image_Fusion_Dataset/1008029. Based on image quality and how often the images appear in published papers, 28 pairs of infrared and visible light images were selected as the original images. Because the model of the present invention has a neural network structure, a large amount of data is required for training, so data expansion i...
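The expansion procedure itself is cut off in the text above, so the following is only an illustrative sketch of one common way to enlarge a small set of registered image pairs: cutting aligned, overlapping patches from each infrared/visible pair. The function name `extract_patch_pairs`, the patch size, the stride, and the synthetic arrays are all assumptions made for illustration and are not taken from the patent.

```python
import numpy as np


def extract_patch_pairs(ir, vis, patch=64, stride=32):
    """Cut aligned patches from one registered infrared/visible image pair.

    Hypothetical helper: patch size and stride are illustrative values,
    not parameters stated in the source text.
    """
    if ir.shape != vis.shape:
        raise ValueError("infrared and visible images must have the same shape")
    height, width = ir.shape[:2]
    pairs = []
    # Slide a window over the image; the same window is applied to both
    # modalities so every patch pair stays pixel-aligned.
    for top in range(0, height - patch + 1, stride):
        for left in range(0, width - patch + 1, stride):
            ir_patch = ir[top:top + patch, left:left + patch]
            vis_patch = vis[top:top + patch, left:left + patch]
            pairs.append((ir_patch, vis_patch))
    return pairs


# Synthetic stand-ins for one image pair (not real TNO data).
rng = np.random.default_rng(0)
ir = rng.random((128, 160))
vis = rng.random((128, 160))

pairs = extract_patch_pairs(ir, vis)
print(len(pairs), pairs[0][0].shape)
```

Applying a helper like this to each of the 28 selected pairs would turn a few dozen images into a much larger collection of training samples. Whether the patent uses patch cropping, and with what sizes, cannot be determined from the truncated paragraph.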
