An in-memory computing device and method based on ping-pong structure

This technology concerns an in-memory computing device based on a ping-pong structure. It addresses the limited performance improvement achieved by existing ping-pong in-memory computing macros, and aims for improved optimization efficiency, improved hardware utilization efficiency, and energy-efficient computation.

Examples

Embodiment 1

[0068] An in-memory computing method of the above-mentioned in-memory computing device based on a ping-pong structure, comprising the following steps:

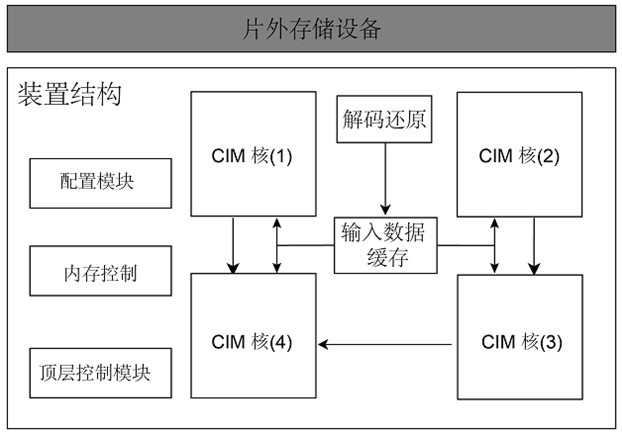

[0069] Step 1. Set the parameters of the in-memory computing macro according to the needs of the application scenario: the number of rows of the macro row = 32, the number of columns col = 256, and the number of macros num_macro = 4;

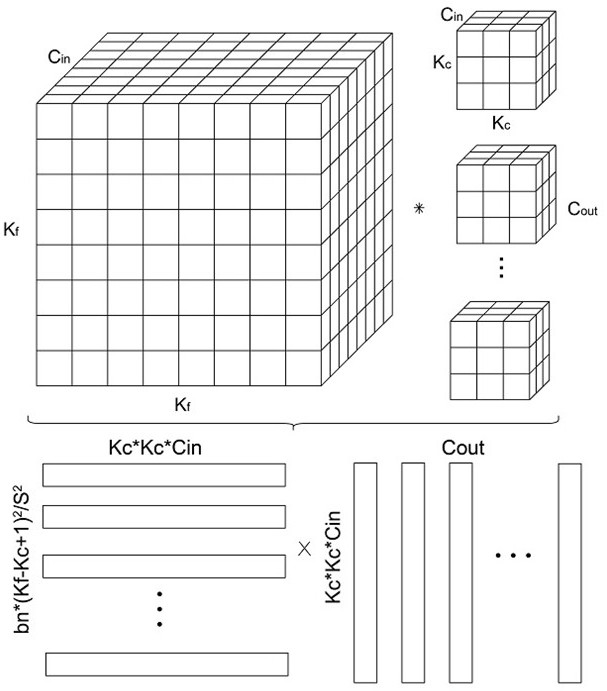

[0070] Step 2. Restructure the data to be computed. The workload here is the convolution operation of the 10th layer of the VGG-16 neural network with bn = 1, and the convolution operation is restructured as follows:

[0071] Expand the neural network convolution operation into a matrix multiplication operation. For the 10th layer of the VGG-16 network, the input feature size is (1, 28, 28, 512) and the convolution kernel size is (3, 3, 512, 512). The convolution operation with the frame number bn of...
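The expansion of a convolution into a matrix multiplication can be sketched with a standard im2col unfolding. This is an illustrative sketch only, not the procedure of the embodiment, whose text is truncated above. It assumes NHWC input layout (inferred from the size (1, 28, 28, 512)), a kernel layout of (height, width, input channels, output channels), and padding 1 with stride 1, which are the usual VGG-16 settings but are not stated in this excerpt. The function names `im2col_nhwc`, `conv_as_matmul`, and `gemm_shapes` are hypothetical.

```python
import numpy as np


def im2col_nhwc(x, kh, kw, pad=1, stride=1):
    """Unfold an NHWC input into a matrix of shape (N*OH*OW, kh*kw*C)."""
    n, h, w, c = x.shape
    # Zero-pad only the two spatial axes (assumption: zero padding).
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad), (0, 0)))
    oh = (h + 2 * pad - kh) // stride + 1
    ow = (w + 2 * pad - kw) // stride + 1
    cols = np.empty((n, oh, ow, kh, kw, c), dtype=x.dtype)
    for i in range(kh):
        for j in range(kw):
            # Gather, for every output position, the input value at kernel offset (i, j).
            cols[:, :, :, i, j, :] = xp[
                :, i:i + stride * oh:stride, j:j + stride * ow:stride, :
            ]
    return cols.reshape(n * oh * ow, kh * kw * c), (n, oh, ow)


def conv_as_matmul(x, k, pad=1, stride=1):
    """Compute a convolution as one matrix product of unfolded input and flattened kernel."""
    kh, kw, cin, cout = k.shape
    a, (n, oh, ow) = im2col_nhwc(x, kh, kw, pad, stride)
    b = k.reshape(kh * kw * cin, cout)  # same (i, j, channel) ordering as the columns of a
    return (a @ b).reshape(n, oh, ow, cout)


def gemm_shapes(x_shape, k_shape, pad=1, stride=1):
    """Return the shapes of the two matrices without allocating any data."""
    n, h, w, _ = x_shape
    kh, kw, cin, cout = k_shape
    oh = (h + 2 * pad - kh) // stride + 1
    ow = (w + 2 * pad - kw) // stride + 1
    return (n * oh * ow, kh * kw * cin), (kh * kw * cin, cout)
```

Under these assumptions, `gemm_shapes((1, 28, 28, 512), (3, 3, 512, 512))` gives a (784, 4608) by (4608, 512) product for the layer described above. How that product is then partitioned across the macros is not covered by this excerpt and is not modeled here.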
