Scene matching/visual odometry-based inertial integrated navigation method

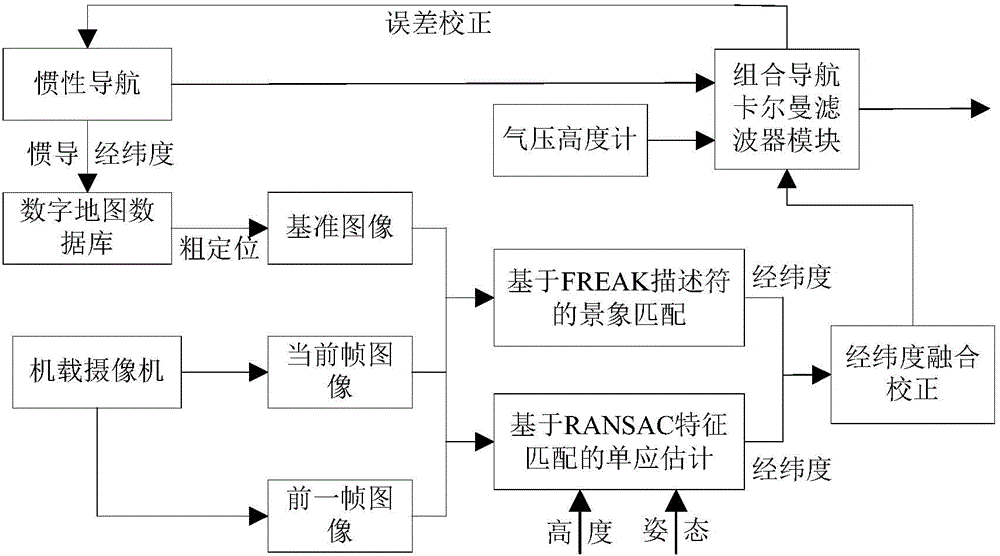

A technology that combines scene matching with inertial navigation. It applies to navigation, navigation by speed/acceleration measurement, surveying, and related fields. It addresses several problems: reliability and accuracy that fall short of high-precision navigation requirements, errors in the integrated navigation system, and weak GPS signal power. It improves autonomous flight capability and provides strong anti-interference and high positioning accuracy.


Specific Embodiment

[0062] 1. While the UAV is in flight, the onboard downward-looking camera acquires ground image a in real time.

[0063] The UAV's onboard downward-looking optical or infrared camera captures the ground image sequence in real time. Only the current frame and the previous frame need to be stored.
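Because only frames a' and a are needed at any moment, the image store can be a fixed two-slot buffer. The following is a minimal Python sketch under that assumption. The class `FramePair` and its methods are hypothetical names, not part of the original method, and the frames are placeholder strings rather than real images.

```python
from collections import deque


class FramePair:
    """Hypothetical two-slot store holding only the previous and current frame."""

    def __init__(self):
        # maxlen=2: appending a third frame silently discards the oldest one
        self._frames = deque(maxlen=2)

    def push(self, frame):
        """Store a newly captured frame (the new current frame a)."""
        self._frames.append(frame)

    def ready(self):
        """True once both a' (previous) and a (current) are available."""
        return len(self._frames) == 2

    def pair(self):
        """Return (previous, current), i.e. (a', a)."""
        if not self.ready():
            raise ValueError("need two frames before estimating motion")
        previous, current = self._frames
        return previous, current


# Usage: frames are placeholder strings instead of real images
buf = FramePair()
for frame in ["frame0", "frame1", "frame2"]:
    buf.push(frame)
prev_frame, cur_frame = buf.pair()
```

A bounded `deque` keeps memory constant regardless of flight length. This matches the requirement that only two frames be retained.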

[0064] 2. Using image a and the previous frame a', estimate the homography matrix between a and a' to determine the visual odometry of the UAV.
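The homography between a' and a can be estimated from matched feature points. The source does not say which estimator is used, so this is only an illustrative sketch. It implements the normalized Direct Linear Transform (DLT) in Python with NumPy. `estimate_homography` and `_normalize` are hypothetical helpers, and the code assumes point matching has already been done. With real, noisy matches one would typically add outlier rejection, for example OpenCV's `cv2.findHomography(src, dst, cv2.RANSAC)`.

```python
import numpy as np


def _normalize(pts):
    """Hartley normalization: move centroid to origin, mean distance sqrt(2)."""
    centroid = pts.mean(axis=0)
    mean_dist = np.mean(np.linalg.norm(pts - centroid, axis=1))
    s = np.sqrt(2.0) / mean_dist
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    pts_h = np.hstack([pts, np.ones((len(pts), 1))])
    return (pts_h @ T.T)[:, :2], T


def estimate_homography(src, dst):
    """Normalized DLT: find H with dst ~ H @ src in homogeneous pixels.

    src, dst: (N, 2) arrays of matched pixel coordinates in a' and a, N >= 4.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.shape[0] < 4:
        raise ValueError("need at least 4 matched point pairs")
    src_n, T_src = _normalize(src)
    dst_n, T_dst = _normalize(dst)
    rows = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        # Each correspondence yields two linear equations in the 9 entries of H
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # Least-squares null vector: right singular vector of the smallest singular value
    _, _, vt = np.linalg.svd(np.array(rows))
    H_n = vt[-1].reshape(3, 3)
    # Undo the normalization, then fix the projective scale so H[2, 2] == 1
    H = np.linalg.inv(T_dst) @ H_n @ T_src
    return H / H[2, 2]


# Synthetic check with an assumed ground-truth homography
H_true = np.array([[1.02, 0.01, 5.0],
                   [-0.01, 0.98, -3.0],
                   [1e-5, 2e-5, 1.0]])
src = np.array([[10, 20], [300, 40], [280, 250], [30, 230], [150, 120]], dtype=float)
src_h = np.hstack([src, np.ones((len(src), 1))])
dst_h = src_h @ H_true.T
dst = dst_h[:, :2] / dst_h[:, 2:]
H_est = estimate_homography(src, dst)
```

Normalizing both point sets before building the linear system keeps it well conditioned for pixel-scale coordinates. The unnormalized DLT is noticeably less stable.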

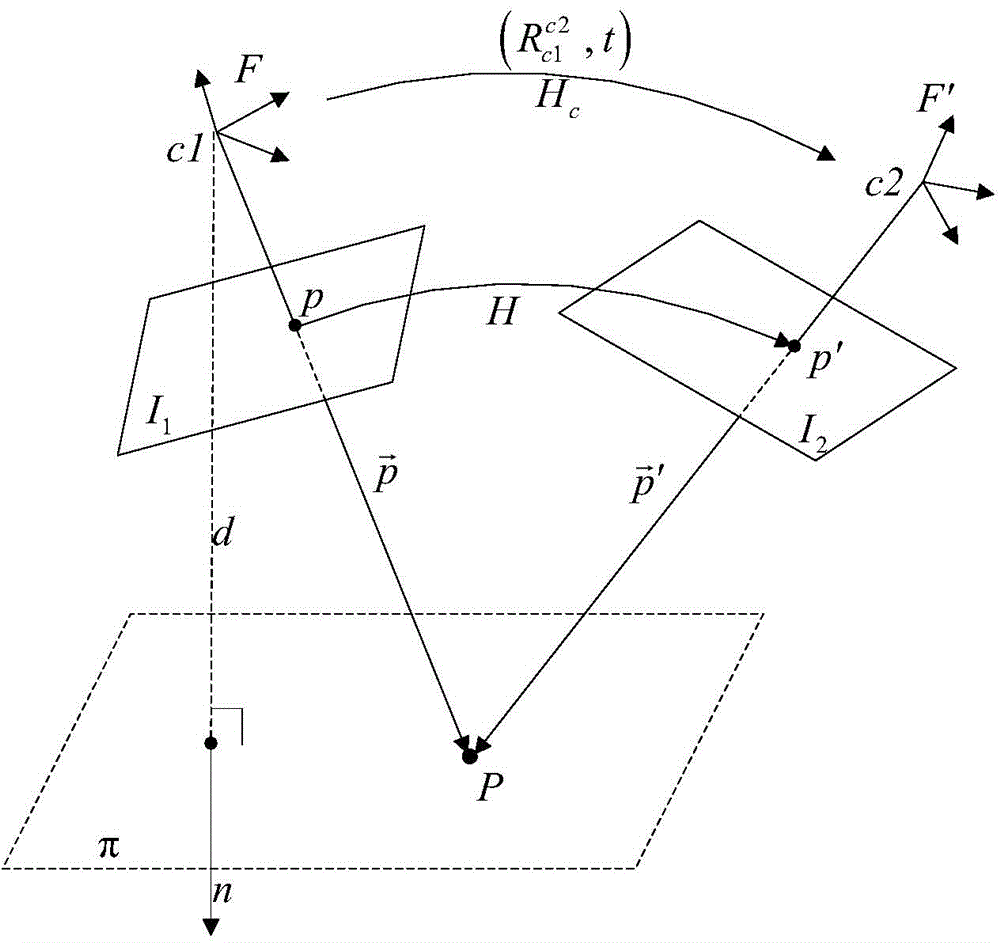

[0065] As shown in Figure 2, while the UAV is flying, the onboard camera successively captures two images, $I_1$ and $I_2$, at different poses. The corresponding camera coordinate systems are $F$ and $F'$. Assume that a point $P$ on the plane $\pi$ maps to point $p$ in image $I_1$ and to point $p'$ in image $I_2$, and that the corresponding vectors in $F$ and $F'$ are $\vec{p}$ and $\vec{p}'$. Then

[0066] $\vec{p}' = R_c \ldots$
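The source equation is truncated above. For orientation only, this is the standard plane-induced homography. It assumes, without support from the source, that points transform as $\vec{p}' = R_c\vec{p} + \vec{t}_c$ and that plane $\pi$ satisfies $\vec{n}^T\vec{p} = d$ in $F$. Substituting $1 = \vec{n}^T\vec{p}/d$ gives $\vec{p}' = (R_c + \vec{t}_c\vec{n}^T/d)\,\vec{p}$. With a camera intrinsic matrix $K$, the pixel homography is $H = K(R_c + \vec{t}_c\vec{n}^T/d)K^{-1}$. The Python sketch below checks this numerically. The helper names and the intrinsics, motion, and plane values are made up for illustration.

```python
import numpy as np


def rot_z(angle):
    """Rotation about the camera's optical (z) axis, e.g. a yaw change."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def plane_homography(K, R, t, n, d):
    """Pixel homography induced by the plane n^T X = d (X in frame F),
    for the assumed motion X' = R X + t from frame F to frame F'."""
    H_euclid = R + np.outer(t, n) / d
    H = K @ H_euclid @ np.linalg.inv(K)
    return H / H[2, 2]


def project(K, X):
    """Pinhole projection of a 3-D point X (camera frame) to pixel (u, v)."""
    x = K @ X
    return x[:2] / x[2]


# Illustrative values (assumed): 800 px focal length, ground plane 100 m
# from the camera along its optical axis.
K = np.array([[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]])
n = np.array([0.0, 0.0, 1.0])
d = 100.0
R = rot_z(0.05)
t = np.array([2.0, 0.5, 0.0])

X = np.array([5.0, -3.0, 100.0])   # point P on plane pi: n @ X == d
X_prime = R @ X + t                # the same point expressed in F'

p = project(K, X)                  # pixel p in I1
p_direct = project(K, X_prime)     # pixel p' in I2, projected directly
H = plane_homography(K, R, t, n, d)
ph = H @ np.array([p[0], p[1], 1.0])
p_via_H = ph[:2] / ph[2]           # pixel p' in I2, mapped through H
```

Take a downward-looking camera with $R_c = I$ and $\vec{n} = (0, 0, 1)^T$. The third column of $R_c + \vec{t}_c\vec{n}^T/d$ is then $(t_x/d,\ t_y/d,\ 1 + t_z/d)^T$. A homography alone therefore gives translation only up to the scale $d$. Metric displacement needs the height $d$ from another source, such as an altimeter; this is an assumption here, not something the source states.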

