Depth map based hand feature point detection method

A depth-map-based feature point detection technique, applicable to image enhancement, image analysis, image data processing, and related fields. It addresses problems such as connection and limited application range.

Embodiment Construction

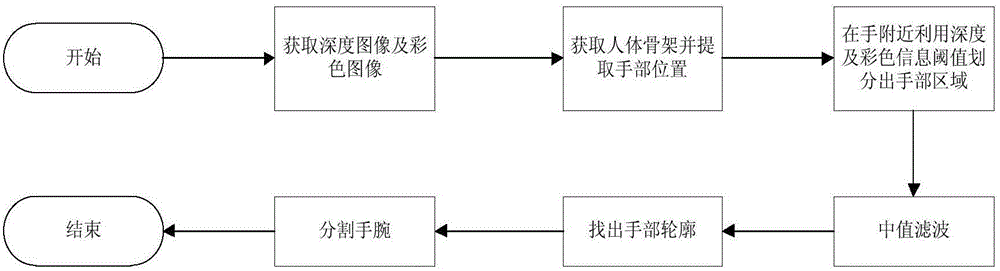

[0017] This depth map-based hand feature point detection method includes the following steps:

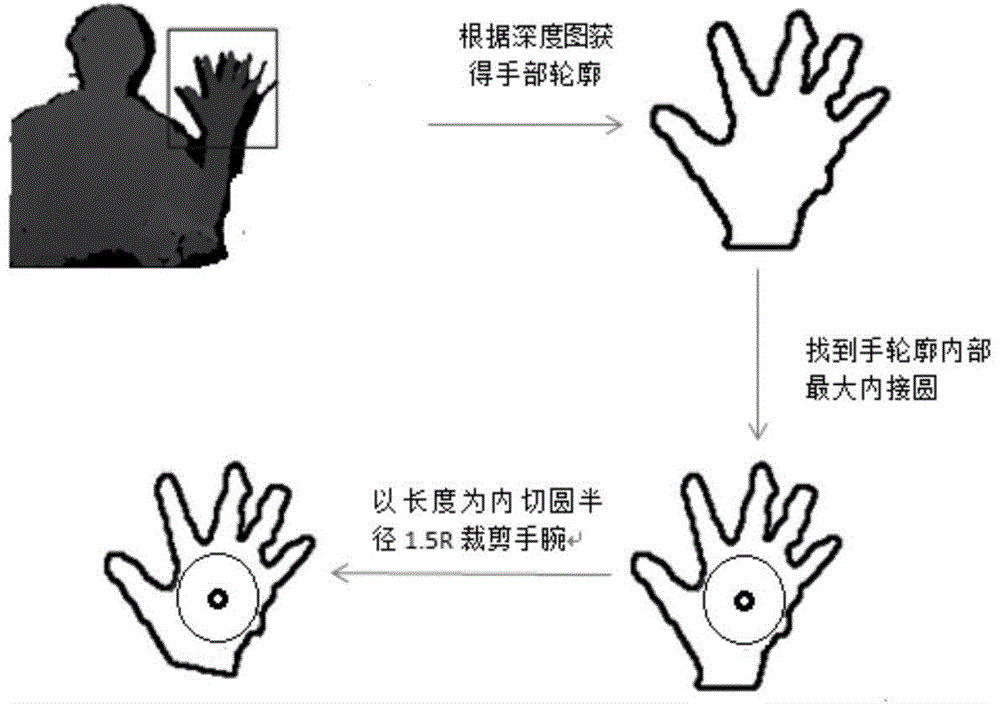

[0018] (1) Hand segmentation: A Kinect captures video sequences of human motion, from which the hands are extracted. OpenNI provides the hand position from the depth map, and an initial palm point is obtained by setting a search area and applying a depth threshold. The OpenCV find_contours function then extracts the hand contour. The palm point is determined accurately as the center of the largest inscribed circle of the hand contour: for every interior point of the hand, compute the shortest distance m to the contour points, then take the maximum M of these shortest distances. The interior point that attains M is the palm point, and the inscribed circle radius is R = M;
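The palm-point search in step (1) can be illustrated with a minimal pure-Python sketch. It is not the patent's implementation: the function names `segment_hand`, `contour_points`, and `palm_center`, and the parameters `half_window` and `depth_tol`, are hypothetical, and a simple 4-neighbour border test stands in for the OpenCV contour extraction. The brute-force loop follows the description literally (shortest distance m per interior point, then the maximum M).

```python
import math

def segment_hand(depth, seed, half_window, depth_tol):
    """Assumed segmentation: keep pixels inside a square search window around
    the seed (row, col) whose depth is within depth_tol of the seed depth."""
    rows, cols = len(depth), len(depth[0])
    sr, sc = seed
    seed_depth = depth[sr][sc]
    mask = [[0] * cols for _ in range(rows)]
    for r in range(max(0, sr - half_window), min(rows, sr + half_window + 1)):
        for c in range(max(0, sc - half_window), min(cols, sc + half_window + 1)):
            if abs(depth[r][c] - seed_depth) <= depth_tol:
                mask[r][c] = 1
    return mask

def contour_points(mask):
    """Hand pixels that touch the background or the image border
    (a stand-in for a real contour extractor)."""
    rows, cols = len(mask), len(mask[0])
    points = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c]:
                continue
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                outside = not (0 <= nr < rows and 0 <= nc < cols)
                if outside or not mask[nr][nc]:
                    points.append((r, c))
                    break
    return points

def palm_center(mask):
    """Return (palm_point, R): the interior point whose shortest distance m
    to the contour is largest, and that largest distance M (so R = M)."""
    contour = contour_points(mask)
    contour_set = set(contour)
    best_point, best_radius = None, -1.0
    for r, row in enumerate(mask):
        for c, value in enumerate(row):
            if not value or (r, c) in contour_set:
                continue  # only interior points are candidates
            m = min(math.hypot(r - cr, c - cc) for cr, cc in contour)
            if m > best_radius:
                best_point, best_radius = (r, c), m
    return best_point, best_radius
```

Calling `palm_center(segment_hand(depth, seed, half_window, depth_tol))` on a depth frame stored as a list of rows returns the palm point and the radius R. The brute-force search costs one pass over the contour per interior pixel; on real frames, a distance transform such as OpenCV's `distanceTransform` yields a comparable per-pixel distance far more cheaply.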

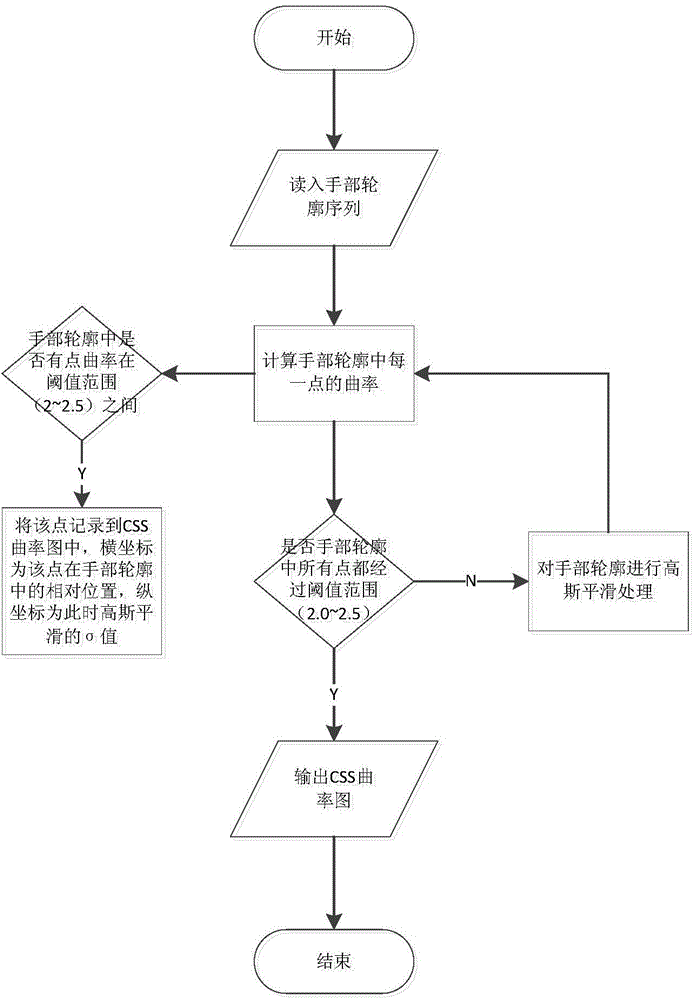

[0019] (2) Feature point extraction: Design and implement an improved hand feature point (fingertip point and finger valley point) detection method based on CSS curva...
