A caching system and method

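A caching and memory technology, applied in the computer field, that addresses problems such as low utilization of the processor's arithmetic units and the processor's computing capability not being fully exploited.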


Embodiment Construction

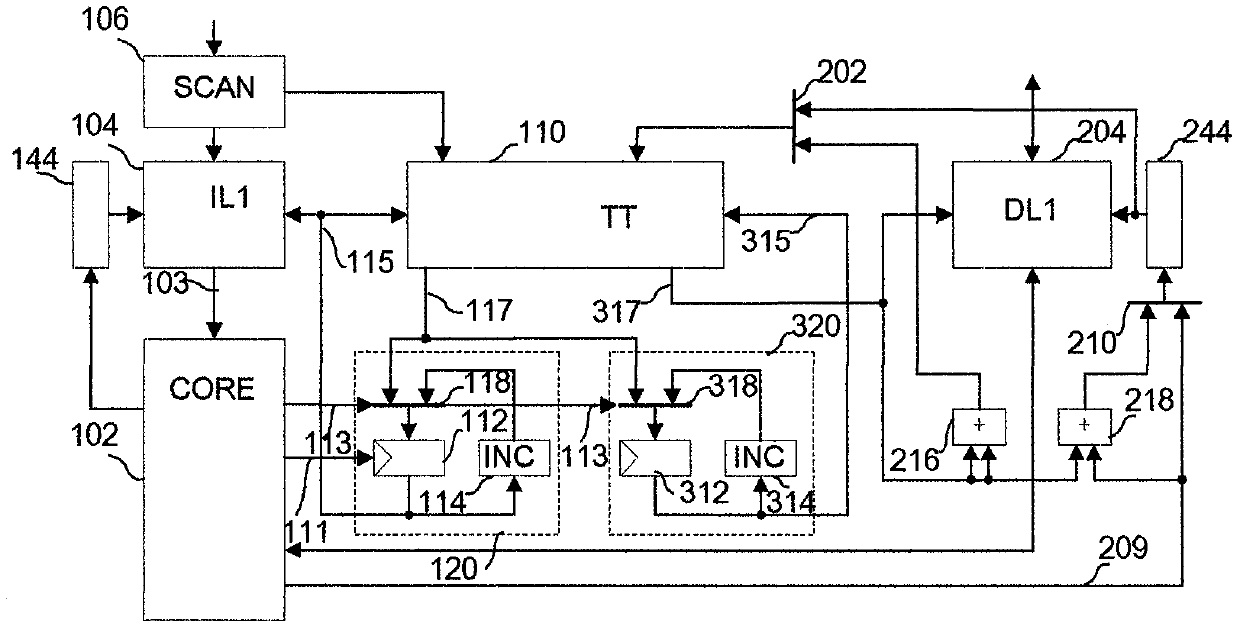

[0094] The caching system and method proposed by the present invention are described in further detail below with reference to the accompanying drawings and specific embodiments. The advantages and features of the present invention will be apparent from the following description and the claims. Note that all drawings are in a highly simplified form and are not drawn to precise scale; they serve only to illustrate the embodiments of the present invention conveniently and clearly.

[0095] Note that, to illustrate the content of the present invention clearly, multiple embodiments are cited to explain its different implementation modes; these embodiments are illustrative examples rather than an exhaustive list. In addition, for brevity, content already covered in an earlier embodiment is often omitted from later embodiments; therefore, the conten...

