Deep-autoencoder-based human eye detection and positioning method

An autoencoder-based human eye detection technology, applied in the fields of instruments, character and pattern recognition, and eye acquisition/recognition. It addresses two problems of existing target detection methods: they handle target deformation, viewing-angle changes, and occlusion poorly, and they struggle to achieve real-time performance. The method avoids construction and non-maximum suppression operations, enabling fast human eye detection and positioning and improving detection speed.

Detailed Description of Embodiments

[0019] To make the objectives, technical solutions, and advantages of the present invention clearer, the present invention is described in further detail below in conjunction with specific embodiments and with reference to the accompanying drawings.

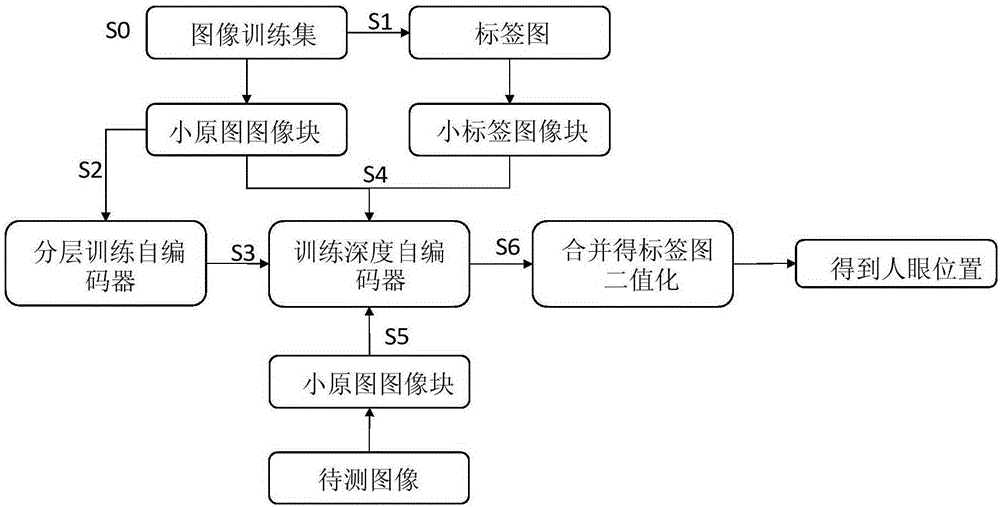

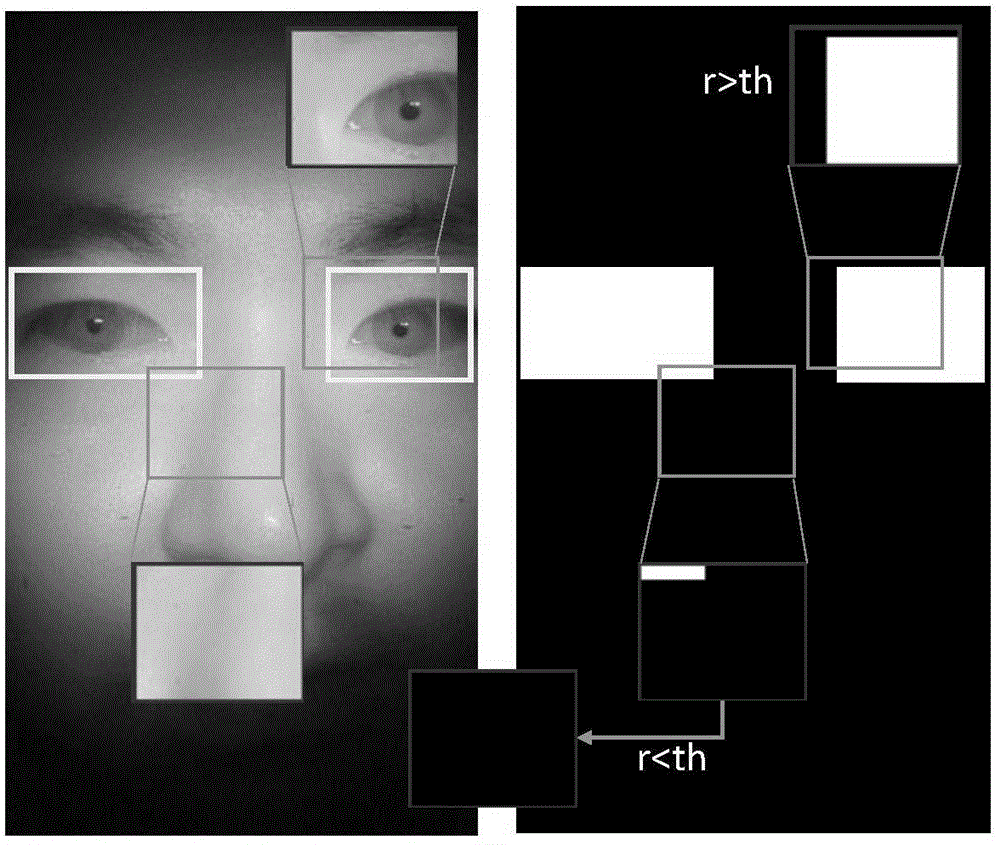

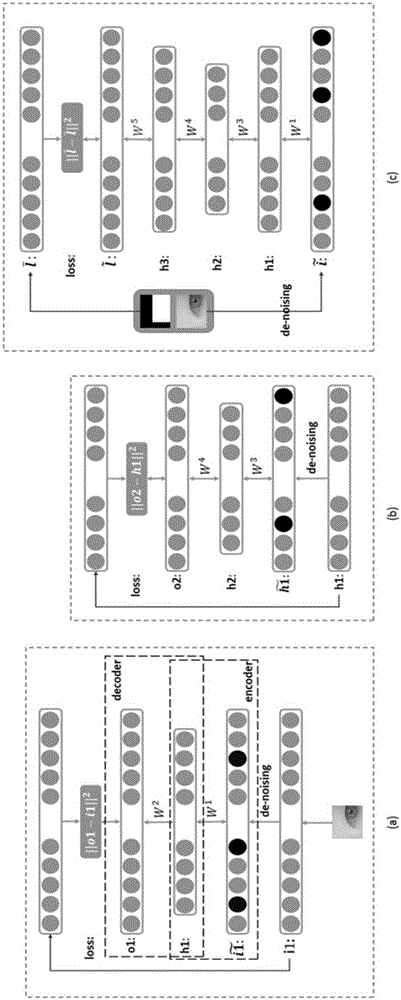

[0020] The invention proposes a target detection and positioning method based on a deep autoencoder and applies it to the detection and positioning of human eyes. In this method, small image patches randomly cropped from the training images, together with the corresponding small label patches cropped from the label maps, are used to train the deep autoencoder, which thereby learns the mapping from image patches to label patches. The trained deep autoencoder is then used to generate a label map for the image under test, and the positions of the human eyes are finally determined by binarizing the label map and applying coordinate projection. The key steps involved in the method of the presen...
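The pipeline in [0020] can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the patented implementation: images and label maps are assumed to be equally sized 2-D grayscale arrays with eye pixels marked as 1 in the label map; the trained deep autoencoder is replaced by a hypothetical callable `predict_patch` that maps an image patch to a label patch; overlapping patch predictions are assumed to be averaged; and the coordinate projection is assumed to split the binary map into column bands (one per eye) using the vertical projection, then take the row extent within each band using the horizontal projection. The names `sample_paired_patches`, `predict_label_map`, `locate_eyes`, `patch_size`, `stride`, and `threshold` are illustrative only.

```python
import numpy as np


def sample_paired_patches(image, label_map, patch_size, n_patches, rng):
    """Randomly crop n_patches spatially aligned (image patch, label patch) pairs."""
    h, w = image.shape
    ys = rng.integers(0, h - patch_size + 1, size=n_patches)
    xs = rng.integers(0, w - patch_size + 1, size=n_patches)
    img_patches = np.stack([image[y:y + patch_size, x:x + patch_size] for y, x in zip(ys, xs)])
    lbl_patches = np.stack([label_map[y:y + patch_size, x:x + patch_size] for y, x in zip(ys, xs)])
    return img_patches, lbl_patches


def _offsets(length, patch_size, stride):
    """Patch start offsets along one axis, always including the last valid offset.

    Assumes length >= patch_size.
    """
    offs = list(range(0, length - patch_size + 1, stride))
    if offs[-1] != length - patch_size:
        offs.append(length - patch_size)
    return offs


def predict_label_map(image, predict_patch, patch_size, stride):
    """Assemble a full-size label map from per-patch predictions, averaging overlaps.

    predict_patch is a hypothetical stand-in for the trained deep autoencoder.
    """
    acc = np.zeros(image.shape, dtype=float)
    count = np.zeros(image.shape, dtype=float)
    for y in _offsets(image.shape[0], patch_size, stride):
        for x in _offsets(image.shape[1], patch_size, stride):
            patch = image[y:y + patch_size, x:x + patch_size]
            acc[y:y + patch_size, x:x + patch_size] += predict_patch(patch)
            count[y:y + patch_size, x:x + patch_size] += 1
    # If stride > patch_size some pixels are never covered; they stay 0 here.
    return acc / np.maximum(count, 1)


def _runs(mask):
    """(start, end) pairs of contiguous True runs in a 1-D boolean array, end exclusive."""
    padded = np.concatenate(([False], mask, [False])).astype(int)
    diff = np.diff(padded)
    return list(zip(np.flatnonzero(diff == 1), np.flatnonzero(diff == -1)))


def locate_eyes(label_map, threshold=0.5):
    """Binarize the label map and return (row, col) eye centres via coordinate projection."""
    binary = label_map >= threshold
    col_proj = binary.sum(axis=0)                # vertical projection: foreground count per column
    centres = []
    for x0, x1 in _runs(col_proj > 0):           # each column band is one eye candidate
        row_proj = binary[:, x0:x1].sum(axis=1)  # horizontal projection inside the band
        y0, y1 = max(_runs(row_proj > 0), key=lambda r: r[1] - r[0])  # longest row run
        centres.append((float(y0 + y1 - 1) / 2, float(x0 + x1 - 1) / 2))
    return centres
```

For example, a 20 × 40 label map with two 3 × 5 foreground blocks at rows 8 to 10 and columns 5 to 9 and 25 to 29 yields the (row, column) centres `[(9.0, 7.0), (9.0, 27.0)]`. Because positions are read directly off the projections, this sketch needs no per-window scoring and no non-maximum suppression, which is consistent with the speed advantage stated in the summary.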
