Neural network model compression method based on sparse backward propagation training

The invention relates to neural network models and back-propagation technology, applied to biological neural network models, neural learning methods, and related fields. It addresses the long training and inference times of neural networks, the difficulty of deep neural networks, and the low accuracy of neural network models.

Embodiment Construction

[0056] The present invention is further described below through embodiments, with reference to the accompanying drawings, without limiting the scope of the invention in any way.

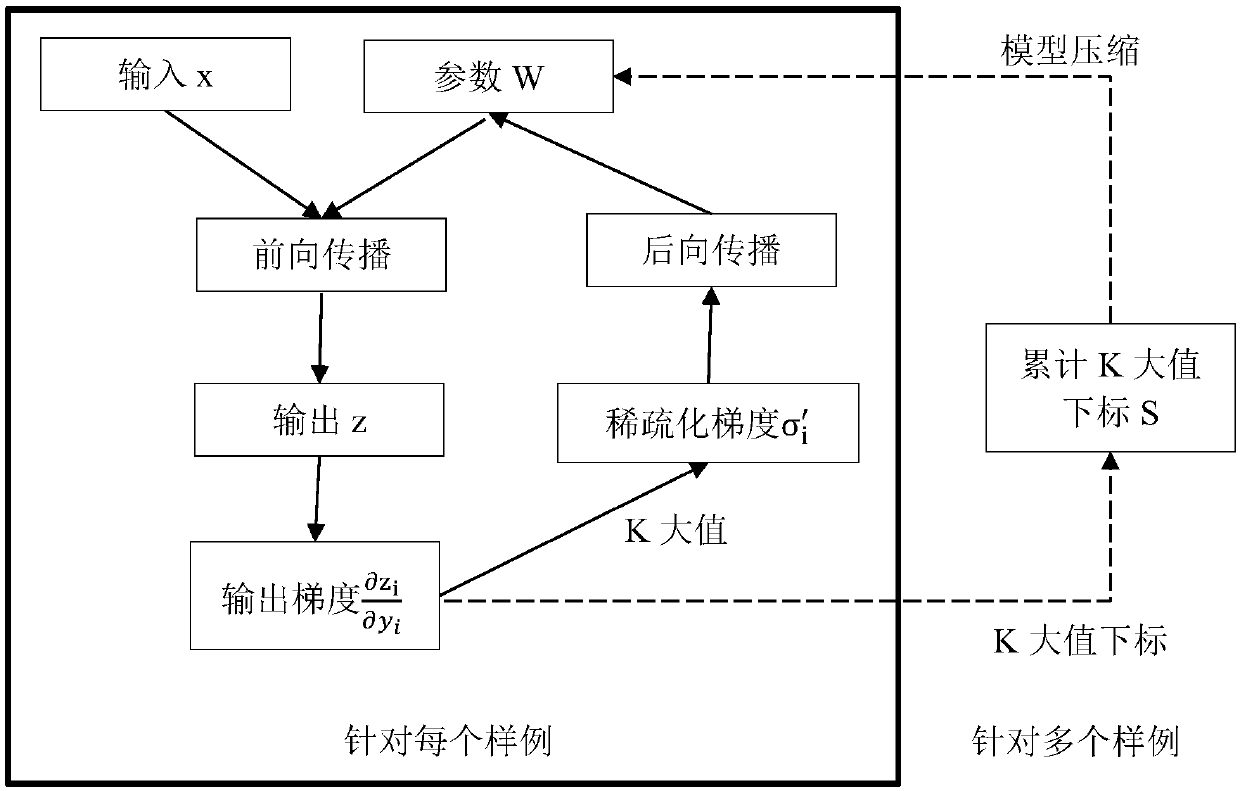

[0057] The present invention provides a sparse backpropagation training method for a neural network model, namely a sparse backpropagation training method based on the k largest values (top-k). Figure 1 is a flow block diagram of the method of the present invention.
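As a rough illustration of what selecting the k largest values can look like, the following is a minimal sketch that keeps the k entries of a gradient vector with the largest absolute value and zeroes the rest. Selecting by absolute value, the function name `top_k_sparsify`, and the example vector are assumptions made for illustration; they are not taken from the patent text.

```python
import numpy as np

def top_k_sparsify(grad, k):
    """Hypothetical helper: keep the k largest-magnitude entries of a 1-D
    gradient vector and set all other entries to zero.
    Selection by absolute value is an assumption, not stated in the source."""
    grad = np.asarray(grad, dtype=float)
    if k <= 0:
        return np.zeros_like(grad)
    if k >= grad.size:
        return grad.copy()
    # argpartition places the indices of the k largest |grad| values last
    idx = np.argpartition(np.abs(grad), -k)[-k:]
    sparse = np.zeros_like(grad)
    sparse[idx] = grad[idx]
    return sparse

# Illustrative gradient vector (made-up numbers)
g = np.array([0.1, -2.0, 0.3, 1.5, -0.05])
sparse_g = top_k_sparsify(g, k=2)
print(sparse_g)
```

With k much smaller than the vector length, most entries of the returned vector are zero, which is what makes the subsequent gradient computations sparse.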

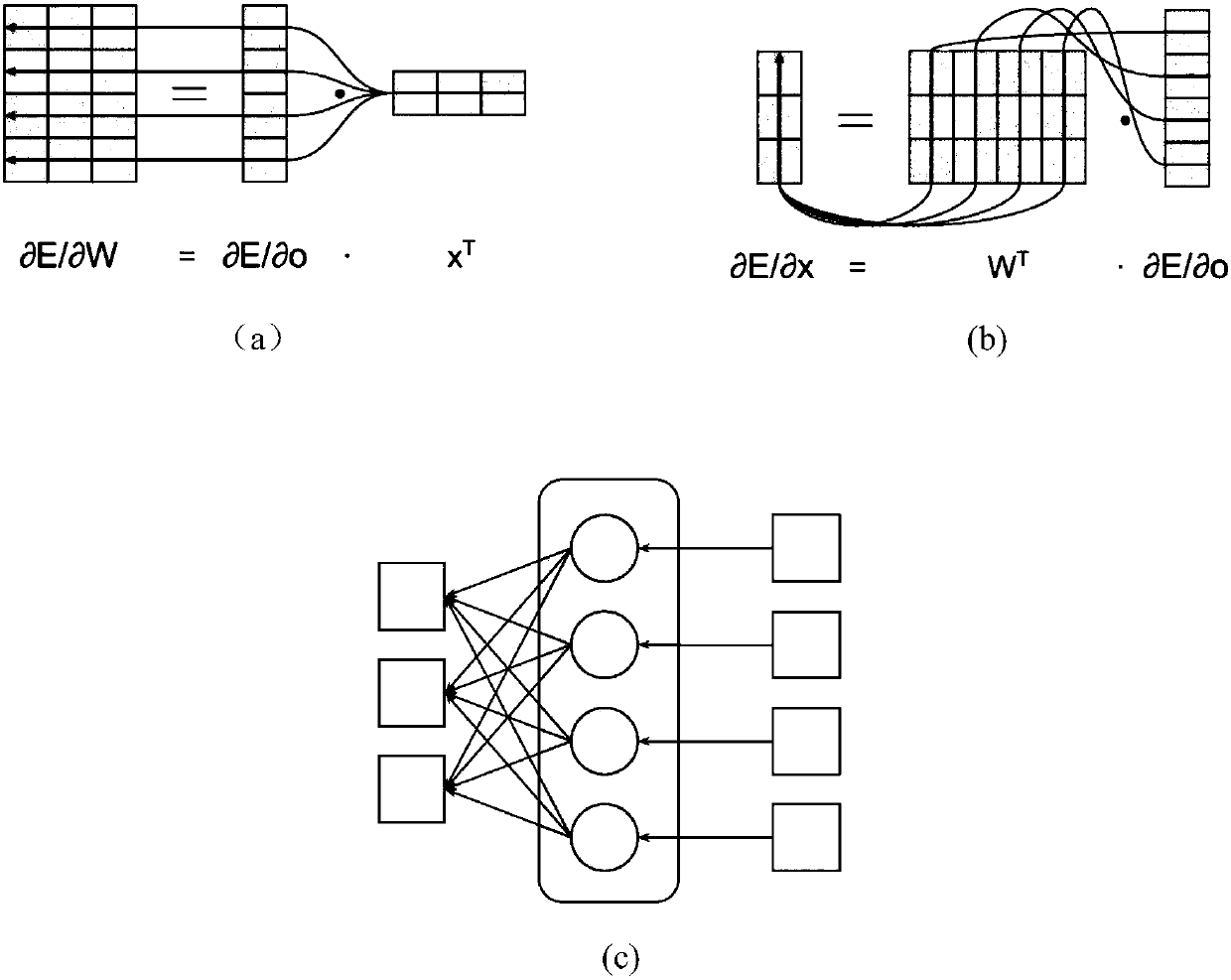

[0058] The most basic computing unit of a neural network is a linear transformation followed by a nonlinear transformation. The specific implementation therefore takes this basic computing unit as an example, with the following calculation formulas:

[0059] y=Wx

[0060] z=σ(y)

[0061] Here, W is the parameter matrix of the model, x is the input vector, y is the output of the linear transformation, σ is the nonlinear transformation function, and z is the output of the nonlinear transformation.
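The computing unit defined above can be sketched in NumPy as follows. The symbols W, x, y, z and σ follow the formulas; the choice of the logistic sigmoid for σ, the function names, and the numeric values are assumptions for illustration only.

```python
import numpy as np

def sigmoid(y):
    """Logistic sigmoid, used here as one possible choice of sigma (assumption)."""
    return 1.0 / (1.0 + np.exp(-y))

def forward(W, x, sigma=sigmoid):
    """Basic computing unit: linear transformation, then nonlinear transformation."""
    y = W @ x        # y = Wx
    z = sigma(y)     # z = sigma(y)
    return y, z

# Illustrative parameter matrix and input vector (made-up numbers)
W = np.array([[1.0, -1.0],
              [0.5,  0.5]])
x = np.array([2.0, 2.0])

y, z = forward(W, x)
print(y, z)
```

Any elementwise nonlinearity (for example tanh or ReLU) can be passed as `sigma` without changing the structure of the unit.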

[0062] The sparse ba...

