Robot imitation learning method, device, robot and storage medium

A robot imitation learning method, device, robot and storage medium in the field of robot technology, applied to manipulators, program-controlled manipulators, manufacturing tools, etc. The technology addresses the problem that the stability of robot imitation learning and the speed of model training cannot be guaranteed at the same time, with the effect of improving the degree of human-likeness and guaranteeing stability.


Examples

Embodiment 1

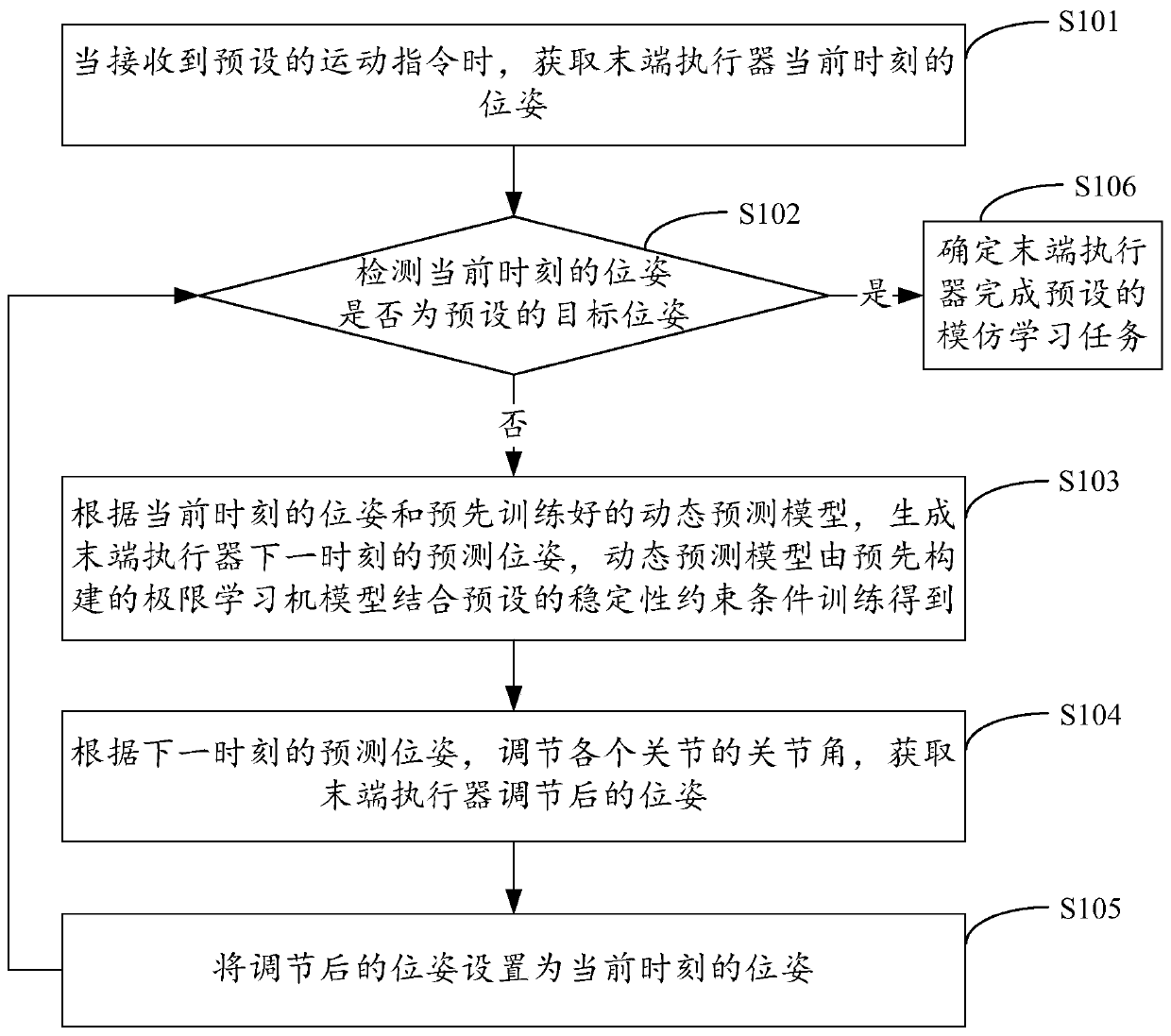

[0027] Figure 1 shows the implementation flow of the robot imitation learning method provided by Embodiment 1 of the present invention. For ease of explanation, only the parts related to this embodiment are shown; details are as follows:

[0028] In step S101, when a preset movement command is received, the current pose of the end effector is acquired.

[0029] The embodiments of the present invention are applicable to, but not limited to, robots with structures such as joints and connecting rods that can perform actions such as extending and grasping. When the robot receives a motion or movement command sent by the user or the control system, it can obtain the angle of each joint and then calculate the current pose of the end effector from these joint angles using forward kinematics. In addition, if the robot itself has an end-effector position sensor, then through that sensor the current pose of the end ef...
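Computing the end-effector pose from joint angles by forward kinematics can be illustrated with a minimal sketch. It assumes a planar serial arm with revolute joints, each angle measured relative to the previous link, and hypothetical link lengths; a real manipulator would normally use a full 3D model (for example Denavit-Hartenberg parameters with 4x4 homogeneous transforms), so this is an illustration of the idea rather than the patent's implementation.

```python
import math
from typing import Sequence, Tuple


def planar_fk(joint_angles: Sequence[float],
              link_lengths: Sequence[float]) -> Tuple[float, float, float]:
    """Return (x, y, theta) of the end effector of a planar revolute-joint arm.

    Assumption for illustration: the base sits at the origin and each joint
    angle is relative to the previous link.
    """
    if len(joint_angles) != len(link_lengths):
        raise ValueError("need exactly one link length per joint")
    x = y = theta = 0.0
    for q, length in zip(joint_angles, link_lengths):
        theta += q                     # absolute orientation of this link
        x += length * math.cos(theta)  # advance along the link
        y += length * math.sin(theta)
    return x, y, theta


# Two unit links, elbow bent 90 degrees: end effector near (1, 1), facing +y.
print(planar_fk([0.0, math.pi / 2], [1.0, 1.0]))
```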

Embodiment 2

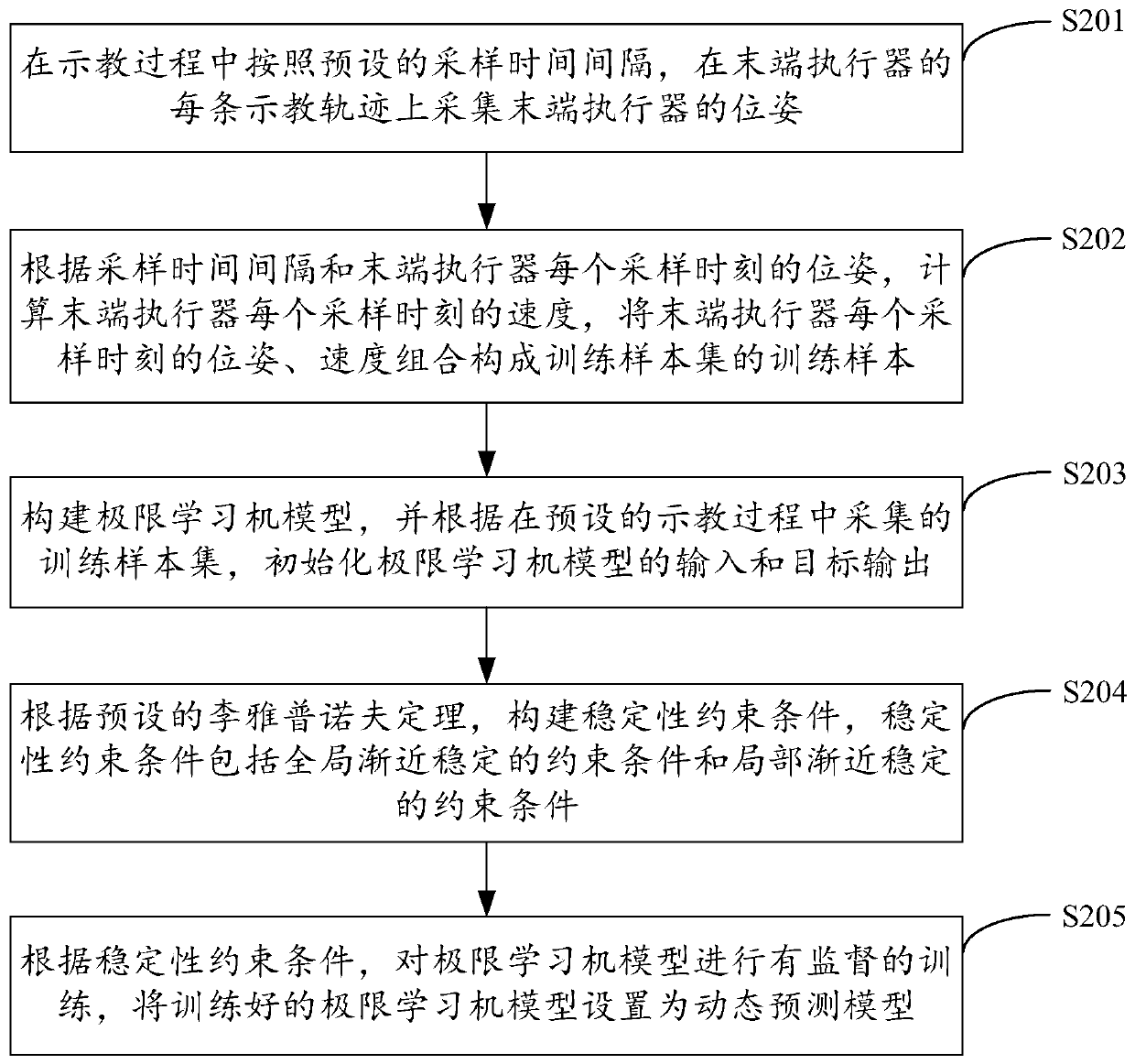

[0045] Figure 2 shows the implementation flow for collecting the training sample set and training the dynamic prediction model in the robot imitation learning method provided by Embodiment 2 of the present invention. For ease of description, only the parts related to this embodiment are shown; details are as follows:

[0046] In step S201, during the teaching process, the pose of the end effector is collected at a preset sampling time interval along each teaching trajectory of the end effector.

[0047] In this embodiment of the present invention, a teaching operator or user can provide teaching actions during the teaching process, and the end effector moves according to those actions. The robot itself, or an external motion capture device, collects the pose of the end effector at the preset sampling time interval along each motion trajectory (teaching trajectory), and the collected pose of the end e...
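Sampling a teaching trajectory at a preset time interval can be sketched as follows. The sketch assumes the raw demonstration arrives as strictly increasing timestamps with one pose tuple each, and resamples it by linear interpolation. How the patent turns the sampled poses into training samples is not stated in this excerpt; pairing each pose with its forward-difference velocity, as `make_samples` does here, is a hypothetical choice common in dynamical-system imitation learning, not the document's method.

```python
import math
from bisect import bisect_right
from typing import List, Sequence, Tuple

Pose = Tuple[float, ...]  # hypothetical flat pose, e.g. (x, y, z)


def resample(times: Sequence[float], poses: Sequence[Pose], dt: float) -> List[Pose]:
    """Sample a recorded teaching trajectory every dt seconds (linear interpolation)."""
    if dt <= 0:
        raise ValueError("dt must be positive")
    if len(times) != len(poses) or len(times) < 2:
        raise ValueError("need at least two timestamped poses")
    if any(b <= a for a, b in zip(times, times[1:])):
        raise ValueError("timestamps must be strictly increasing")
    n = math.floor((times[-1] - times[0]) / dt + 1e-9)
    samples: List[Pose] = []
    for k in range(n + 1):
        t = times[0] + k * dt  # computed directly to avoid accumulated drift
        i = min(bisect_right(times, t), len(times) - 1)  # segment [i-1, i] contains t
        t0, t1 = times[i - 1], times[i]
        a = (t - t0) / (t1 - t0)
        samples.append(tuple(p0 + a * (p1 - p0) for p0, p1 in zip(poses[i - 1], poses[i])))
    return samples


def make_samples(samples: List[Pose], dt: float) -> List[Tuple[Pose, Pose]]:
    """Pair each pose with its forward-difference velocity (assumed sample format)."""
    return [
        (p, tuple((q_i - p_i) / dt for p_i, q_i in zip(p, q)))
        for p, q in zip(samples, samples[1:])
    ]


traj = resample([0.0, 1.0, 2.0], [(0.0, 0.0), (1.0, 2.0), (1.0, 4.0)], dt=0.5)
print(make_samples(traj, 0.5))
```

The last sampled pose has no successor, so it yields no velocity pair; a model trained on such pairs would map a pose to the motion that follows it.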

Embodiment 3

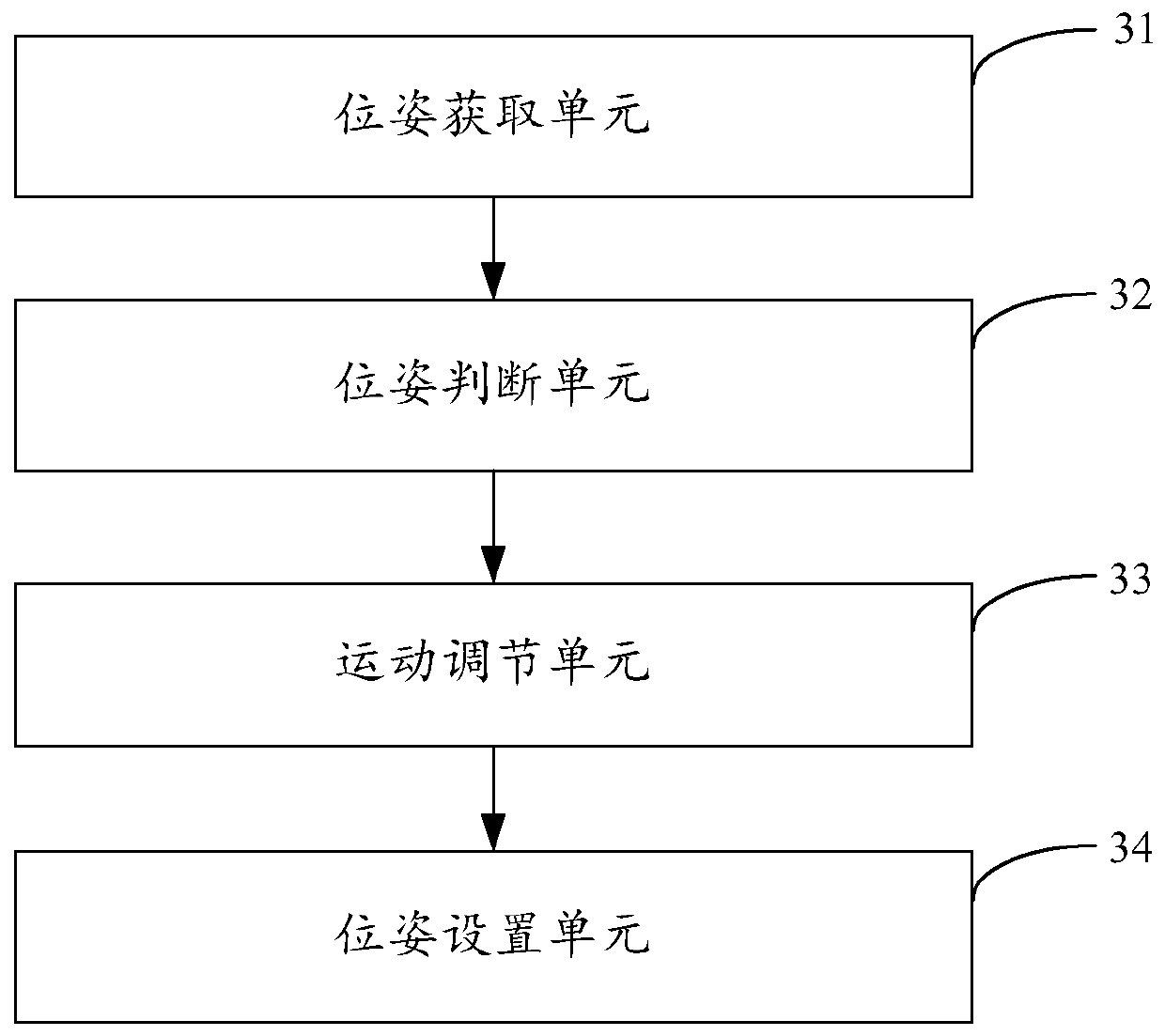

[0067] Figure 3 shows the structure of the robot imitation learning device provided by Embodiment 3 of the present invention. For ease of description, only the parts related to this embodiment are shown, including:

[0068] The pose acquiring unit 31 is configured to acquire the pose of the end effector at the current moment when a preset motion instruction is received.

[0069] In this embodiment of the present invention, when the robot receives a motion or movement command sent by the user or the control system, it can obtain the angle of each joint and then calculate the current pose of the end effector from these joint angles using forward kinematics. In addition, if the robot itself has an end-effector position sensor, the current pose of the end effector can be obtained directly from that sensor.
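The two acquisition paths described for pose acquiring unit 31 (read a position sensor if one is fitted, otherwise compute the pose from joint angles) can be sketched as a small class. The class name, the callable parameters and the pose tuple layout are all hypothetical, chosen only to show the fallback logic; the forward-kinematics function is injected rather than fixed to any particular robot model.

```python
from typing import Callable, Optional, Sequence, Tuple

Pose = Tuple[float, ...]  # hypothetical flat pose representation


class PoseAcquiringUnit:
    """Hypothetical sketch: prefer a direct end-effector position sensor,
    otherwise compute the pose from joint angles via forward kinematics."""

    def __init__(
        self,
        read_joint_angles: Callable[[], Sequence[float]],
        forward_kinematics: Callable[[Sequence[float]], Pose],
        read_pose_sensor: Optional[Callable[[], Pose]] = None,
    ) -> None:
        self._read_joint_angles = read_joint_angles
        self._fk = forward_kinematics
        self._read_pose_sensor = read_pose_sensor

    def acquire(self) -> Pose:
        if self._read_pose_sensor is not None:
            return self._read_pose_sensor()         # sensor reports the pose directly
        return self._fk(self._read_joint_angles())  # fall back to forward kinematics


# Toy stand-ins: "FK" just sums the joint angles into a 1-D pose.
unit = PoseAcquiringUnit(lambda: [1.0, 2.0], lambda q: (sum(q),))
print(unit.acquire())  # (3.0,)
```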

[0070] The pose judging unit 32 is used to detect whether the pose at the current moment is the prese...
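The paragraph above is truncated in this copy, so exactly what the current pose is compared against is not recoverable here. Assuming it is checked against a preset pose, an exact floating-point equality test would rarely succeed on real measurements, so one option is a tolerance test. The sketch below assumes a hypothetical (x, y, z, roll, pitch, yaw) layout and hypothetical default tolerances, and wraps angle differences so that +pi and -pi count as equal; comparing Euler angles component-wise is a simplification, and a quaternion distance is more robust near singularities.

```python
import math
from typing import Sequence


def is_at_preset_pose(current: Sequence[float], preset: Sequence[float],
                      pos_tol: float = 1e-3, ang_tol: float = 1e-2) -> bool:
    """Return True if current is within tolerance of preset.

    Assumed layout: (x, y, z, roll, pitch, yaw); tolerances are illustrative.
    """
    if len(current) != 6 or len(preset) != 6:
        raise ValueError("expected (x, y, z, roll, pitch, yaw)")
    pos_err = math.dist(current[:3], preset[:3])  # Euclidean position error
    ang_err = max(
        abs(math.remainder(c - p, 2 * math.pi))   # wrap difference into [-pi, pi]
        for c, p in zip(current[3:], preset[3:])
    )
    return pos_err <= pos_tol and ang_err <= ang_tol


print(is_at_preset_pose([0.1, 0.2, 0.3, 0.0, 0.0, math.pi],
                        [0.1, 0.2, 0.3, 0.0, 0.0, -math.pi]))  # True
```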
