Training method and apparatus of neural network, and object identification method and apparatus

A neural network training technology, classified under neural learning methods, biological neural network models, and character and pattern recognition. It addresses the problems that existing models use a large amount of memory and are poorly suited to devices with a traditional central processing unit and little memory space, and it achieves small memory usage, fewer parameters, and improved adaptability.

Examples

Embodiment 1

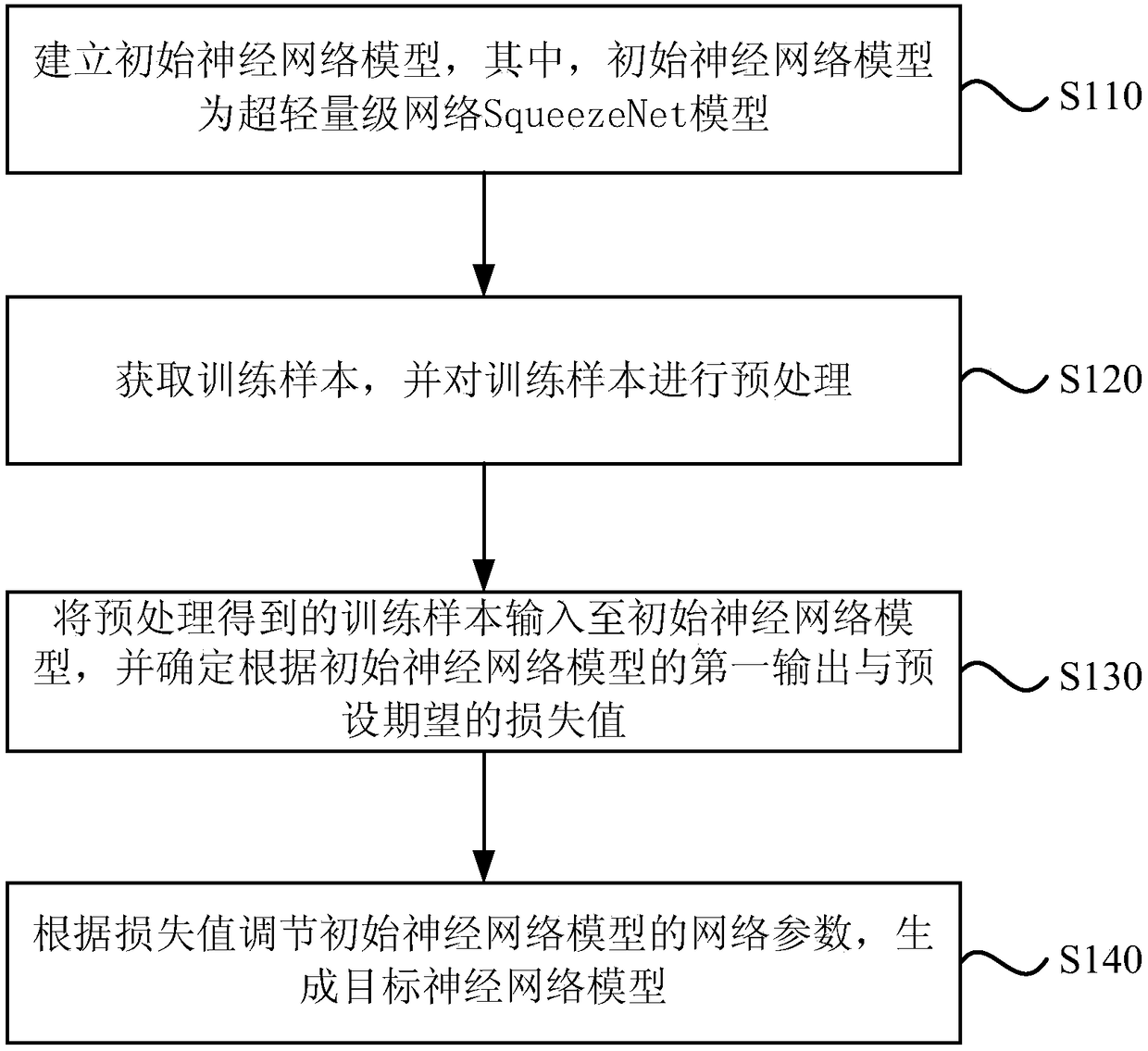

[0053] Figure 1 is a flow chart of a neural network training method provided by Embodiment 1 of the present invention. This embodiment is applicable to establishing and training a neural network model with a small memory footprint and fast calculation speed. The method can be executed by a server, and the server may be implemented in software and/or hardware. The method specifically includes:

[0054] S110. Establish an initial neural network model, where the initial neural network model is an ultra-lightweight network SqueezeNet model.

[0055] The ultra-lightweight network SqueezeNet model has few model parameters and a small memory footprint. Compared with neural network models such as AlexNet, at the same recognition accuracy the SqueezeNet model occupies nearly 50 times less memory. In this embodiment, an ultra-lightweight network SqueezeNet model is established to reduce the...
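The excerpt above does not say where the parameter savings come from. In the published SqueezeNet design they come largely from "Fire" modules, in which a 1x1 squeeze layer reduces the channel count before parallel 1x1 and 3x3 expand layers. The following is a minimal sketch of that arithmetic, not the patent's implementation; the function names and the channel sizes are illustrative assumptions.

```python
def conv_params(in_channels, out_channels, kernel_size):
    """Weights plus biases of a 2-D convolution layer."""
    return in_channels * out_channels * kernel_size * kernel_size + out_channels


def fire_params(in_channels, squeeze, expand1x1, expand3x3):
    """Parameter count of one Fire module: a 1x1 squeeze layer feeding
    parallel 1x1 and 3x3 expand layers whose outputs are concatenated."""
    return (
        conv_params(in_channels, squeeze, 1)
        + conv_params(squeeze, expand1x1, 1)
        + conv_params(squeeze, expand3x3, 3)
    )


# Illustrative channel sizes (assumed, not taken from the patent text).
fire = fire_params(in_channels=96, squeeze=16, expand1x1=64, expand3x3=64)

# A plain 3x3 convolution producing the same 128 output channels.
plain = conv_params(in_channels=96, out_channels=128, kernel_size=3)

print(fire, plain, round(plain / fire, 1))  # prints: 11920 110720 9.3
```

With these assumed sizes, the Fire module needs roughly one ninth of the parameters of the plain convolution. The "nearly 50 times" figure quoted above refers to the whole model compared with AlexNet, not to a single layer.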

Embodiment 2

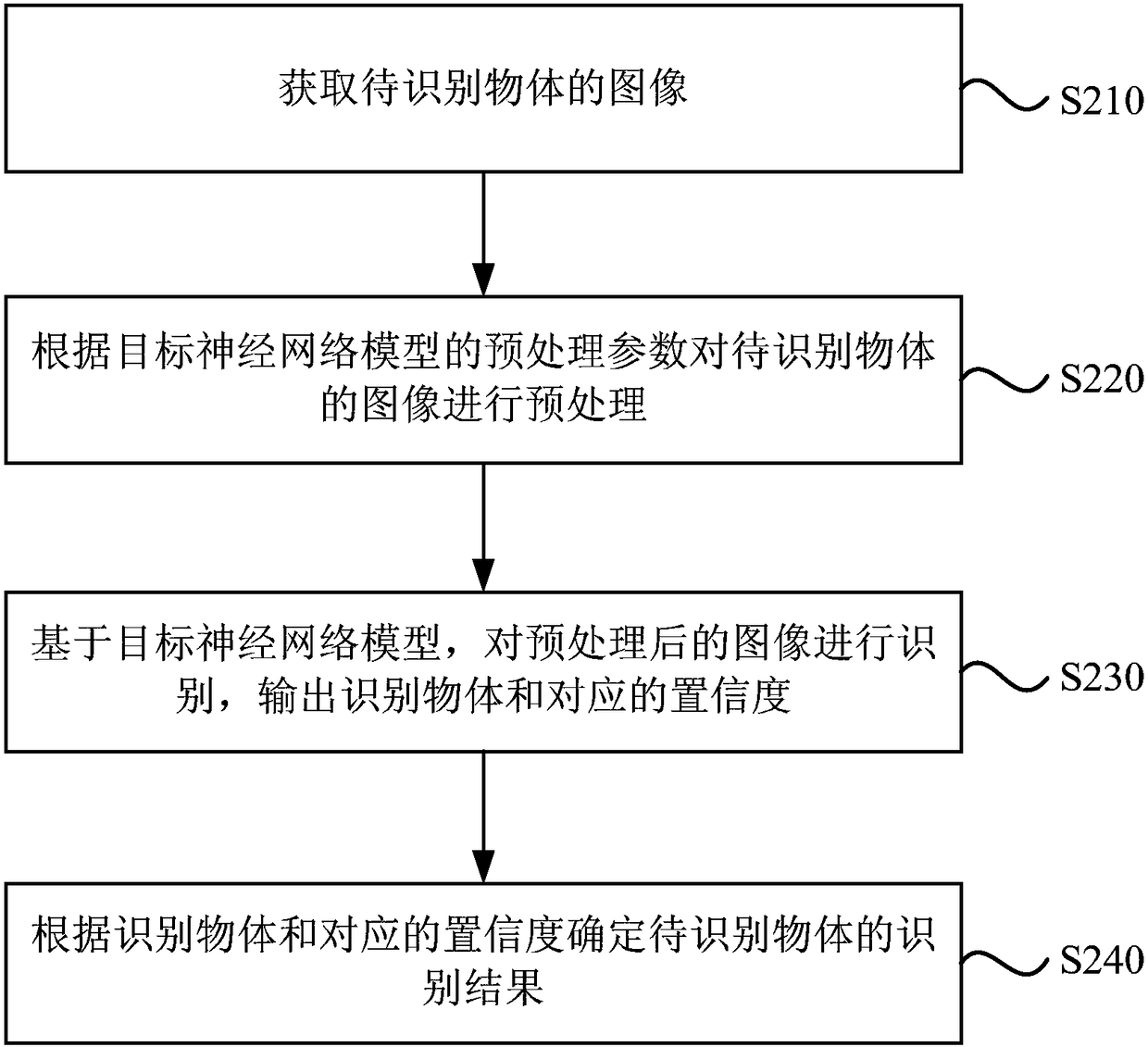

[0078] Figure 2 is a flow chart of an object recognition method provided by Embodiment 2 of the present invention. This embodiment is applicable to a mobile terminal that carries a neural network model with a small memory footprint and needs to recognize an object quickly. The method can be executed by the mobile terminal, which may be a smartphone, a tablet computer, or a robot provided with a traditional central processing unit, and which may be implemented in software and/or hardware. The method specifically includes:

[0079] S210. Acquire an image of the object to be recognized.

[0080] Wherein, the image may be obtained by photographing the object to be recognized, or obtained by means of network resources or cloud data.

[0081] S220. Perform preprocessing on the image of the object to be recognized according to the preprocessing parameters of the target neural network model.

[0082] In this embodiment, after ac...
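Step S220 can be sketched as follows, assuming the preprocessing parameters are a per-channel mean and a scale factor. The excerpt does not list the actual parameters, so `mean`, `scale`, and the function name `preprocess` are hypothetical. The point illustrated is only that recognition reuses the same parameters the model was trained with.

```python
def preprocess(image, mean, scale):
    """Subtract a per-channel mean and apply a scale factor.

    image: rows of pixels, each pixel a list of channel values.
    mean, scale: hypothetical preprocessing parameters stored with the
    trained model (assumed here, not specified in the patent excerpt).
    """
    return [
        [[(value - m) * scale for value, m in zip(pixel, mean)] for pixel in row]
        for row in image
    ]


# A made-up 1x2 RGB image used only for illustration.
image = [[[120, 130, 140], [0, 255, 128]]]
out = preprocess(image, mean=[120.0, 130.0, 140.0], scale=1.0 / 255.0)
print(out[0][0])  # the first pixel equals the mean, so every channel is 0.0
```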

Embodiment 3

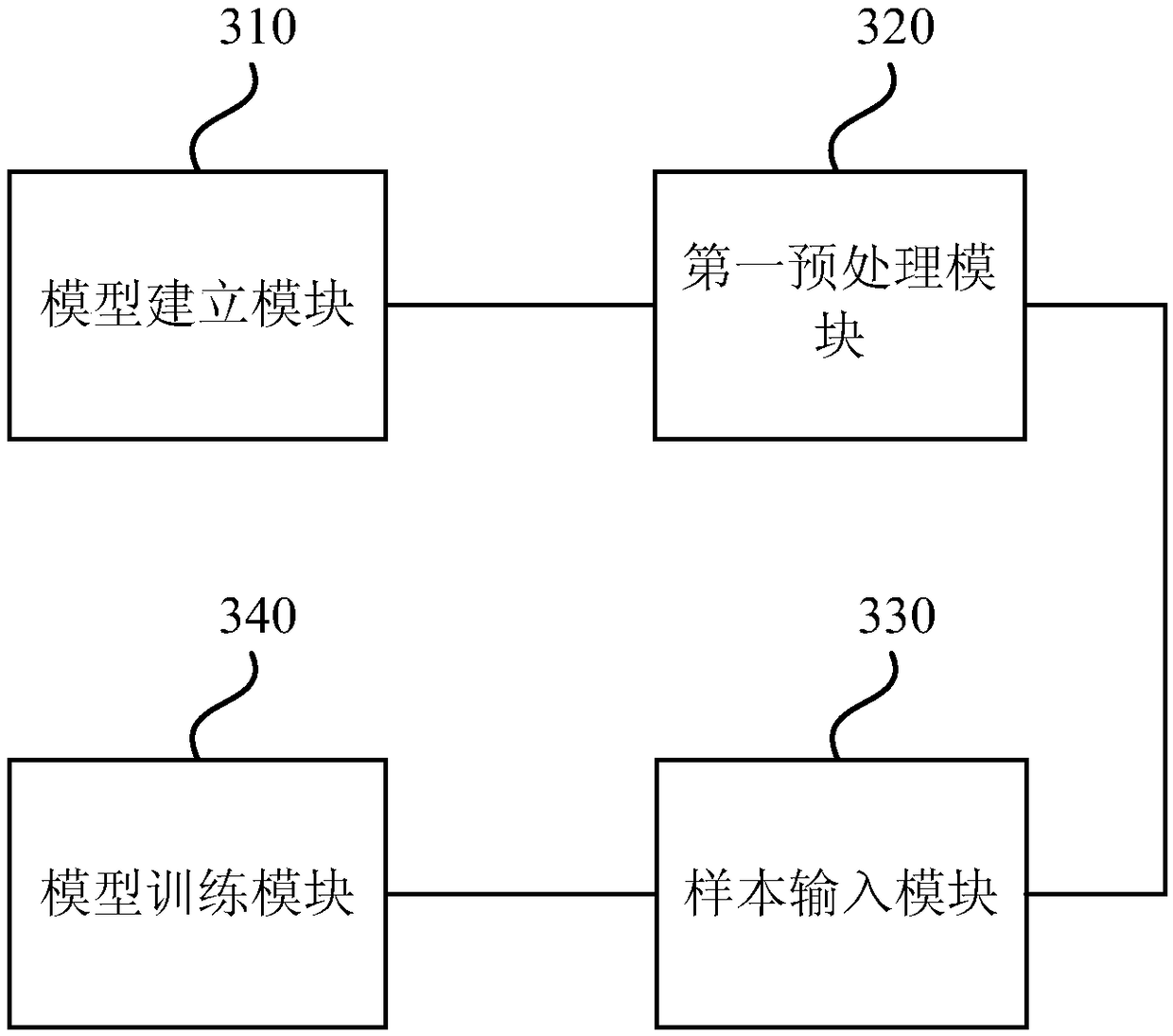

[0094] Figure 3 is a schematic structural diagram of a neural network training device provided in Embodiment 3 of the present invention. The device includes:

[0095] The model building module 310 is used to set up an initial neural network model, wherein the initial neural network model is an ultra-lightweight network SqueezeNet model;

[0096] The first preprocessing module 320 is used to acquire training samples and preprocess the training samples;

[0097] The sample input module 330 is used to input the preprocessed training samples into the initial neural network model, and to determine a loss value according to the first output of the initial neural network model and a preset expected output;

[0098] The model training module 340 is configured to adjust the network parameters of the initial neural network model according to the loss value to generate a target neural network model.
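The way the four modules fit together can be sketched with a toy example. This is a minimal illustration under stated assumptions, not the patented device: a one-weight linear model stands in for SqueezeNet, mean squared error stands in for the unspecified loss, plain gradient descent stands in for the unspecified parameter adjustment, and all function names and data are hypothetical.

```python
def build_model():
    """Stand-in for module 310: a one-weight linear model, not SqueezeNet."""
    return {"w": 0.0, "b": 0.0}


def preprocess(samples, scale):
    """Stand-in for module 320: scale each input by a fixed factor."""
    return [(x * scale, y) for x, y in samples]


def loss_and_grads(model, samples):
    """Stand-in for module 330: mean squared error between the model
    output and the expected output, together with its gradients."""
    n = len(samples)
    loss = gw = gb = 0.0
    for x, y in samples:
        err = model["w"] * x + model["b"] - y
        loss += err * err / n
        gw += 2 * err * x / n
        gb += 2 * err / n
    return loss, gw, gb


def train(model, samples, lr=0.1, steps=200):
    """Stand-in for module 340: adjust parameters by gradient descent."""
    for _ in range(steps):
        _, gw, gb = loss_and_grads(model, samples)
        model["w"] -= lr * gw
        model["b"] -= lr * gb
    return model


raw = [(0.0, 1.0), (10.0, 3.0), (20.0, 5.0)]  # made-up data: y = 0.2 * x + 1
samples = preprocess(raw, scale=0.1)           # inputs become 0, 1, 2
model = build_model()
initial_loss, _, _ = loss_and_grads(model, samples)
train(model, samples)
final_loss, _, _ = loss_and_grads(model, samples)
print(initial_loss > final_loss)  # the loss decreases as parameters are adjusted
```

After training, the adjusted parameters play the role of the target neural network model: on the scaled inputs the weight approaches 2 and the bias approaches 1.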

[0099] Optionally, the initial neural network model includes a preset number of st...
