Programmable Deep Neural Network Processor

A programmable deep neural network processor that addresses problems such as high power consumption and frequent transfer of chip data, achieving low power consumption, low cost, and improved hardware utilization.


Examples

Embodiment 1

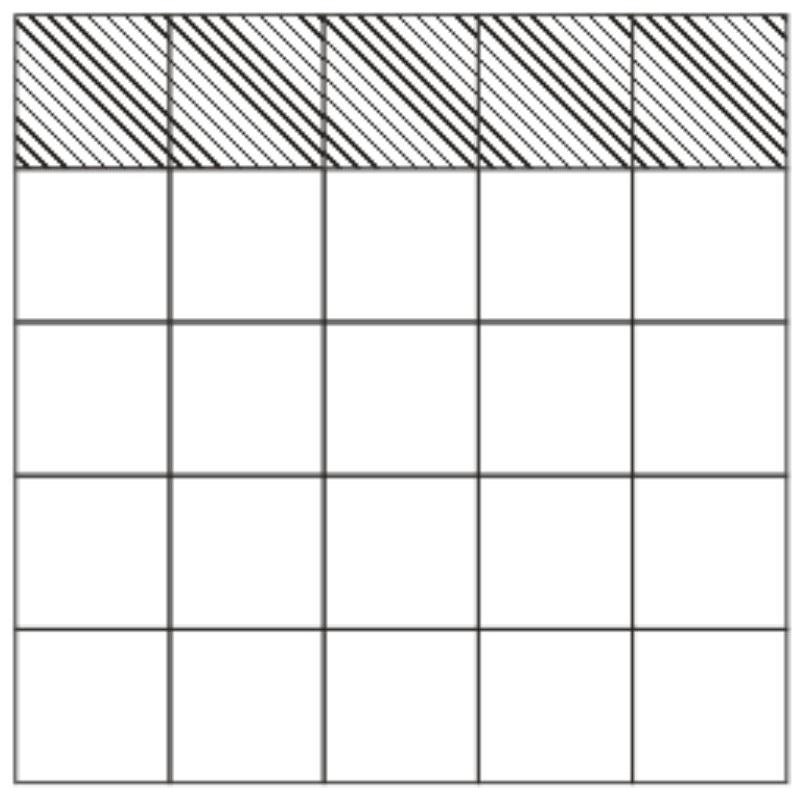

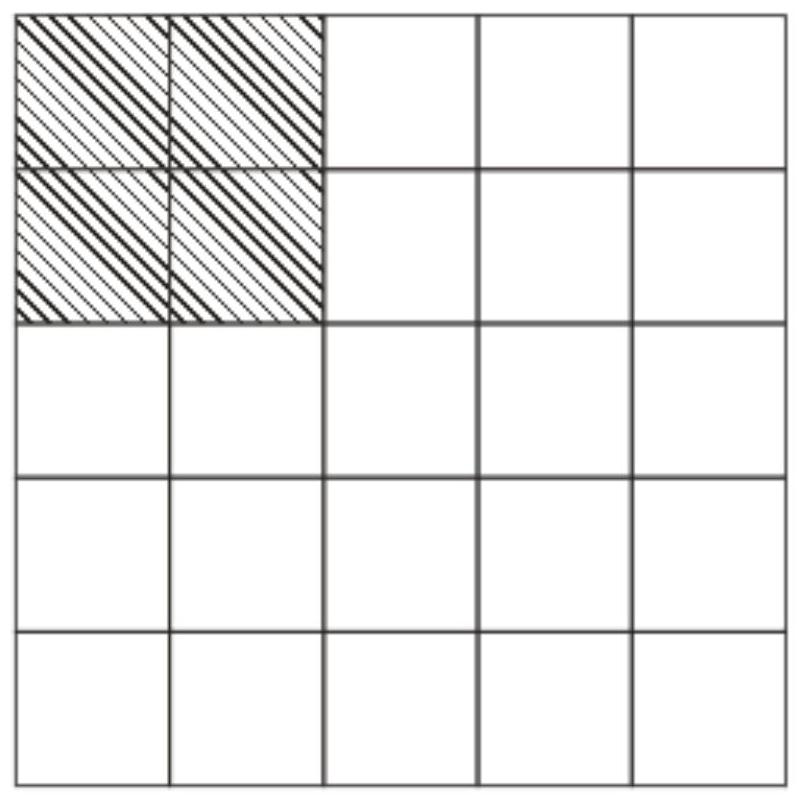

[0058] Embodiment 1: see Figures 1 to 7. As Figure 1 shows, in the prior art the points of the output feature map are computed row by row, so it is necessary to wait for multiple rows of the output feature map to complete. This makes pipelining difficult and also requires a first-in-first-out (FIFO) memory to store all the points in a row, which increases hardware overhead.

[0059] As Figure 2 shows, the present invention differs from Figure 1: it proposes a cluster-based convolution operation, which computes the points of the output feature map in units of clusters instead of rows.
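The difference between the two traversal orders can be illustrated with a minimal sketch. It assumes that a cluster is a small square tile of output points and that a later stage consumes a 2*2 window of output points. Neither detail is specified in the text, and the names `row_order`, `cluster_order`, and `points_until_window` are hypothetical.

```python
def row_order(height, width):
    """Yield output coordinates row by row (the prior-art order)."""
    for r in range(height):
        for c in range(width):
            yield (r, c)


def cluster_order(height, width, cluster=2):
    """Yield output coordinates one cluster at a time.

    ASSUMPTION: a cluster is a `cluster` x `cluster` square tile of
    output points; the patent text does not define the cluster shape.
    """
    for r0 in range(0, height, cluster):
        for c0 in range(0, width, cluster):
            for r in range(r0, min(r0 + cluster, height)):
                for c in range(c0, min(c0 + cluster, width)):
                    yield (r, c)


def points_until_window(order, window=2):
    """Count how many points are produced before the top-left
    `window` x `window` block of the output is complete.

    ASSUMPTION: a downstream stage needs such a block before it can
    start; this is an illustrative model, not the patent's design.
    """
    needed = {(r, c) for r in range(window) for c in range(window)}
    for count, point in enumerate(order, start=1):
        needed.discard(point)
        if not needed:
            return count
    raise ValueError("window never completed")


if __name__ == "__main__":
    print(points_until_window(row_order(4, 8)))      # row by row
    print(points_until_window(cluster_order(4, 8)))  # cluster by cluster
```

Under these assumptions, the row order has to produce a full row plus part of the next one before the first window is ready, while the cluster order only has to produce one cluster. This is consistent with the buffering concern raised for the row-based scheme.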

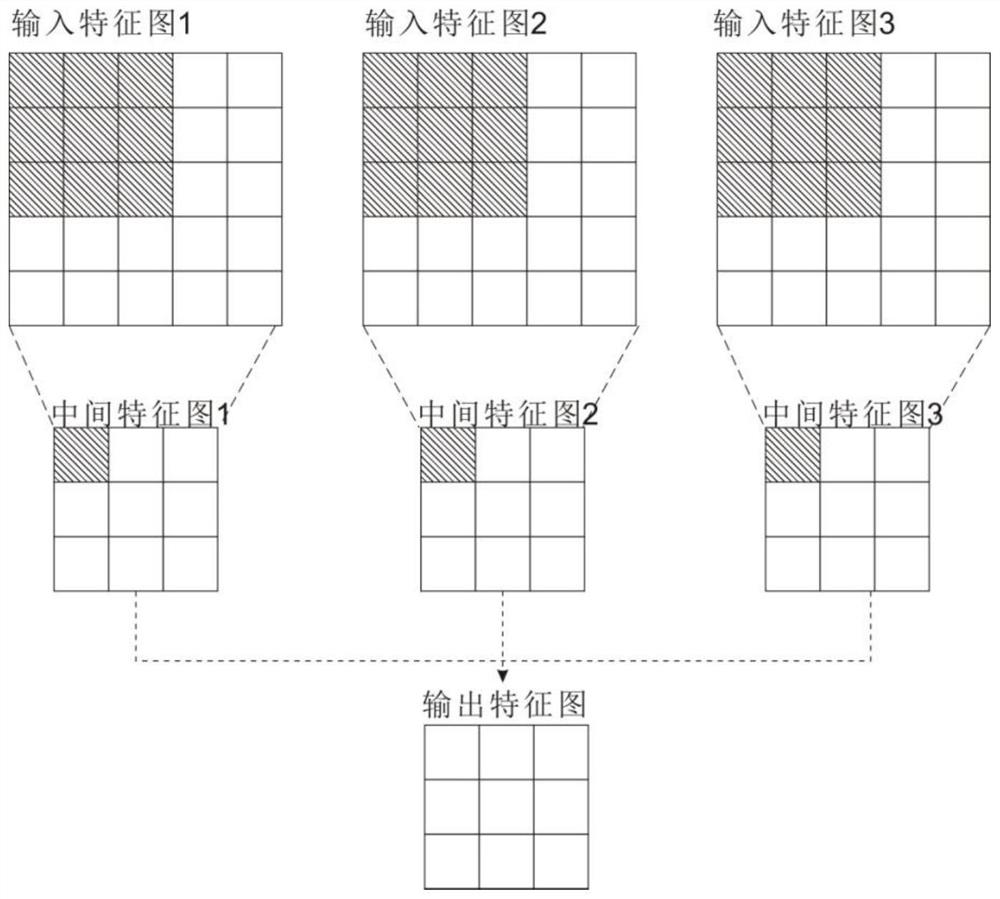

[0060] As Figures 3, 4, and 5 show, in the prior art the output results of different input feature maps must be added together after convolution (as in Figure 3). This is usually done by computing the points at the same location from different input feature maps and adding them together (e.g., Figure 4),...
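The prior-art accumulation described here, in which each input feature map is convolved separately and points at the same location are then added, can be sketched as follows. This is only an illustration, assuming square feature maps and no padding. The helper names `conv2d_single` and `accumulate` are hypothetical and do not come from the patent.

```python
def conv2d_single(fmap, kernel, stride=1):
    """Convolve one square feature map with one square kernel.

    ASSUMPTION: no padding, and "convolution" in the CNN sense
    (the kernel is not flipped).
    """
    k = len(kernel)
    out = (len(fmap) - k) // stride + 1
    return [
        [
            sum(
                fmap[r * stride + i][c * stride + j] * kernel[i][j]
                for i in range(k)
                for j in range(k)
            )
            for c in range(out)
        ]
        for r in range(out)
    ]


def accumulate(partials):
    """Add the points at the same location across all partial results."""
    rows, cols = len(partials[0]), len(partials[0][0])
    return [
        [sum(p[r][c] for p in partials) for c in range(cols)]
        for r in range(rows)
    ]


if __name__ == "__main__":
    fmap_a = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    fmap_b = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]
    kernel_a = [[1, 0], [0, 1]]
    kernel_b = [[1, 1], [1, 1]]
    partial_a = conv2d_single(fmap_a, kernel_a)
    partial_b = conv2d_single(fmap_b, kernel_b)
    print(accumulate([partial_a, partial_b]))
```

Each partial result has to be held until the matching point from every other input feature map is available, which is where intermediate storage comes from in this scheme.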

Embodiment 2

[0081] Embodiment 2: see Figure 8, a system block diagram of a specific embodiment. The DDR3, JTAG, DDR controller, selector, arbiter, feature map buffer, and filter buffer constitute the storage part of the programmable deep neural network processor. The data comes from three sources: data loaded through the JTAG port, that is, user instructions and other upper-level instructions; data such as weights and feature maps; and intermediate data produced by the present invention, which needs to be stored temporarily in DDR3.

[0082] DDR3 is therefore used to store data. When the program control unit is working, data is read from DDR3 onto the chip. JTAG is used to write all data into DDR3, and the DDR controller controls whether DDR3 is read or written. After passing through the DDR controller's read/write control, the data enters the arbiter through the selector, where the selector is used...

Embodiment 3

[0085] Embodiment 3: see Figures 3 and 4, assuming one input feature map and one output feature map.

[0086] The size of the input feature map, Xin*Xin, is 256*256; the size of the corresponding weight data is 11*11; and the convolution stride S is 4.
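For orientation, the output feature map size implied by these parameters can be worked out with the standard convolution size formula. The sketch below assumes no padding, which the text does not state. The function names `output_size` and `mac_count` are hypothetical.

```python
def output_size(x_in, kernel, stride, padding=0):
    """Side length of the output feature map for a square input.

    ASSUMPTION: padding defaults to 0 because the text gives no
    padding value; the standard formula is
    floor((x_in + 2 * padding - kernel) / stride) + 1.
    """
    return (x_in + 2 * padding - kernel) // stride + 1


def mac_count(x_in, kernel, stride, padding=0):
    """Multiply-accumulate operations for one input map and one output map."""
    out = output_size(x_in, kernel, stride, padding)
    return out * out * kernel * kernel


if __name__ == "__main__":
    # Parameters from the embodiment: 256*256 input, 11*11 weights, stride 4.
    side = output_size(256, 11, 4)
    print(f"output feature map: {side}*{side}")
    print(f"multiply-accumulates: {mac_count(256, 11, 4)}")
```

With these values and no padding, the output feature map would be 62*62, since (256 - 11) // 4 + 1 = 62. A different padding choice would change this figure.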

[0087] Its processing method is:

[0088] (1) The program control unit obtains the user instruction and parses it to obtain the parameters of the convolutional neural network. The parameters are: an input feature map size Xin*Xin of 256*256, a corresponding weight data size Y*Y of 11*11, a convolution stride S of 4, one input feature map, and one output feature map;

[0089] The program control unit then reads a feature map from the feature map buffer as the input feature map and fetches the corresponding weight data from the filter buffer, where the size of the input image is Xin*Xin, the size ...
