A design method for an FPGA-based YOLO network forward inference accelerator

A design method for forward inference, applied in fields such as biological neural network models and neural architectures. It addresses the limit on the scale of network that can be accelerated when the FPGA cannot accommodate it, and aims to ensure stability, use fewer on-chip resources, and improve speed.

Embodiment 1

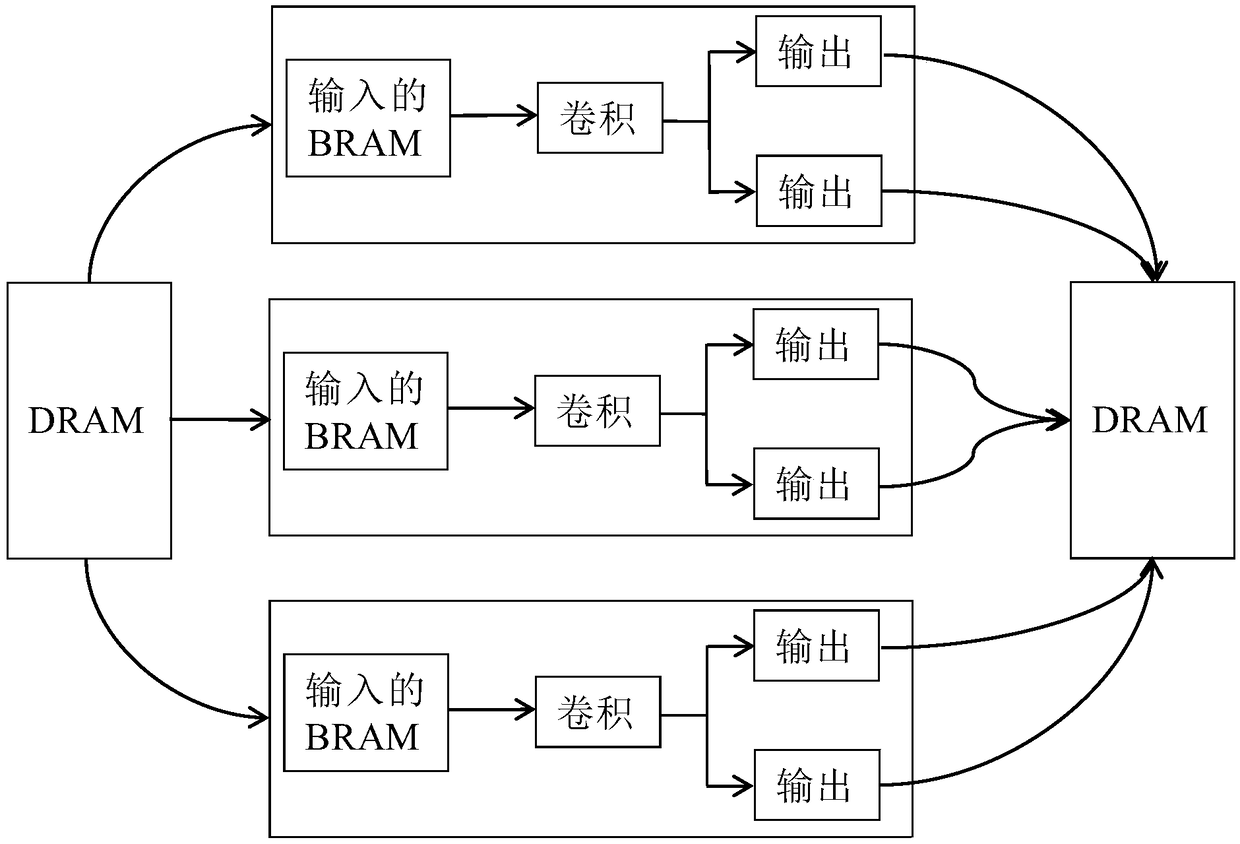

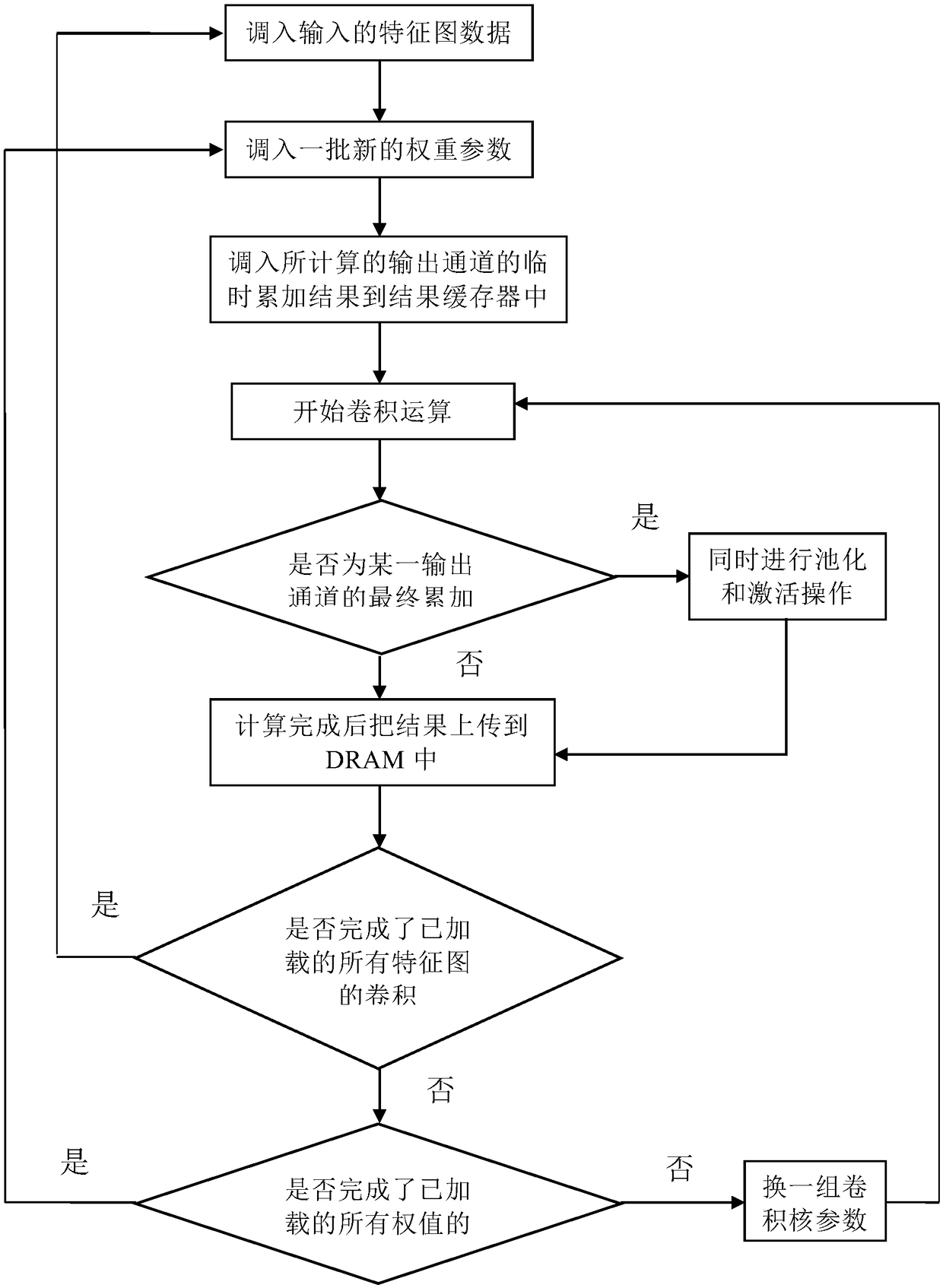

[0039] A design method for an FPGA-based YOLO network forward inference accelerator. The accelerator comprises an FPGA chip and DRAM: the BRAM inside the FPGA chip serves as the data buffer, and the DRAM serves as the main storage device and is organized as a ping-pong structure. The accelerator design method is characterized in that it comprises the following steps:

[0040] (1) Perform 8-bit fixed-point quantization on the original network data, finding the decimal point position that has the least impact on detection accuracy, to form a quantization scheme. Quantization is carried out layer by layer;

[0041] (2) The FPGA chip performs parallel computing on YOLO's nine-layer convolutional network;

[0042] (3) Location mapping.
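The ping-pong structure in DRAM mentioned in [0039] can be pictured with a small model. This is only a sketch under an assumption the excerpt does not spell out: that two DRAM regions alternate roles between layers, one holding the current layer's input while the other receives its output. The names `run_layers`, `buffers`, `double`, and `add_one` are hypothetical, and plain Python lists stand in for DRAM regions.

```python
def run_layers(input_data, layers):
    """Run layers in sequence, alternating between two buffers (ping-pong)."""
    # Two regions standing in for the DRAM ping-pong areas (assumed layout).
    buffers = [list(input_data), []]
    src = 0  # index of the region that currently holds the layer input
    for layer in layers:
        dst = 1 - src                      # the other region receives the output
        buffers[dst] = layer(buffers[src])
        src = dst                          # swap roles for the next layer
    return buffers[src]


# Stand-in "layers"; real layers would be convolutions computed on the FPGA.
def double(xs):
    return [2 * x for x in xs]


def add_one(xs):
    return [x + 1 for x in xs]


result = run_layers([1, 2, 3], [double, add_one, double])
print(result)
```

With this arrangement only two regions are needed regardless of network depth, because each layer's output overwrites the region whose contents were consumed by the previous layer.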

[0043] Specifically, the quantization process for a given layer in step (1) is:

[0044] a) Quantize the weight data of the original network: when quantizing according to a certain decimal point position of an 8bit fixe...
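The layer-by-layer search for a decimal point position described in step (1) and item a) can be illustrated roughly as follows. This is a sketch, not the patented procedure: the source selects the position by its impact on detection accuracy, whereas this example substitutes squared quantization error as a simple proxy. The function names, the candidate range of 0 to 7 fractional bits, and the sample weights are all assumptions made for illustration.

```python
def quantize(values, frac_bits):
    """Convert floats to signed 8-bit fixed point with `frac_bits` fractional bits."""
    scale = 2 ** frac_bits
    # Python's round() rounds halves to even; results saturate to the int8 range.
    return [max(-128, min(127, round(v * scale))) for v in values]


def dequantize(q_values, frac_bits):
    """Map 8-bit fixed-point integers back to floats."""
    scale = 2 ** frac_bits
    return [q / scale for q in q_values]


def best_frac_bits(values, candidates=range(0, 8)):
    """Pick the decimal point position with the smallest squared error.

    Squared error is a stand-in metric (assumption); the source evaluates
    the impact on detection accuracy instead.
    """
    best, best_err = None, float("inf")
    for frac_bits in candidates:
        approx = dequantize(quantize(values, frac_bits), frac_bits)
        err = sum((a - b) ** 2 for a, b in zip(values, approx))
        if err < best_err:  # strict: ties keep the smaller frac_bits
            best, best_err = frac_bits, err
    return best


# Hypothetical per-layer weights; each layer gets its own decimal point position.
layer_weights = {
    "conv1": [0.5, -0.25, 0.125, 0.75],
    "conv2": [3.0, -2.5, 1.25, 0.5],
}
scheme = {name: best_frac_bits(w) for name, w in layer_weights.items()}
print(scheme)
```

Layers with small weights end up with more fractional bits and layers with larger weights with fewer, which is why a per-layer scheme can preserve more precision than a single position shared by the whole network.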
