A convolutional neural network accelerator based on calculation optimization of an FPGA

This convolutional neural network accelerator technology, in the field of convolutional neural network accelerator hardware structures, addresses the problem of a large amount of redundant computation. It achieves high computing performance, reduces data reads, and improves real-time performance.
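One common source of redundant computation in CNN inference is multiplication by zero, for example zero activations produced by ReLU or pruned weights. This summary does not say that this is exactly the redundancy the accelerator removes; the sketch below simply assumes it, and counts how many multiply-accumulate (MAC) operations in a direct single-channel convolution have a zero operand and could therefore be skipped. The function name `count_zero_macs` is hypothetical.

```python
def count_zero_macs(fmap, kernel, stride=1):
    """Count total and zero-operand MACs for a single-channel 'valid' convolution.

    fmap and kernel are lists of lists of numbers. In this sketch a MAC is
    treated as redundant if either the activation or the weight is zero.
    """
    h, w = len(fmap), len(fmap[0])
    k = len(kernel)
    out_h = (h - k) // stride + 1
    out_w = (w - k) // stride + 1
    total = zero = 0
    for oy in range(out_h):
        for ox in range(out_w):
            for ky in range(k):
                for kx in range(k):
                    a = fmap[oy * stride + ky][ox * stride + kx]
                    b = kernel[ky][kx]
                    total += 1
                    if a == 0 or b == 0:
                        zero += 1
    return total, zero


# A sparse 4x4 map (as after ReLU) and a 3x3 kernel with one pruned weight.
fmap = [
    [0, 1, 0, 2],
    [0, 0, 3, 0],
    [4, 0, 0, 0],
    [0, 5, 0, 0],
]
kernel = [
    [1, 0, 1],
    [1, 1, 1],
    [1, 1, 1],
]
print(*count_zero_macs(fmap, kernel))  # → 36 27
```

In this toy case 27 of the 36 MACs contribute nothing to the result, which illustrates why skipping zero operands can raise effective throughput.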

Detailed Description of Embodiments

[0029] The technical solutions and beneficial effects of the present invention will be described in detail below in conjunction with the accompanying drawings.

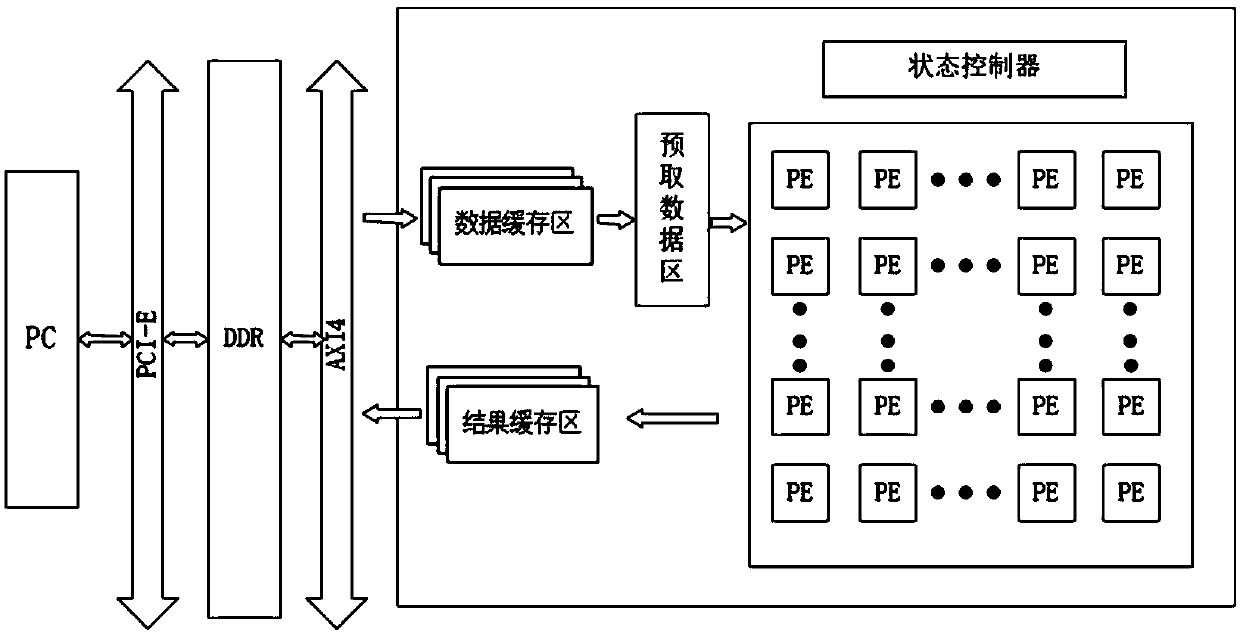

[0030] As shown in Figure 1, the hardware structure of the convolutional neural network accelerator designed in the present invention is described below, taking as an example a 16*16 PE array, a 3*3 convolution kernel, and a convolution kernel stride of 1. Its working method is as follows:
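For reference, the operation the PE array accelerates in this example is an ordinary 2D convolution with a 3*3 kernel and stride 1. The plain-Python sketch below (function names are hypothetical) computes one output channel of a multi-channel "valid" convolution, using the standard output-size relation out = (in + 2·pad − K) / S + 1. It does not model how the patent maps this loop nest onto the 16*16 PE array, which the text here does not specify.

```python
def conv_output_size(n, k=3, stride=1, pad=0):
    """Output length along one dimension of a convolution."""
    return (n + 2 * pad - k) // stride + 1


def conv2d_single_output_channel(fmaps, kernels, stride=1):
    """Valid convolution: fmaps[c][y][x] and kernels[c][ky][kx] -> out[y][x].

    The per-channel 2D correlations are summed, as a CNN layer does
    for one output channel.
    """
    c_in = len(fmaps)
    h, w = len(fmaps[0]), len(fmaps[0][0])
    k = len(kernels[0])
    out_h = conv_output_size(h, k, stride)
    out_w = conv_output_size(w, k, stride)
    out = [[0] * out_w for _ in range(out_h)]
    for oy in range(out_h):
        for ox in range(out_w):
            acc = 0
            # With K = 3, each output pixel needs 3 * 3 * c_in multiply-accumulates.
            for c in range(c_in):
                for ky in range(k):
                    for kx in range(k):
                        acc += fmaps[c][oy * stride + ky][ox * stride + kx] * kernels[c][ky][kx]
            out[oy][ox] = acc
    return out


fmaps = [[[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]]
kernels = [[[1, 0, 0], [0, 1, 0], [0, 0, 1]]]
print(conv2d_single_output_channel(fmaps, kernels))  # → [[18, 21], [30, 33]]
```

With a 3*3 kernel and stride 1, a 16-pixel-wide input row yields conv_output_size(16) = 14 output pixels per row.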

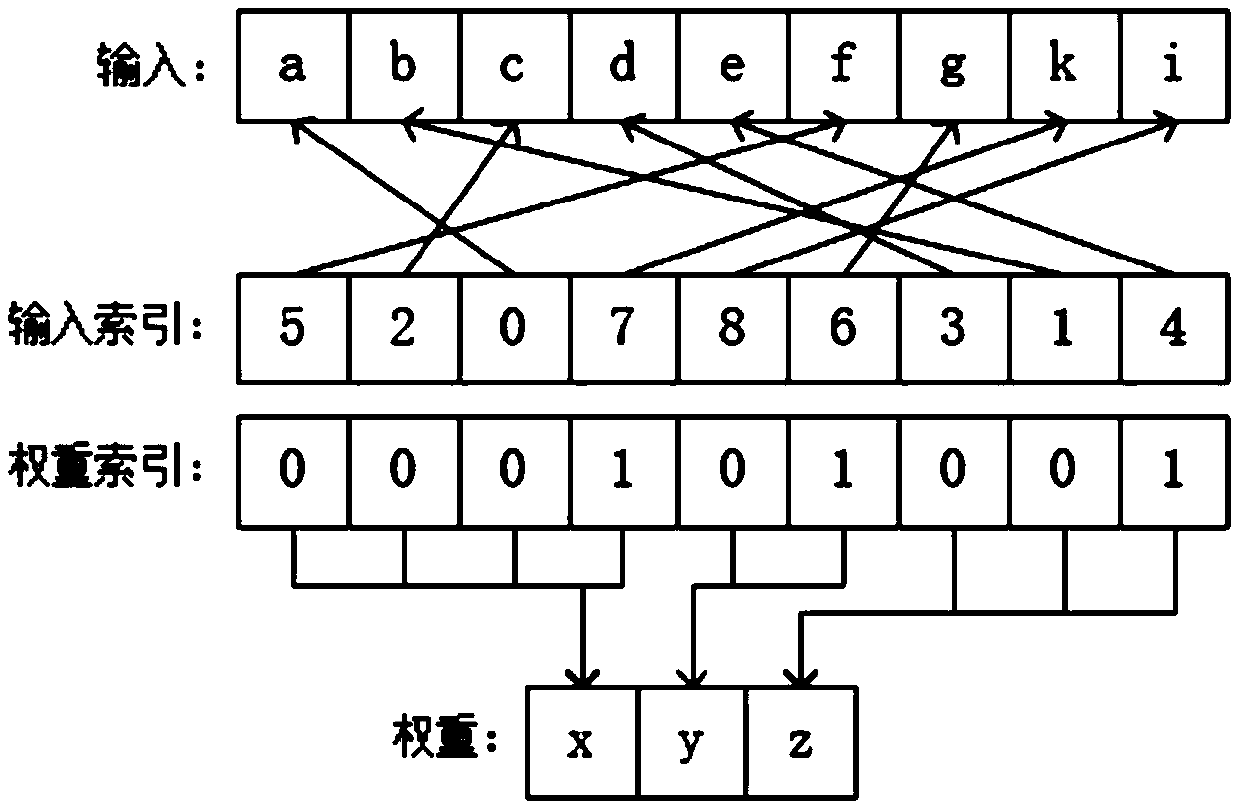

[0031] The PC stores the partitioned data in the external DDR memory through the PCI-E interface. The data cache reads the feature map data through the AXI4 bus interface and caches it row by row in three feature map sub-buffers; the input index values are cached in the feature map sub-buffers in the same way. The weight data read through the AXI4 bus interface is cached sequentially in 16 convolution kernel sub-buffers, and the weight index values are cached in the convolution kernel sub-buffers in the sa...
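The row-wise caching in three feature map sub-buffers matches what a 3*3, stride-1 convolution needs: three consecutive input rows produce one output row. The sketch below illustrates that idea under stated assumptions. It assumes the "index values" are the column positions of nonzero activations (a common sparse encoding; the text here does not define them), and it rotates rows through three sub-buffers so each input row is loaded once and reused for three output rows. `compress_row` and `stream_conv3x3` are hypothetical names, not the patent's design.

```python
def compress_row(row):
    """Hypothetical sparse encoding: nonzero values plus their column indices."""
    values = [v for v in row if v != 0]
    indices = [i for i, v in enumerate(row) if v != 0]
    return values, indices


def stream_conv3x3(rows, kernel):
    """Stride-1, 3x3 'valid' convolution fed one feature-map row at a time.

    Three row sub-buffers are reused in rotation: input row r goes to
    sub-buffer r % 3. Once three rows are buffered, one output row is
    produced by scattering each nonzero (value, index) pair into the
    output columns it contributes to, so zero activations cost no multiplies.
    """
    out_w = len(rows[0]) - 2
    sub_buffers = [None, None, None]  # each holds (values, indices) for one row
    outputs = []
    for r, row in enumerate(rows):
        sub_buffers[r % 3] = compress_row(row)
        if r < 2:
            continue  # three rows are needed before the first output row
        out_row = [0] * out_w
        for ky in range(3):
            # Output row r - 2 uses input rows r - 2, r - 1, r (oldest first).
            values, indices = sub_buffers[(r - 2 + ky) % 3]
            for v, x in zip(values, indices):
                # Input column x feeds output column ox = x - kx via weight kernel[ky][kx].
                for kx in range(3):
                    ox = x - kx
                    if 0 <= ox < out_w:
                        out_row[ox] += v * kernel[ky][kx]
        outputs.append(out_row)
    return outputs


fm = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
diag = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
print(stream_conv3x3(fm, diag))  # → [[18, 21], [30, 33]]
```

Rotating three sub-buffers keeps on-chip storage at three rows regardless of image height, and loading each row from DDR only once is one plausible way such a design reduces reads; the patent's actual buffer control logic may differ.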
