223 results about How to "Reduce read" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

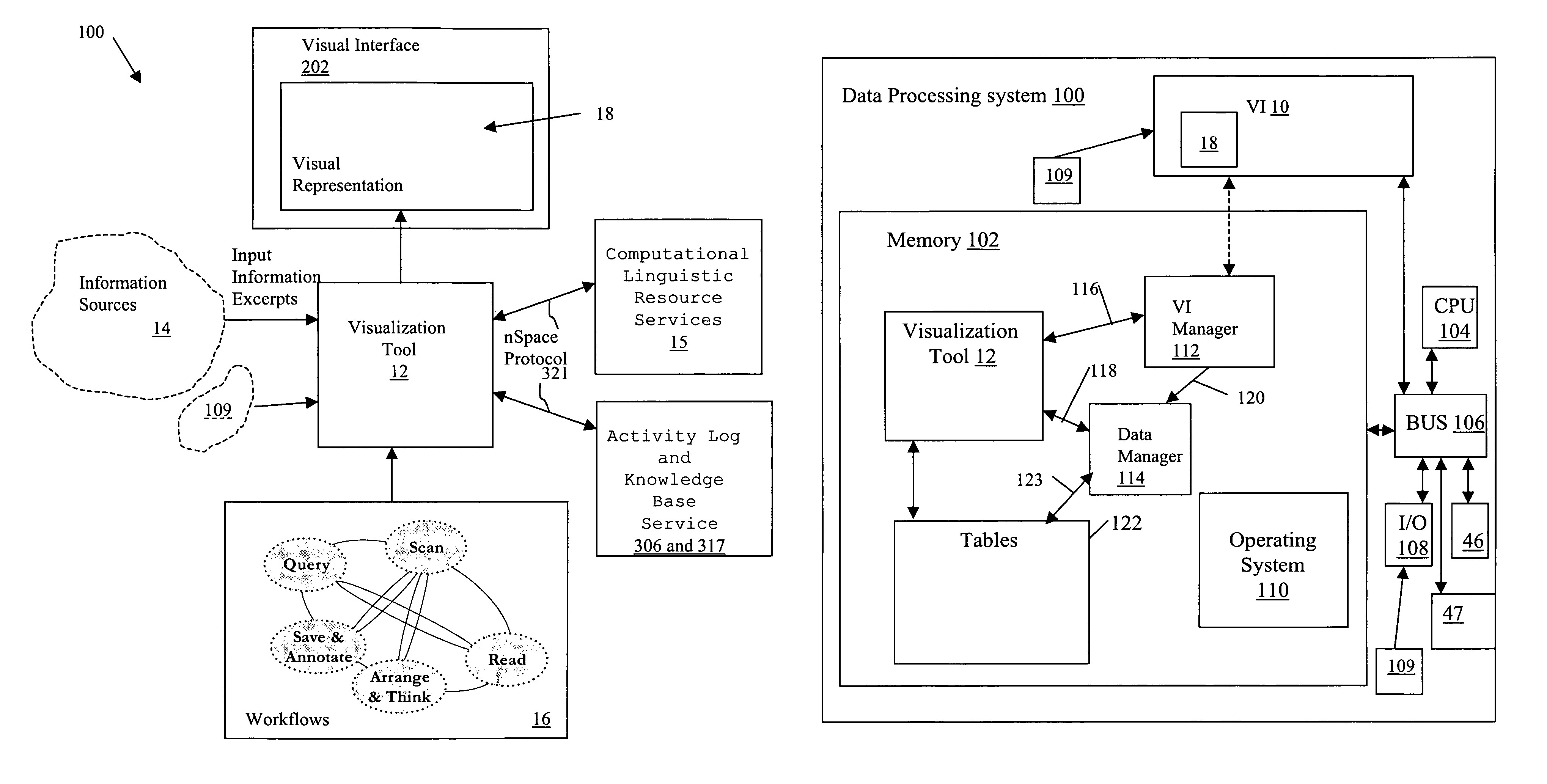

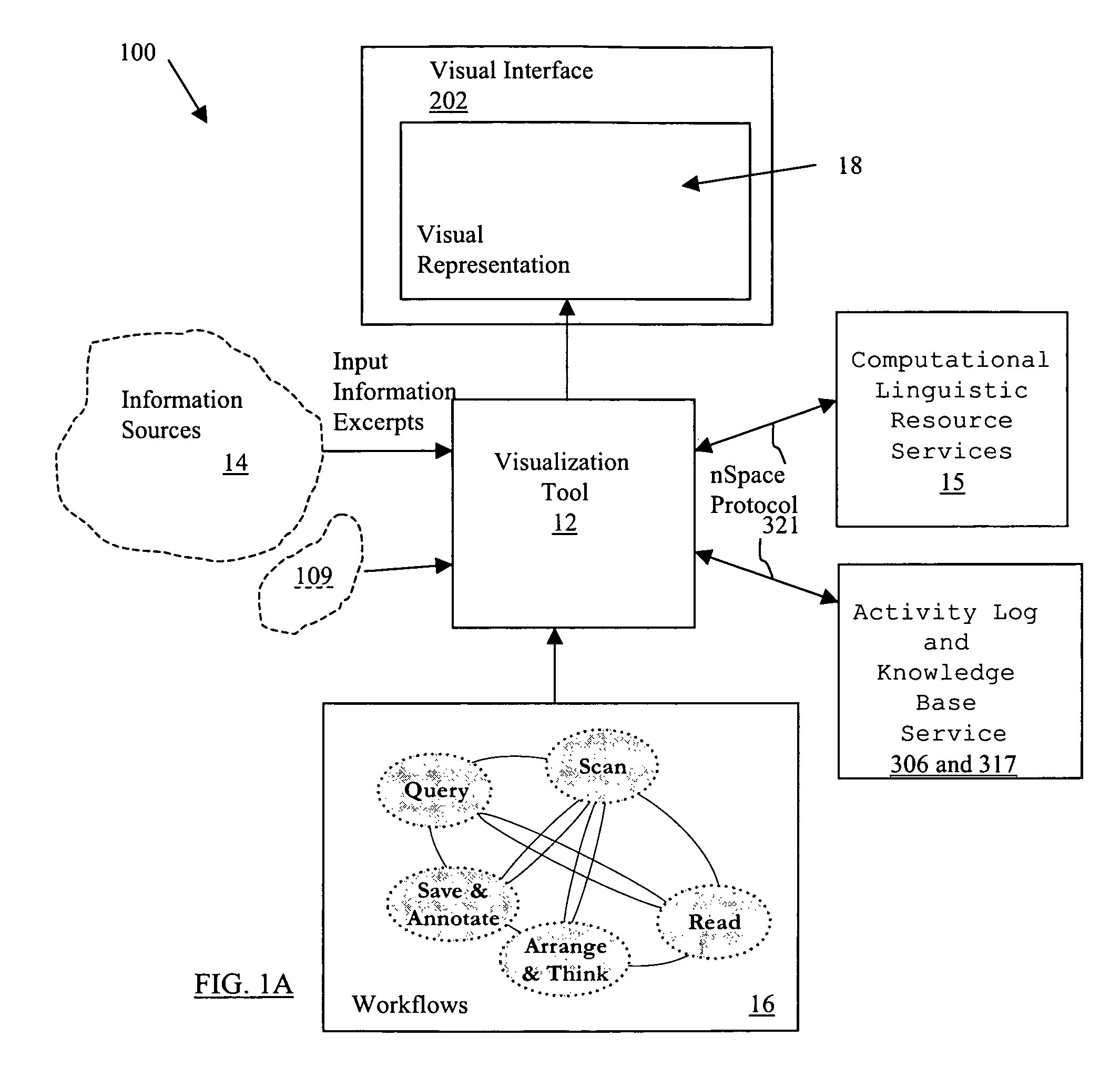

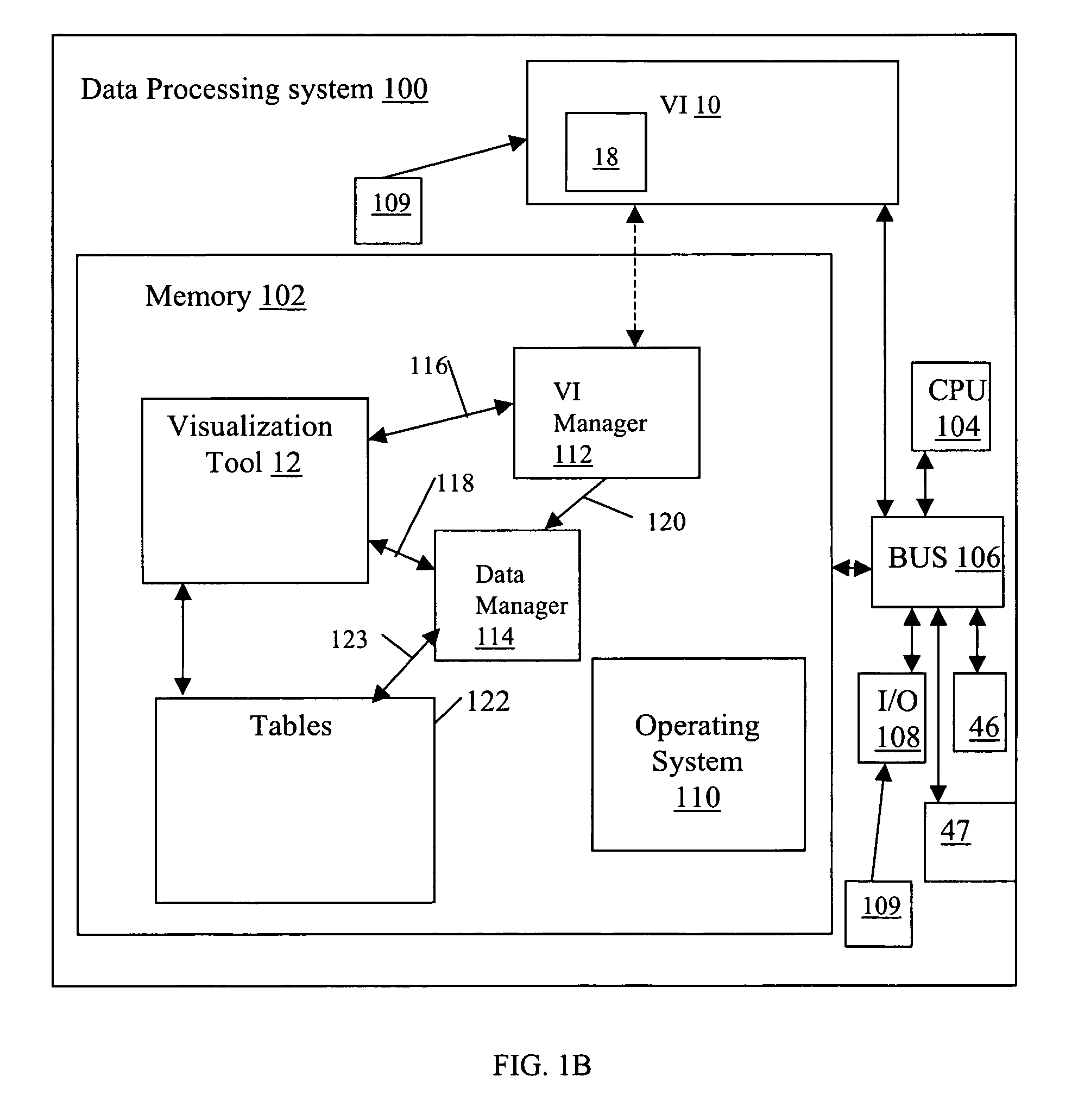

System and method for interactive multi-dimensional visual representation of information content and properties

Active | US20060116994A1 | Rapid visualization; Improve performance; Digital data processing details; Multimedia data retrieval; Triage; Information analysis

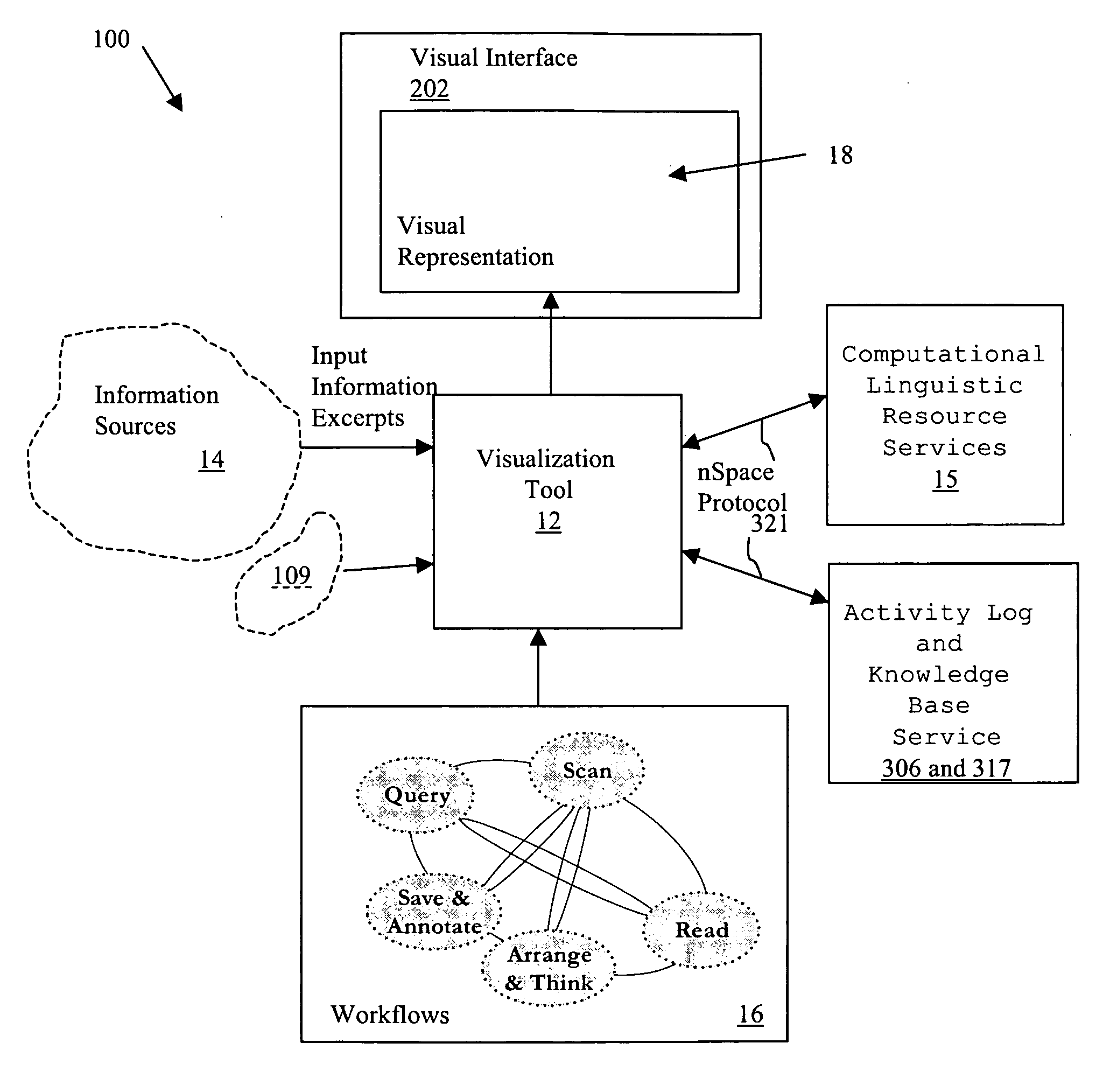

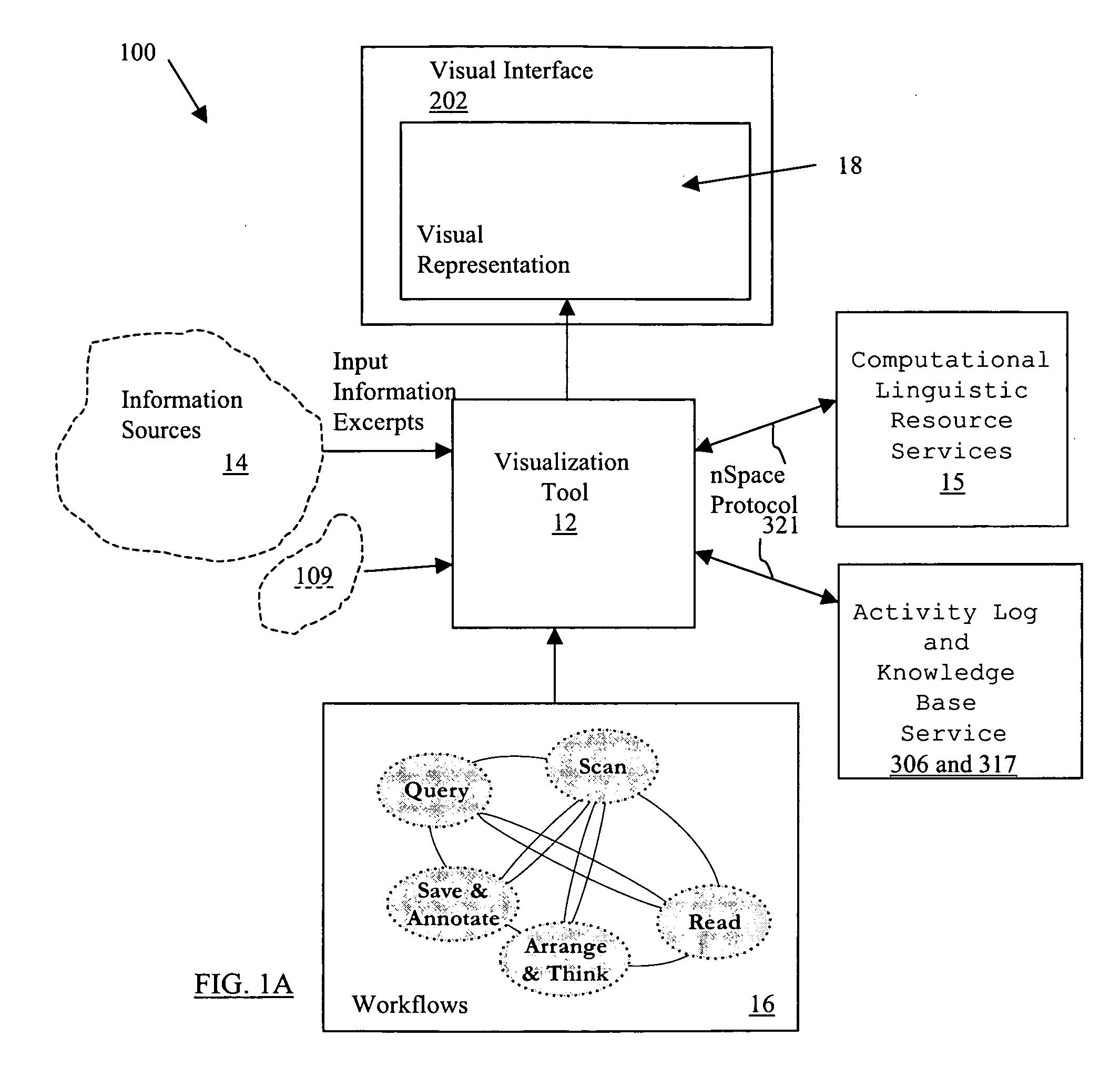

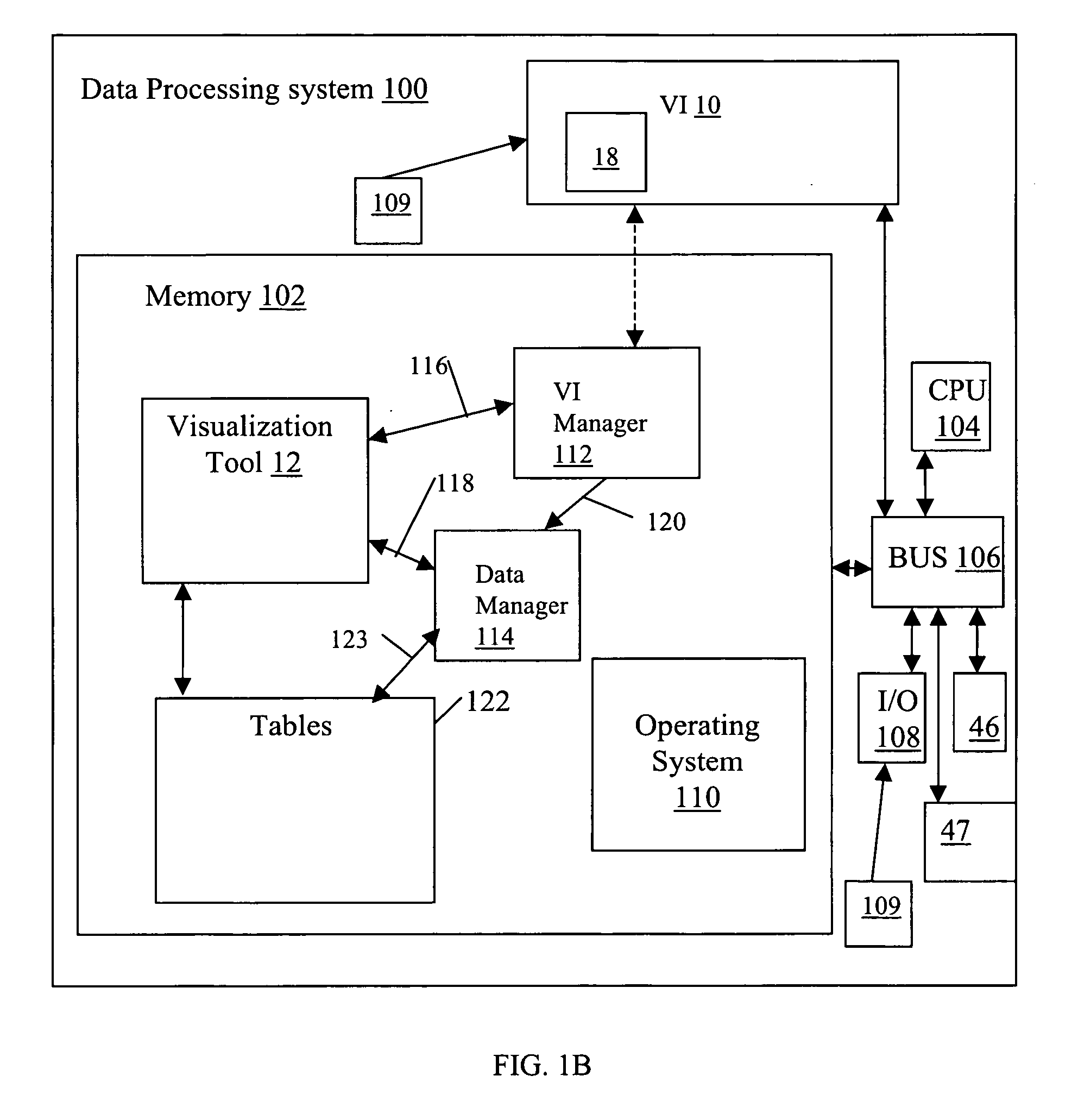

A system and method of information retrieval and triage for information analysis provides an interactive, multi-dimensional and linked visual representation of information content and properties. A query interface plans and obtains result sets. A dimension interface specifies dimensions with which to categorize the result sets. Links among results of a result set, or among results of different sets, are automatically generated for linked selection viewing. Entities may be extracted and viewed, and entity relations determined to establish further links and dimensions. Properties encoded in representations (e.g. icons) of the results in the multi-dimensional views maximize display density. Multiple queries may be performed and compared. An integrated browser component responsive to the links is provided for viewing documents. Documents and other information from the result set may be used in an analysis component providing a space for visual linking, to arrange the information in the space while maintaining links automatically.

Owner:UNCHARTED SOFTWARE INC

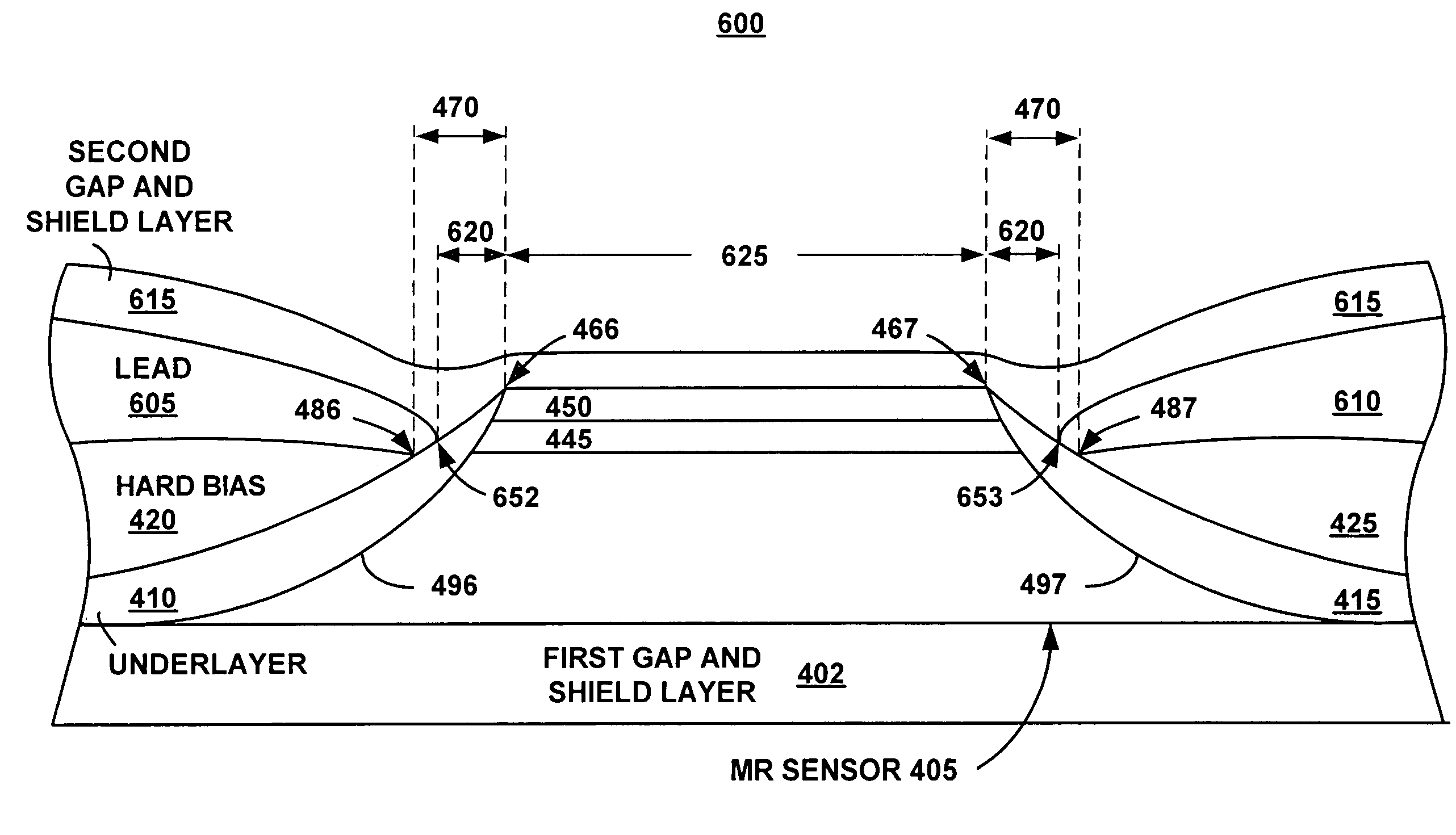

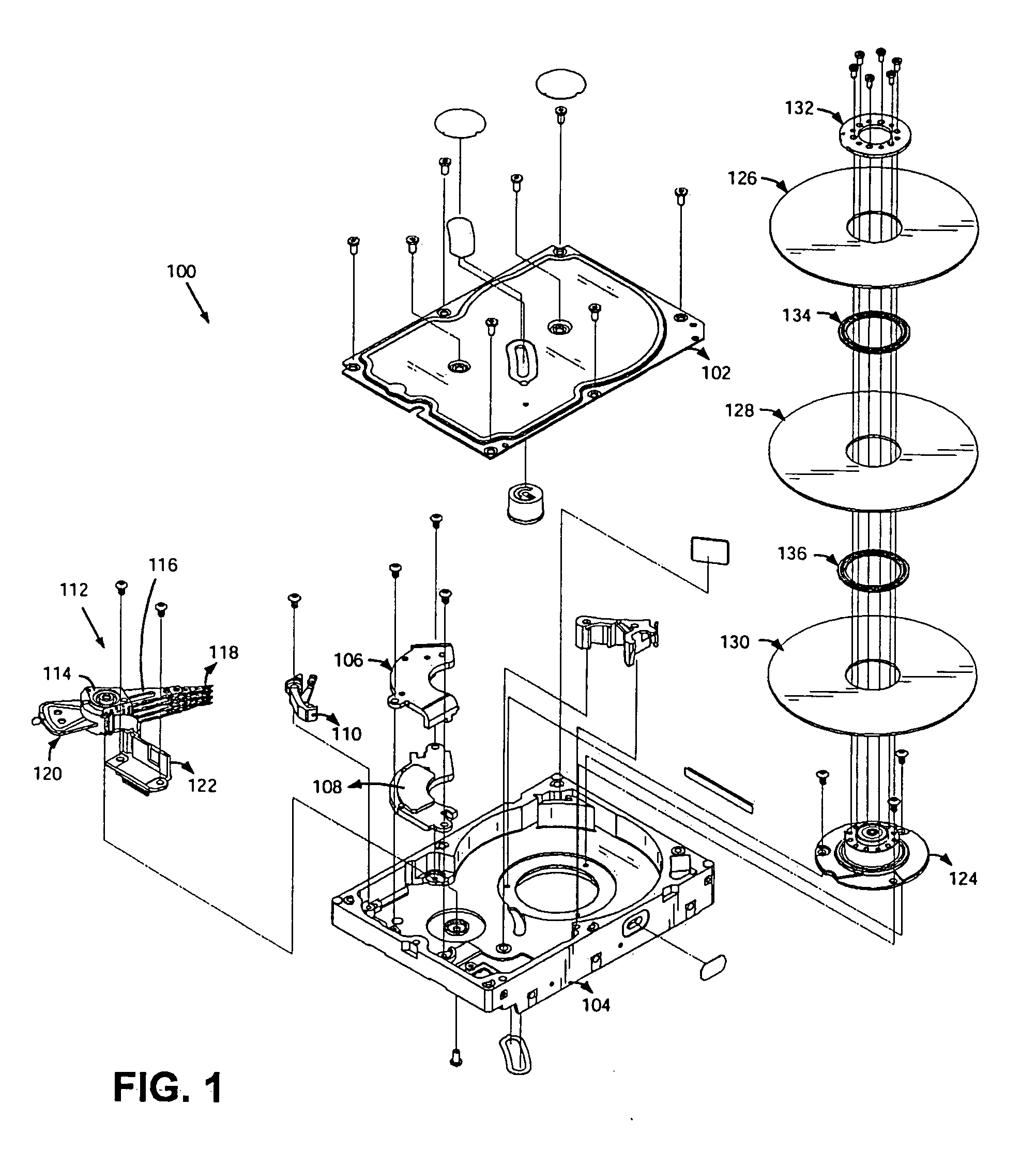

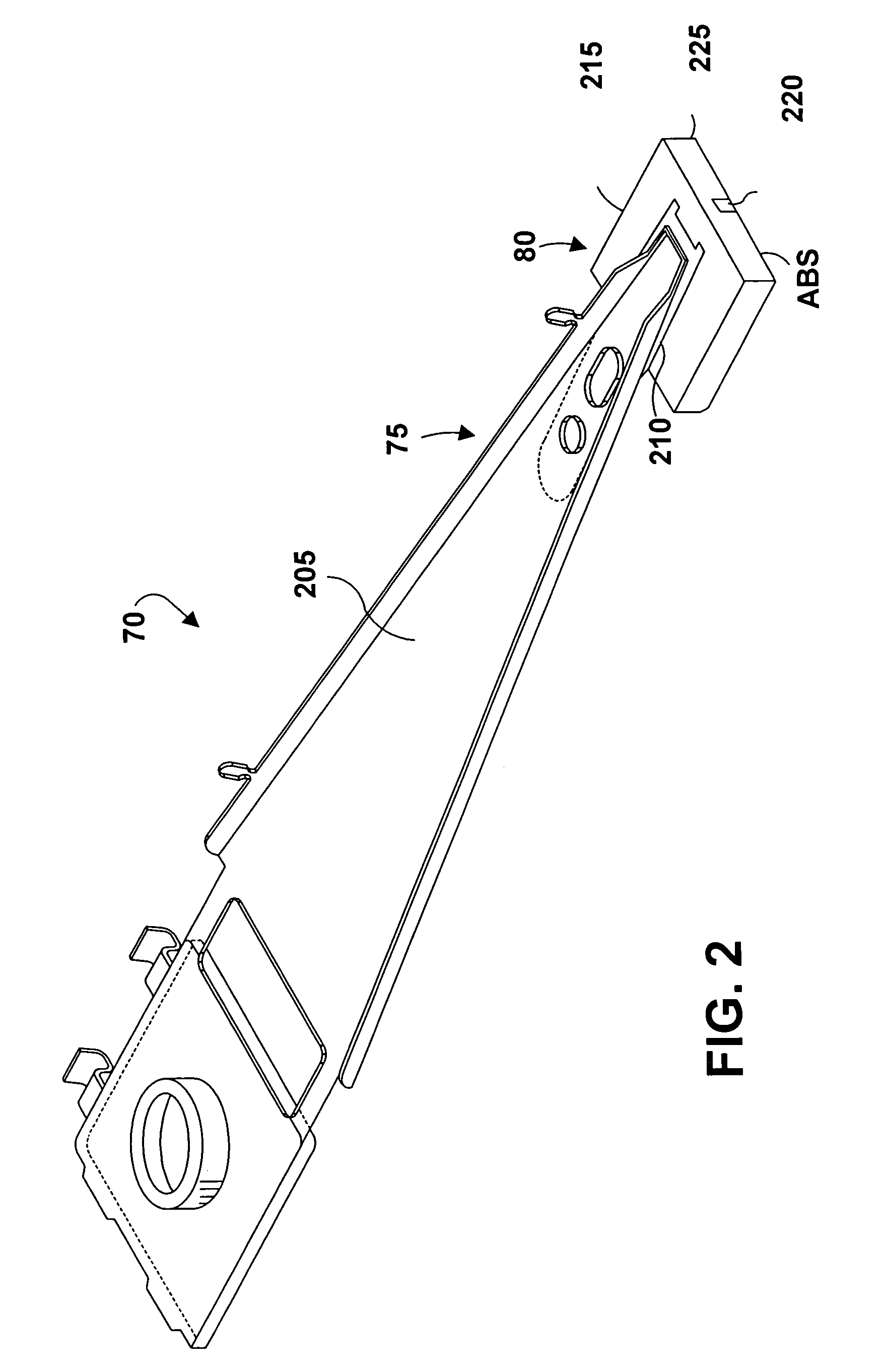

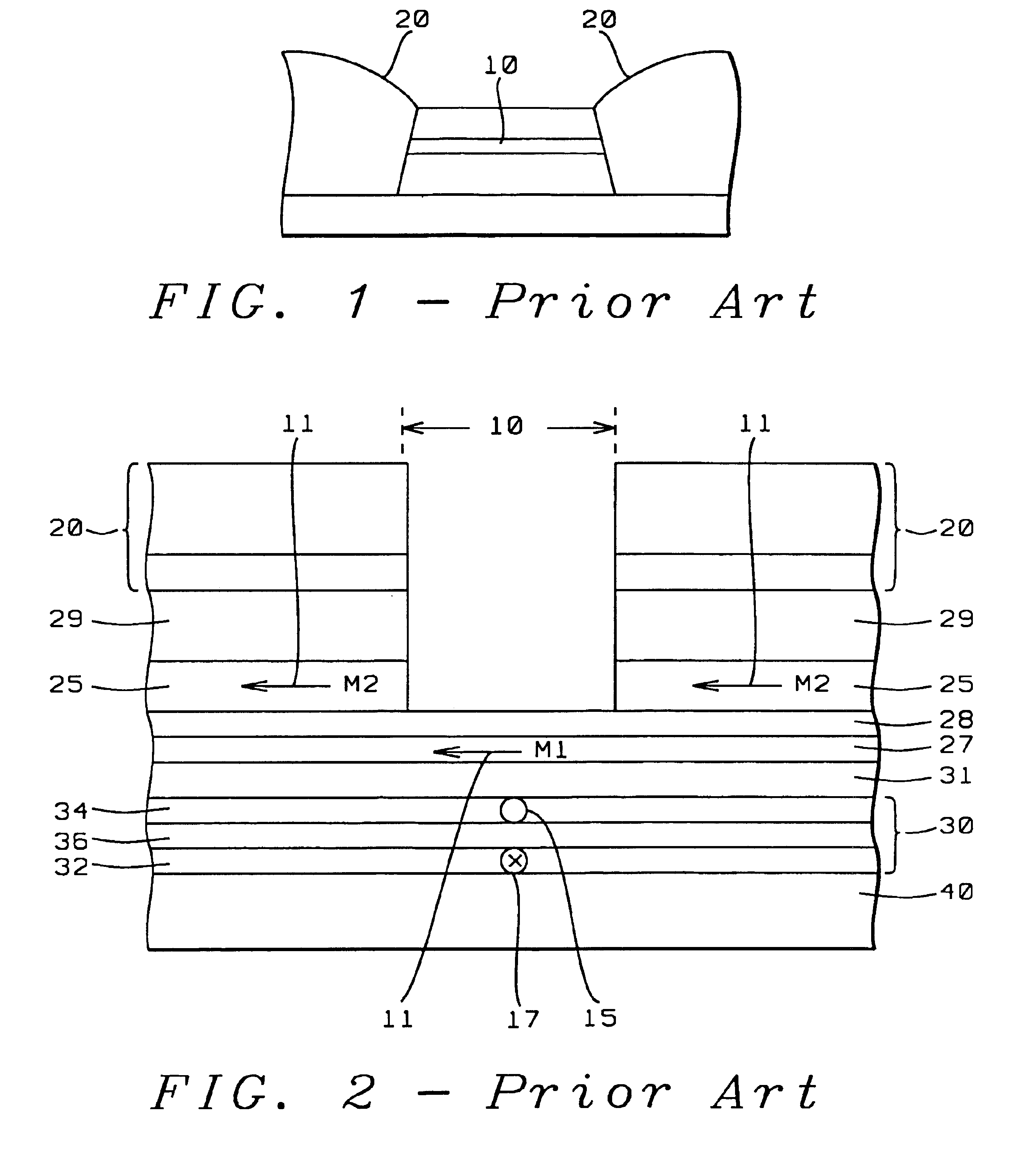

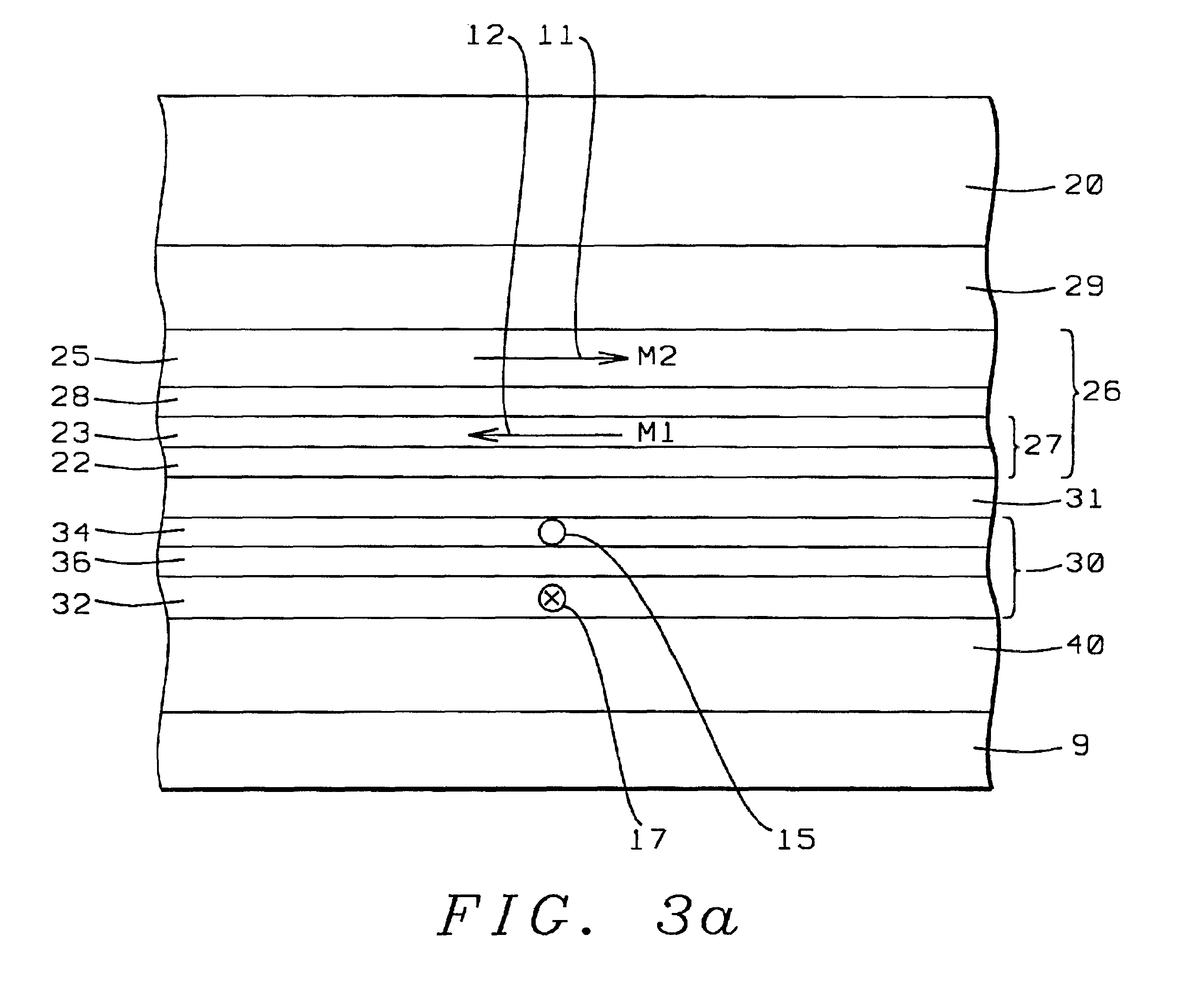

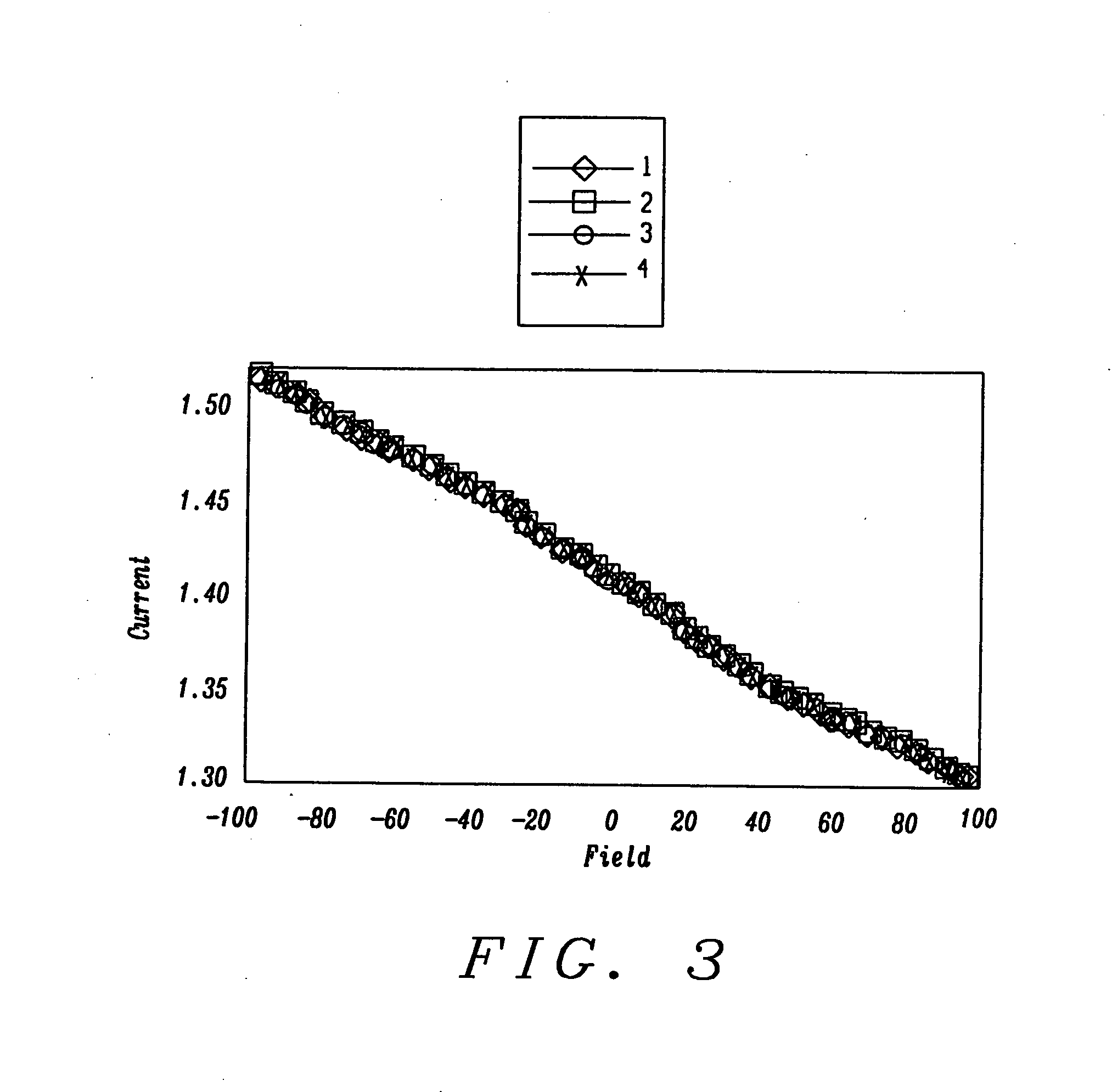

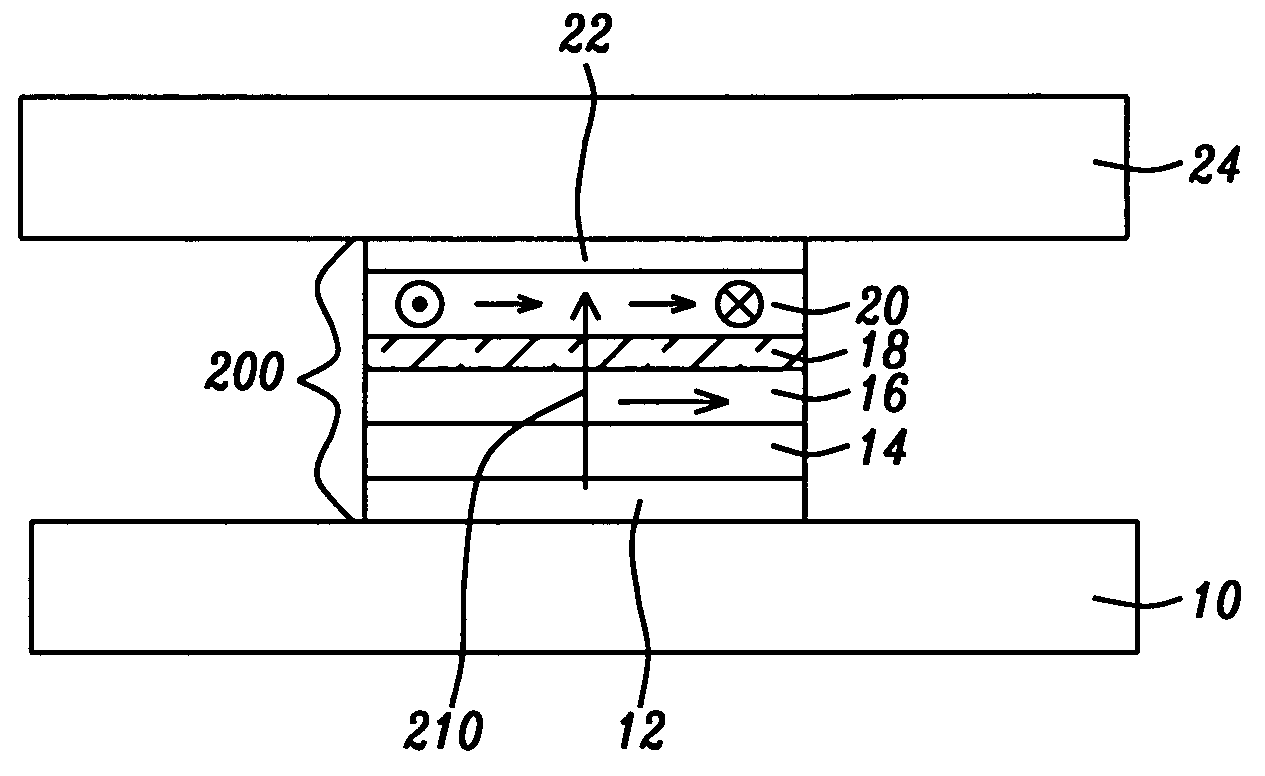

Magnetic read head with recessed hard bias and current leads

Inactive | US7212384B1 | Improve stability; High sensitivity; Record information storage; Manufacture of flux-sensitive heads; Engineering; Electrical current

A read element includes a magnetoresistive sensor having a side and an upper surface that defines an edge. An underlayer overlies the side of the magnetoresistive sensor, and a hard bias layer overlies at least part of the underlayer and defines a hard bias junction with the underlayer. A lead is formed atop the hard bias layer. The hard bias junction is recessed from the edge of the magnetoresistive sensor by a predetermined recess distance, to provide stability and sensitivity to the read element.

Owner:WESTERN DIGITAL TECH INC

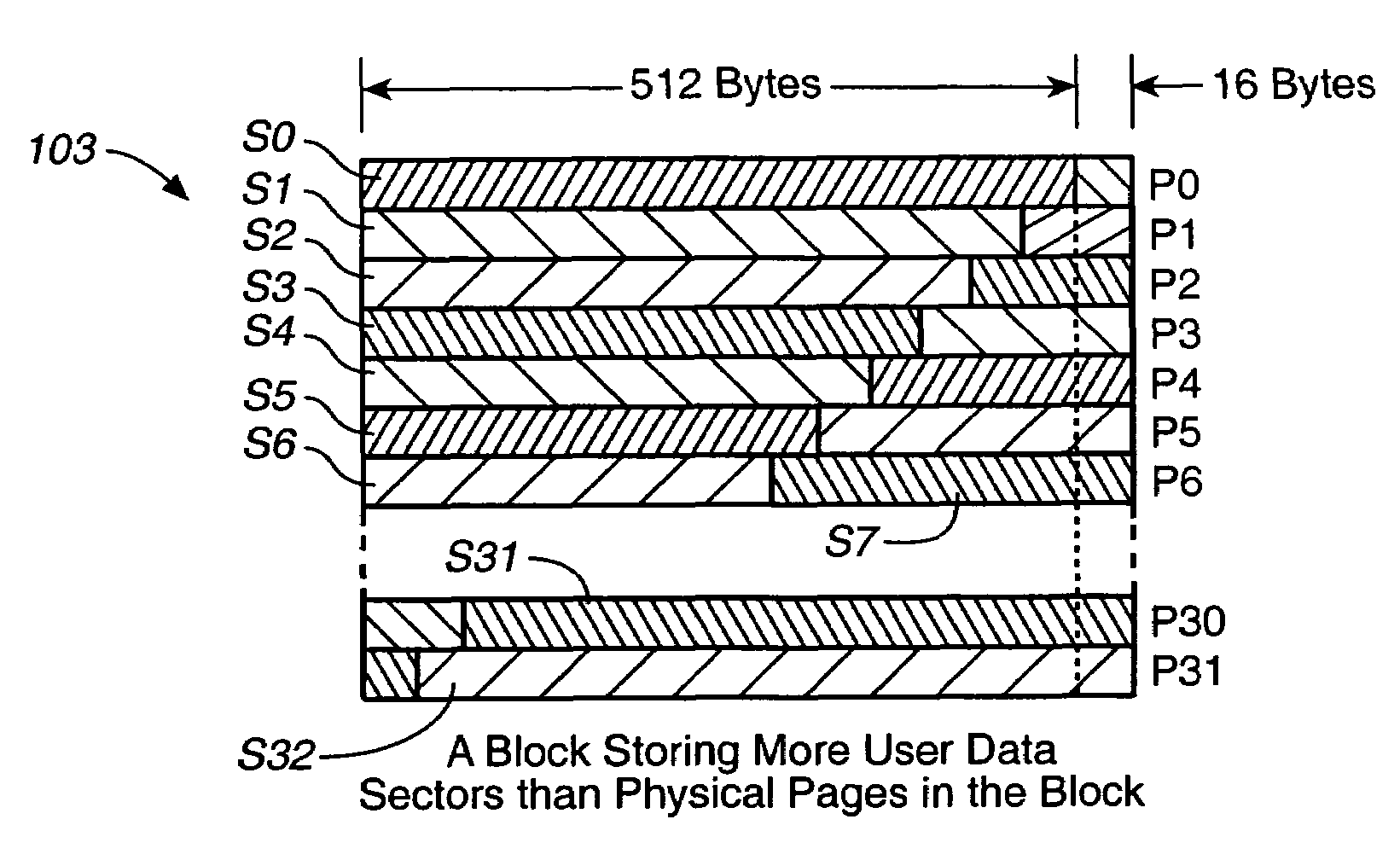

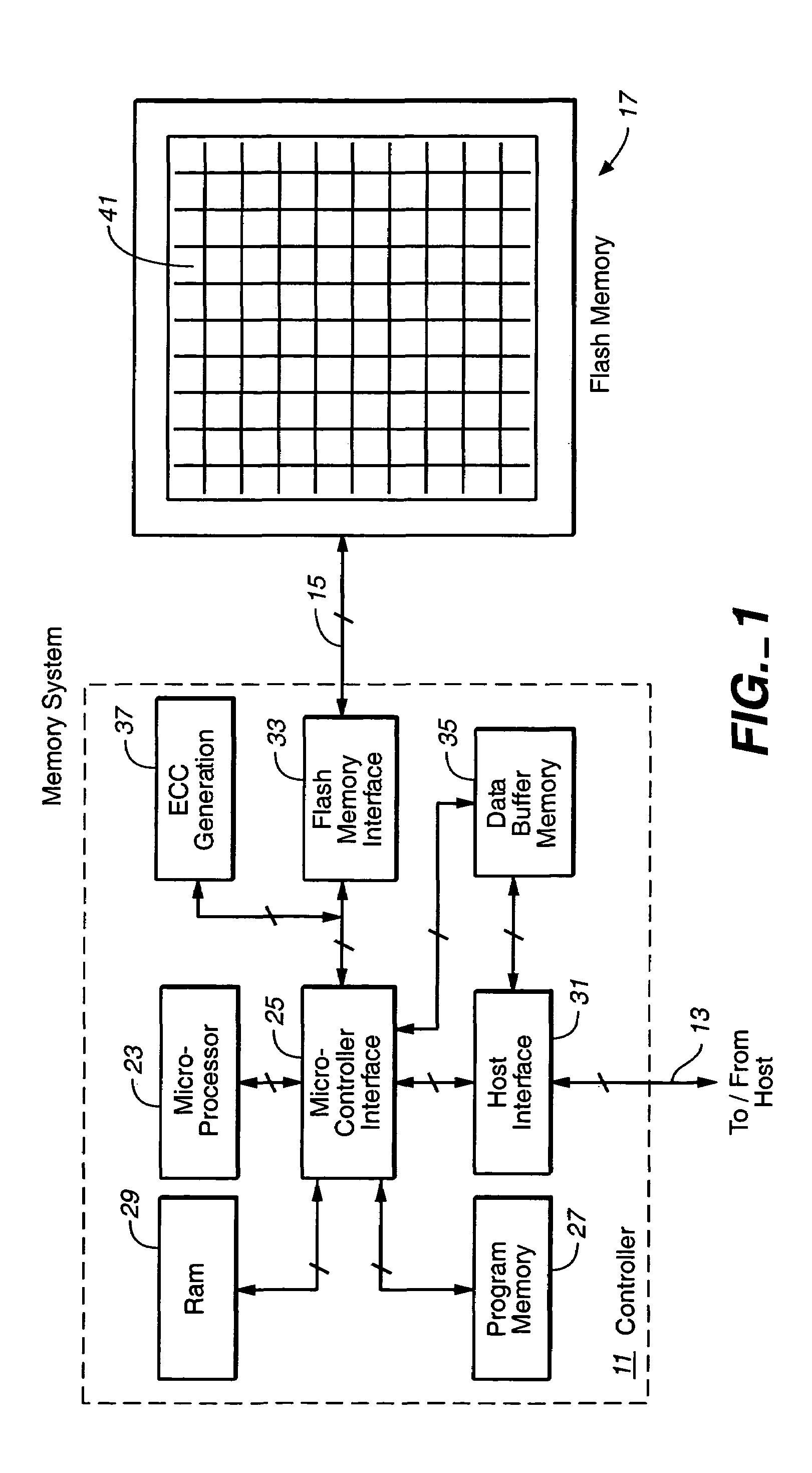

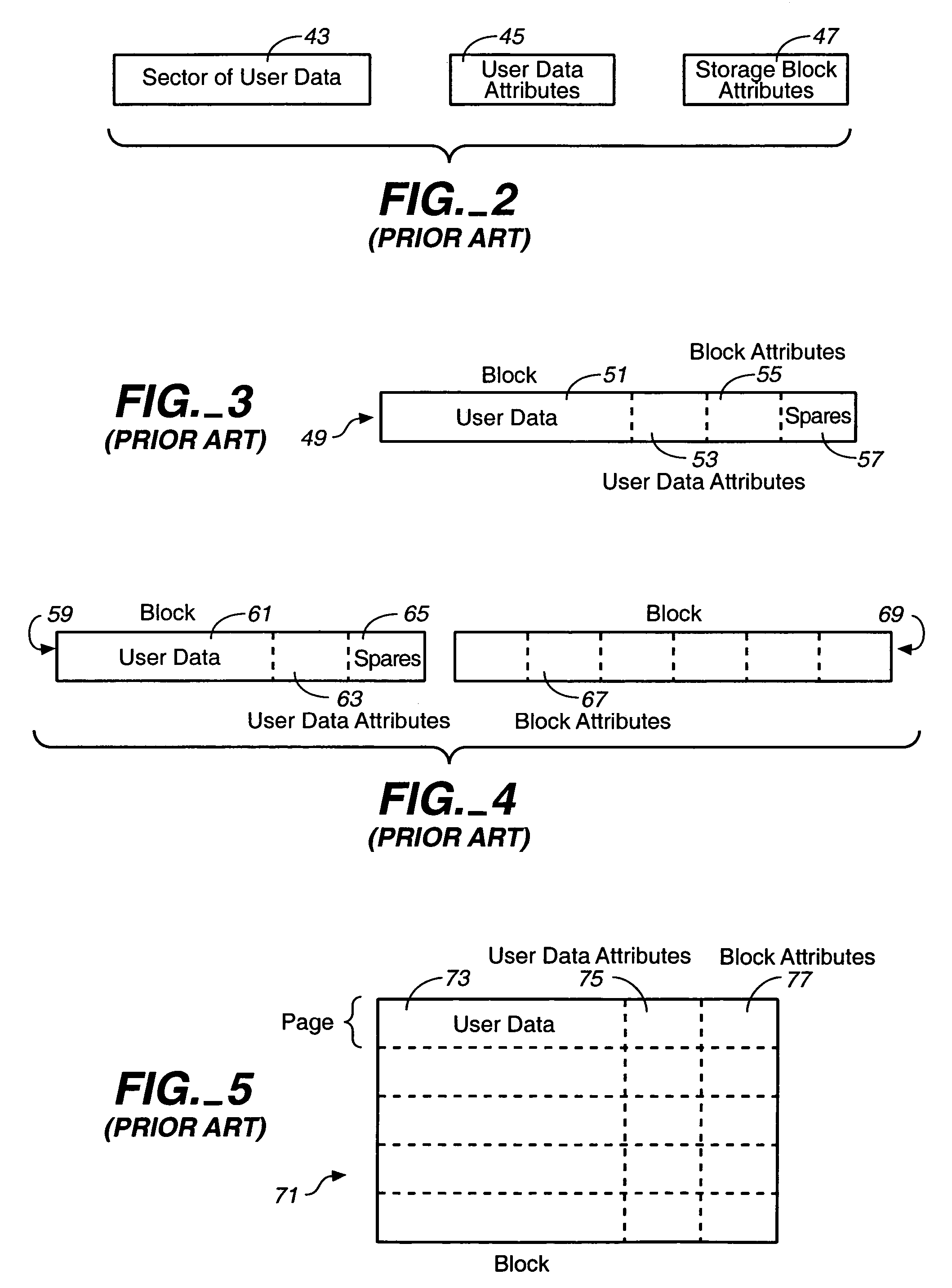

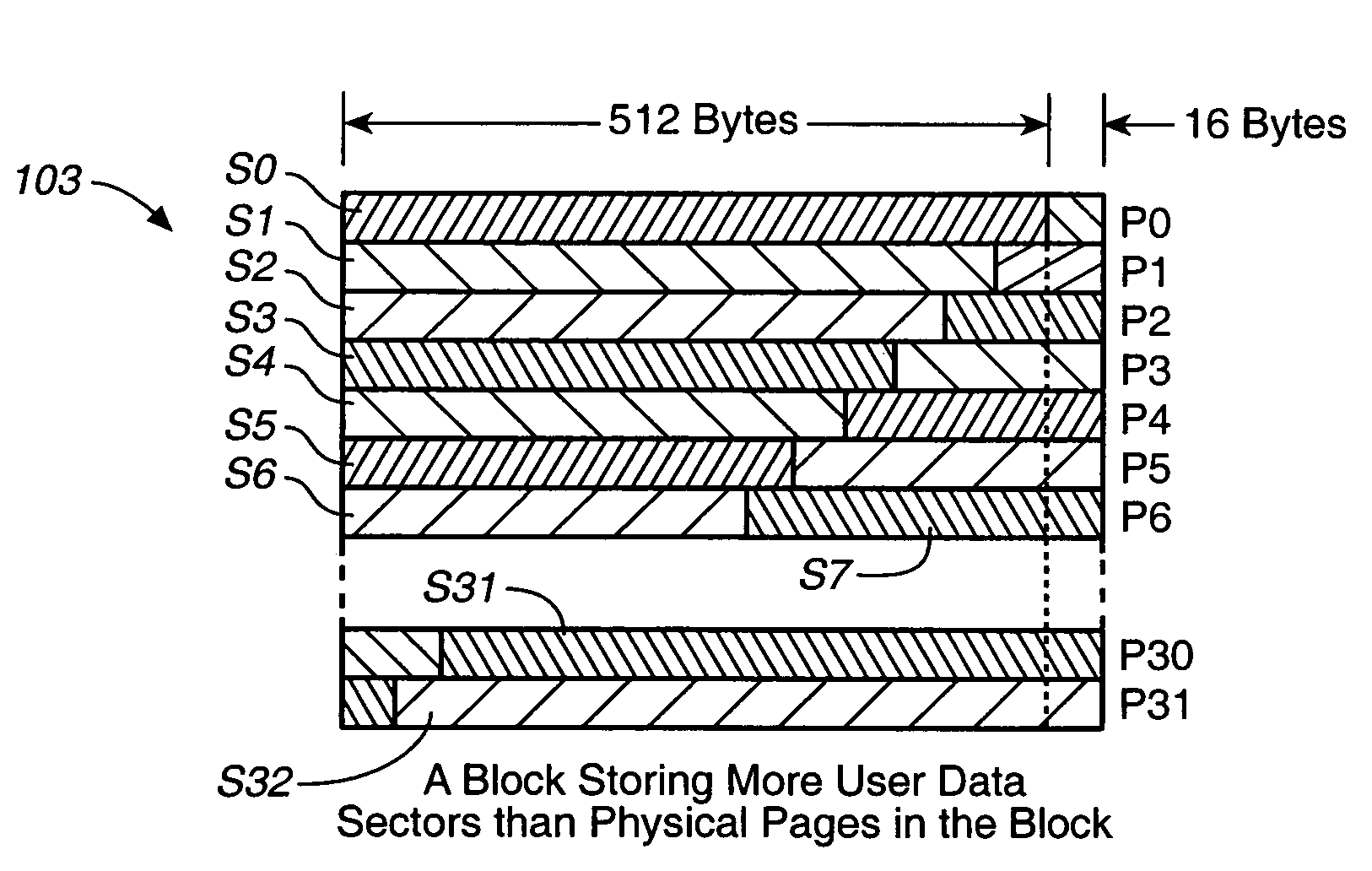

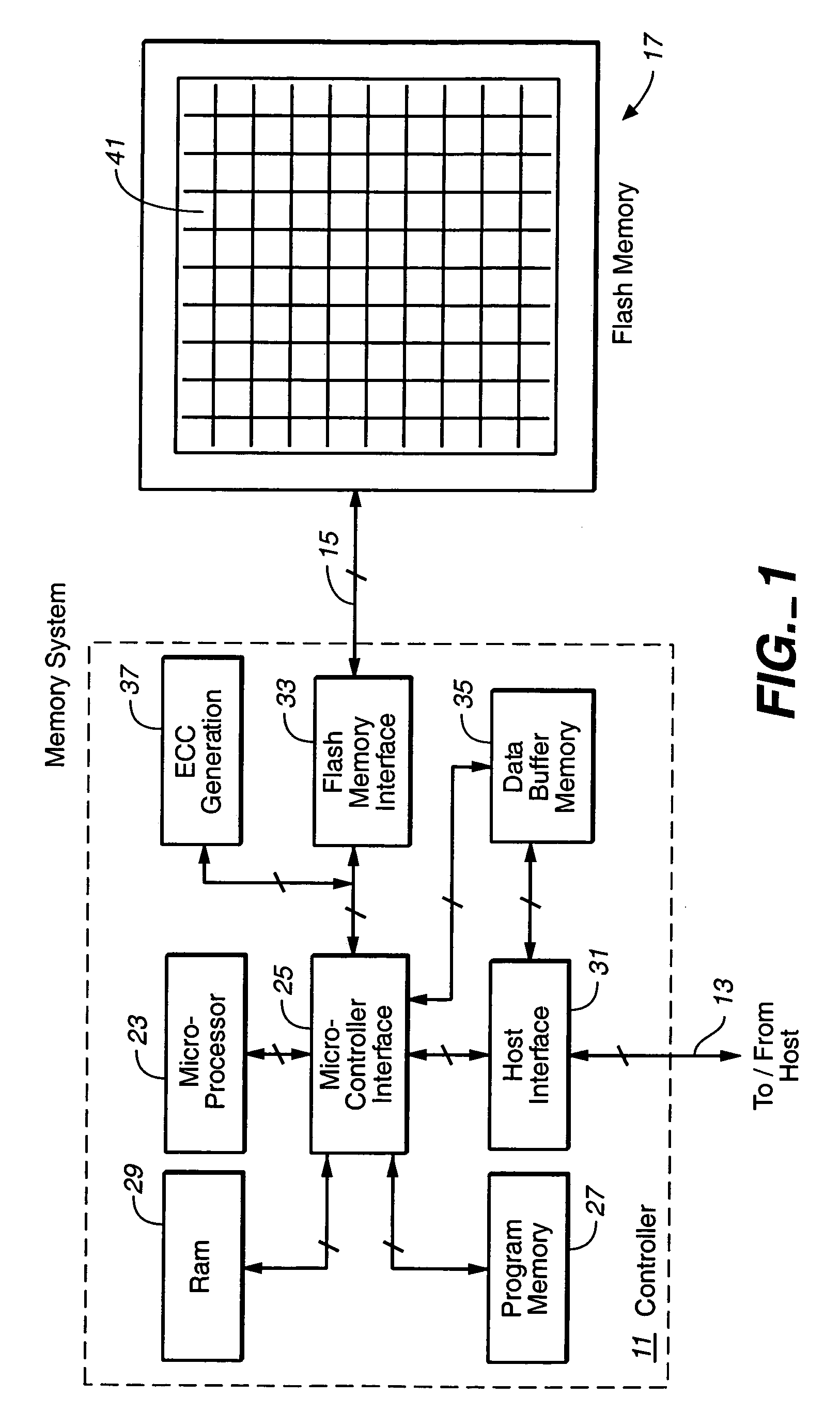

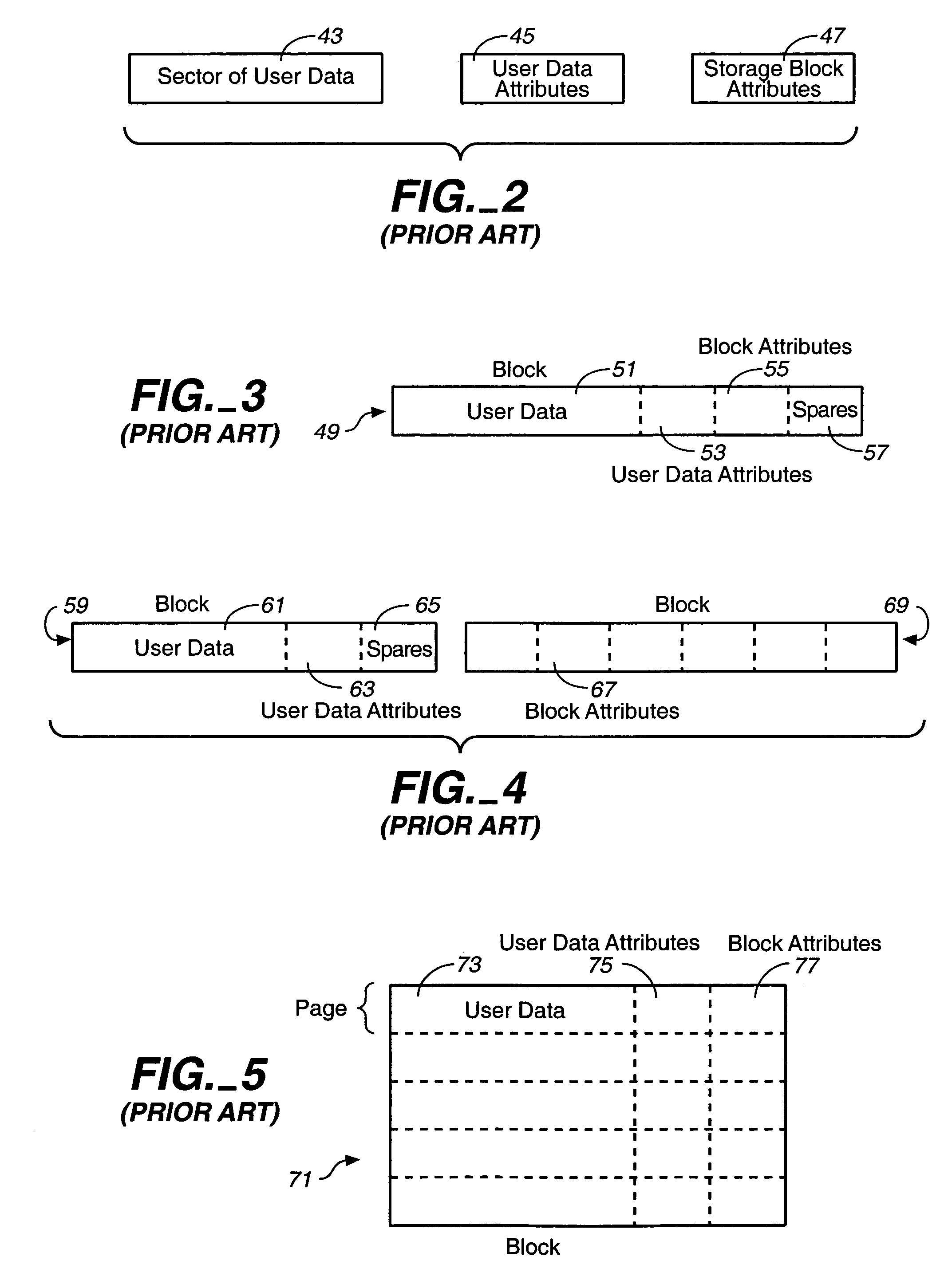

Techniques for operating non-volatile memory systems with data sectors having different sizes than the sizes of the pages and/or blocks of the memory

Inactive | US7032065B2 | Efficient use of; Improve performance; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; EEPROM; Non-volatile memory

A non-volatile memory system, such as a flash EEPROM system, is disclosed that is divided into a plurality of blocks, each block being divided into one or more pages, with sectors of data stored therein that are of a different size than either the pages or the blocks. One specific technique packs more sectors into a block than there are pages in that block. Error correction codes and other attribute data for a number of user data sectors are preferably stored together in pages and blocks different from those holding the user data.

Owner:SANDISK TECH LLC
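
The packing idea can be illustrated with a minimal Python sketch. The geometry is hypothetical and not taken from the patent: 528-byte pages (512 data bytes plus 16 spare bytes), 32 pages per block, and 512-byte user-data sectors whose ECC and attribute data are assumed to live elsewhere. Under those assumptions a block's 32 × 528 = 16,896 bytes hold 33 sectors, one more than there are pages, at the cost of some sectors straddling a page boundary. `Geometry` and `sector_segments` are illustrative names only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Geometry:
    page_bytes: int       # physical page size (hypothetical value below)
    pages_per_block: int  # pages per erase block (hypothetical value below)
    sector_bytes: int     # user-data sector size; ECC/attributes stored elsewhere

    @property
    def sectors_per_block(self) -> int:
        # Sectors are packed back to back, so a sector may straddle two pages.
        return (self.page_bytes * self.pages_per_block) // self.sector_bytes


def sector_segments(geo: Geometry, sector: int) -> list[tuple[int, int, int]]:
    """Return the (page, offset_in_page, length) pieces that hold one sector."""
    if not 0 <= sector < geo.sectors_per_block:
        raise ValueError("sector index outside the block")
    start = sector * geo.sector_bytes
    remaining = geo.sector_bytes
    segments = []
    while remaining:
        page, offset = divmod(start, geo.page_bytes)
        length = min(remaining, geo.page_bytes - offset)
        segments.append((page, offset, length))
        start += length
        remaining -= length
    return segments


geo = Geometry(page_bytes=528, pages_per_block=32, sector_bytes=512)
print(geo.sectors_per_block)    # 33 sectors in a 32-page block
print(sector_segments(geo, 1))  # [(0, 512, 16), (1, 0, 496)]
```

Reading a straddling sector touches two pages, which is the trade-off such a layout accepts in exchange for the extra sector per block.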

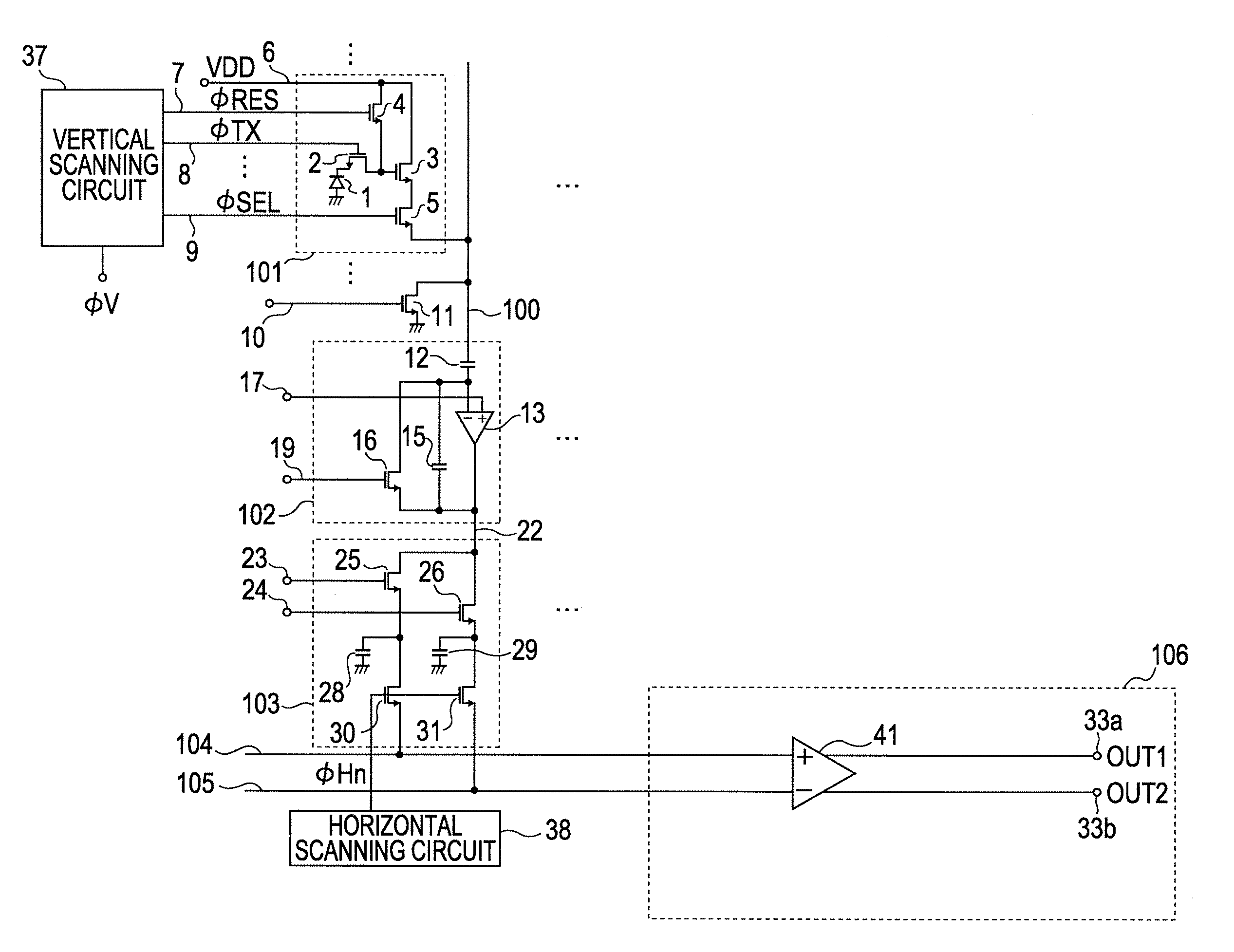

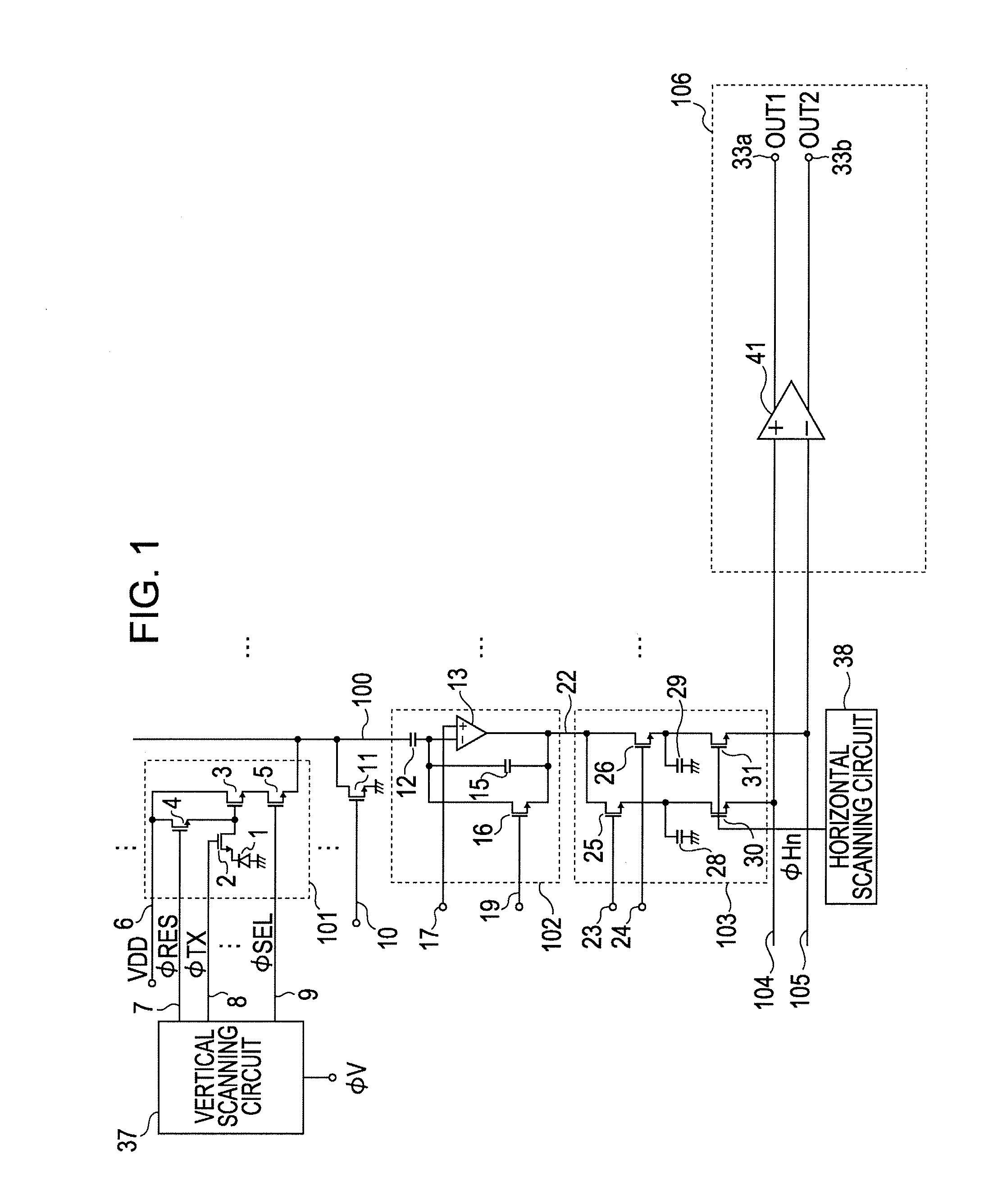

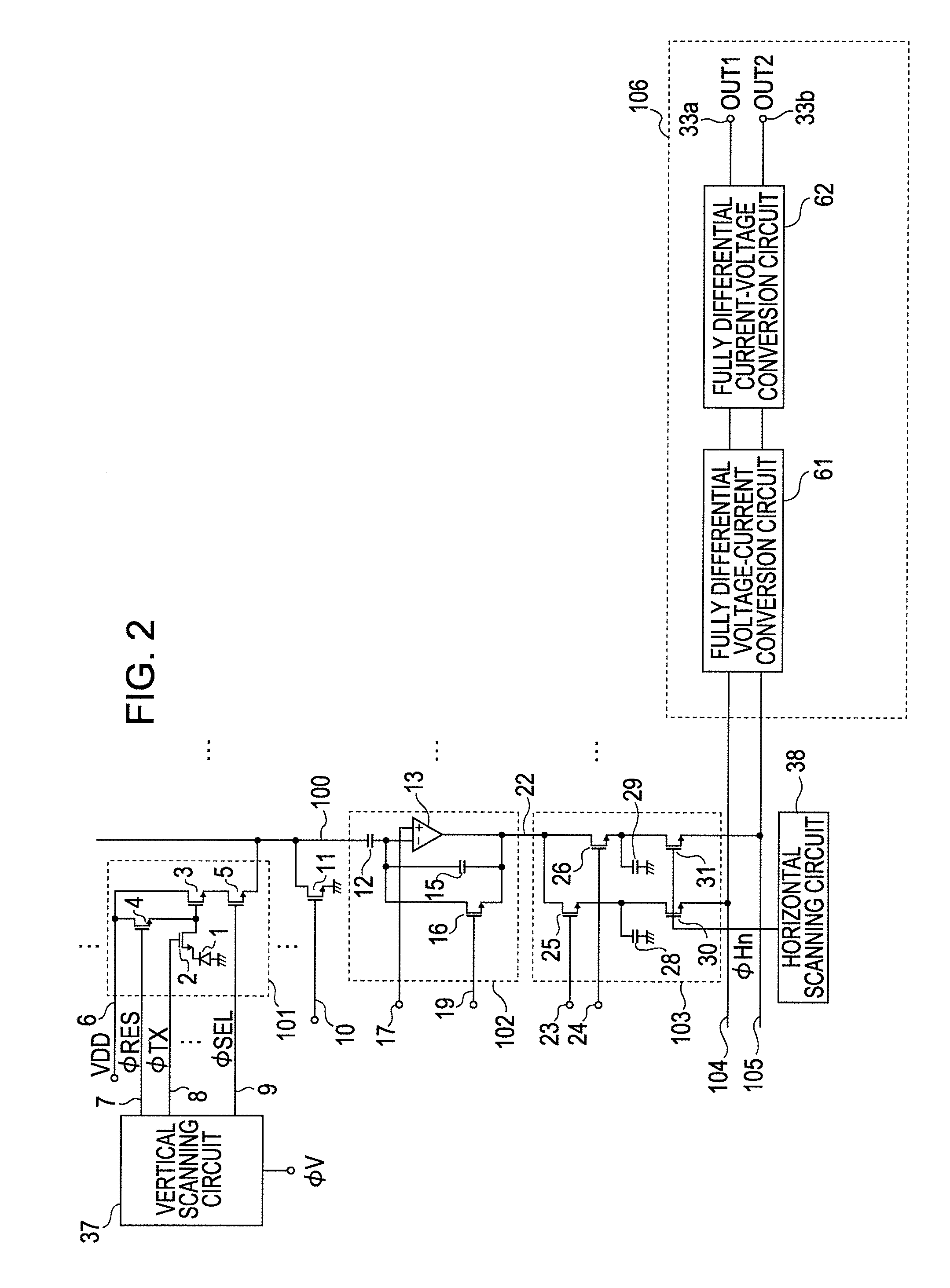

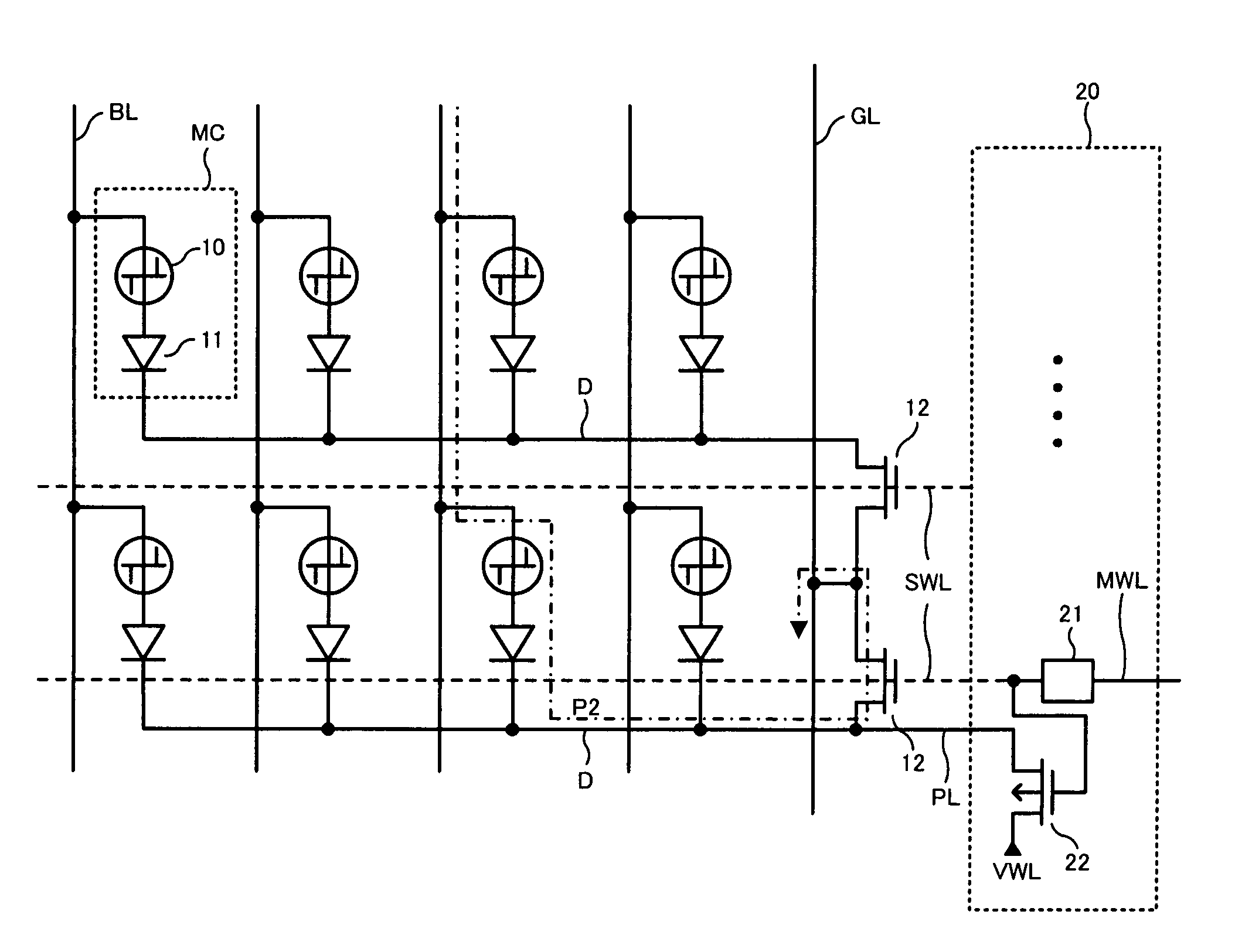

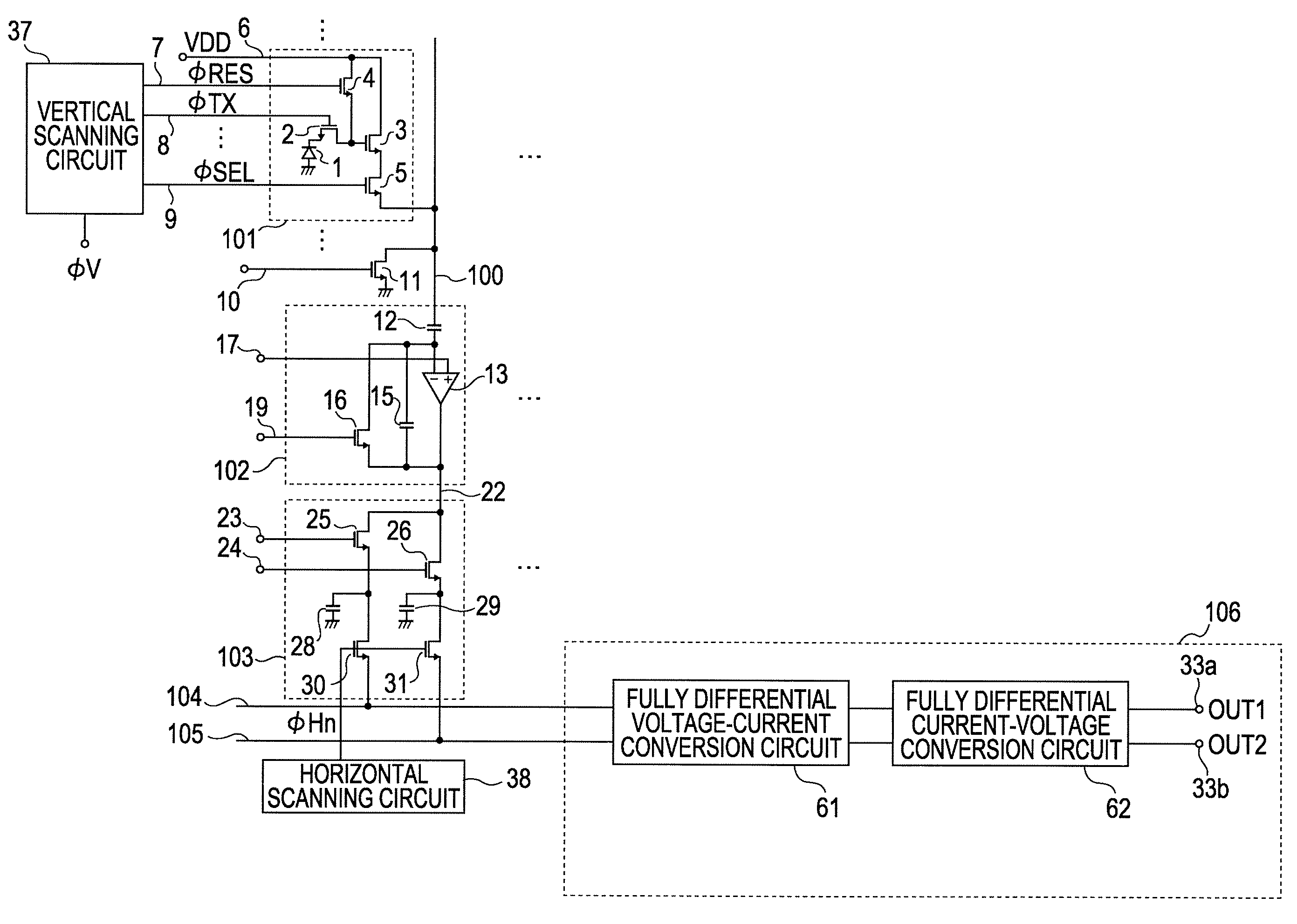

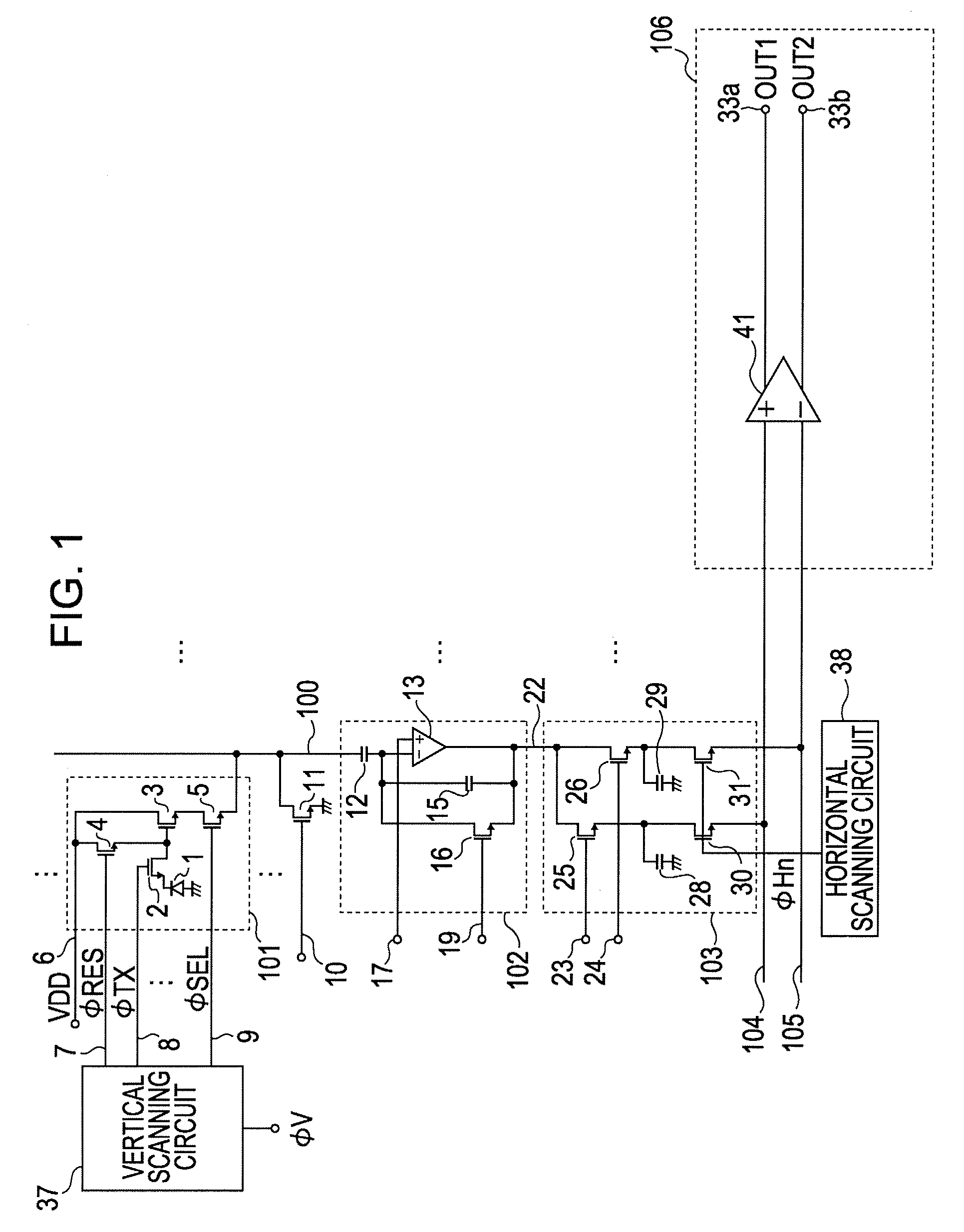

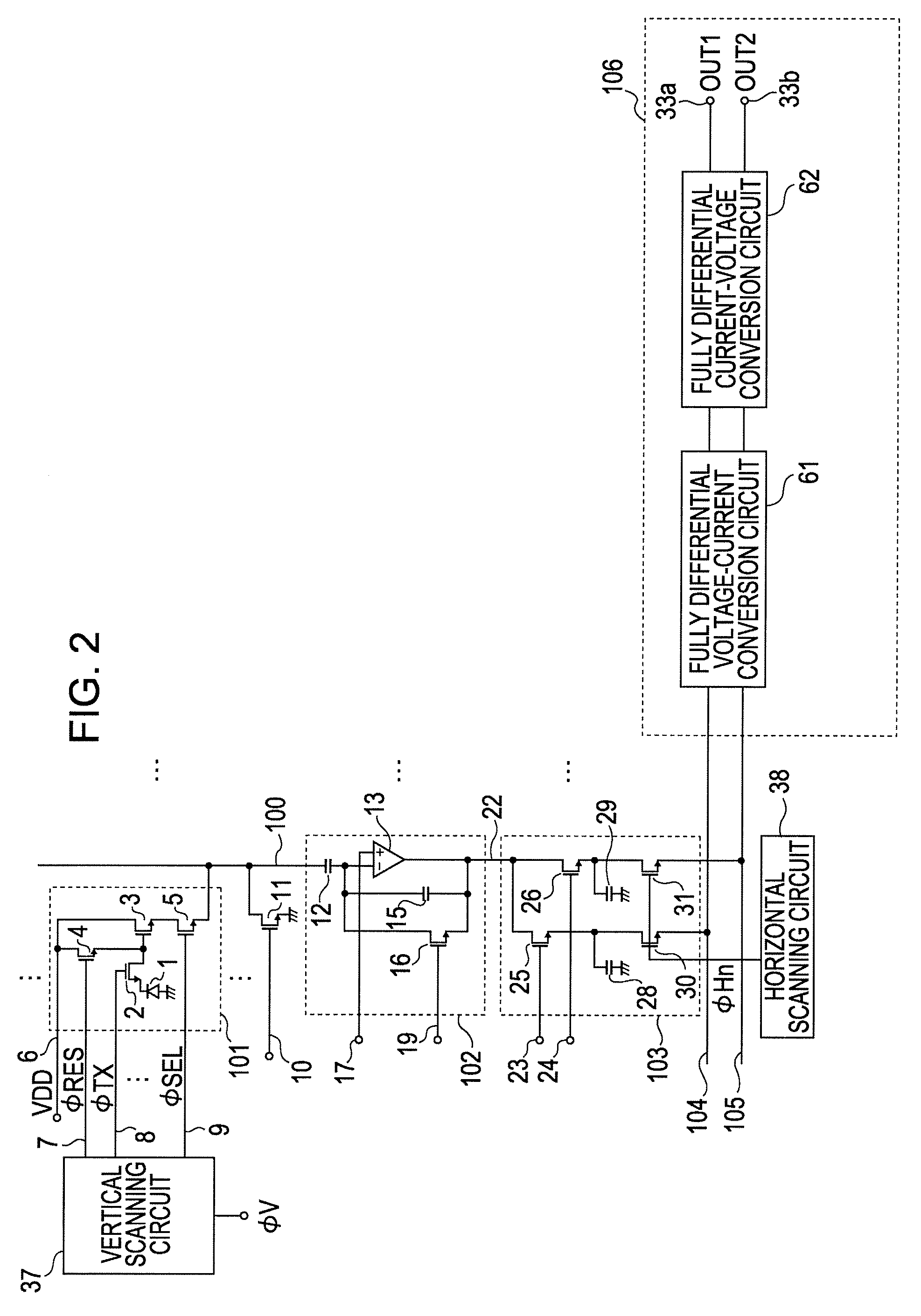

Photoelectric conversion apparatus and image sensing system

Inactive | US20080062295A1 | Decrease in reading speed can be suppressed; Reduce read; Television system details; Television system scanning details; Audio power amplifier; Photoelectric conversion

The invention provides a configuration including a fully differential amplifier in which a decrease in reading speed can be suppressed. A photoelectric conversion apparatus according to the present invention includes a pixel area in which a plurality of pixels are arranged, an amplifier configured to amplify a signal from the pixel area, and a plurality of signal paths for transmitting the signals from the pixel area to the amplifier. The amplifier is a fully differential amplifier that includes a plurality of input terminals, including a first input terminal and a second input terminal to which the signals from the plurality of signal paths are supplied, and a plurality of output terminals, including a first output terminal and a second output terminal; no feedback path is provided between the input terminals and the output terminals.

Owner:CANON KK

System and method for interactive multi-dimensional visual representation of information content and properties

Active | US8131779B2 | Rapid visualization; Improve performance; Digital data processing details; Multimedia data retrieval; Triage; Information analysis

A system and method of information retrieval and triage for information analysis provides an interactive, multi-dimensional and linked visual representation of information content and properties. A query interface plans and obtains result sets. A dimension interface specifies dimensions with which to categorize the result sets. Links among results of a result set, or among results of different sets, are automatically generated for linked selection viewing. Entities may be extracted and viewed, and entity relations determined to establish further links and dimensions. Properties encoded in representations of the results in the multi-dimensional views maximize display density. Multiple queries may be performed and compared. An integrated browser component responsive to the links is provided for viewing documents. Documents and other information from the result set may be used in an analysis component providing a space for visual thinking, to arrange the information in the space while maintaining links automatically.

Owner:UNCHARTED SOFTWARE INC

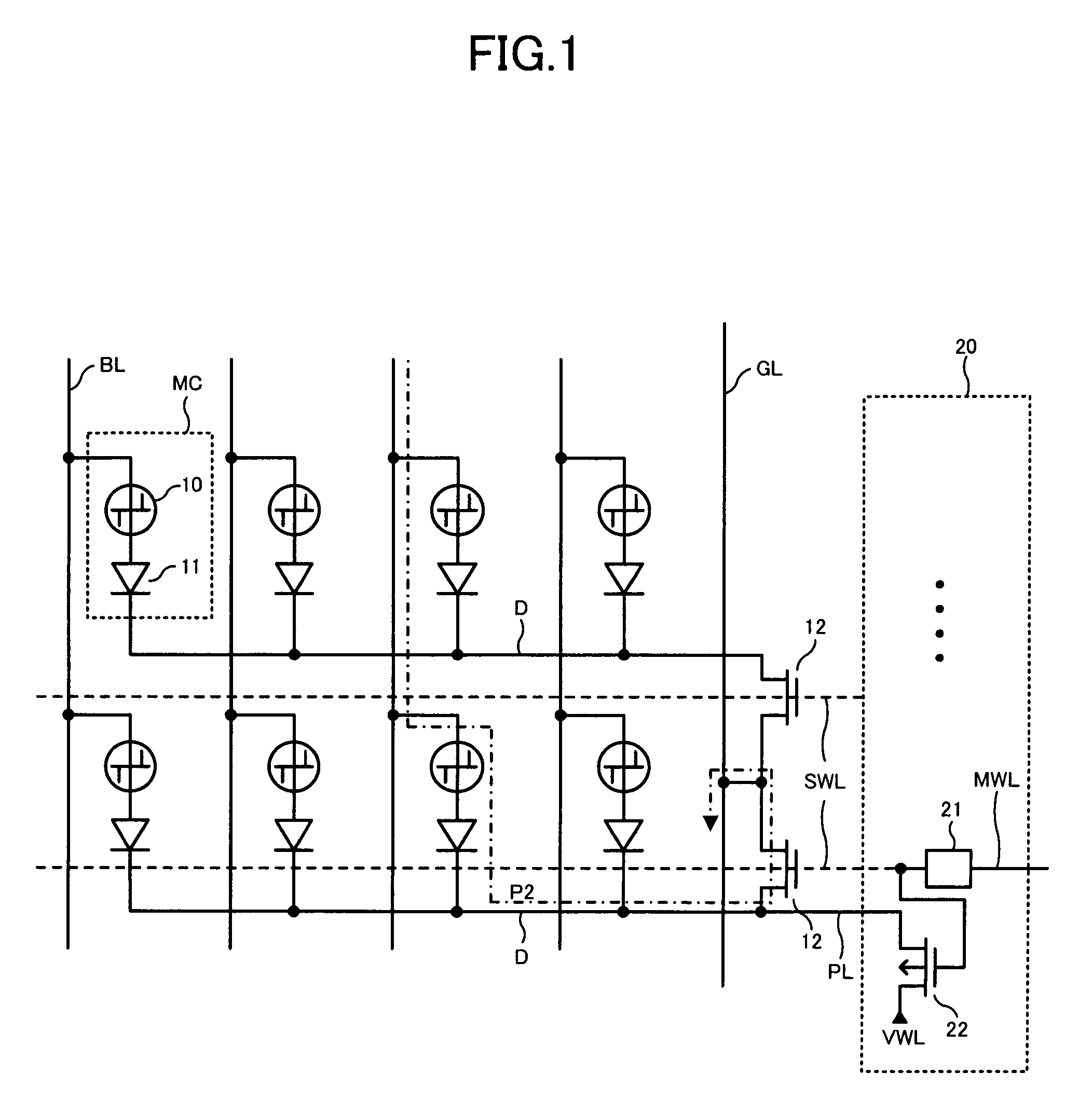

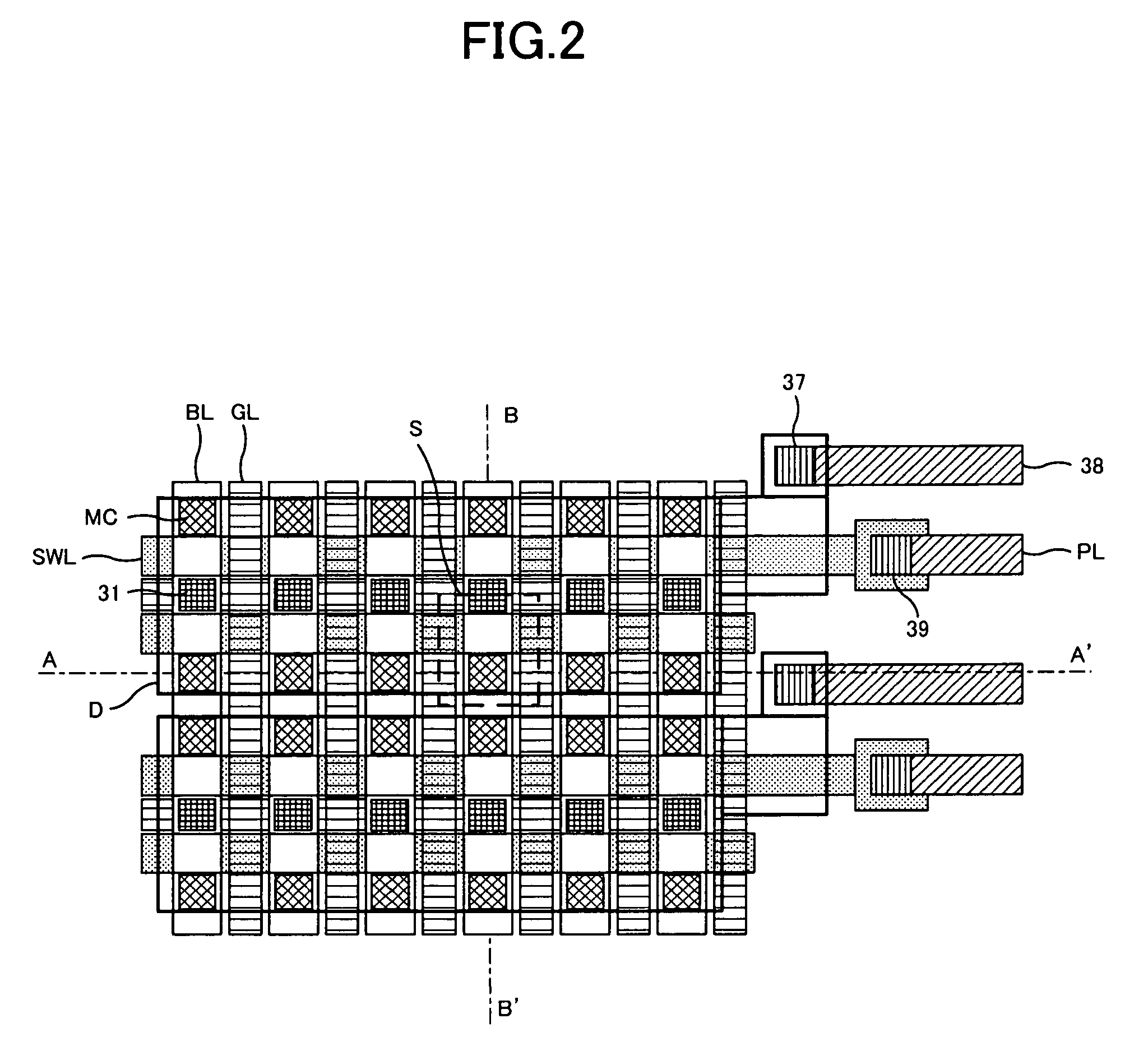

Phase change memory device

A phase change memory device comprises: a phase change element for rewritably storing data by changing a resistance state; a memory cell arranged at an intersection of a word line and a bit line and formed of the phase change element and a diode connected in series; a select transistor formed in a diffusion layer below the memory cell, for selectively controlling electric connection between an anode of the diode and a ground line in response to a potential of the word line connected to a gate; and a precharge circuit for precharging the diffusion layer below the memory cell corresponding to a non-selected word line to a predetermined voltage and for disconnecting the diffusion layer below the memory cell corresponding to a selected word line from the predetermined voltage.

Owner:LONGITUDE LICENSING LTD

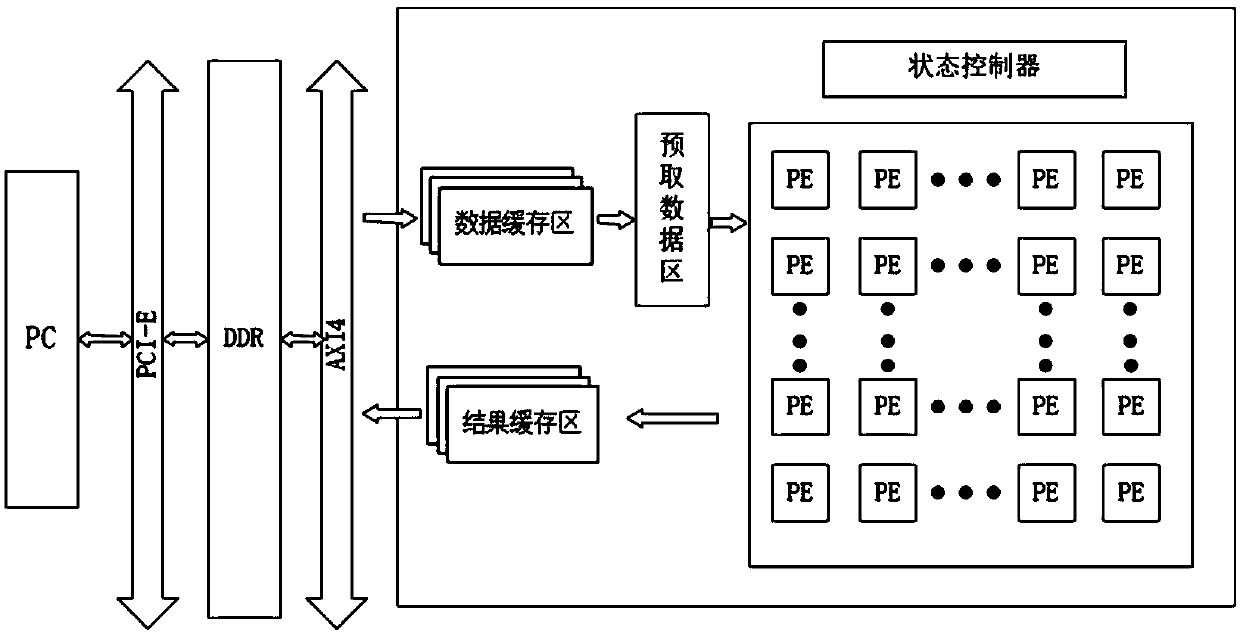

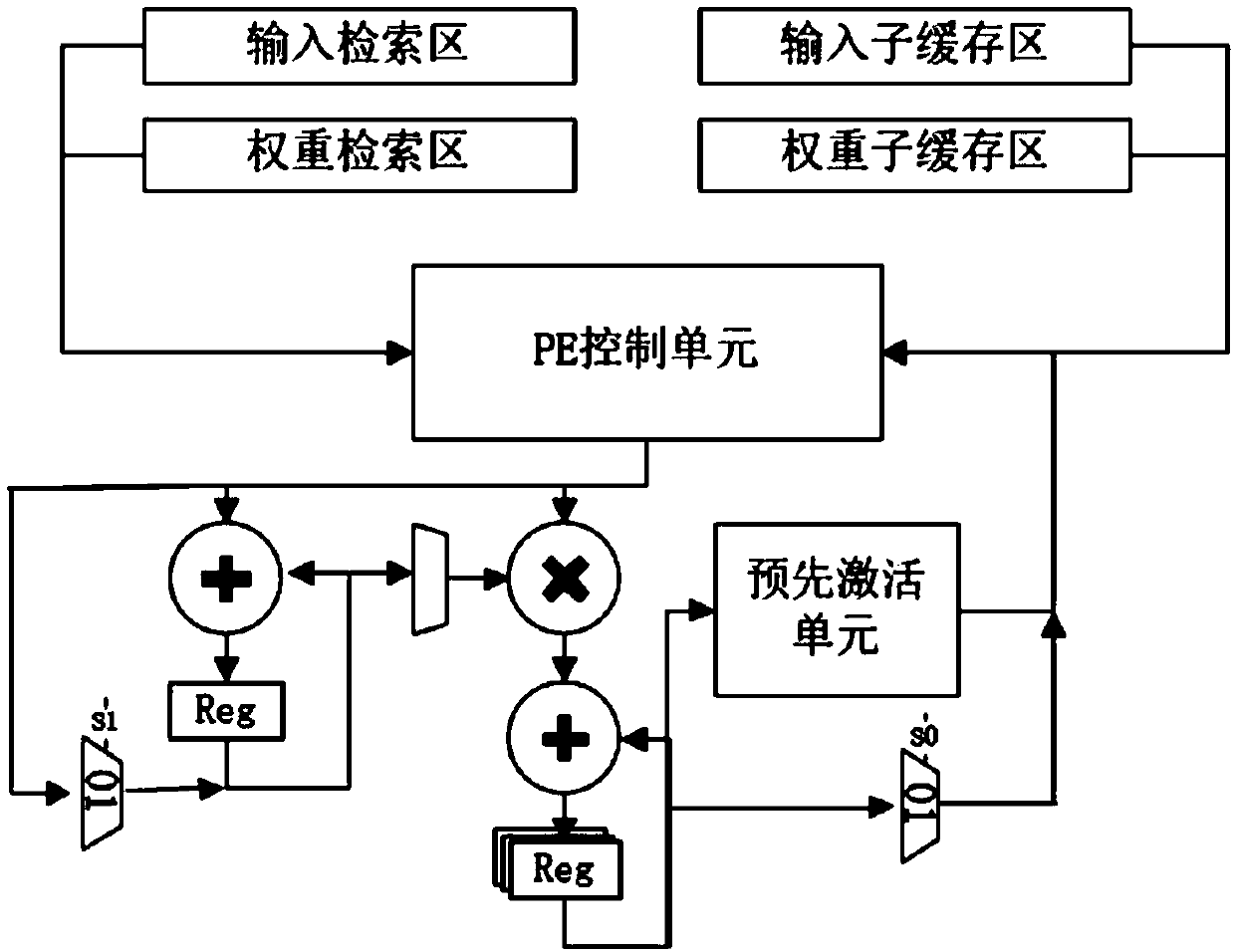

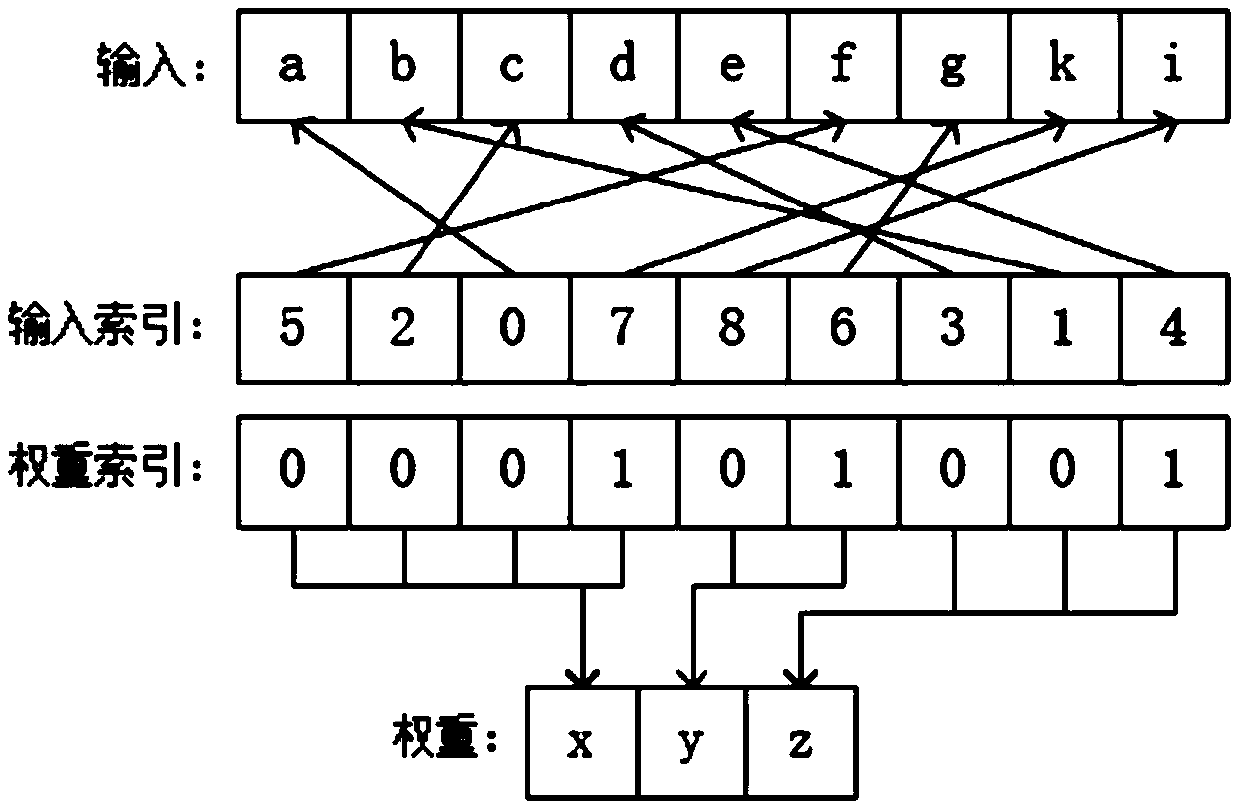

A convolutional neural network accelerator based on calculation optimization of an FPGA

Pending | CN109598338A | Reduce read; Improve real-time performance; Neural architectures; Physical realisation; Bus interface; Data needs

The invention discloses a convolutional neural network accelerator based on calculation optimization of an FPGA. The accelerator comprises an AXI4 bus interface, a data cache region, a pre-fetched data region, a result cache region, a state controller and a PE array. The data cache region caches feature map data, convolution kernel data and index values read from the external DDR memory through the AXI4 bus interface. The pre-fetched data region pre-fetches, from the feature map sub-cache region, the feature map data that must be fed into the PE array in parallel. The result cache region caches the calculation result of each row of PEs. The state controller controls the working state of the accelerator and performs the transitions between working states. The PE array reads the data in the pre-fetched data region and the convolution kernel sub-cache region to carry out the convolution operation. The accelerator exploits parameter sparsity, repeated weight data and the ReLU activation function to terminate redundant calculations early, which reduces the amount of computation, and it reduces energy consumption by reducing the frequency of memory accesses.

Owner:SOUTHEAST UNIV +2
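
The abstract does not say how redundant calculations are ended early. The Python sketch below shows one common way sparsity and ReLU can be combined, and it is an assumption rather than the patent's circuit: zero weights and zero activations are skipped, and, assuming input activations are non-negative (outputs of a previous ReLU), positive weights are processed first so that once only negative weights remain and the partial sum is already ≤ 0, the ReLU output is known to be 0. Reuse of repeated weight data is not modeled. `relu_dot_early_exit` is a hypothetical name.

```python
def relu_dot_early_exit(weights, activations):
    """Return (ReLU(sum(w * a)), number of multiply-accumulates performed).

    Assumes activations are non-negative, e.g. outputs of a previous ReLU layer.
    """
    if any(a < 0 for a in activations):
        raise ValueError("activations must be non-negative")
    # Sparsity: pairs with a zero weight or zero activation contribute nothing.
    pairs = [(w, a) for w, a in zip(weights, activations) if w != 0 and a != 0]
    # Process positive weights first; after them, every remaining term is <= 0.
    pairs.sort(key=lambda pair: pair[0], reverse=True)
    total = 0.0
    macs = 0
    for w, a in pairs:
        if w < 0 and total <= 0:
            # The partial sum can only shrink from here, so ReLU will output 0.
            return 0.0, macs
        total += w * a
        macs += 1
    return max(total, 0.0), macs


print(relu_dot_early_exit([-1.0, -2.0, 0.5], [1.0, 1.0, 1.0]))  # (0.0, 2)
```

In the example, the third multiply-accumulate is skipped because the result is already known to be clipped to zero.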

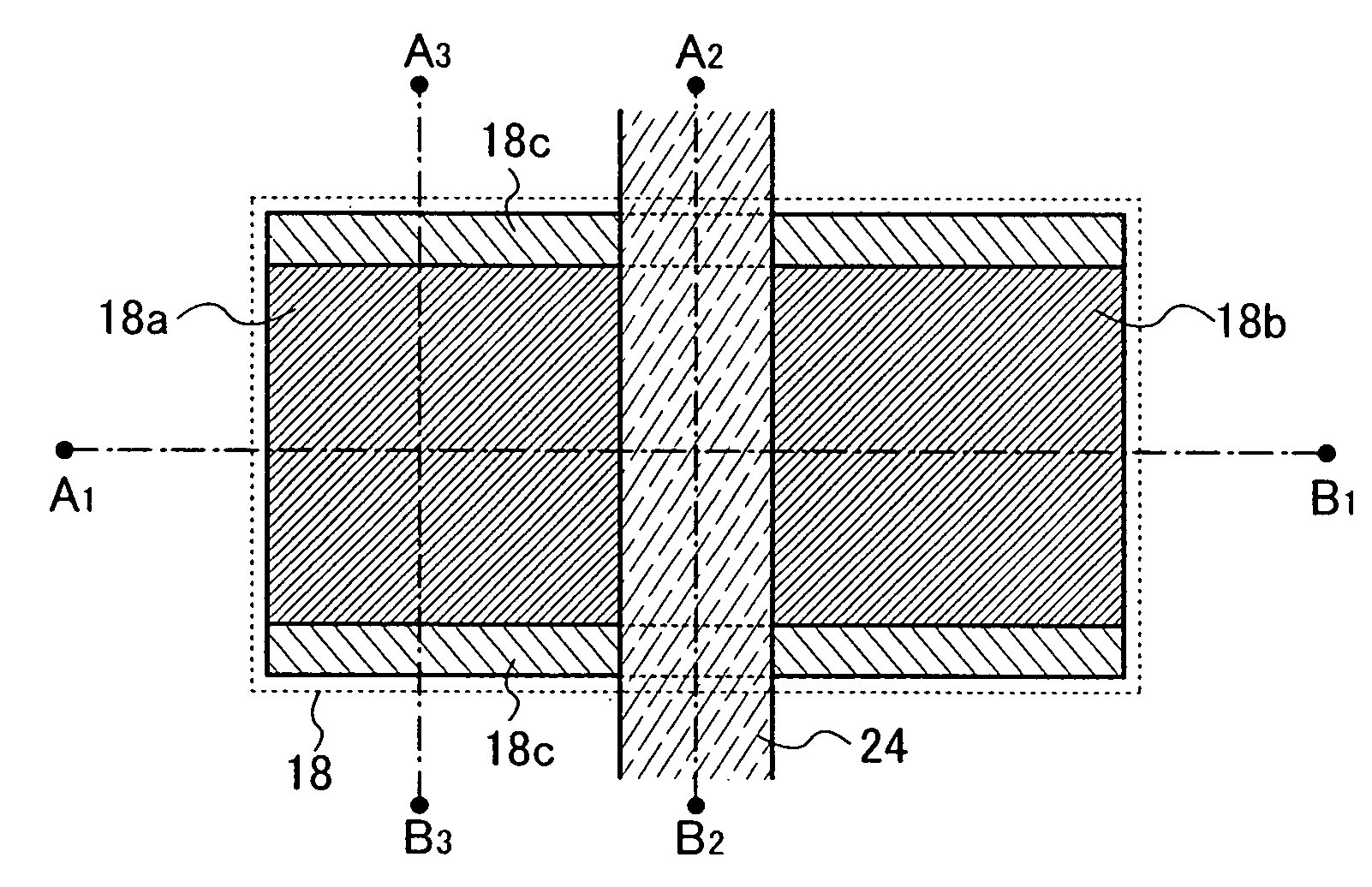

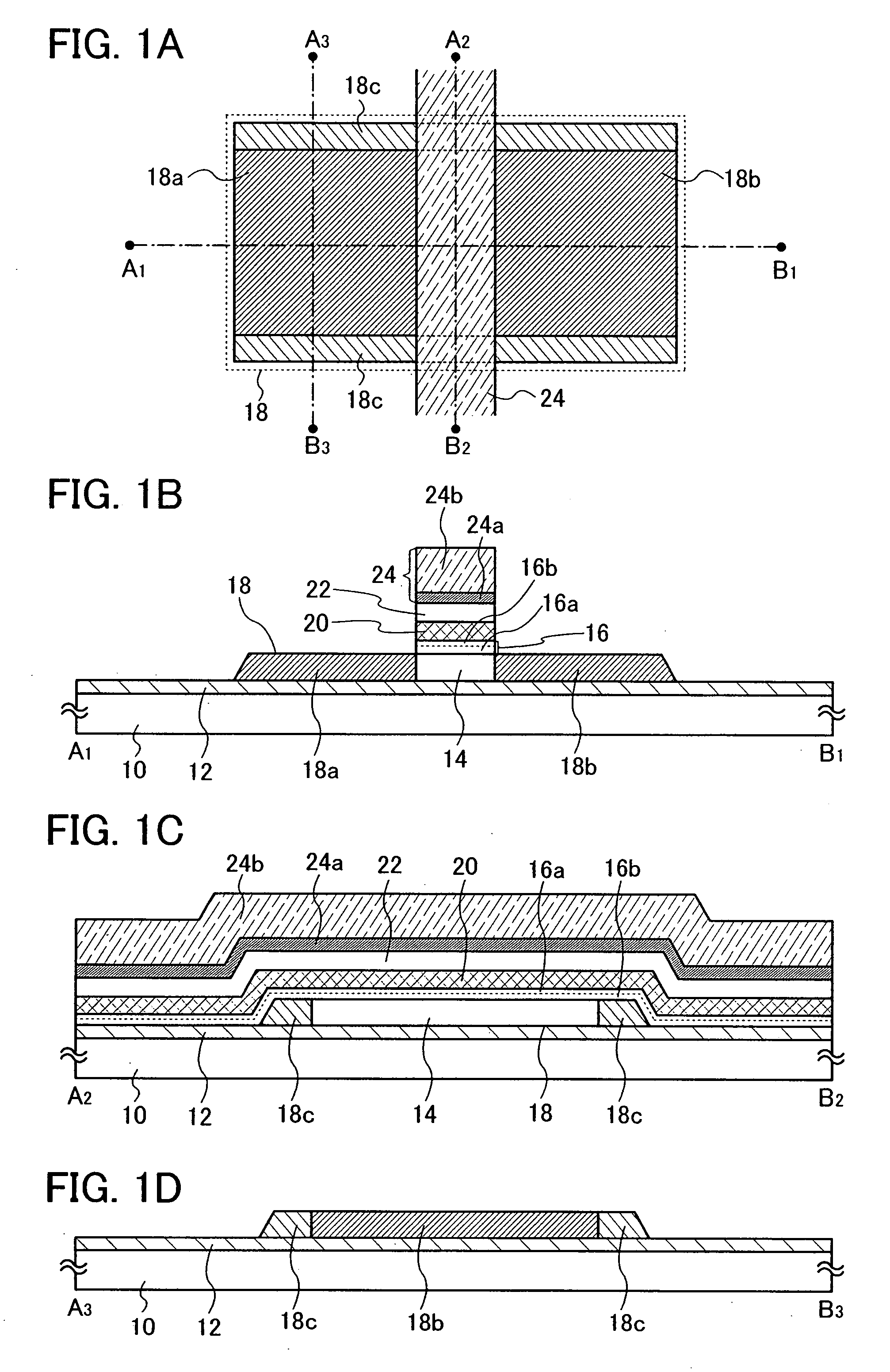

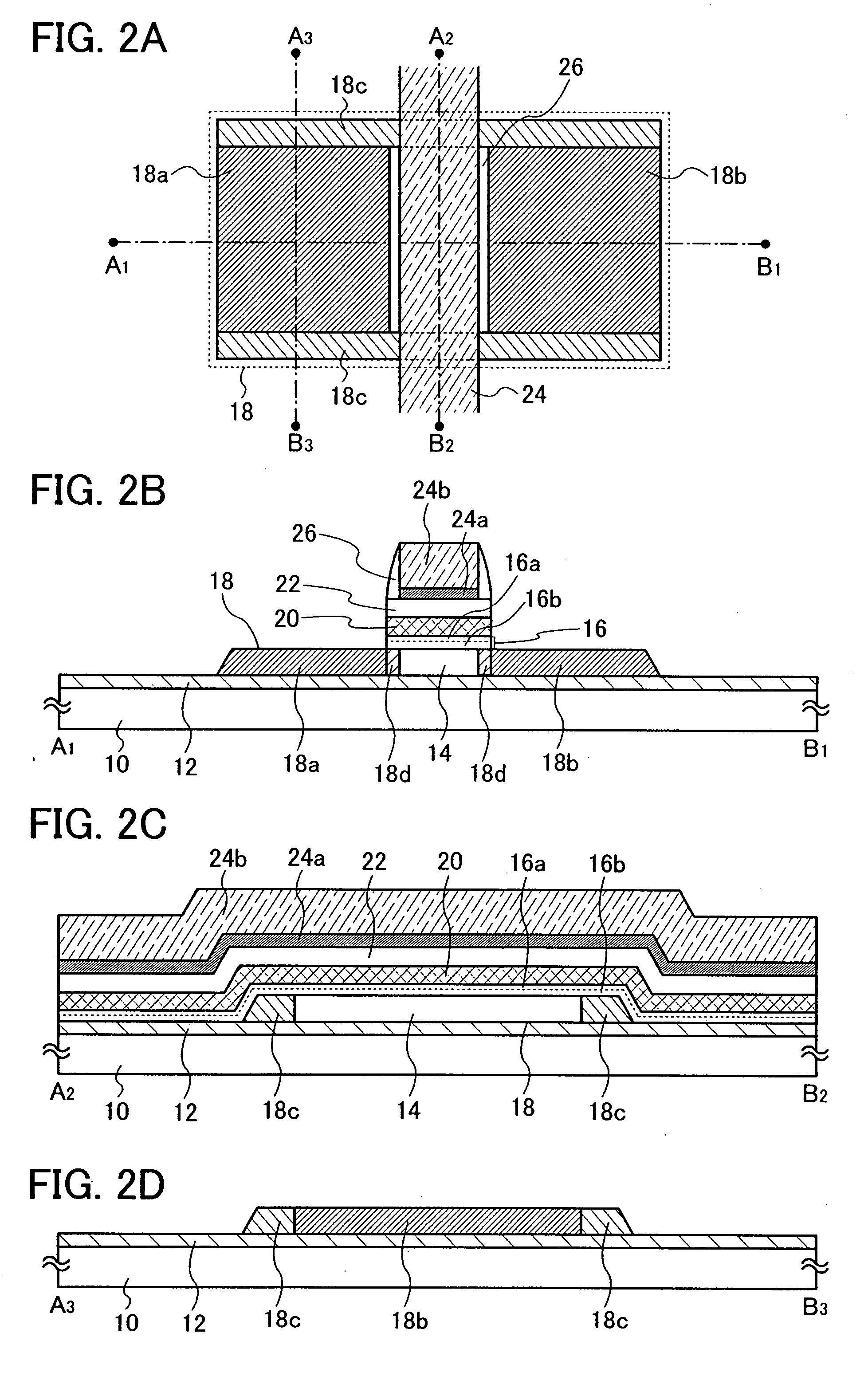

Nonvolatile semiconductor memory device and manufacturing method thereof

Inactive | US20070228420A1 | Improve reliability; Reduce read; Semiconductor/solid-state device details; Solid-state devices; Impurity; Semiconductor

A nonvolatile semiconductor memory device is provided in such a manner that a semiconductor layer is formed over a substrate, a charge accumulating layer is formed over the semiconductor layer with a first insulating layer interposed therebetween, and a gate electrode is provided over the charge accumulating layer with a second insulating layer interposed therebetween. The semiconductor layer includes a channel formation region provided in a region overlapping with the gate electrode, a first impurity region for forming a source region or drain region, which is provided to be adjacent to the channel formation region, and a second impurity region provided to be adjacent to the channel formation region and the first impurity region. A conductivity type of the first impurity region is different from that of the second impurity region.

Owner:SEMICON ENERGY LAB CO LTD

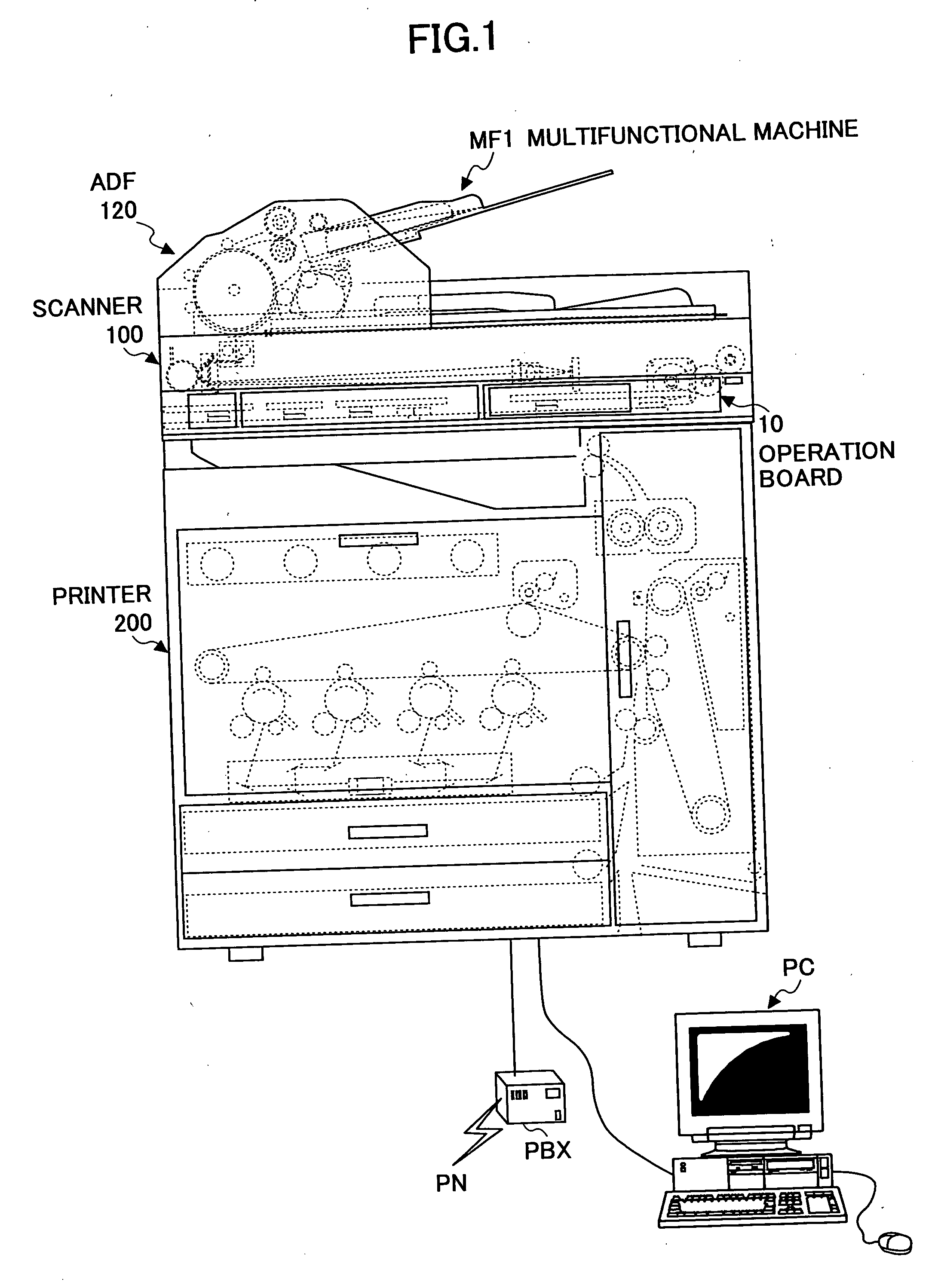

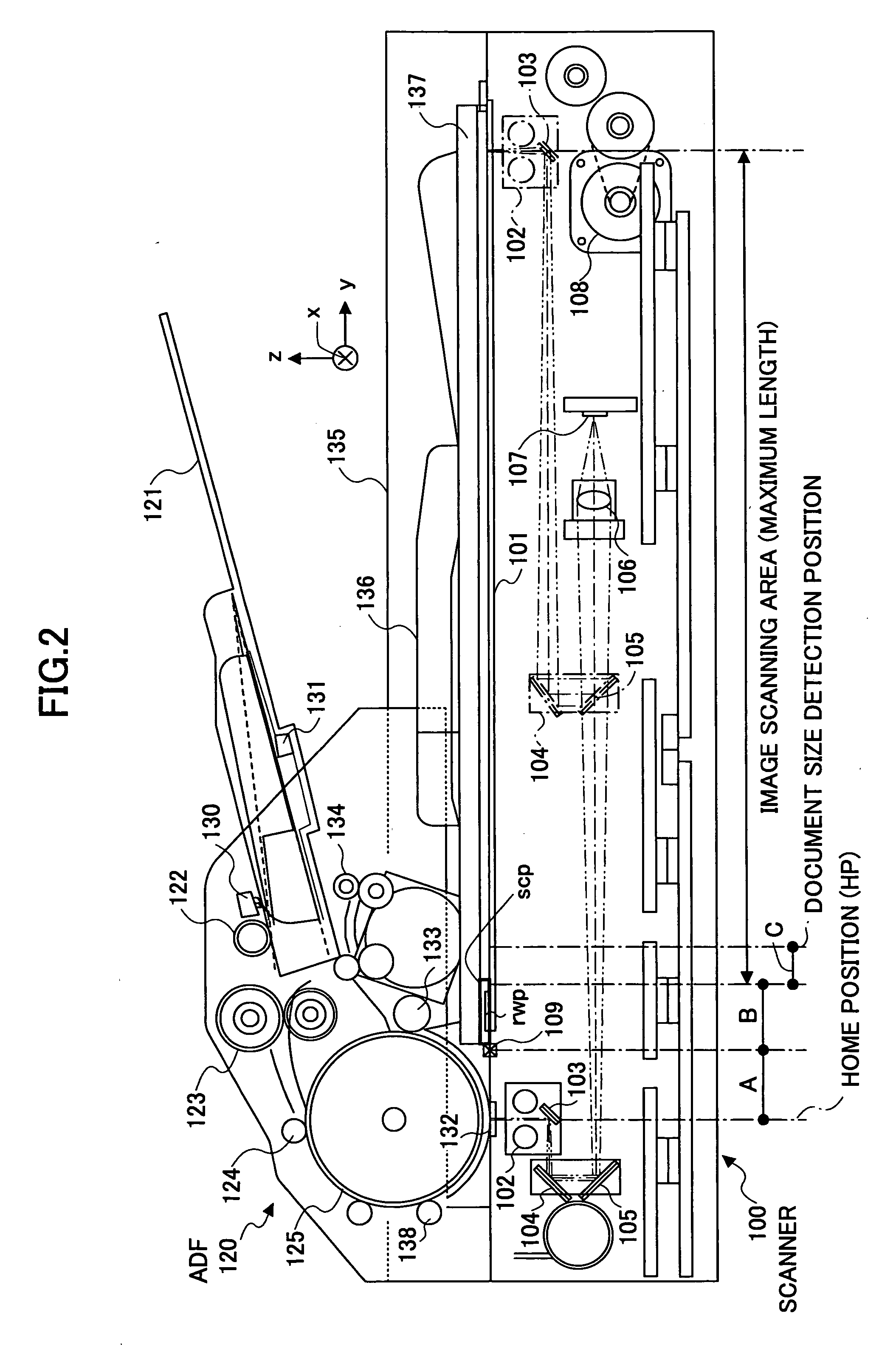

Document reading apparatus and an image formation apparatus

Inactive | US20060203306A1 | Task is not increased; Improve read quality; Digitally marking record carriers; Digital computer details; Current time; Signal amplification

A document reading apparatus is disclosed. The document reading apparatus includes a scanner; an energy-saving power supply unit; an energy-saving control unit; a clock IC; an output compensation unit for updating digital conversion parameters, including the image signal amplification gain, such that image data of a reference white board read by a CCD of the scanner are brought to a proper value; and an output compensation controlling unit. The output compensation controlling unit reads time data when the operation mode shifts from pause mode to waiting mode, stores the digital conversion parameters updated by the output compensation unit in a non-volatile memory, updates the operation time with the present time if the elapsed time since the previous operation time stored in the non-volatile memory is equal to or greater than a setup value, and uses the digital conversion parameters stored in the non-volatile memory as they are if that elapsed time is less than the setup value.

Owner:RICOH KK
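
The elapsed-time decision can be sketched in Python. The abstract leaves the exact ordering of steps open; this sketch assumes one reading, in which output compensation is re-run and the stored operation time refreshed only when the elapsed time reaches the setup value. `NonVolatileStore`, `on_pause_to_waiting` and the `recalibrate` callback are hypothetical stand-ins for the non-volatile memory, the controlling unit and the white-board calibration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass
class NonVolatileStore:
    """Stand-in for the non-volatile memory (hypothetical layout)."""
    last_operation_time: float
    params: Dict[str, float] = field(default_factory=dict)


def on_pause_to_waiting(
    store: NonVolatileStore,
    now: float,
    setup_value: float,
    recalibrate: Callable[[], Dict[str, float]],
) -> Tuple[Dict[str, float], bool]:
    """Return (parameters to use, whether output compensation was re-run)."""
    elapsed = now - store.last_operation_time
    if elapsed >= setup_value:
        # Enough time has passed: re-read the white reference and save the result.
        store.params = recalibrate()
        store.last_operation_time = now
        return store.params, True
    # Recent calibration: reuse the stored parameters as they are.
    return store.params, False
```

Skipping the white-board reading on short pauses is what avoids the extra read when returning from the energy-saving mode.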

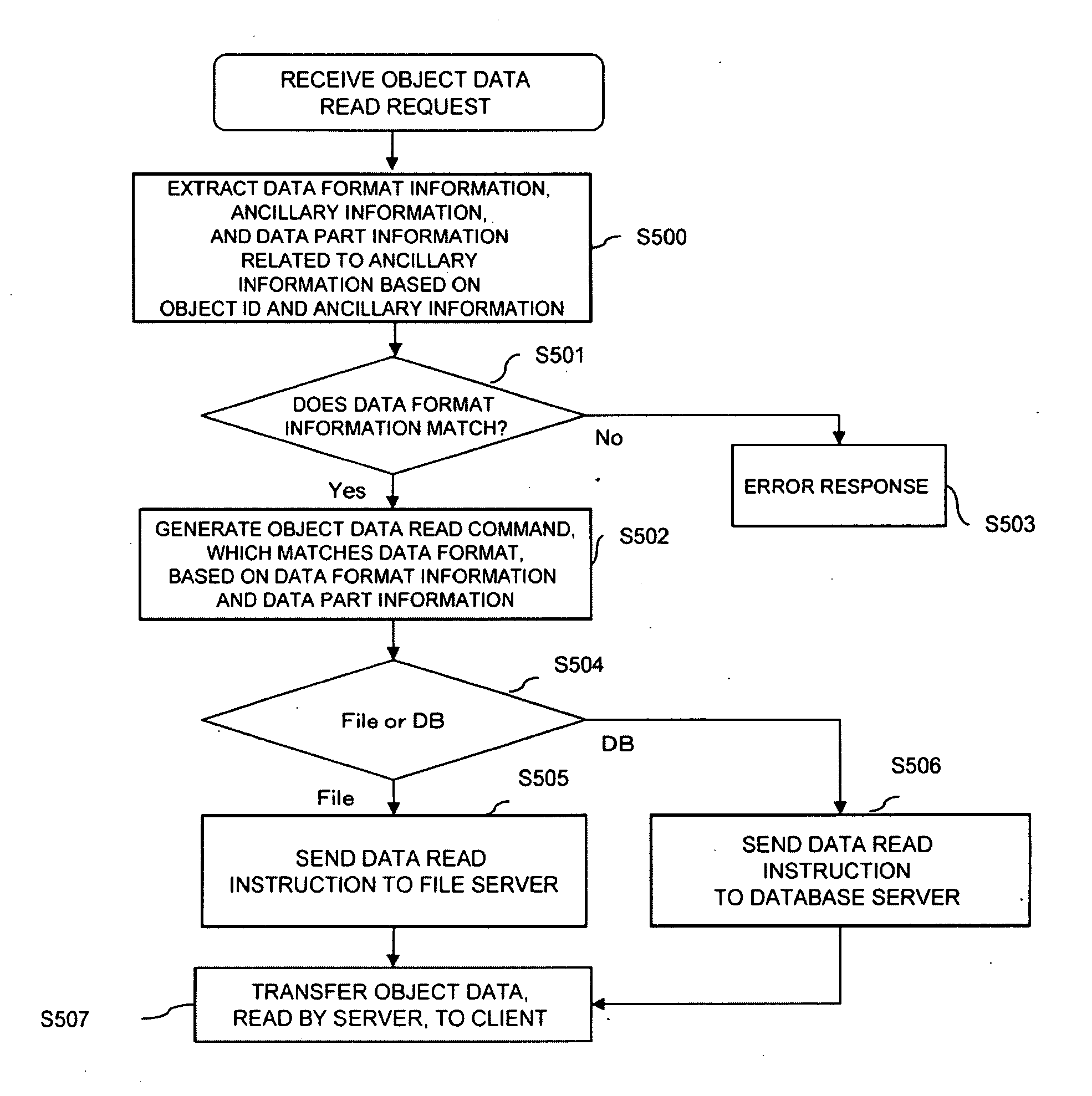

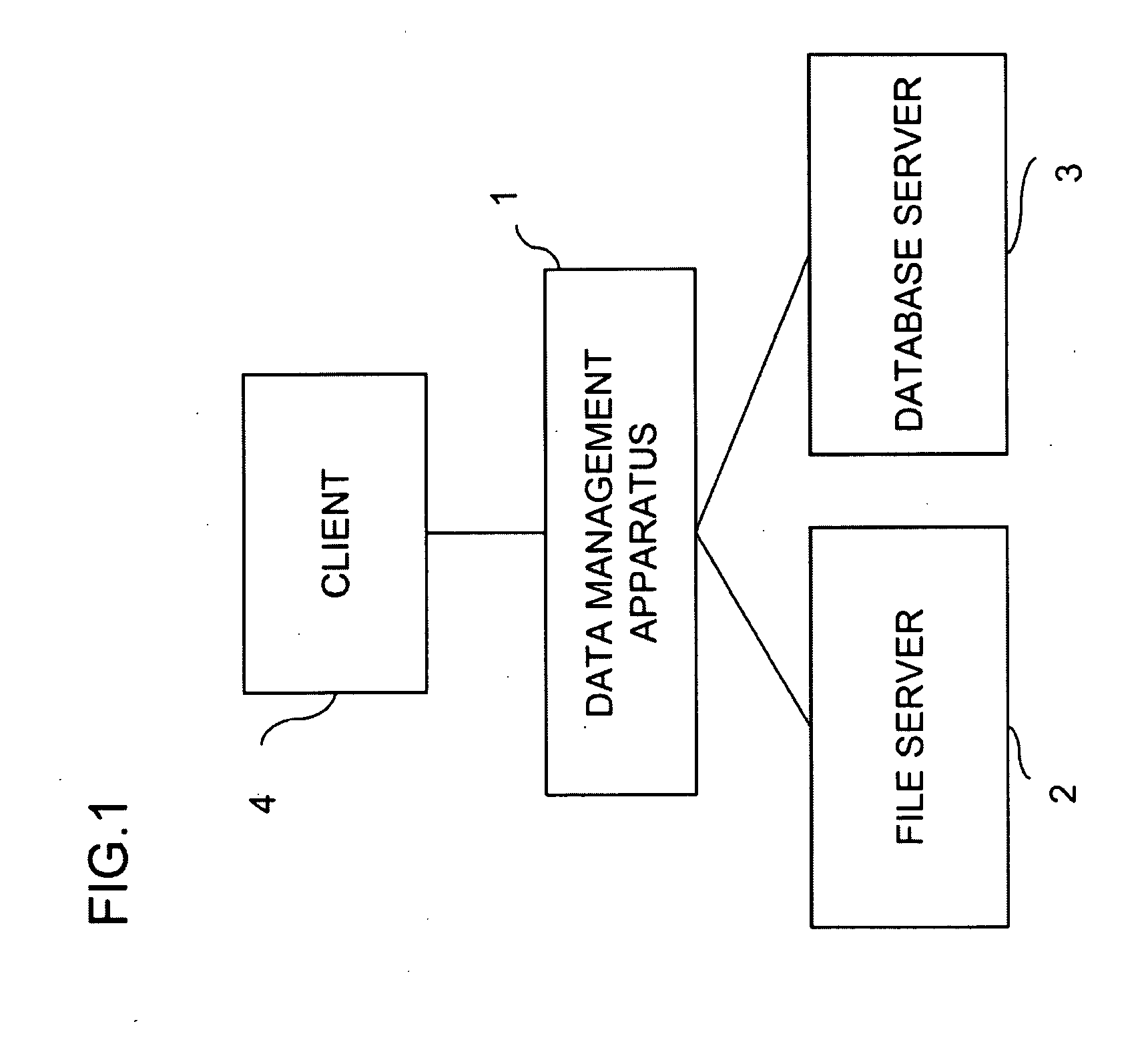

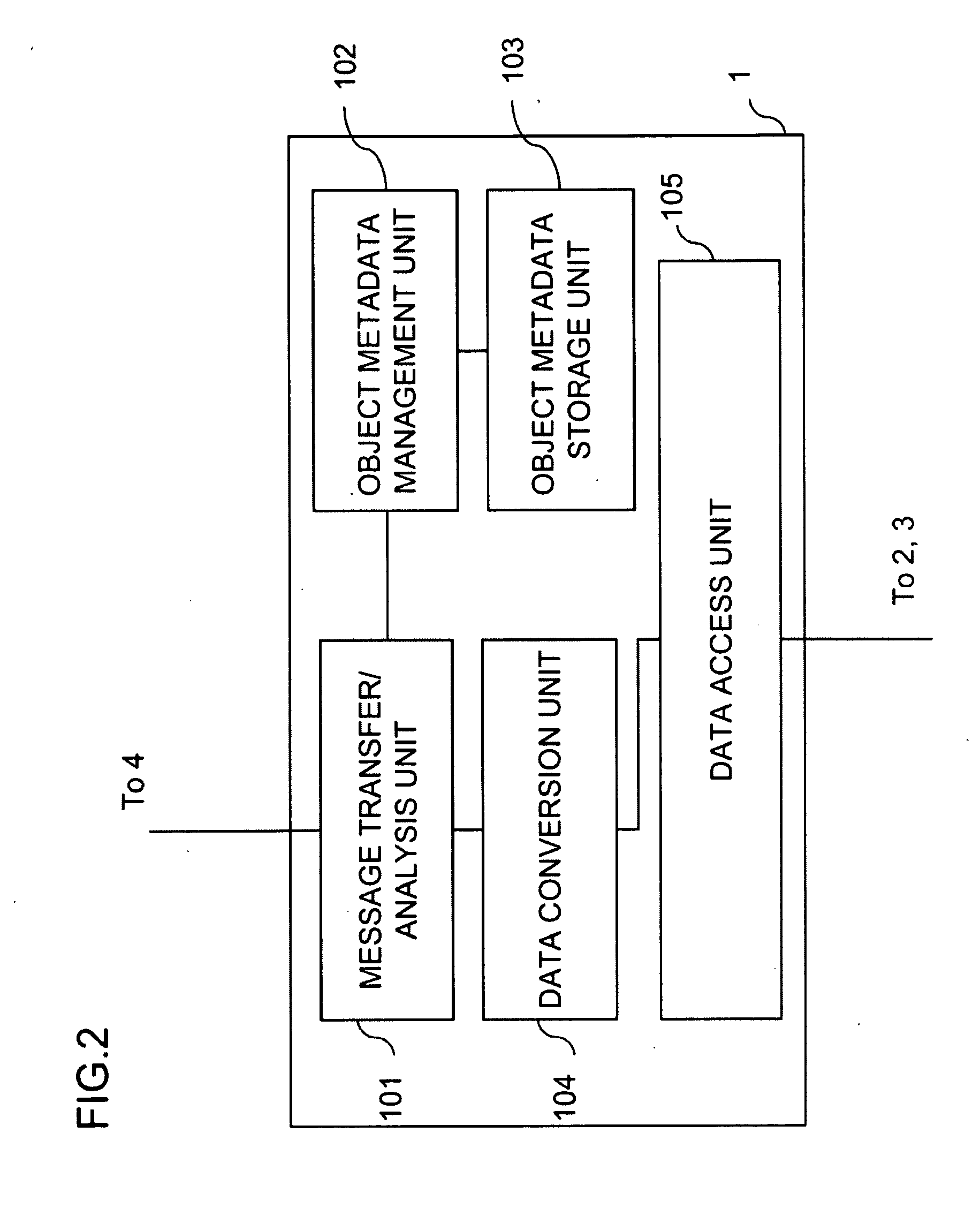

Data management apparatus, method and program

Inactive | US20100094803A1 | Reducing unnecessary data reading processing; Reduce read; Digital data processing details; Special data processing applications; Side information; Database server

Disclosed is a data management apparatus comprising a message transfer/analysis unit that receives an object data access request from a client and returns a response; an object metadata storage unit that stores, as metadata, a set of ancillary information on data for an object and a data area corresponding to the ancillary information; an object metadata management unit that performs reading, updating, and registration processing for the metadata; a data conversion unit that sends the data access request to a data access unit and extracts object data; and a data access unit that sends a data access command, specified by the data conversion unit, to a file server or a database server and sends the response data received from the file server or the database server to the data conversion unit.

Owner:NEC CORP
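
A minimal Python sketch of how metadata that records an object's data area can limit a read to just that area. The field names (`offset`, `length`) and the in-memory `io.BytesIO` standing in for the file server are assumptions for illustration; the abstract does not specify how the data area is encoded.

```python
import io
from dataclasses import dataclass
from typing import BinaryIO, Dict


@dataclass(frozen=True)
class ObjectMetadata:
    ancillary: Dict[str, str]  # ancillary information about the object
    offset: int                # start of the object's data area (hypothetical)
    length: int                # size of the data area in bytes (hypothetical)


class DataManager:
    def __init__(self, backing: BinaryIO) -> None:
        self._backing = backing  # stands in for the file server or database server
        self._metadata: Dict[str, ObjectMetadata] = {}

    def register(self, object_id: str, meta: ObjectMetadata) -> None:
        self._metadata[object_id] = meta

    def read_object(self, object_id: str) -> bytes:
        meta = self._metadata[object_id]
        # Read only the data area recorded in the metadata, not the whole file.
        self._backing.seek(meta.offset)
        return self._backing.read(meta.length)


manager = DataManager(io.BytesIO(b"HEADERpayload-Apayload-B"))
manager.register("b", ObjectMetadata({"type": "B"}, offset=15, length=9))
print(manager.read_object("b"))  # b'payload-B'
```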

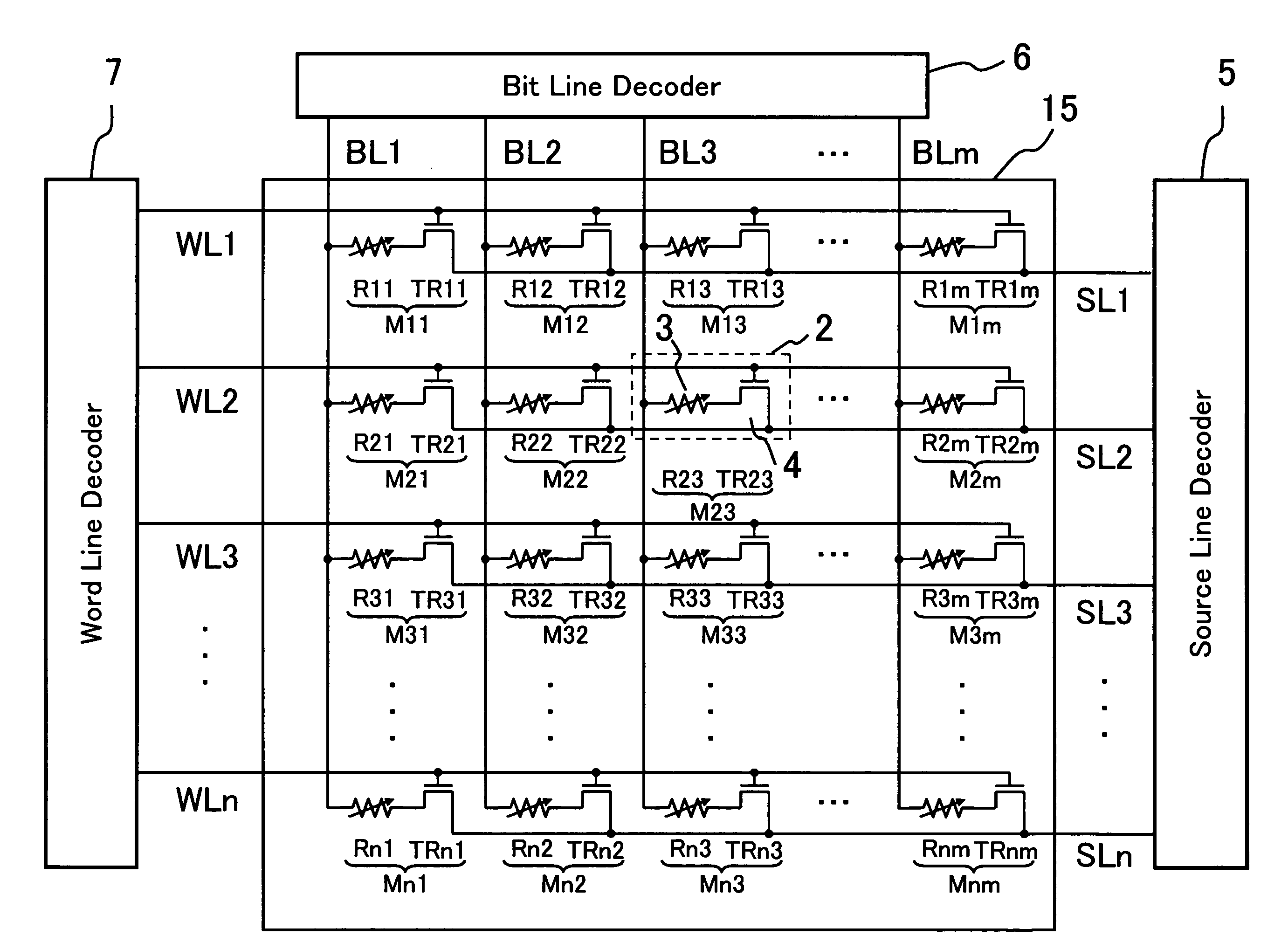

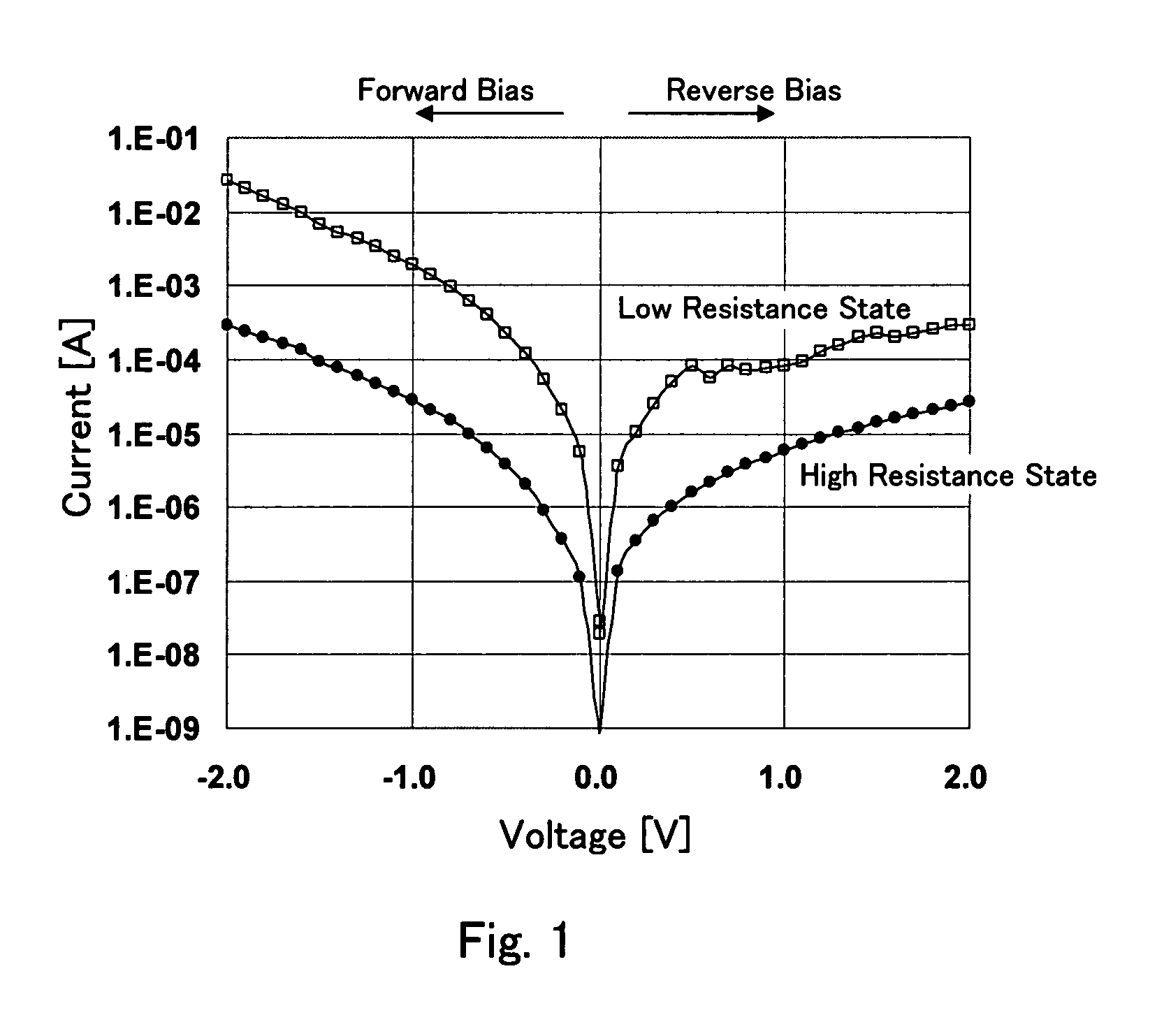

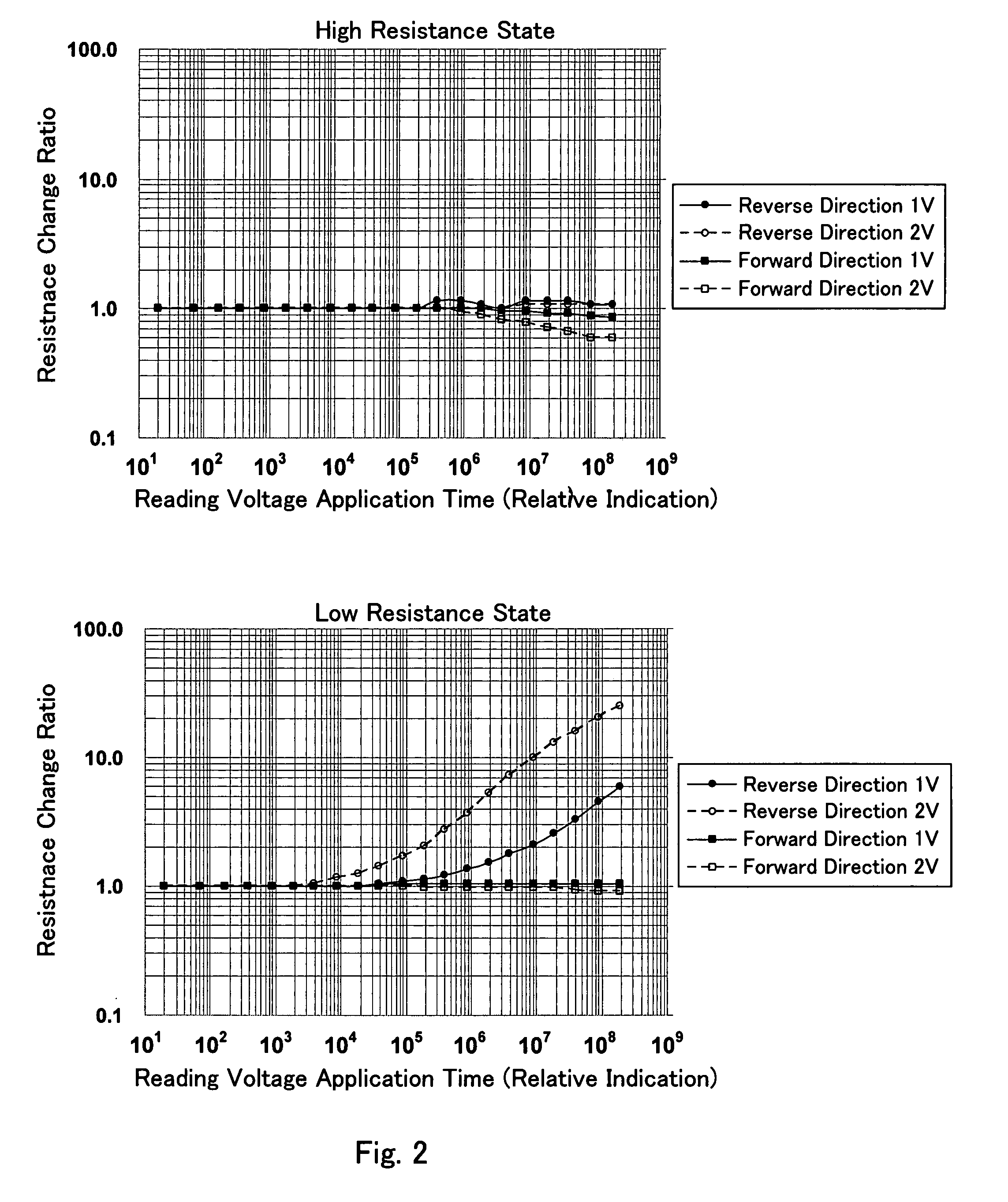

Nonvolatile semiconductor memory device

Inactive | US20080025072A1 | Keep reading margin; Avoid change; Read-only memories; Digital storage; Hemt circuits; Engineering

A nonvolatile semiconductor memory device comprises a memory cell including a variable resistance element changing its electric resistance by voltage application and having current-voltage characteristics in which a positive bias current flowing when a positive voltage is applied from one electrode as a reference electrode to the other electrode through an incorporated rectifier junction is larger than a negative bias current, a memory cell selection circuit for selecting the memory cell from the memory cell array, a voltage supply circuit for supplying a voltage to the memory cell so that a predetermined positive voltage corresponding to the reading operation is applied to the other electrode of the variable resistance element, in the reading operation, and a readout circuit for detecting the amount of the positive bias current and reading the information stored in the selected memory cell, in order to suppress the reading disturbance of the memory cell.

Owner:SHARP KK +1

Photoelectric conversion apparatus with fully differential amplifier

Inactive | US7808537B2 | Reduce read; Television system details; Television system scanning details; Audio power amplifier; Engineering

The invention provides a configuration including a fully differential amplifier in which a decrease in reading speed can be suppressed. A photoelectric conversion apparatus according to the present invention includes a pixel area in which a plurality of pixels are arranged, an amplifier configured to amplify a signal from the pixel area, and a plurality of signal paths for transmitting the signals from the pixel area to the amplifier. The amplifier is a fully differential amplifier that includes a plurality of input terminals, including a first input terminal and a second input terminal to which the signals from the plurality of signal paths are supplied, and a plurality of output terminals, including a first output terminal and a second output terminal; no feedback path is provided between the input terminals and the output terminals.

Owner:CANON KK

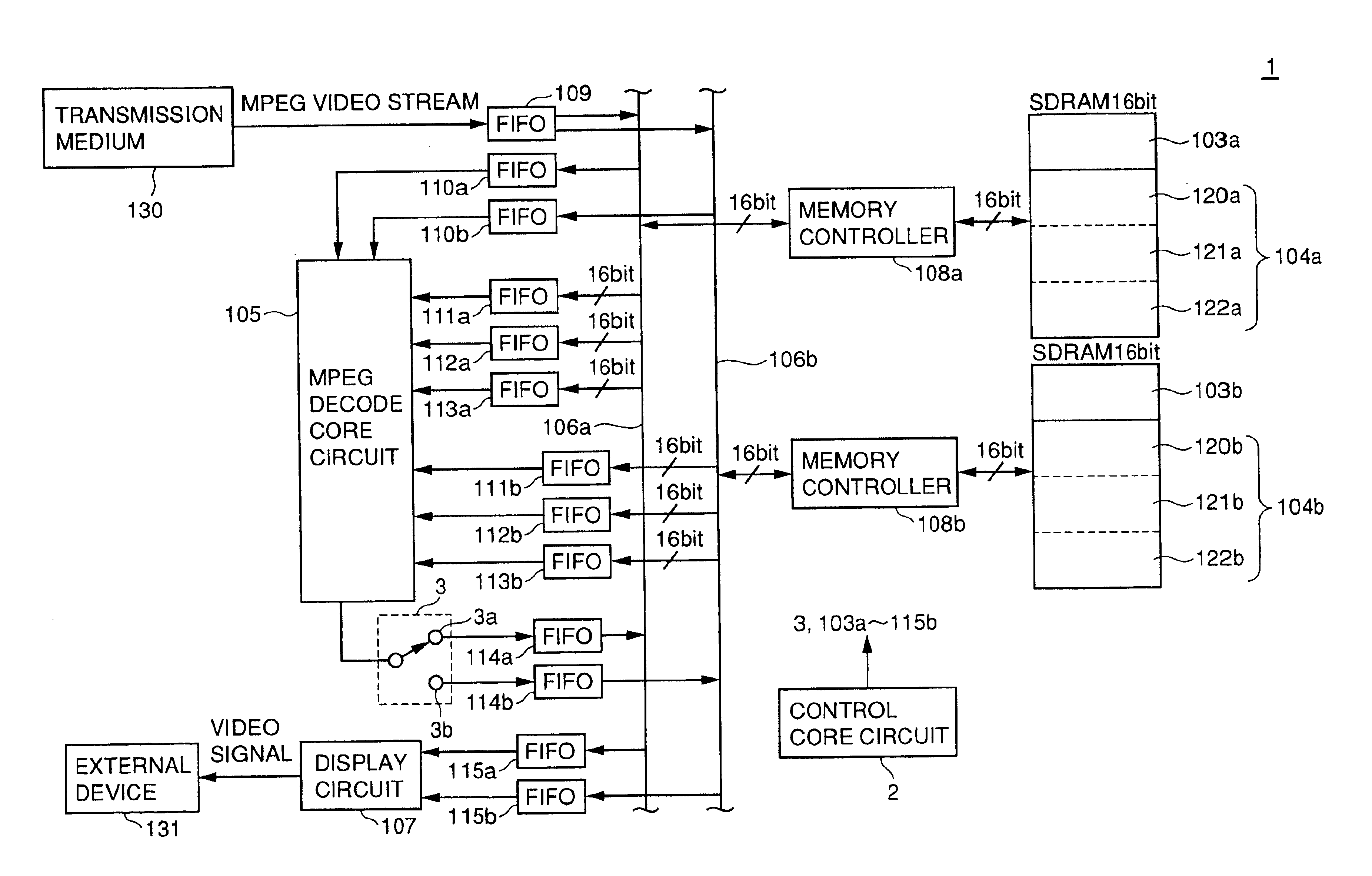

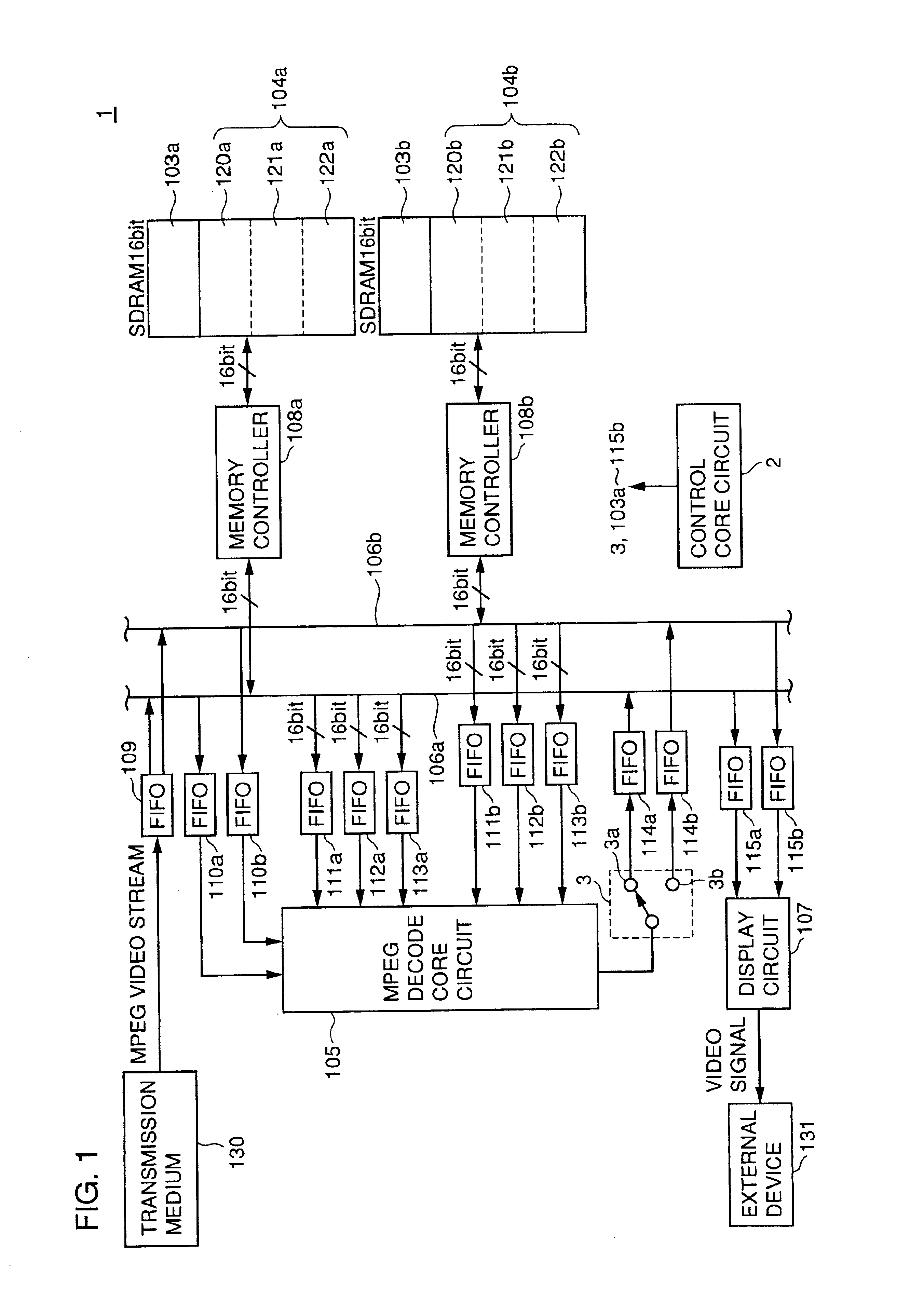

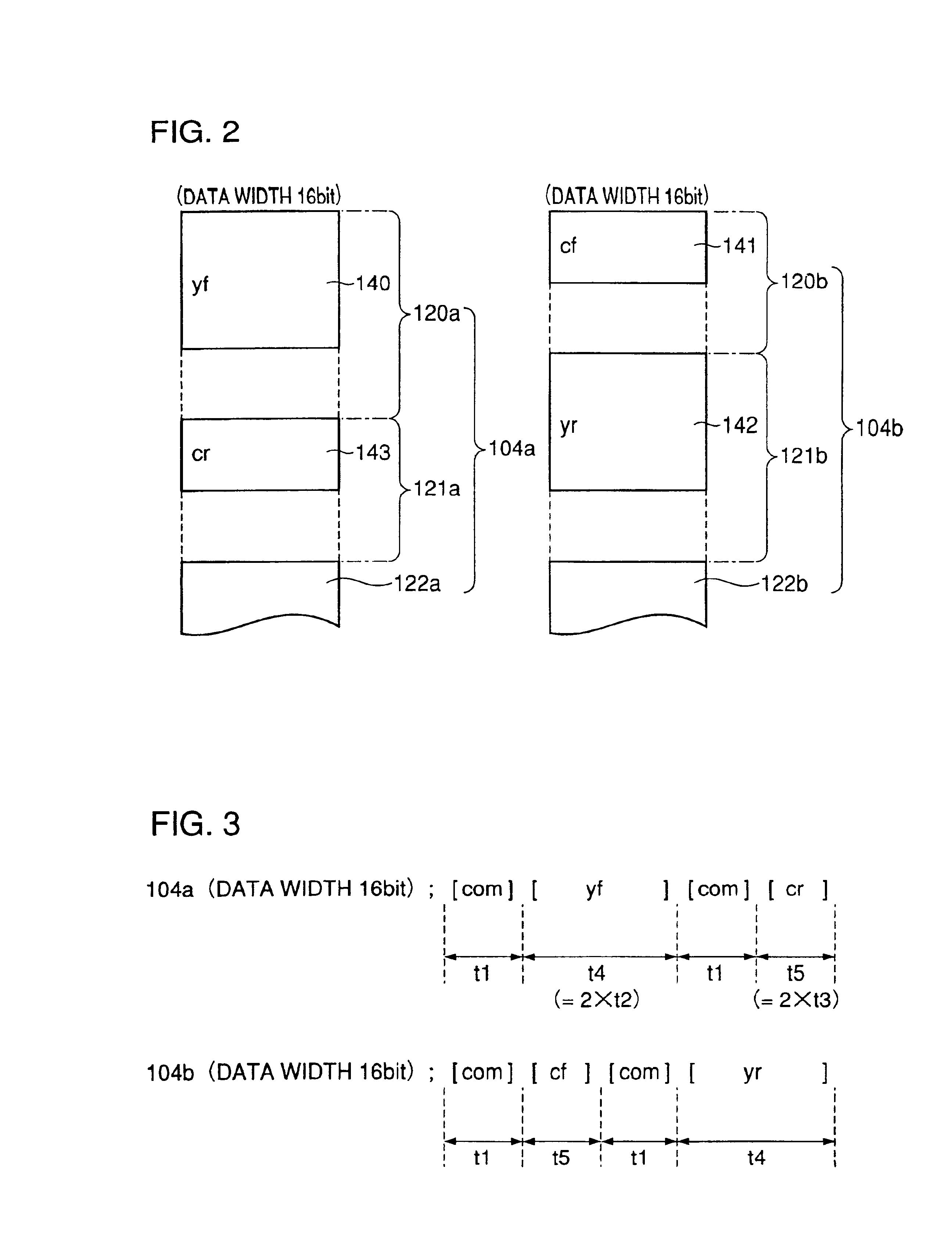

Video decoder

Inactive | US6871001B1 | High speed; Shorten the time period; Television system details; Color signal processing circuits; 16-bit; Discrete cosine transform

An MPEG video decoder 1 decodes an MPEG video stream using a discrete cosine transform together with motion-compensated prediction, which performs backward prediction and forward prediction. A frame buffer 104a is provided with a storage area for forward-reference luminance data used for the backward prediction and a storage area for rearward-reference color-difference data used for the forward prediction. A frame buffer 104b is provided with a storage area for forward-reference color-difference data used for the backward prediction and a storage area for rearward-reference luminance data used for the forward prediction. Memory accesses to the frame buffers 104a and 104b, each of which has a 16-bit input/output data bus, are performed in parallel.

Owner:SANYO ELECTRIC CO LTD
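
The buffer split can be checked with a small Python sketch that encodes the layout from the abstract as a lookup table (the table format and `buffers_for` are hypothetical). It shows that for either prediction direction, the luminance and color-difference reads go to different 16-bit buffers, so they can be issued in parallel.

```python
# Hypothetical encoding of the layout in the abstract: each frame buffer holds
# one luminance area and one color-difference area, for opposite predictions.
LAYOUT = {
    "104a": {("backward", "luminance"), ("forward", "color-difference")},
    "104b": {("backward", "color-difference"), ("forward", "luminance")},
}


def buffers_for(prediction: str) -> dict:
    """Map each component needed by one prediction to the buffer that holds it."""
    return {
        component: buffer
        for buffer, areas in LAYOUT.items()
        for direction, component in areas
        if direction == prediction
    }


for prediction in ("backward", "forward"):
    # Luminance and color-difference come from different buffers,
    # so the two reads can proceed at the same time.
    print(prediction, buffers_for(prediction))
```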

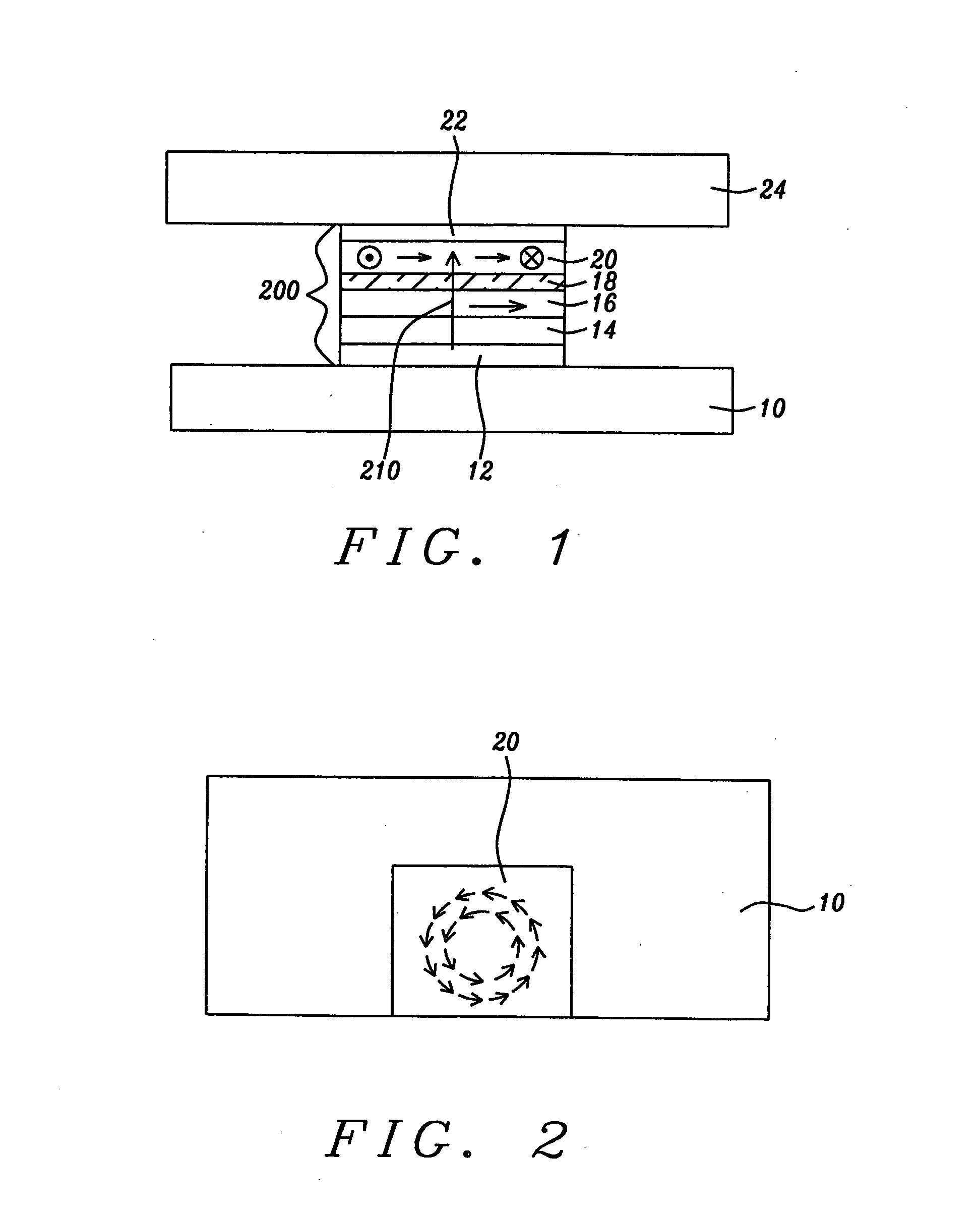

Method for fabricating a patterned synthetic longitudinal exchange biased GMR sensor

Inactive | US6857180B2 | Reduce contribution of signal A; Stabilizing bias point; Nanomagnetism; Electrical transducers; Magnetization; Exchange bias

Patterned, longitudinally and transversely antiferromagnetically exchange biased GMR sensors are provided which have narrow effective trackwidths and reduced side reading. The exchange biasing significantly reduces signals produced by the portion of the ferromagnetic free layer that is underneath the conducting leads while still providing a strong pinning field to maintain sensor stability. In the case of the transversely biased sensor, the magnetization of the free and biasing layers in the same direction as the pinned layer simplifies the fabrication process and permits the formation of thinner leads by eliminating the necessity for current shunting.

Owner:HEADWAY TECH INC

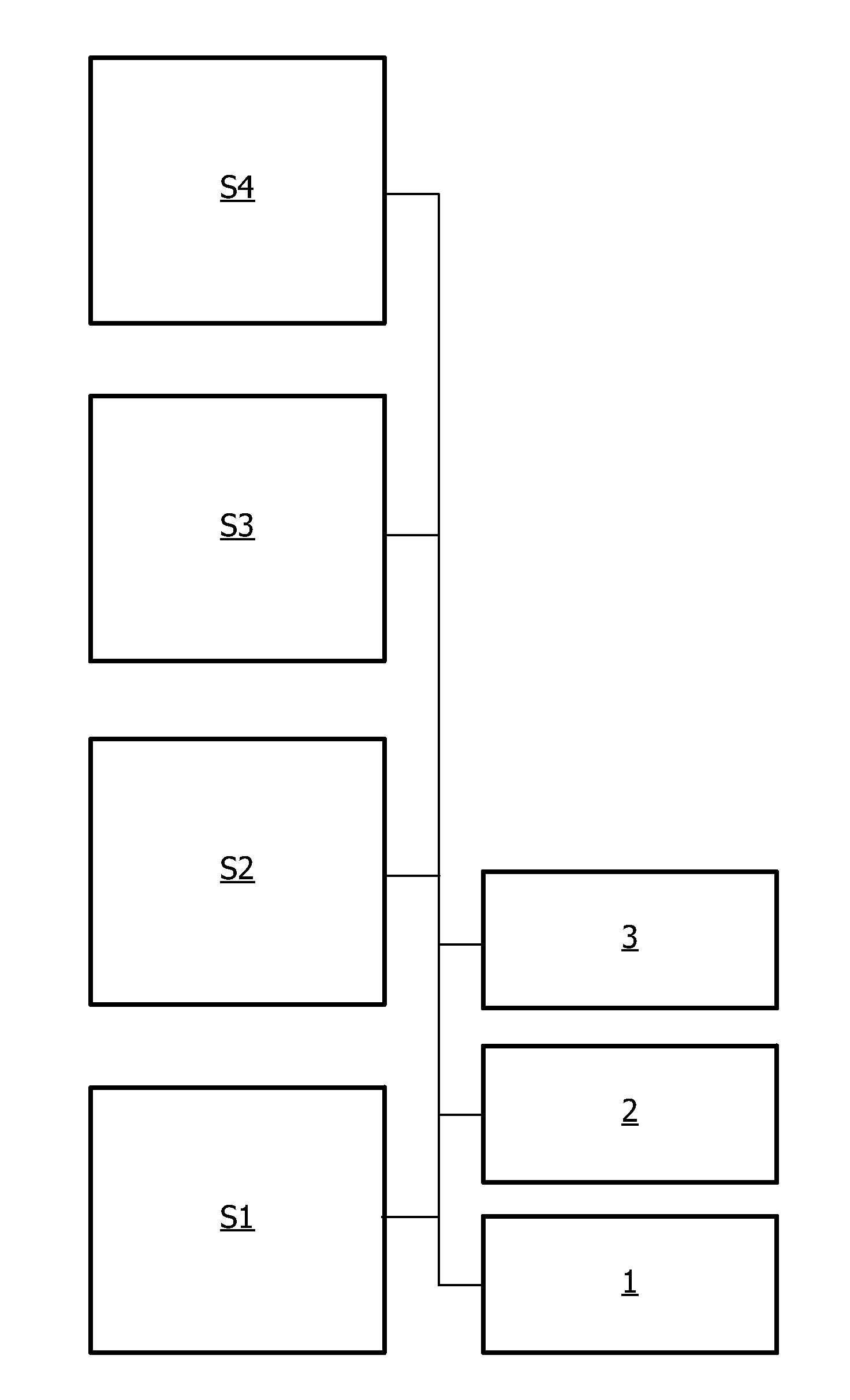

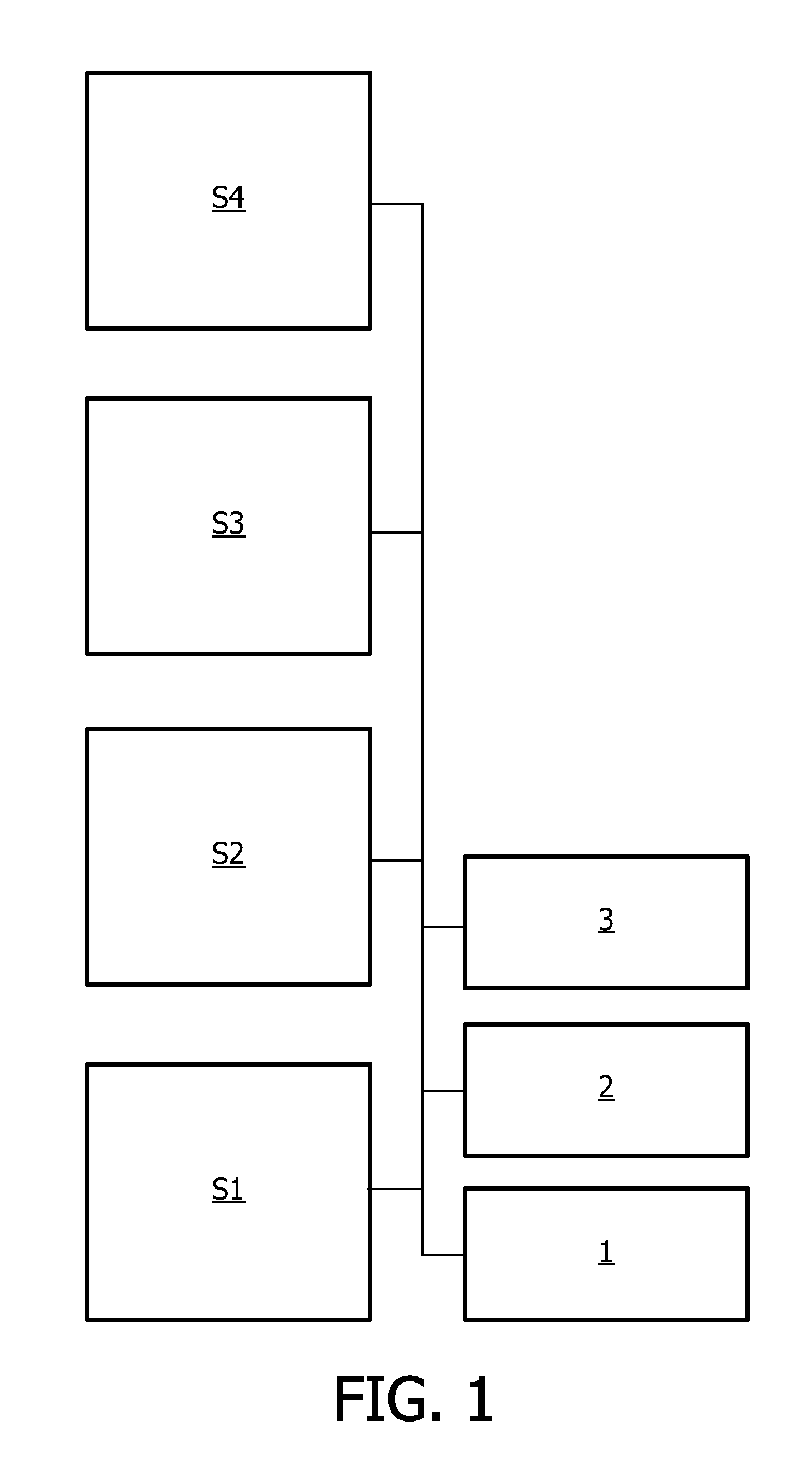

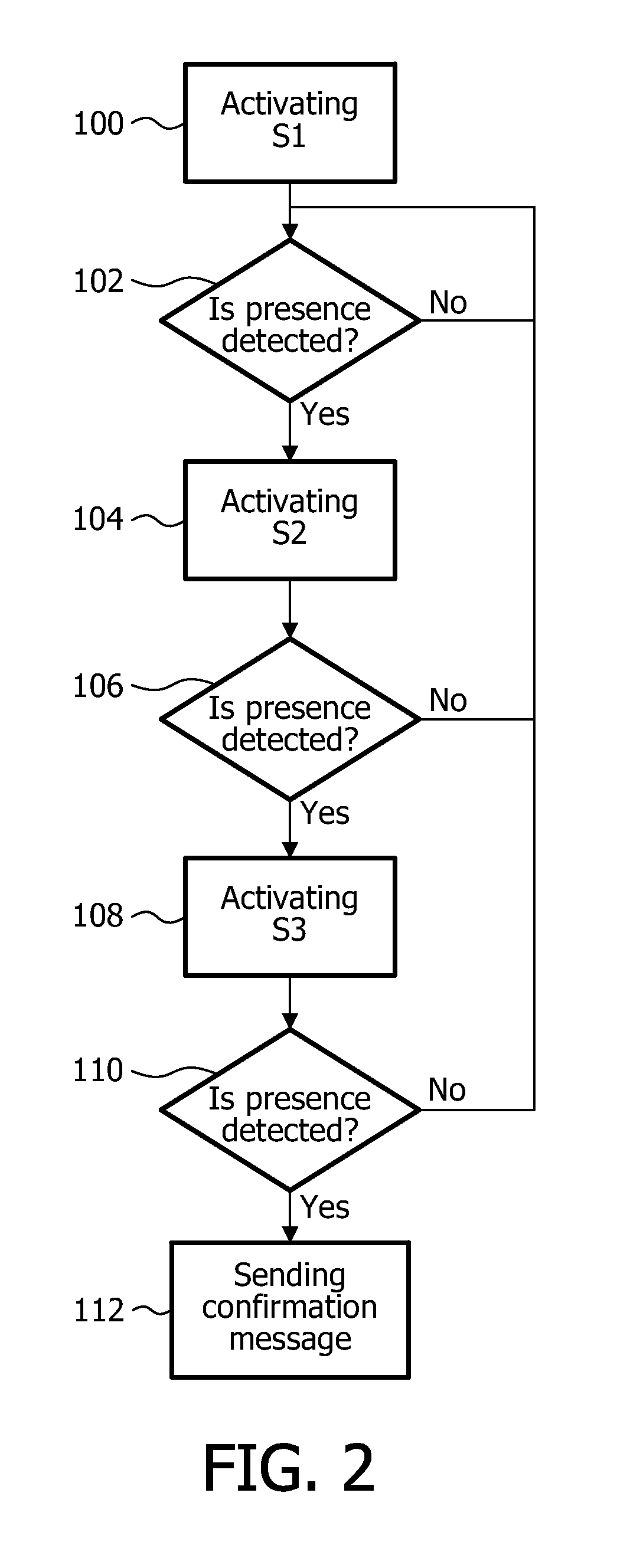

Energy efficient cascade of sensors for automatic presence detection

Inactive | US20120105193A1 | Reduce power consumption; Improve accuracy; Electrical apparatus; Electric testing/monitoring; Output device; Engineering

The present invention relates to a method and a system for detecting presence in a predefined space. The system comprises cascade connected sensors (s1, s2, . . . , sn), an output device (1), a control unit (2) and a processing unit (3). The method comprises the steps of activating a first sensor (s1) in the cascade and waiting until the first sensor detects presence. When presence is detected, a successive sensor (s2, . . . , sn) in the cascade is activated, and when the successive sensor (s2, . . . , sn) also detects presence the step of activating the successive sensor is repeated until all sensors in the cascade have been activated. If the successive sensor (s2, . . . , sn) does not detect presence the method returns to the waiting step of the first sensor. When the ultimate sensor in the cascade (sn) detects presence, a confirmation message is sent to the output device (1).

Owner:SIGNIFY HLDG BV +1
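
The cascade steps map naturally onto a small loop. In this Python sketch each sensor is a hypothetical callable meaning "activate this sensor and read it"; power switching and the output device are not modeled, and the `max_polls` bound exists only so the sketch terminates.

```python
from typing import Callable, Sequence


def run_cascade(sensors: Sequence[Callable[[], bool]], max_polls: int = 100) -> bool:
    """Return True once every sensor s1..sn in the cascade has detected presence.

    Each callable stands for "activate this sensor and read it" (hypothetical API).
    """
    for _ in range(max_polls):
        # Waiting step: only the first sensor is active.
        if not sensors[0]():
            continue
        # Activate the successive sensors one at a time.
        for sensor in sensors[1:]:
            if not sensor():
                break  # presence not confirmed: return to waiting on s1
        else:
            return True  # ultimate sensor sn confirmed: send the confirmation message
    return False  # bounded loop so the sketch terminates
```

Because later sensors are only consulted after the earlier ones agree, the more power-hungry sensors further down the cascade stay off most of the time.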

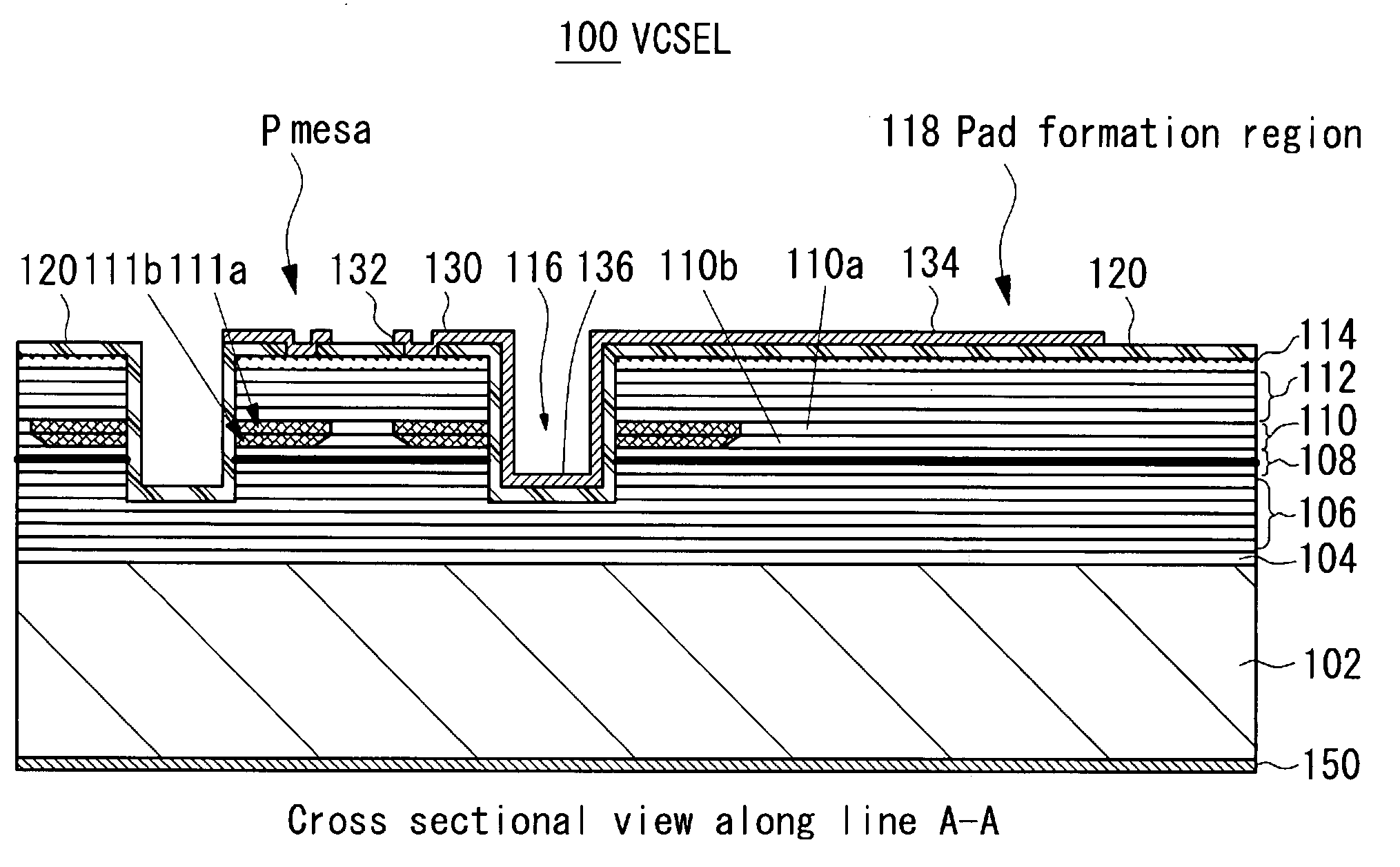

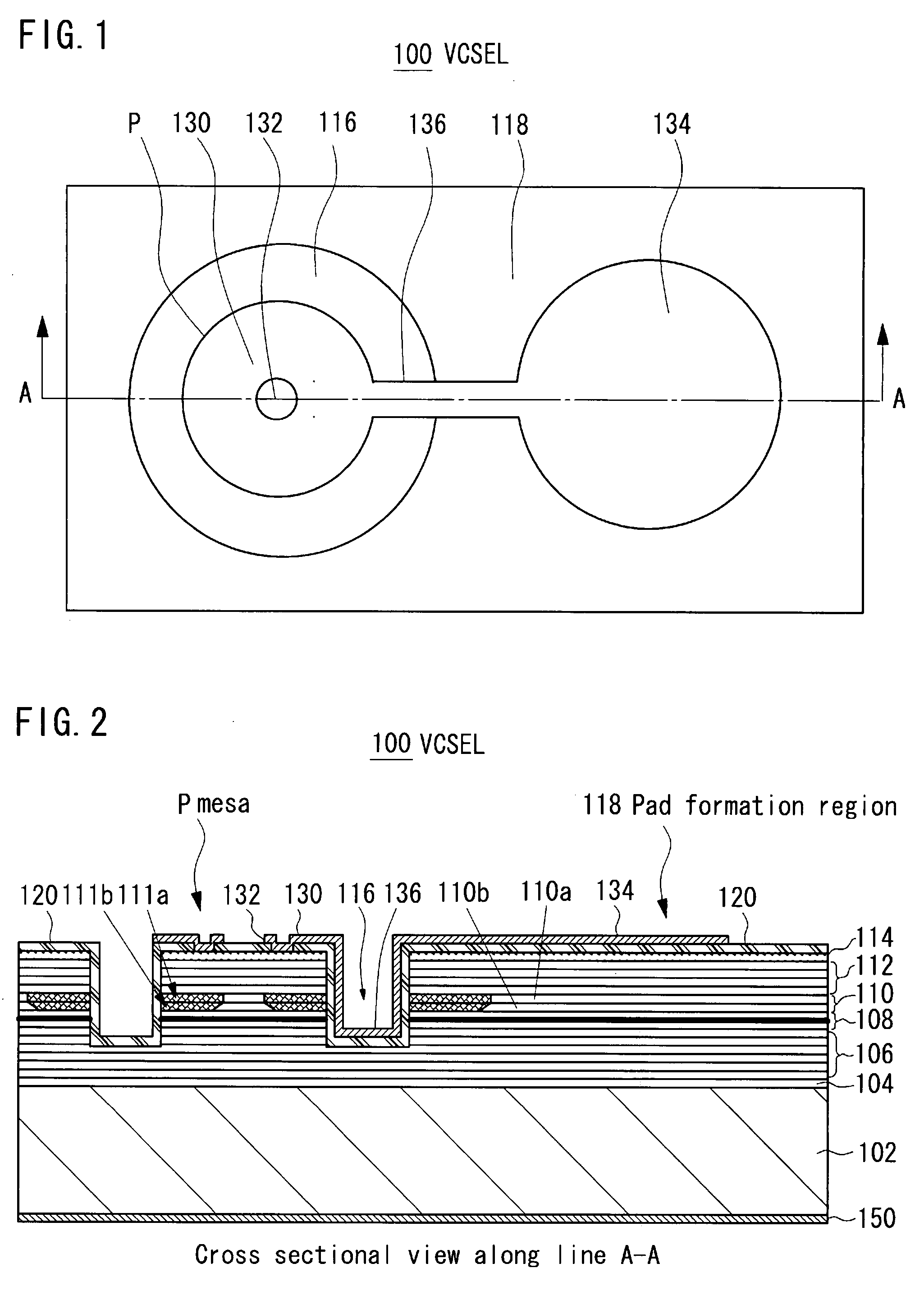

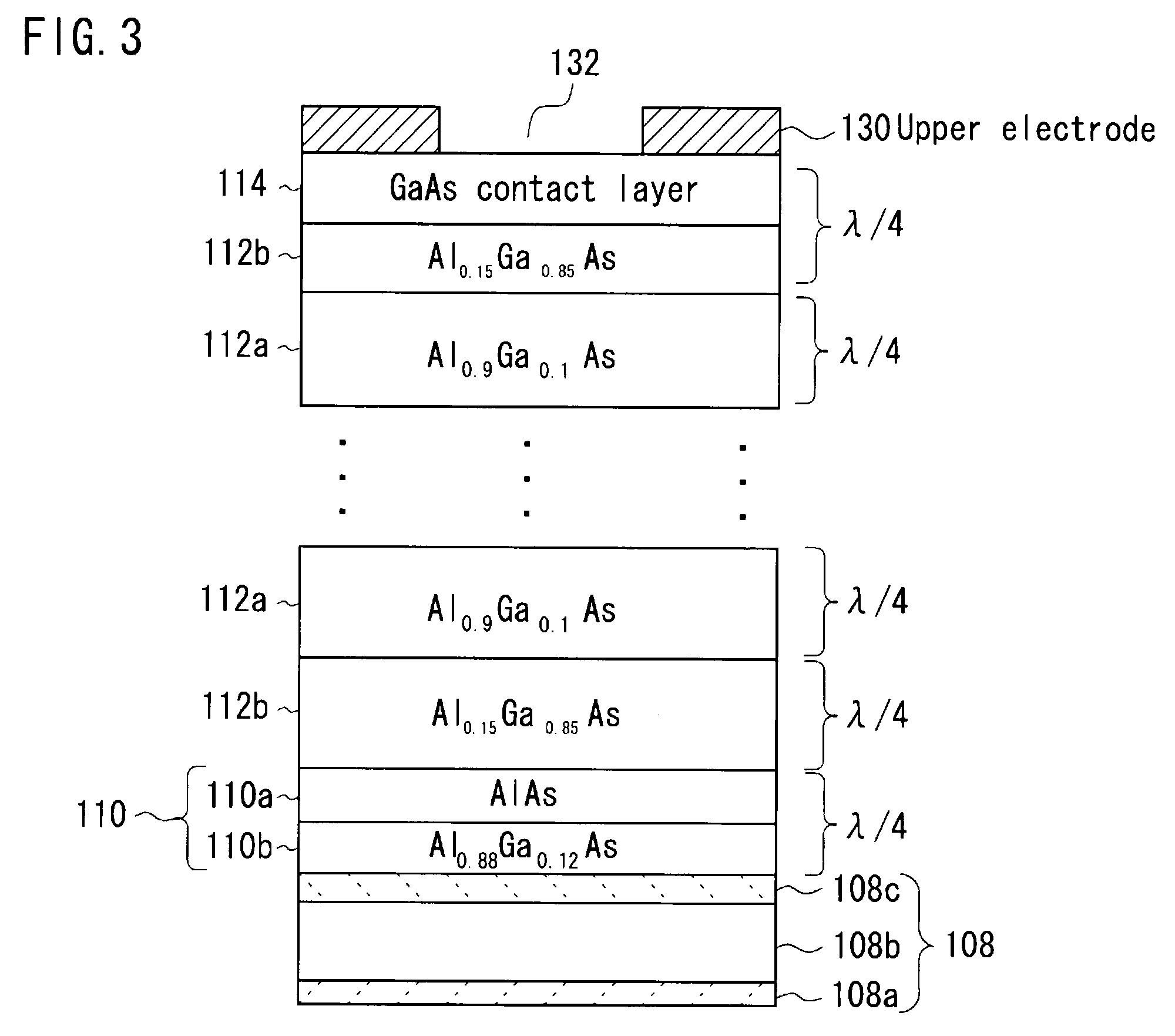

VCSEL, manufacturing method thereof, optical device, light irradiation device, data processing device, light sending device, optical spatial transmission device, and optical transmission system

Active | US20080187015A1 | Increase incidence; Reduce data; Laser details; Semiconductor/solid-state device manufacturing; Optical space; Electrical conductor

A VCSEL includes a first conductivity-type first semiconductor mirror layer on a substrate, an active region thereon, a second conductivity-type second semiconductor mirror layer thereon, and a current confining layer in proximity to the active region. A mesa structure is formed such that at least a side surface of the current confining layer is exposed. The current confining layer includes a first semiconductor layer having an Al composition and a second semiconductor layer having an Al composition that is formed nearer to the active region than the first semiconductor layer. The Al concentration of the first semiconductor layer is higher than that of the second semiconductor layer. When the oscillation wavelength of the laser light is λ, the combined optical thickness of the first and second semiconductor layers is λ/4. The first and second semiconductor layers are selectively oxidized from the side surface of the mesa structure.

Owner:FUJIFILM BUSINESS INNOVATION CORP
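
Written out, the quarter-wave condition reads as follows, assuming the usual definition of optical thickness as refractive index times physical thickness. The symbols n_1, n_2 (refractive indices at λ) and d_1, d_2 (physical thicknesses of the first and second semiconductor layers) are introduced here for illustration and do not appear in the abstract.

```latex
n_1 d_1 + n_2 d_2 = \frac{\lambda}{4}
\quad\Longrightarrow\quad
d_2 = \frac{\lambda/4 - n_1 d_1}{n_2}
```

Choosing d_1 therefore fixes d_2 once the indices at the lasing wavelength are known.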

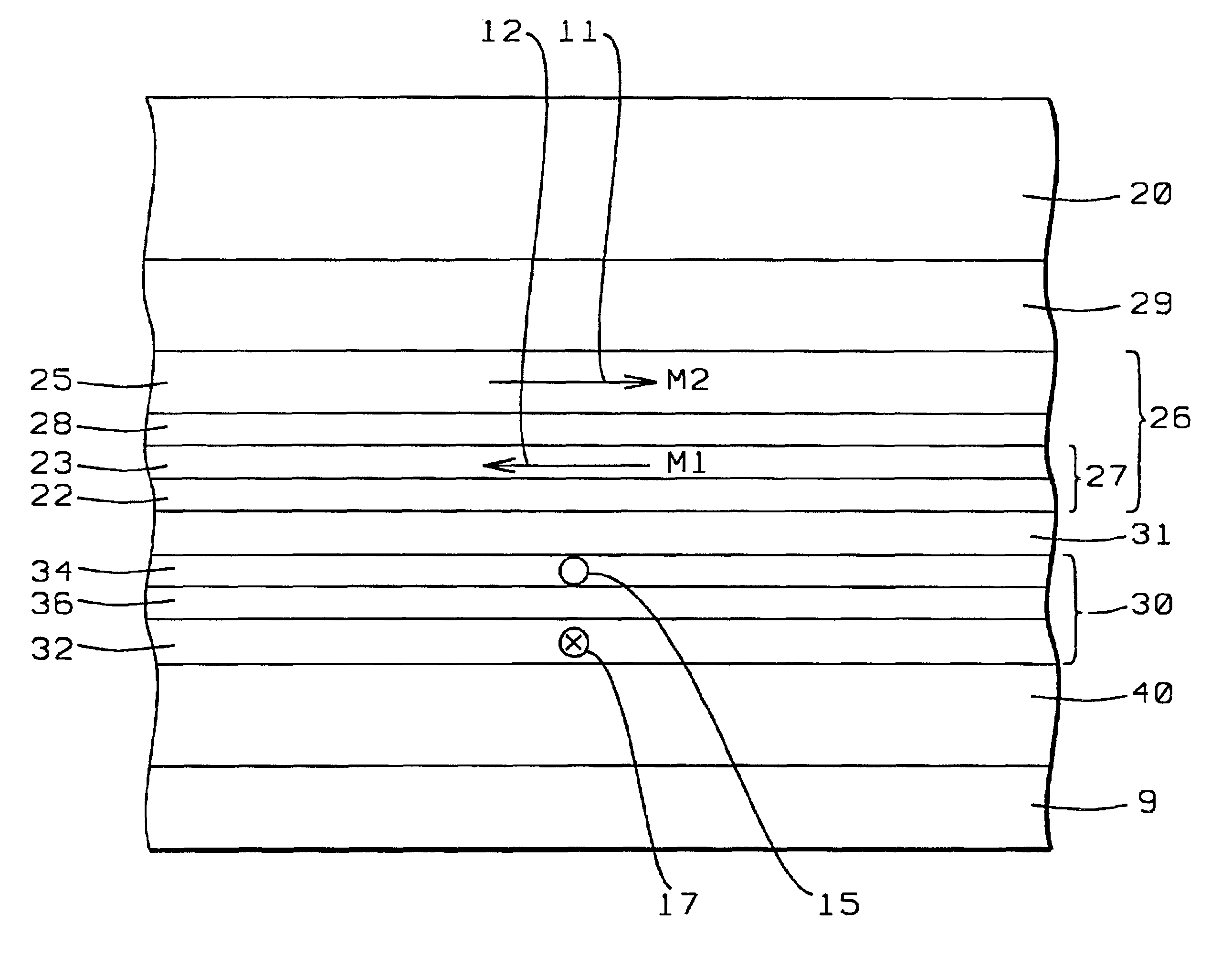

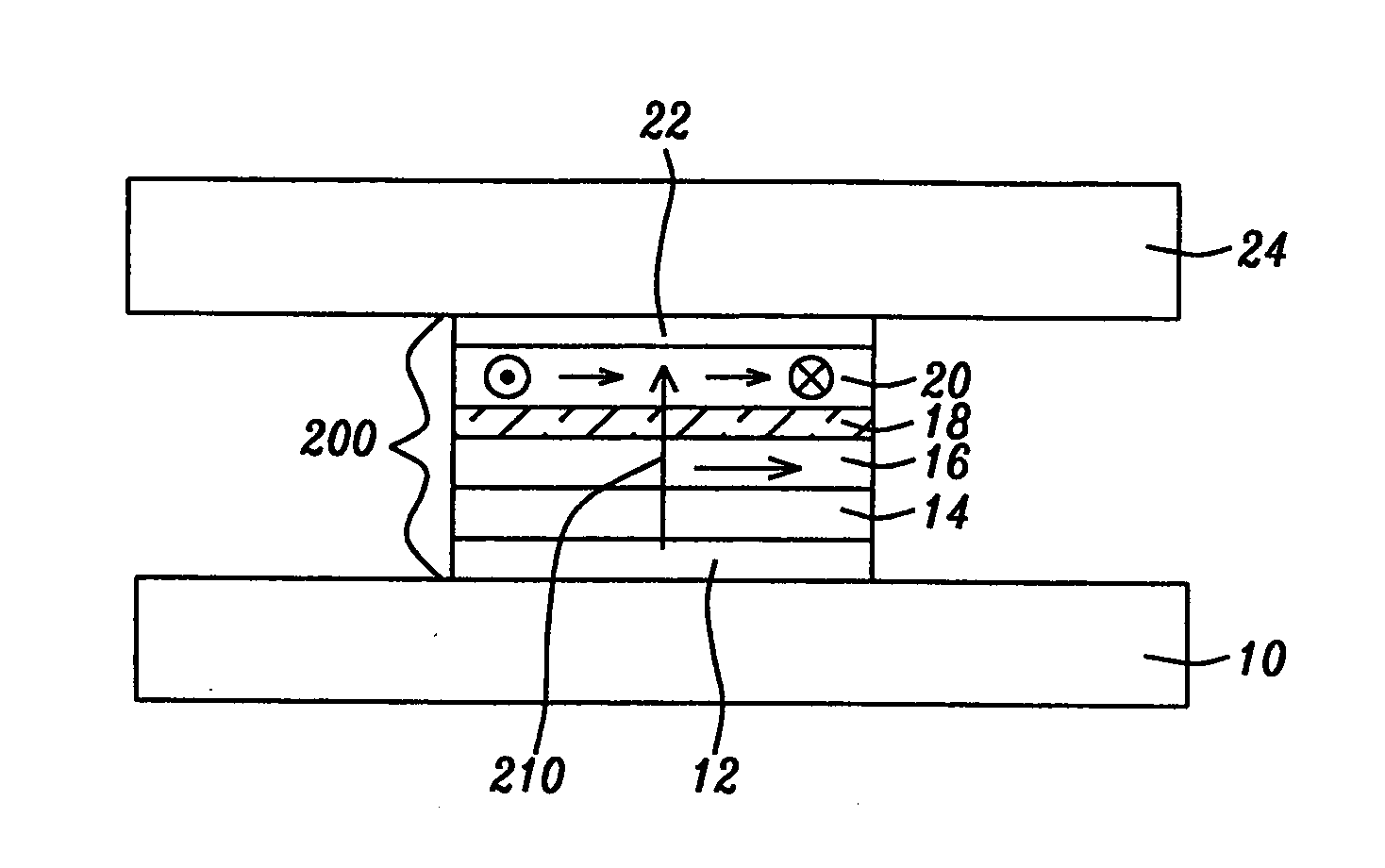

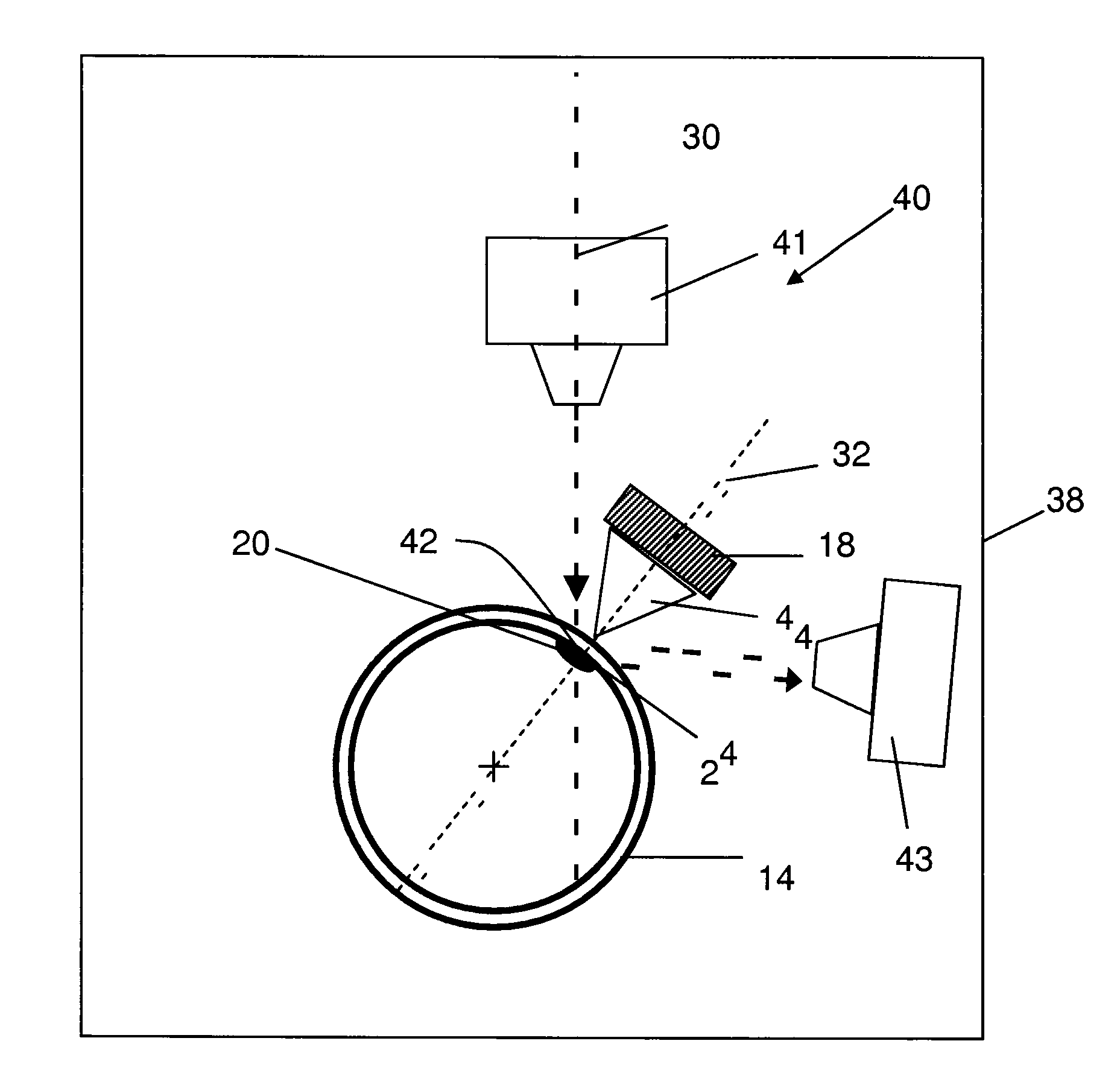

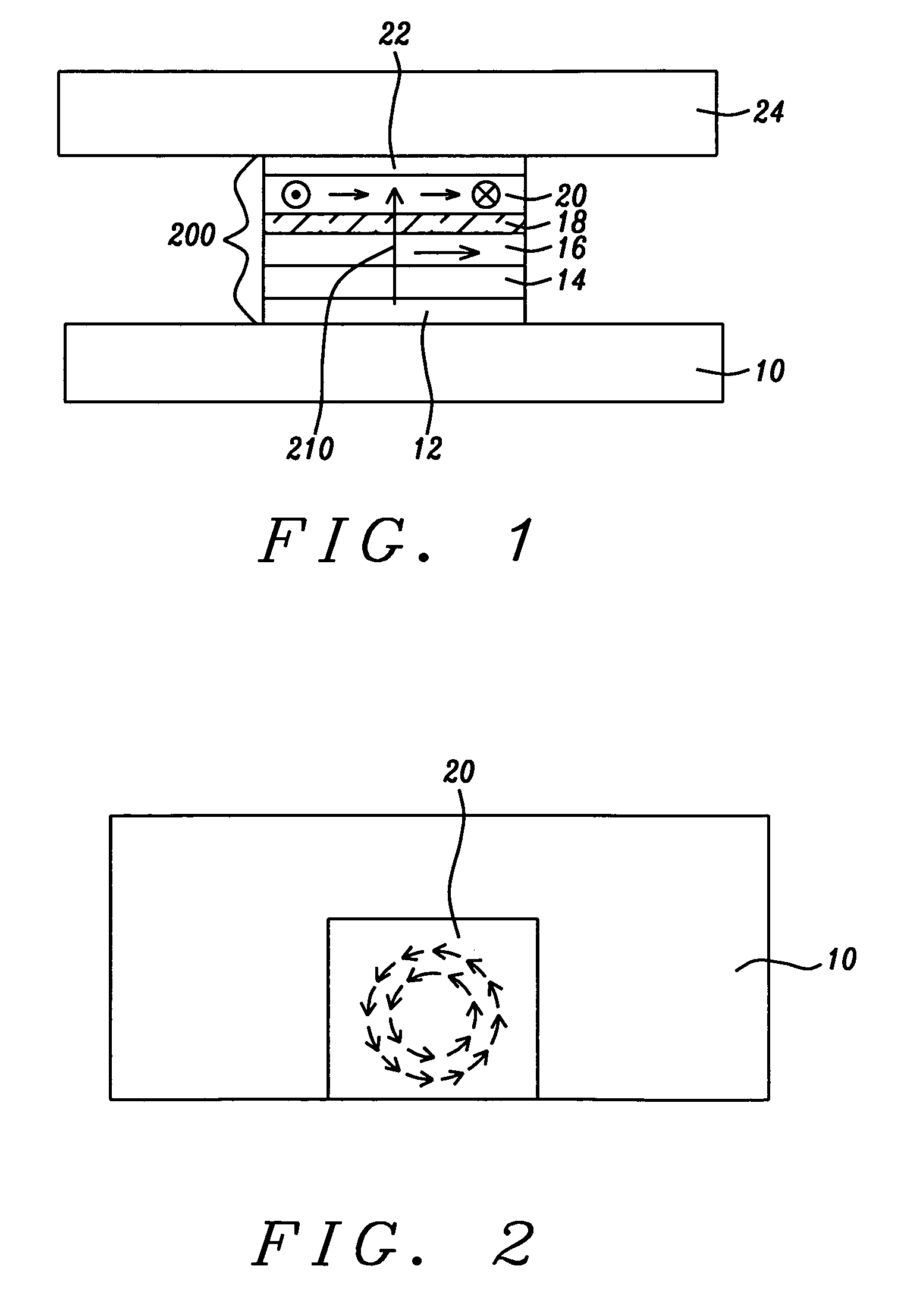

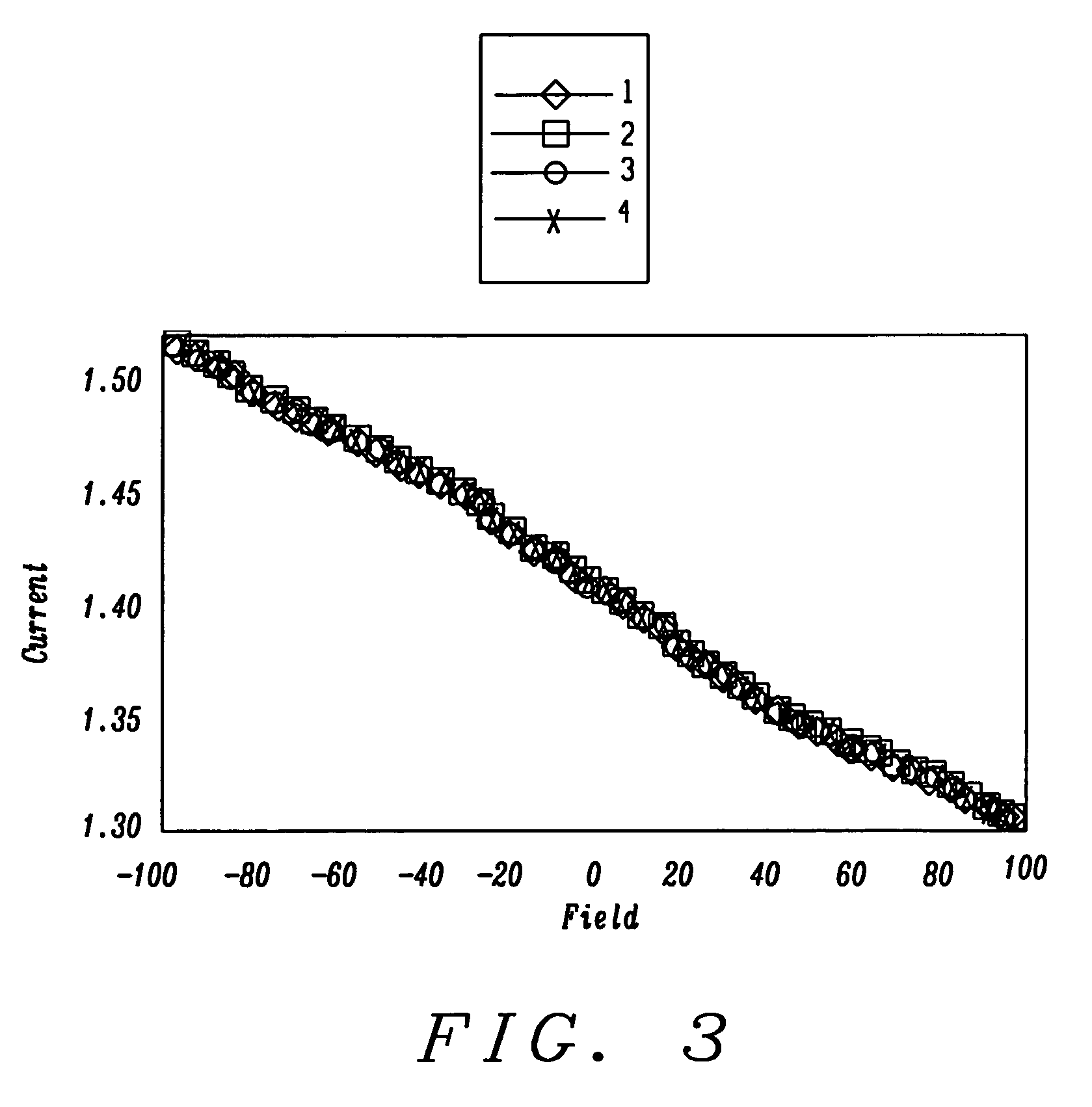

CPP magnetic recording head with self-stabilizing vortex configuration

Inactive | US20080180865A1 | Eliminating side-reading; Effective shielding; Nanomagnetism; Magnetic measurements; Magnetization; Vertical field

A CPP MTJ or GMR read sensor is provided in which the free layer is self-stabilized by a magnetization in a circumferential vortex configuration. This magnetization permits the pinned layer to be magnetized in a direction parallel to the ABS plane, which thereby makes the pinned layer directionally more stable as well. The lack of lateral horizontal bias layers or in-stack biasing allows the formation of closely configured shields, thereby providing protection against side-reading. The vortex magnetization is accomplished by first magnetizing the free layer in a uniform vertical field, then applying a vertical current while the field is still present.

Owner:HEADWAY TECH INC

Rapid data recovery method and system based on crossed code correction and deletion

Active | CN106844098A | Automatic Priority Adjustment; Automatically adjust the number of threads; Redundant data error correction; Redundant operation error correction; Exclusive or; Data recovery

The invention provides a rapid data recovery method and system based on crossed code correction and deletion. When data is written, encoding is computed according to an LRC encoding scheme, the global encoding blocks are grouped in pairs, and each global encoding block in a group is divided into two halves. The second half of one global encoding block and the first half of the other global encoding block are XORed, and the result is written into the second half of the current global encoding block. When the first global encoding block is lost, the second half of the data block is read and the two second halves of the lost data block, as they were before the XOR operation, are obtained; the first half of the lost data block is obtained by XORing with the post-XOR second half of the other global encoding block; and the pre-XOR data of the lost data block is XORed with the first half of the other global encoding block to obtain the second half of the lost data block. The data is flushed back to the corresponding disks in stripes for storage, and after the data has been written to the storage server, longitudinal encoding is computed asynchronously.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI +1

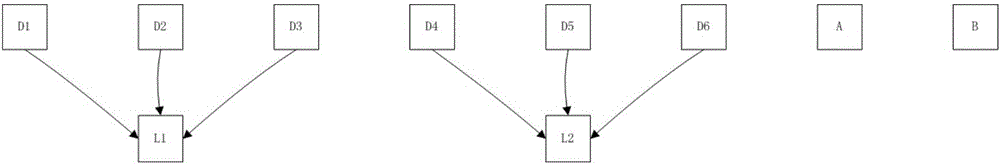

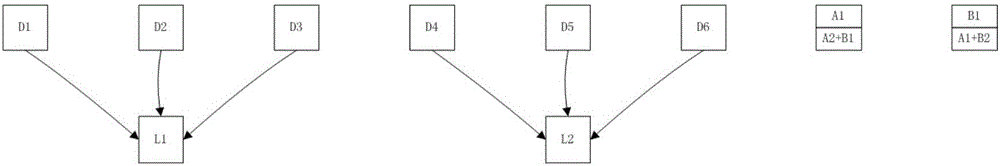

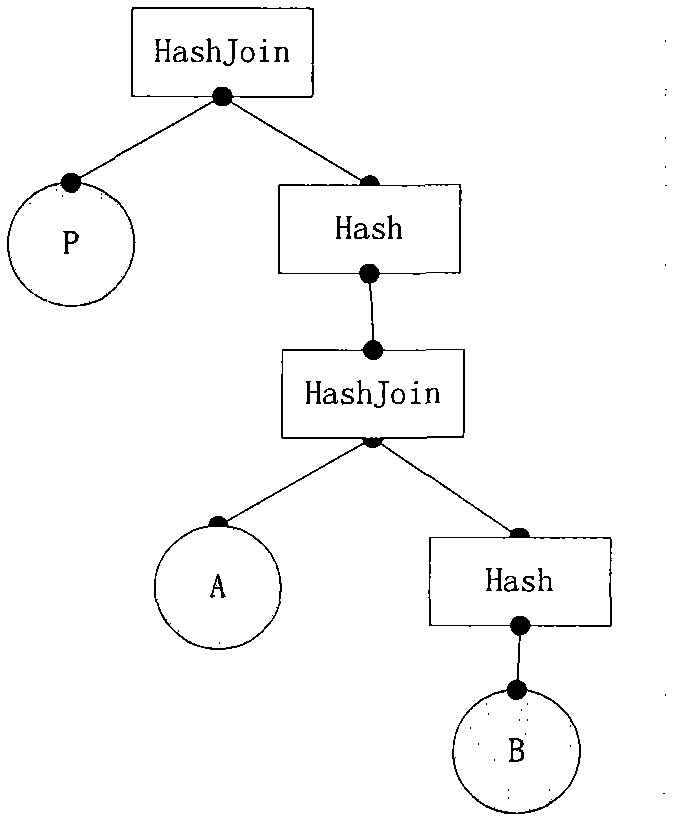

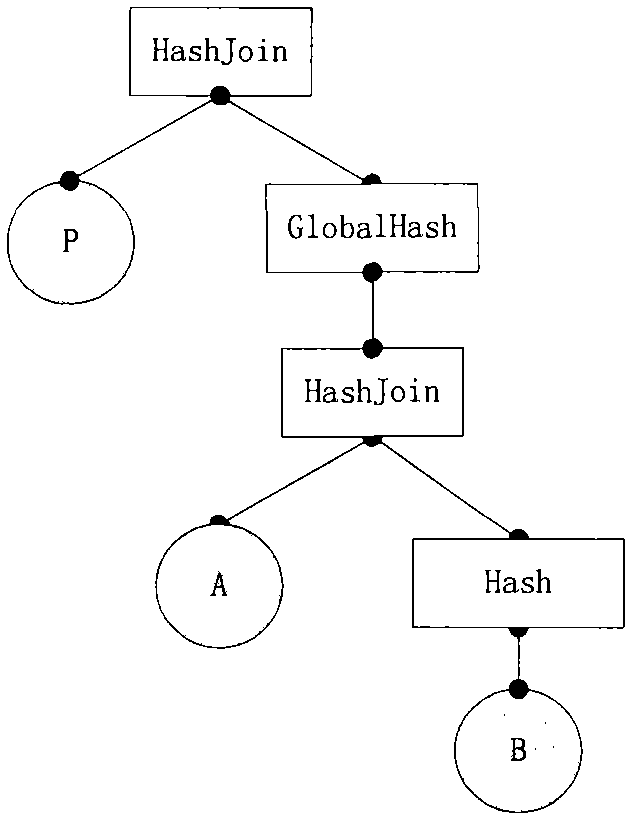

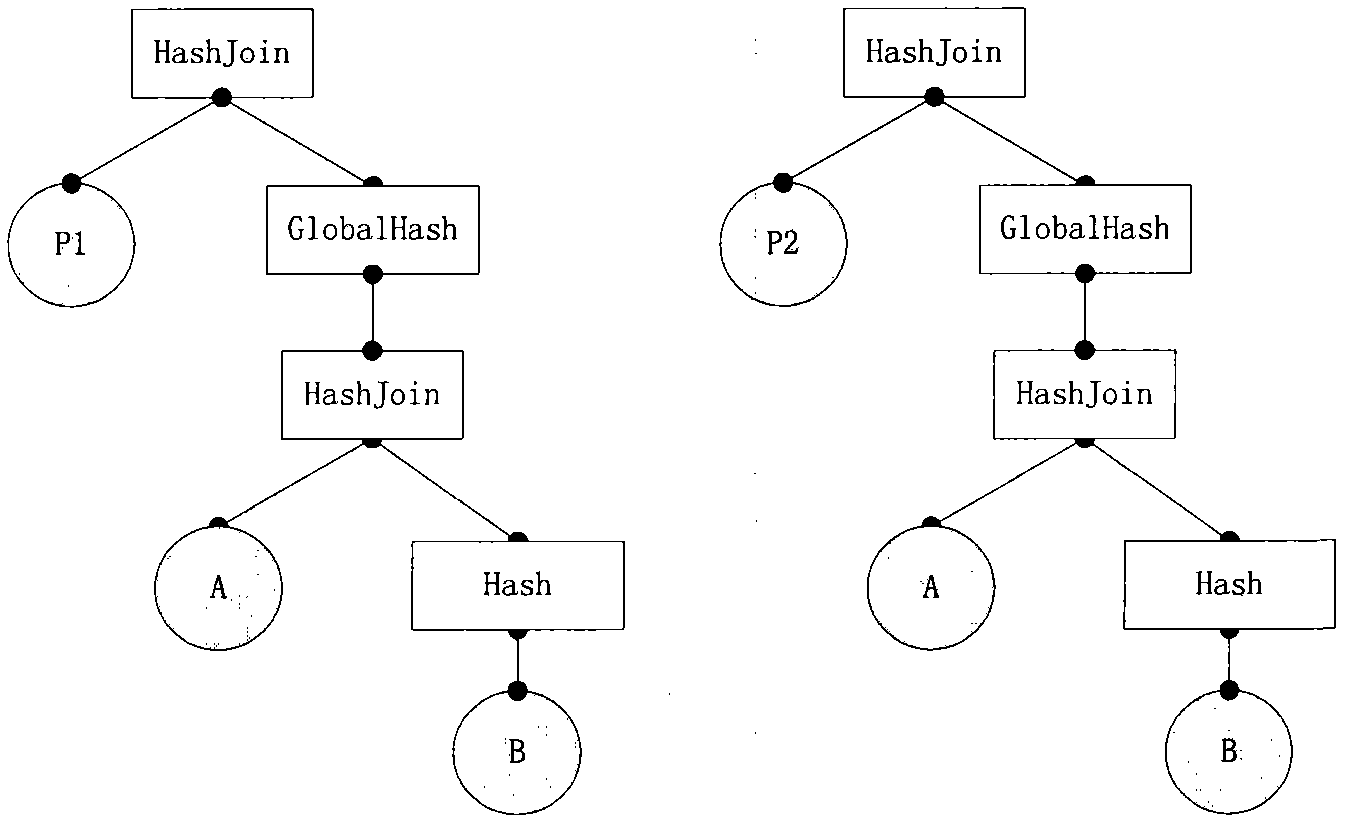

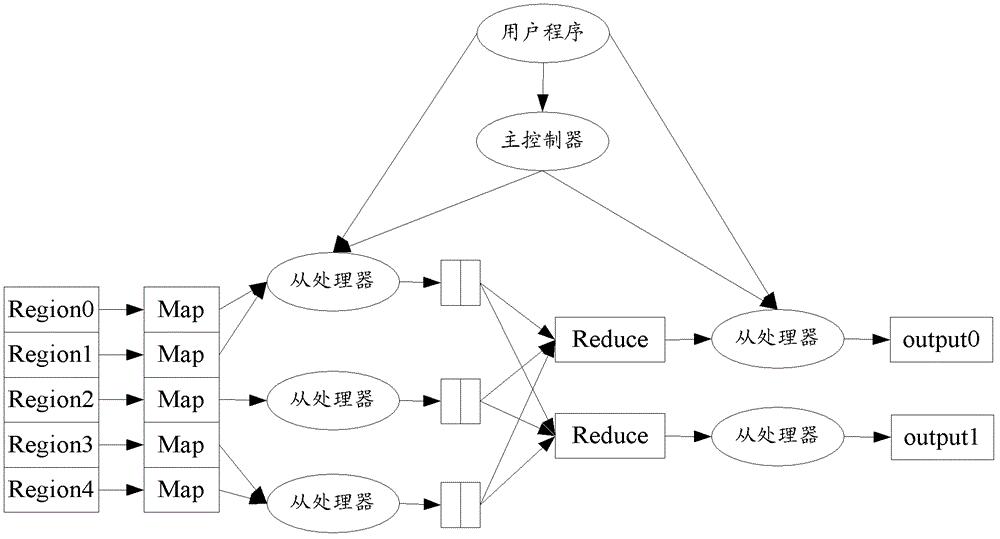

Implementation method for operator reuse in parallel database

Active | CN102323946A | Reduce read; Save memory resources; Special data processing applications; Query plan; Directed graph

The invention discloses an implementation method for operator reuse in a parallel database, comprising the following steps: step 1, generating a serial query plan for a query through a normal query planning method, the query plan being a binary tree structure; step 2, traversing the query plan from top to bottom, searching for materialized reusable operators, changing the query plan structure, and changing thread-level materialized operators into global reusable materialized operators; step 3, parallelizing the query plan changed in step 2 and generating a plan forest for parallel execution by a plurality of threads; step 4, performing global reusable operator combination on the plan forest generated in step 3, and generating a directed-graph plan in which the materialized reusable operators can be executed by the plurality of threads in parallel; step 5, each thread executing its own part of the plan in the directed graph in parallel, wherein the thread that first executes a global reusable operator is called the main thread, the main thread locks the global reusable operator and actually executes the operator and its plan, and the other threads wait; step 6, the main thread unlocking the global reusable operator after execution, whereupon the other threads start to read data from the global reusable operator and continue to execute their own plan trees; and step 7, the main thread releasing the materialized data of the operator after all plans have read the data of the global reusable operator.

Owner:天津神舟通用数据技术有限公司 (Tianjin Shenzhou General Data Technology Co., Ltd.)
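
Steps 5 to 7 describe a "first thread executes, others wait, then everyone reads" pattern. The Python sketch below illustrates it with `threading.Lock` and `threading.Event`; the class name and the fixed consumer count are assumptions. One simplification is labeled here and in the code: the last reader drops the materialized rows, whereas the abstract has the main thread release them after all plans have read. Error handling (a failing `compute` would leave waiters blocked) is omitted.

```python
import threading
from typing import Callable, Iterable, List, Optional


class GlobalReusableOperator:
    """A materialized sub-plan executed once and read by several threads."""

    def __init__(self, compute: Callable[[], Iterable[int]], consumers: int) -> None:
        self._compute = compute     # produces the operator's rows
        self.remaining = consumers  # plans that still have to read the data
        self.executions = 0
        self._lock = threading.Lock()
        self._started = False
        self._ready = threading.Event()
        self._rows: Optional[List[int]] = None

    def read(self) -> List[int]:
        with self._lock:
            is_main = not self._started  # first arrival becomes the main thread
            self._started = True
        if is_main:
            self._rows = list(self._compute())
            self.executions += 1
            self._ready.set()  # "unlock": waiting threads may now read
        else:
            self._ready.wait()
        rows = self._rows
        with self._lock:
            self.remaining -= 1
            if self.remaining == 0:
                # Simplification: the last reader releases the materialized data.
                self._rows = None
        return rows


op = GlobalReusableOperator(lambda: range(5), consumers=4)
results = []
workers = [threading.Thread(target=lambda: results.append(sum(op.read()))) for _ in range(4)]
for w in workers:
    w.start()
for w in workers:
    w.join()
print(results, op.executions)  # [10, 10, 10, 10] 1
```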

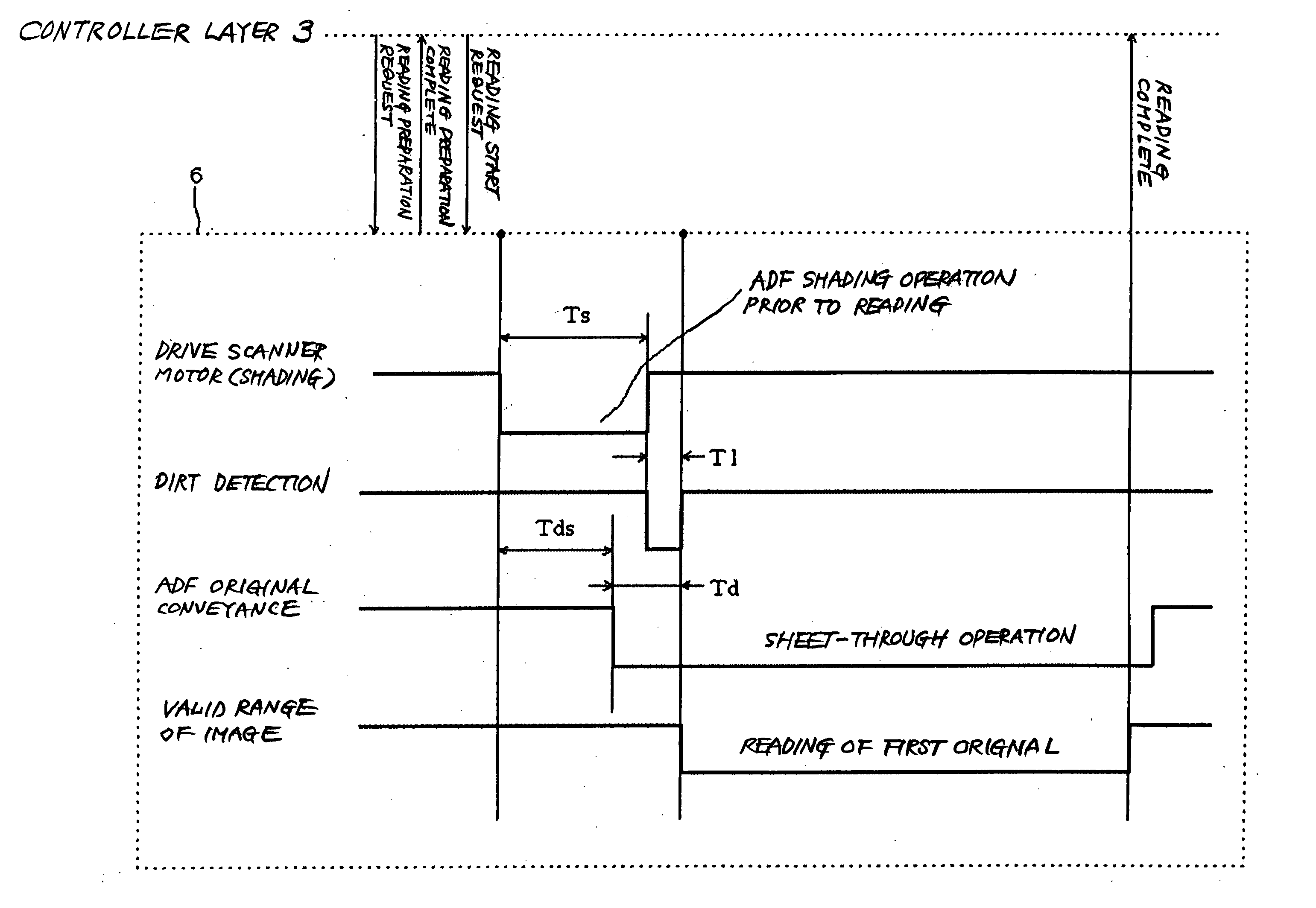

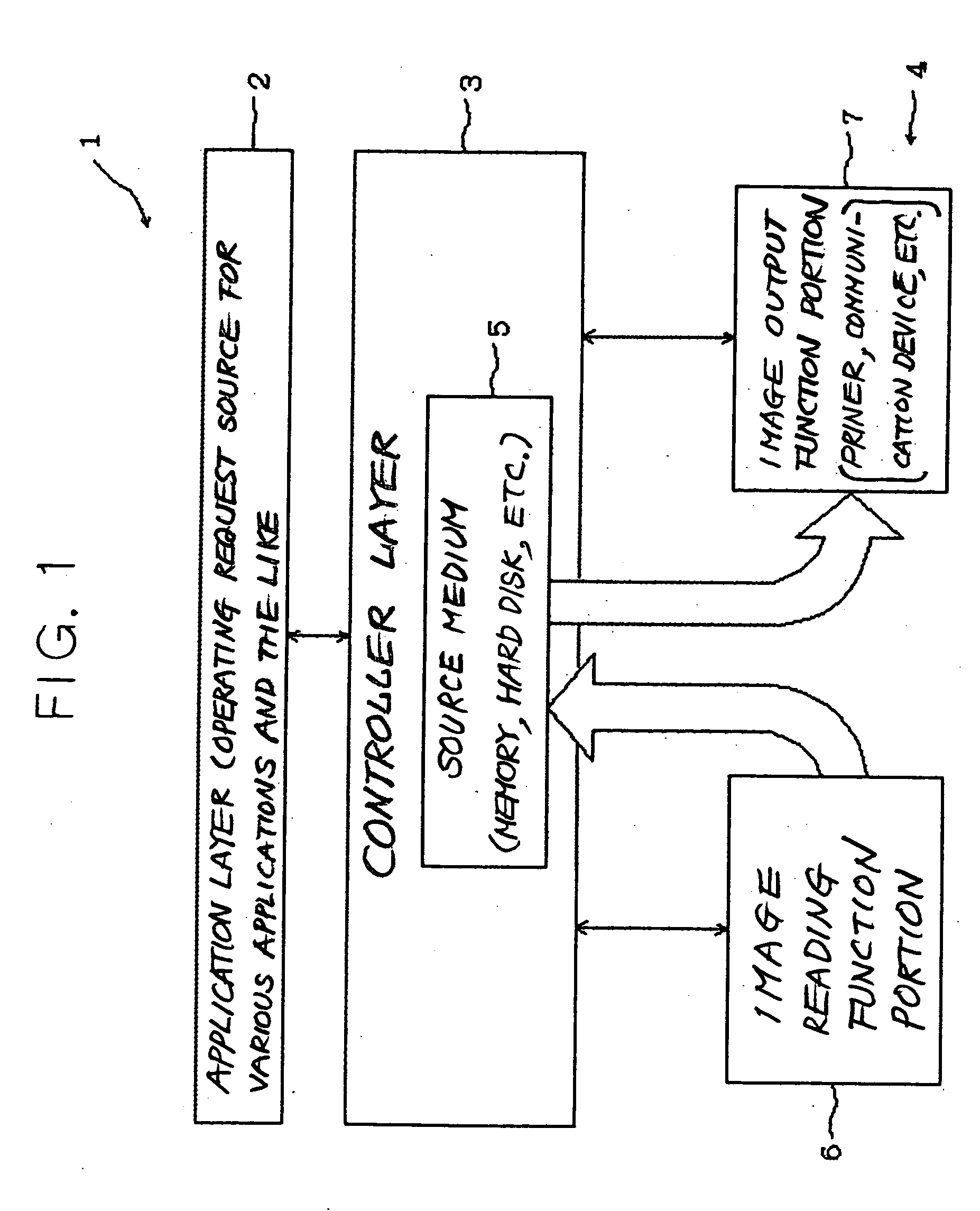

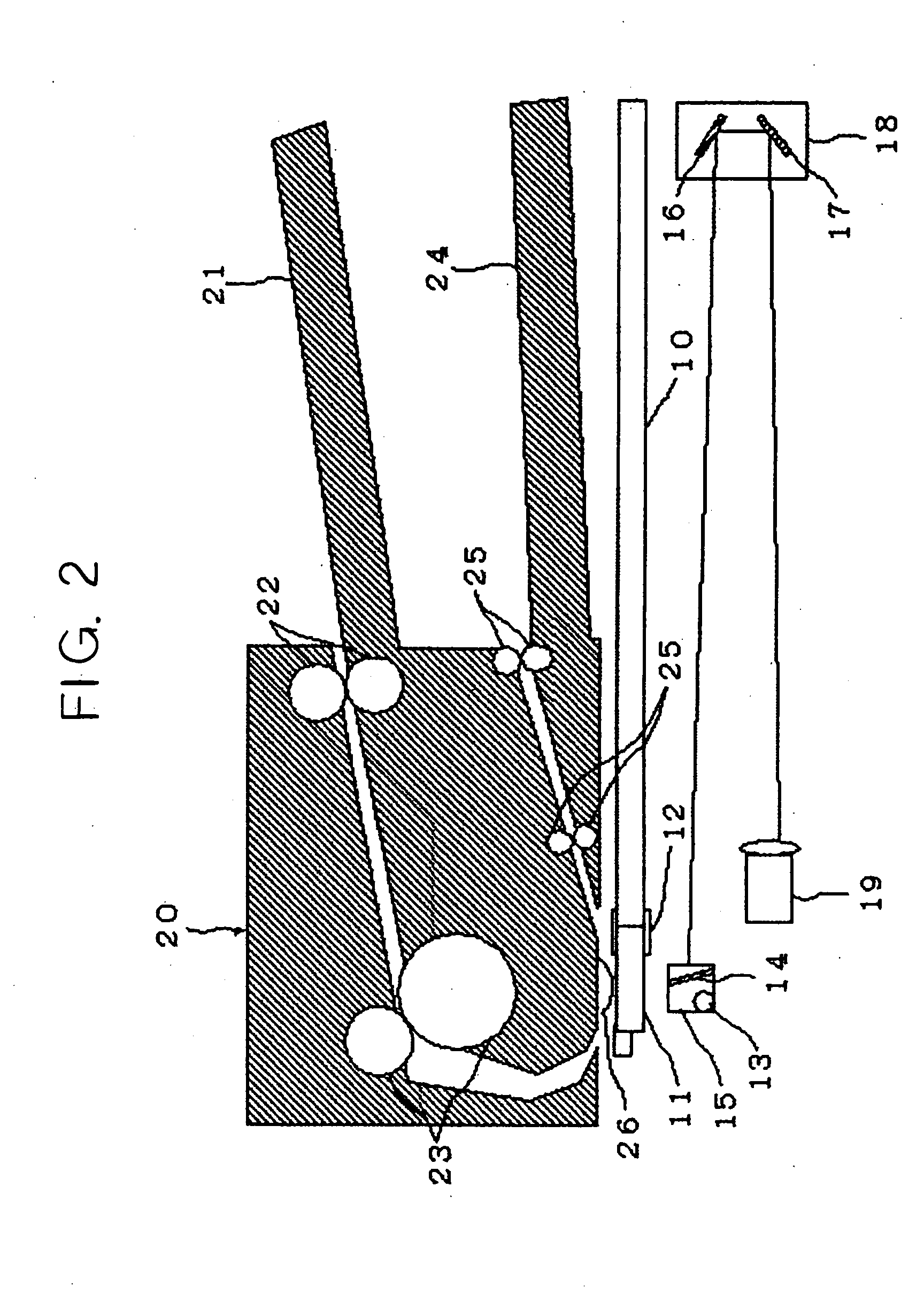

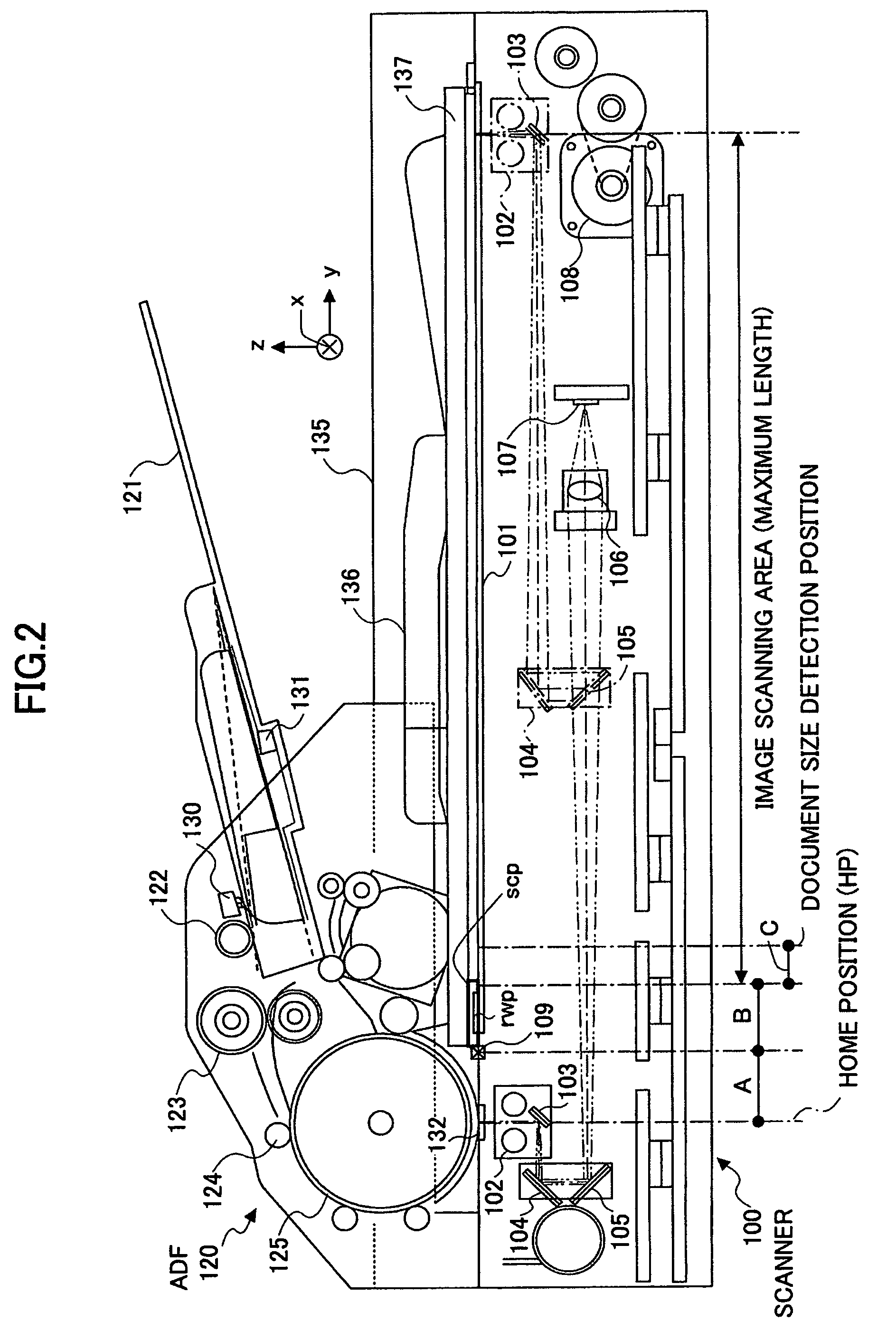

Image reading apparatus

Inactive | US20050157351A1 | Improve productivity; Efficient and accurate reading; Pictorial communication; Computer science; Copying

A digital copying device, which serves as an image reading apparatus for improving processing speed by adjusting the timing of various operations when reading is performed using a sheet-through system, causes a first traveling body to move beneath a white reference plate in order to read the white reference plate, and then performs an ADF shading operation to move the first traveling body beneath a sheet-through contact glass. The sheet-through contact glass is then subjected to a dirt detection operation by reading a dirt detection reference plate, whereupon an original is conveyed onto the sheet-through contact glass by an ADF to perform a sheet-through reading operation whereby an image of the original is read. When a sheet-through reading operation is to be performed, the sheet-through reading operation is started following the reception of an image reading request and after the elapse of a time (T=Ts+Tl−Td) obtained by subtracting a time Td, which extends from the beginning of the sheet-through reading operation to the point at which the tip of the original reaches a sheet-through reading position, from the sum of a time Ts required for the ADF shading operation and a time Tl required for the dirt detection operation.

Owner:RICOH KK
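
The start-delay formula T = Ts + Tl − Td can be read as follows: if shading and dirt detection both begin when the reading request arrives, starting the sheet-through operation T later means the original's tip reaches the reading position at T + Td = Ts + Tl, just as both operations finish. A small Python sketch with hypothetical millisecond values; the clamp at zero for Td > Ts + Tl is an assumption not stated in the abstract.

```python
def sheet_through_start_delay(ts_ms: int, tl_ms: int, td_ms: int) -> int:
    """Delay T, after the image reading request, before sheet-through reading starts.

    ts_ms: ADF shading time Ts
    tl_ms: dirt detection time Tl
    td_ms: time Td from the start of sheet-through reading until the tip of
           the original reaches the sheet-through reading position
    """
    # T = Ts + Tl - Td: feeding overlaps shading and dirt detection, so the
    # original's tip arrives just as both operations finish.
    # Clamping at zero is an assumption for the case Td > Ts + Tl.
    return max(0, ts_ms + tl_ms - td_ms)


print(sheet_through_start_delay(800, 500, 600))  # 700
```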

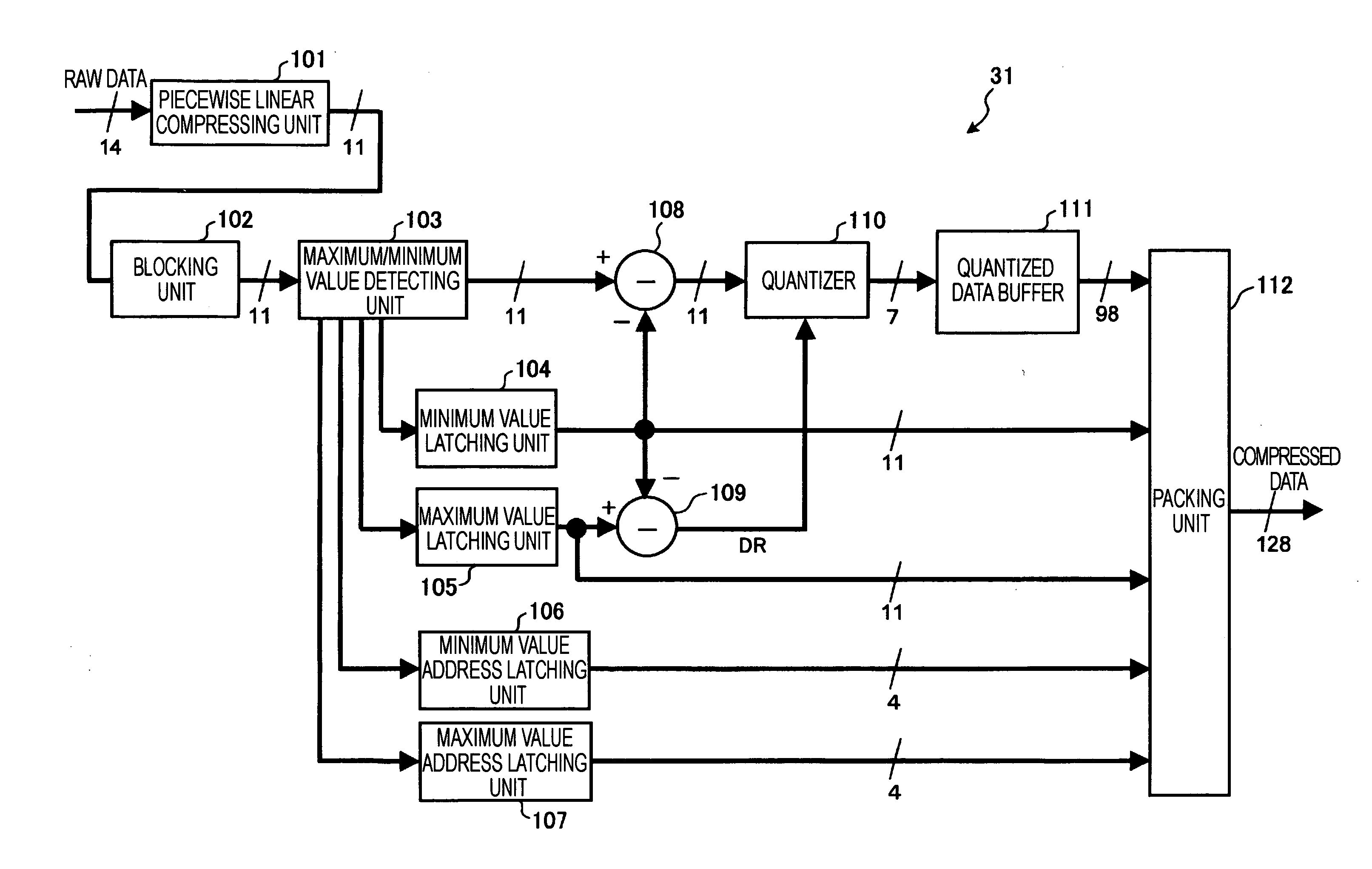

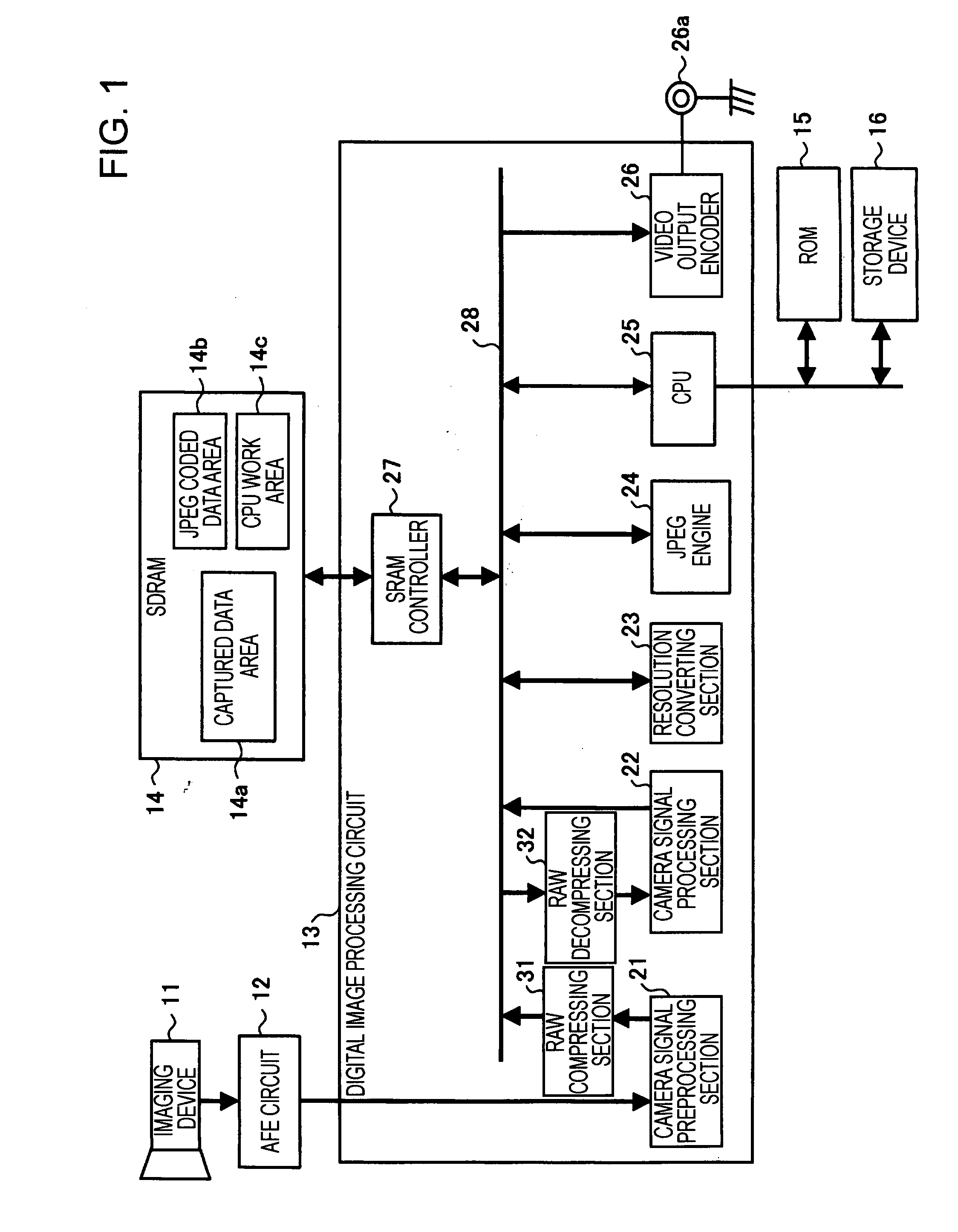

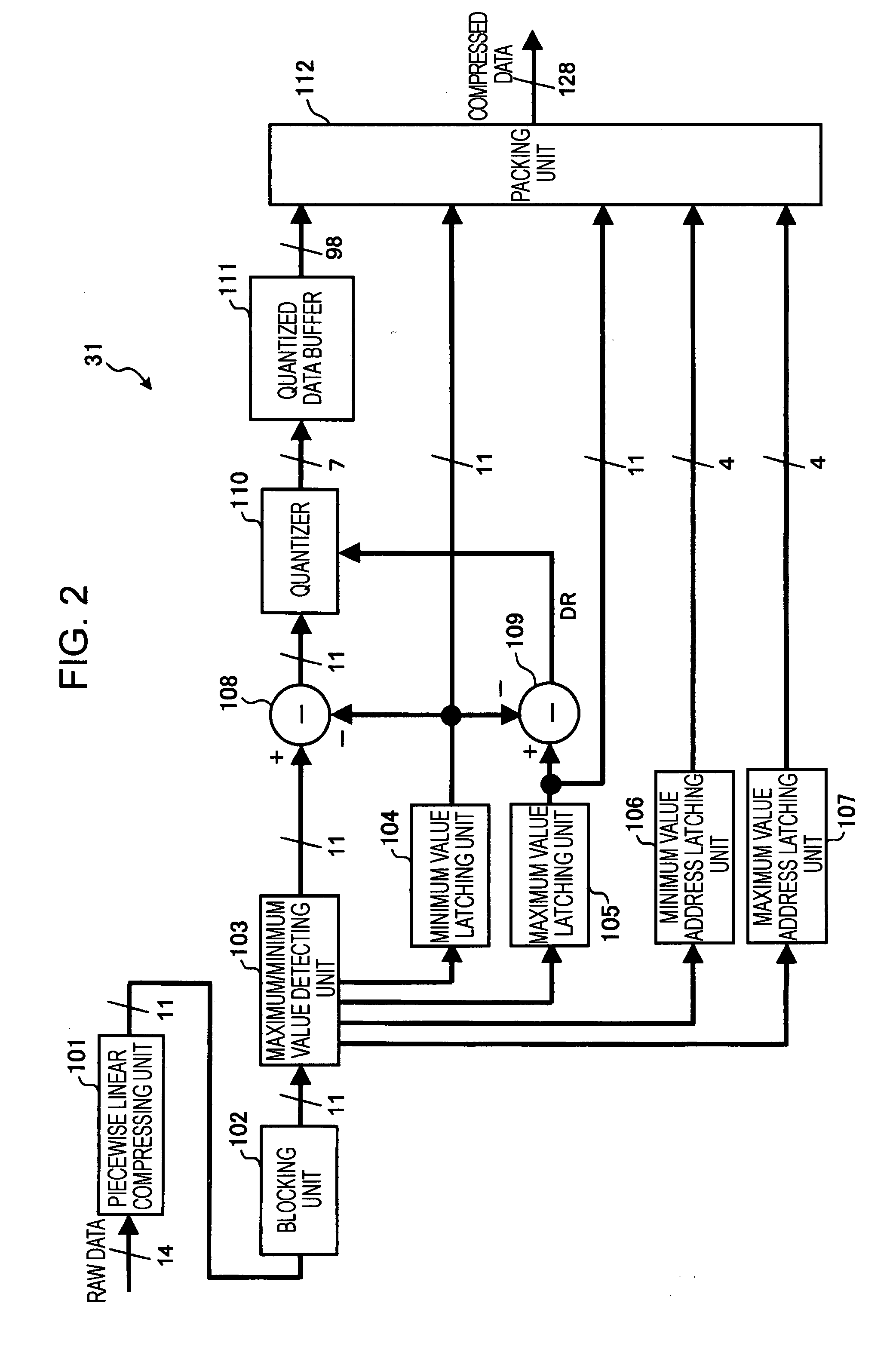

Image capturing apparatus, imaging circuit, and image capturing method

Inactive | US20070223824A1 | Shorten the time; Reduce data; Television system details; Character and pattern recognition; Imaging quality; Digitization

An image capturing apparatus for capturing an image with solid state imaging devices may include a compressing section, a memory, a decompressing section, and a signal processing section. The compressing section may compress digitalized data of an image captured with the solid state imaging devices. The memory may temporarily store the compressed image data that is compressed by the compressing section. The decompressing section may decompress the compressed image data that is read out from the memory. The signal processing section may perform an image quality correction operation on the image data decompressed by the decompressing section. The compressed image data may contain a maximum value and a minimum value of pixel data in a block, information regarding positions of the maximum value and the minimum value in the block, and quantized data.

Owner:SONY CORP
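
The compressed-block format (maximum, minimum, their positions, and quantized data) can be illustrated with a minimal Python sketch. The uniform quantizer, the 4-bit default, and the choice to store max and min exactly instead of quantizing them are assumptions for illustration; the abstract does not specify the quantization scheme.

```python
def compress_block(pixels: list, bits: int = 4) -> dict:
    """Keep the block's max/min values and positions; quantize the other pixels."""
    lo, hi = min(pixels), max(pixels)
    lo_pos, hi_pos = pixels.index(lo), pixels.index(hi)
    levels = (1 << bits) - 1
    span = hi - lo
    codes = [
        0 if span == 0 else round((p - lo) * levels / span)
        for i, p in enumerate(pixels)
        if i not in (lo_pos, hi_pos)  # max and min are stored exactly instead
    ]
    return {"max": hi, "min": lo, "max_pos": hi_pos, "min_pos": lo_pos, "codes": codes}


def decompress_block(block: dict, bits: int = 4) -> list:
    levels = (1 << bits) - 1
    lo, hi = block["min"], block["max"]
    exact = {block["min_pos"]: lo, block["max_pos"]: hi}
    codes = iter(block["codes"])
    out = []
    for i in range(len(block["codes"]) + len(exact)):
        if i in exact:
            out.append(exact[i])  # restore max/min at their recorded positions
        else:
            out.append(lo + round(next(codes) * (hi - lo) / levels))
    return out


block = compress_block([10, 200, 50, 120])
print(block["codes"], decompress_block(block))  # [3, 9] [10, 200, 48, 124]
```

Storing the extremes exactly keeps the block's dynamic range intact, while the remaining pixels lose only a bounded amount of precision before the image-quality correction stage reads them back.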

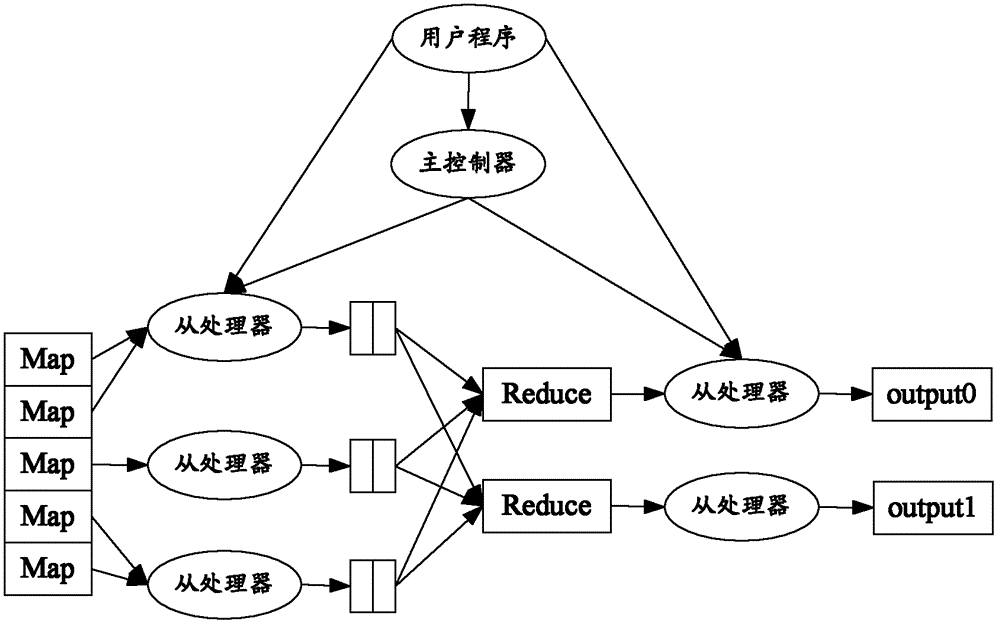

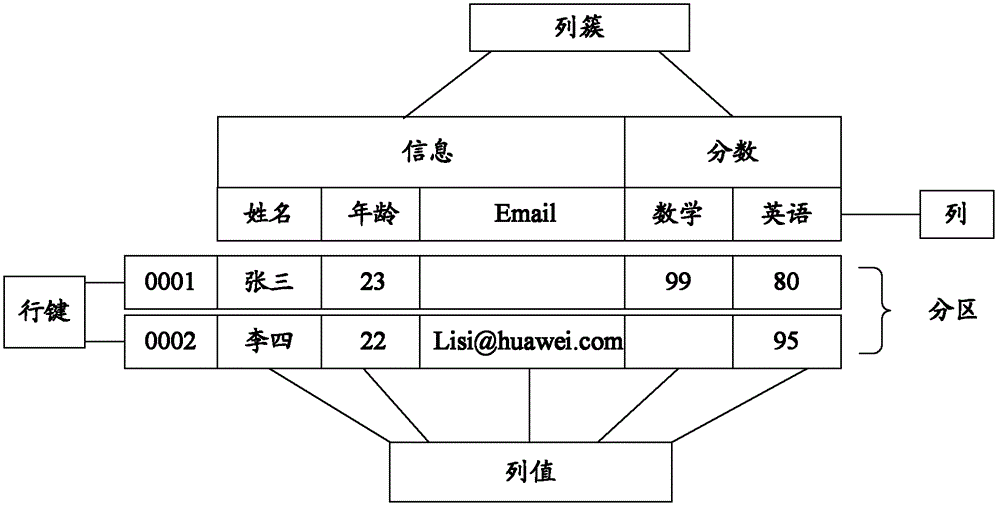

Method and apparatus for optimizing data access, method and apparatus for optimizing data storage

Active | CN102725753A | Improve processing efficiency; Reduce read; Memory addressing/allocation/relocation; Special data processing applications; Data access; Data entry

Provided are a method and an apparatus for optimizing data access, and a method and an apparatus for optimizing data storage. The method for optimizing data access comprises the following: a host controller receives a request from a user to access a data table in HBASE (Hadoop Database), the request carrying data input range information that covers a plurality of data input ranges; input partitioning information is determined according to the partitioning information of the data table and the data input range information; the number of Map tasks is determined on the basis of the input partitioning information; data in the data table read from a processor is distributed according to the number of Map tasks; and the data read from the processor is returned to the user.

Owner:HUAWEI CLOUD COMPUTING TECH CO LTD
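
One plausible reading of "input partitioning information determined from the table's partitioning and the requested ranges" is to intersect each requested row-key range with each region, producing one split per non-empty overlap. The Python sketch below assumes half-open string key ranges, ignores the open-ended first and last regions that real HBase tables have, and assumes one Map task per split; none of these details come from the abstract.

```python
from typing import List, Tuple

KeyRange = Tuple[str, str]  # half-open [start, end) row-key range


def input_splits(regions: List[KeyRange], requested: List[KeyRange]) -> List[KeyRange]:
    """Intersect each requested range with each region of the table."""
    splits = []
    for region_start, region_end in regions:
        for req_start, req_end in requested:
            start, end = max(region_start, req_start), min(region_end, req_end)
            if start < end:  # a non-empty overlap becomes one input split
                splits.append((start, end))
    return sorted(splits)


regions = [("a", "g"), ("g", "p"), ("p", "z")]
requested = [("b", "d"), ("f", "h"), ("q", "r")]
splits = input_splits(regions, requested)
print(splits, "map tasks:", len(splits))
```

Only the key ranges that were actually requested are scanned, rather than whole regions, which is where the reduction in reads would come from under this reading.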

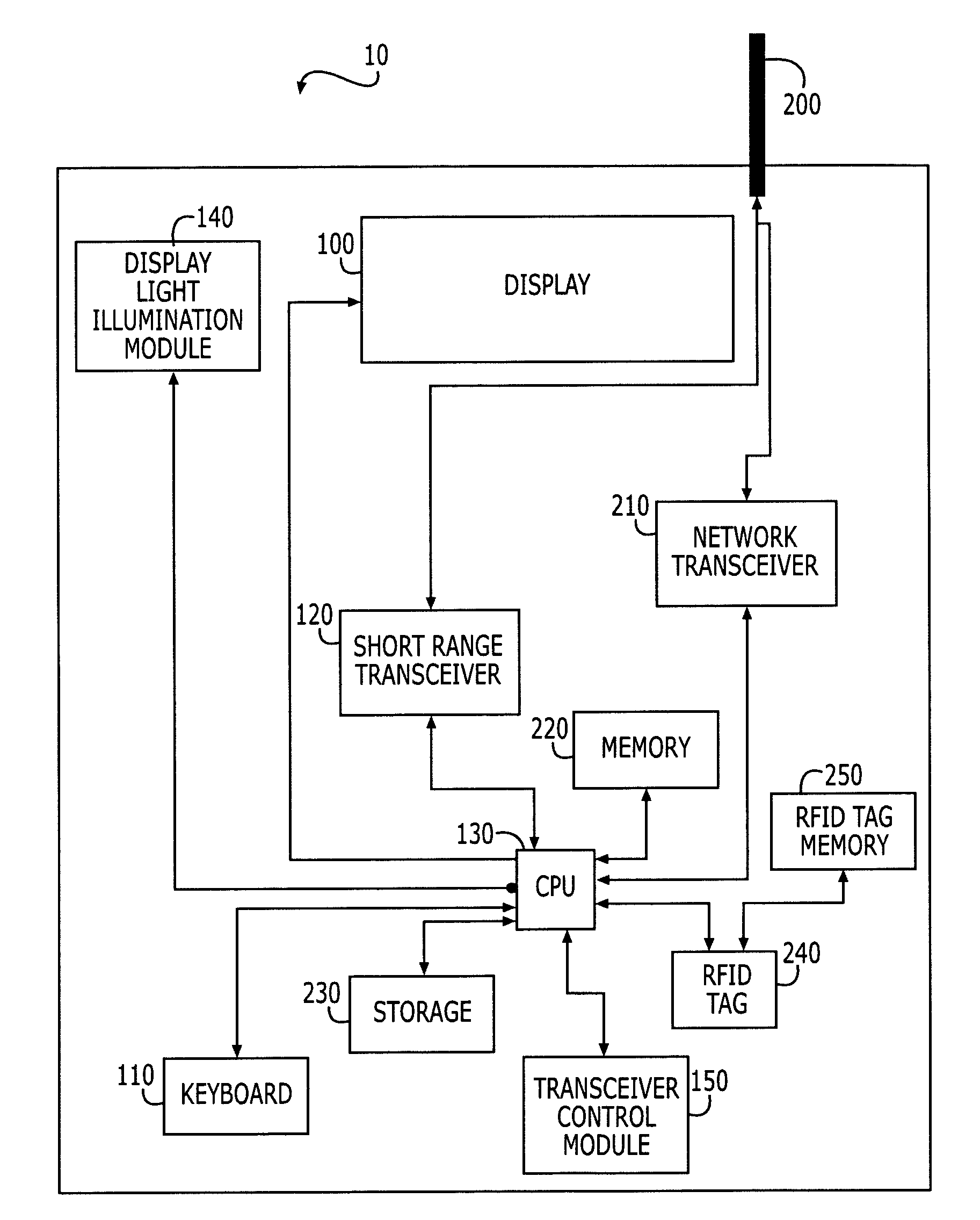

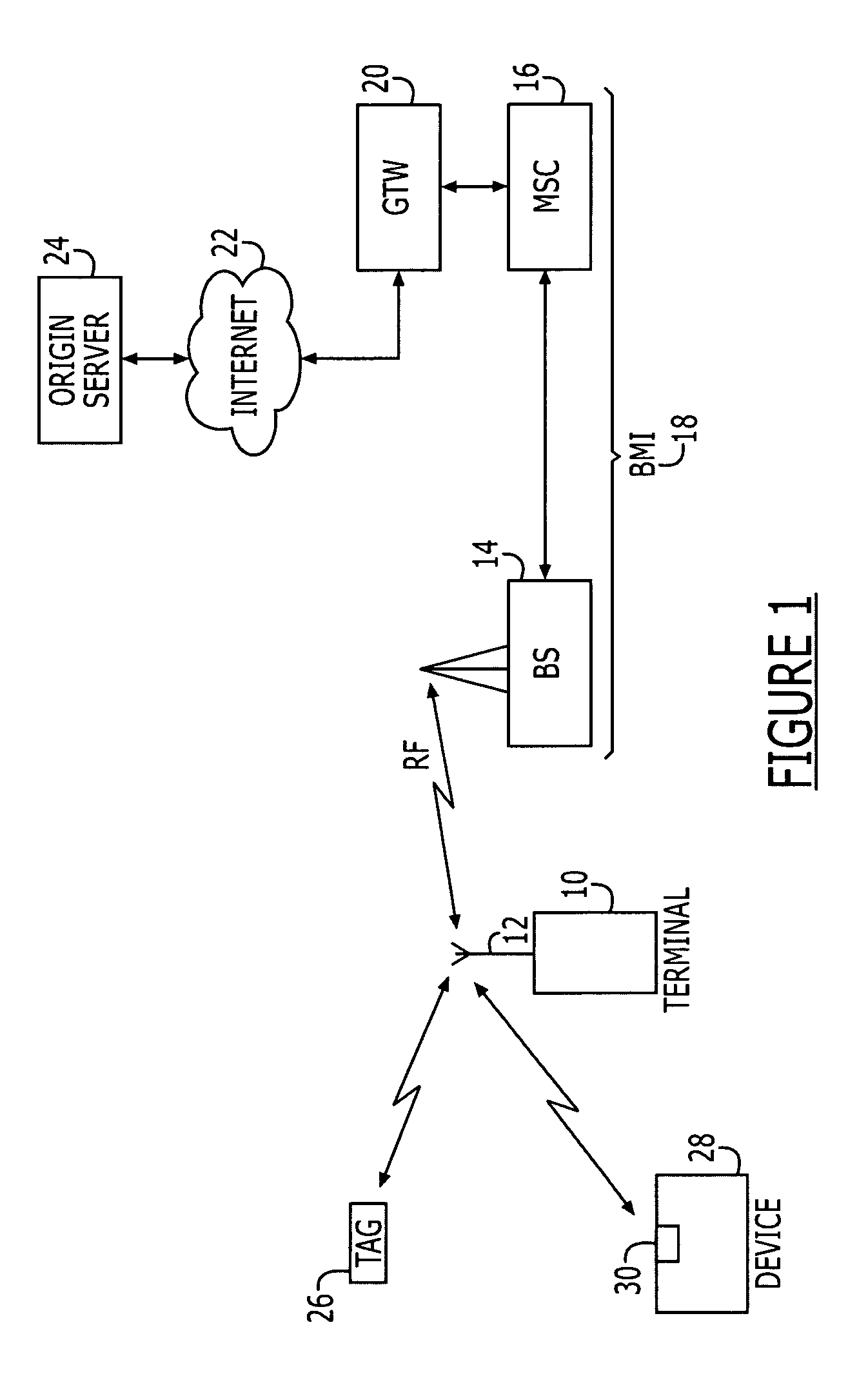

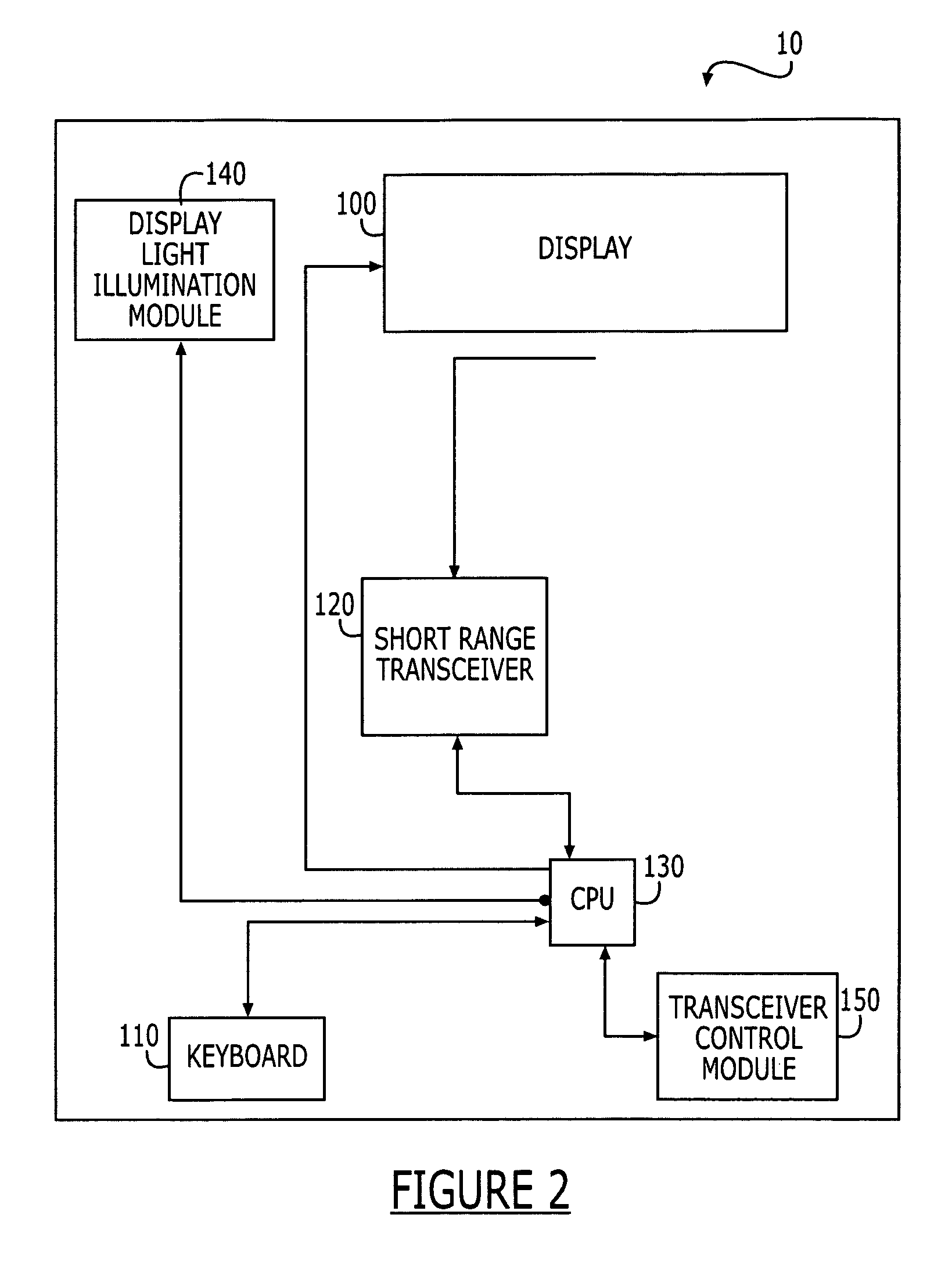

Intuitive energy management of a short-range communication transceiver associated with a mobile terminal

Inactive | US7499985B2 | Good for conservation; Less power; Energy efficient ICT; Power management; Transceiver; Computer terminal

The method, terminal and computer program product of the present invention are capable of adjusting the power consumption of short-range communication transceivers, such as RFID or IR transceivers. Energy management of the transceiver is achieved by limiting activation of the transceiver to periods of user-interface illumination. The transceiver therefore uses less power because it is activated only by an intentional gesture by the user, i.e. a gesture that initiates the illumination of a user interface. In addition, by observing the illumination, the user of the terminal is aware that the terminal is in an active transceiver reading state; the invention thus provides a safe environment in which inadvertent reading of tags or data communication is lessened.

Owner:NOKIA TECH OY

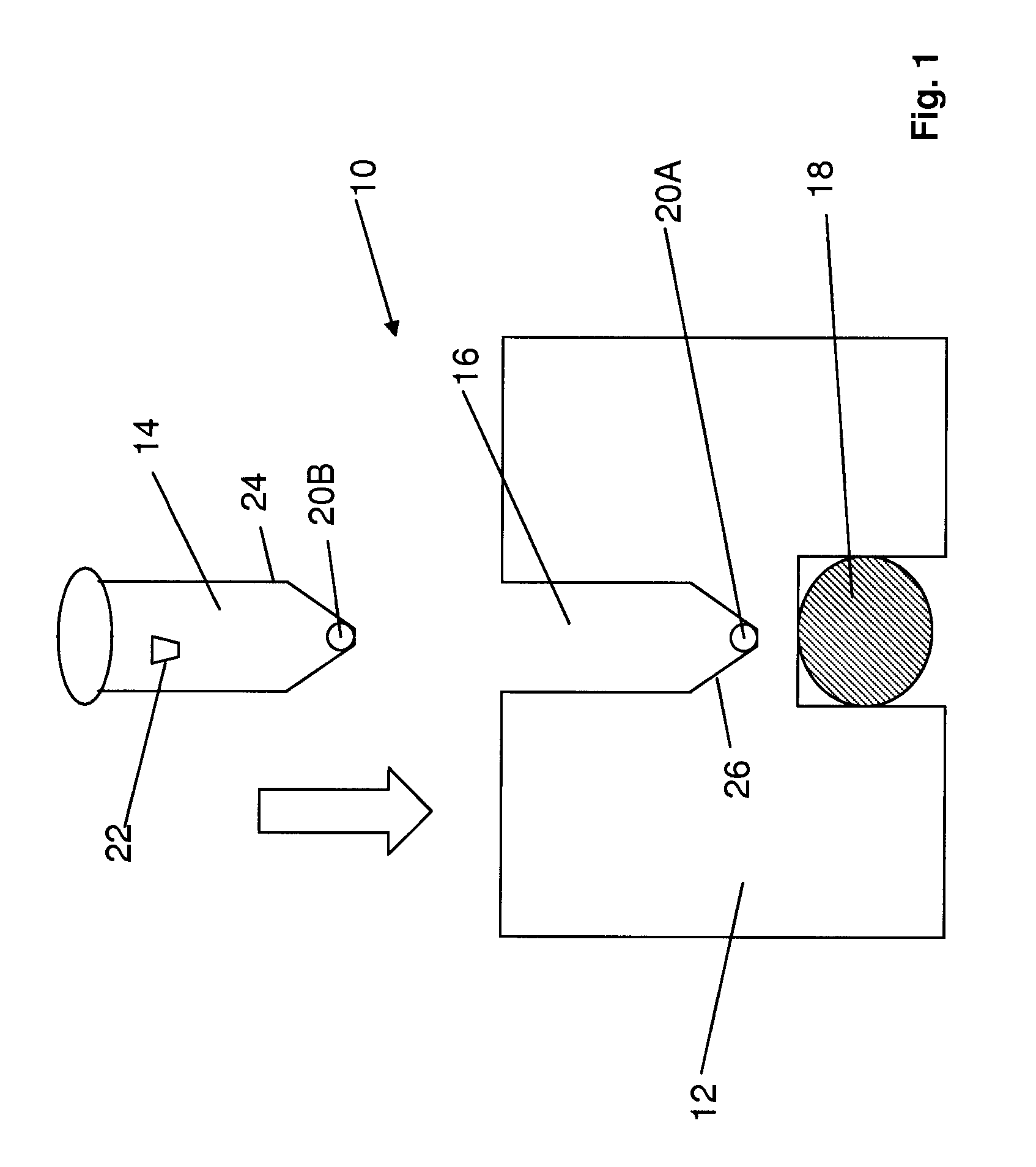

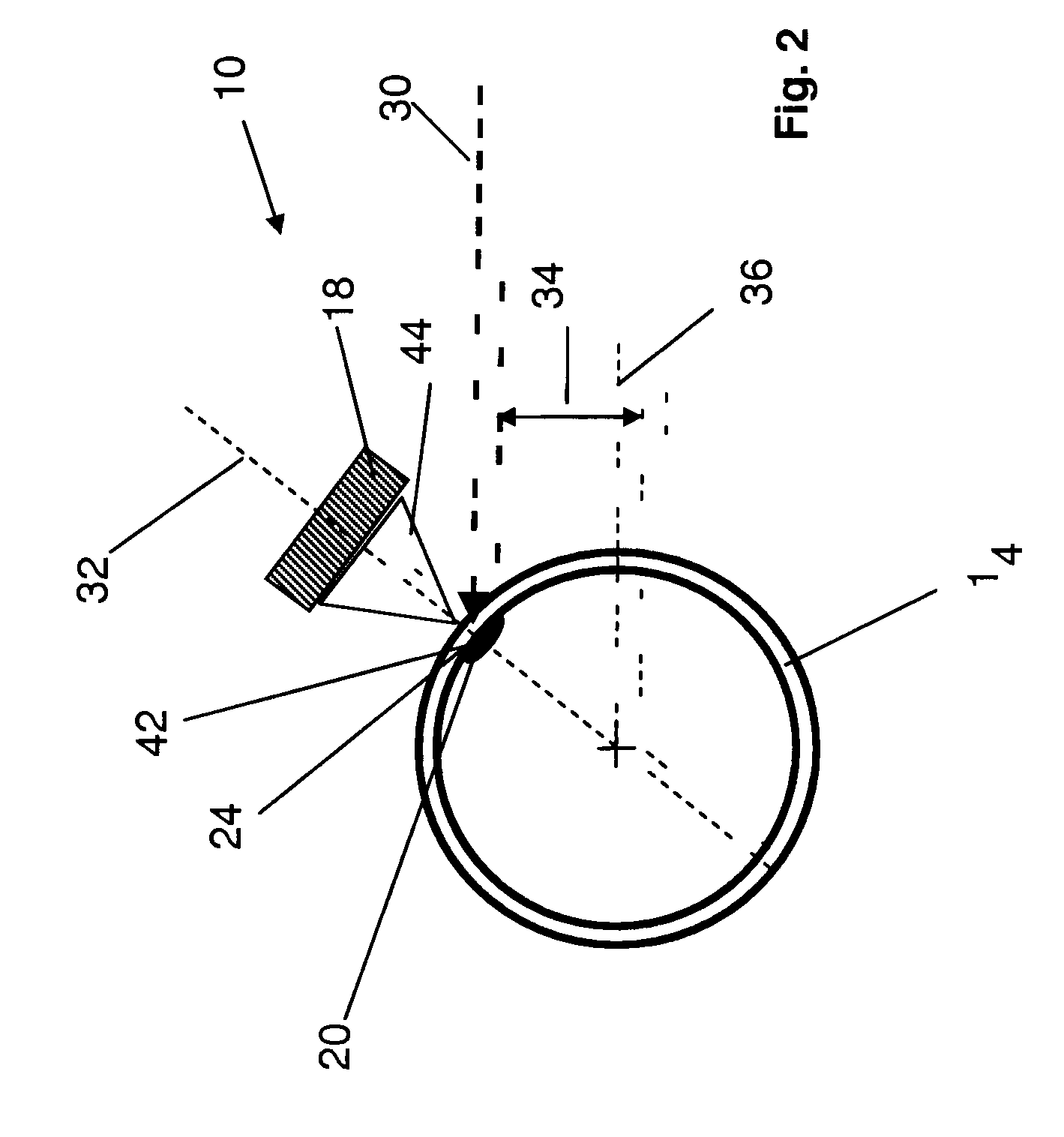

Assay particle concentration and imaging apparatus and method

Inactive | US20100060893A1 | Quantity minimization; Improved accuracy in placing the magnetic particles; Radiation pyrometry; Nanomagnetism; Imaging equipment; Acoustics

An assay apparatus having a sample vessel within which an assay may be performed. The apparatus further includes a holder having a receptacle, socket or other device configured to operatively receive the sample vessel in a precise and easily repeated location with respect to the holder. A magnet may be operatively associated with the holder such that a magnetic field generated by the magnet intersects a portion of the sample vessel defining a magnetic concentration region within the sample vessel. A separate or integrated detection or interrogation instrument, typically a spectrometer, may be provided.

Owner:BECTON DICKINSON & CO

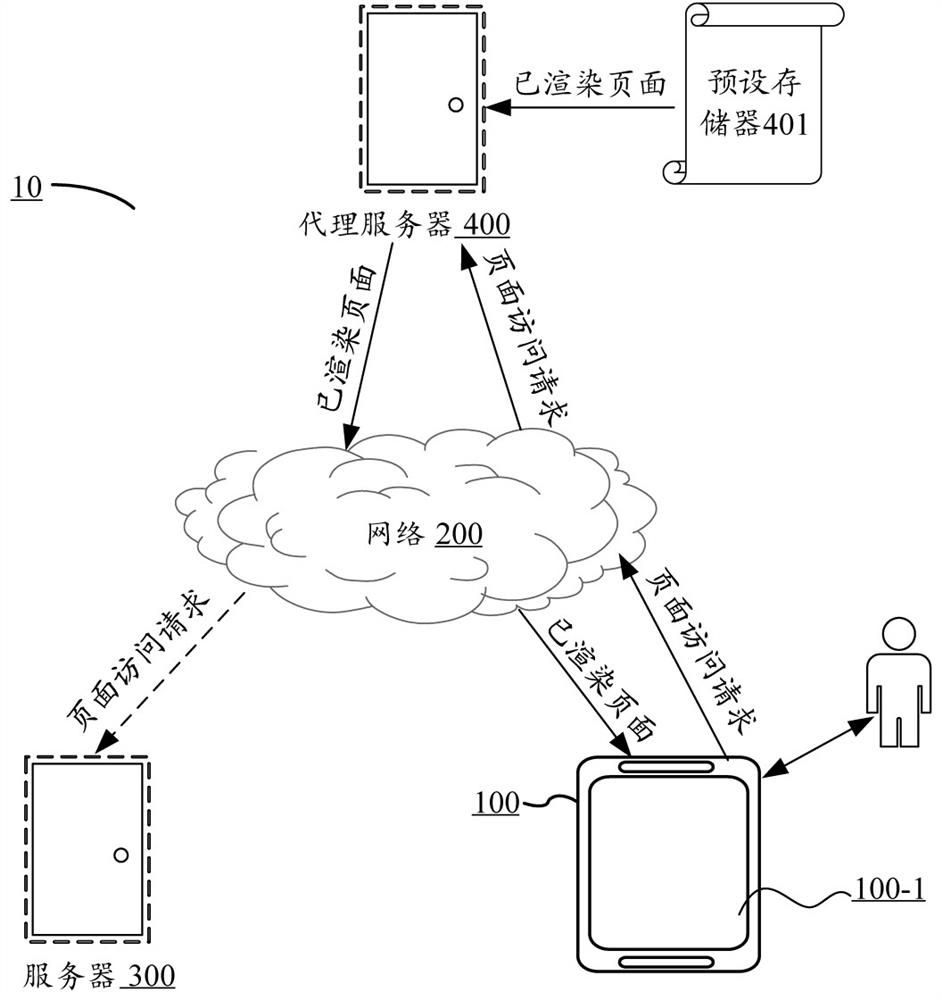

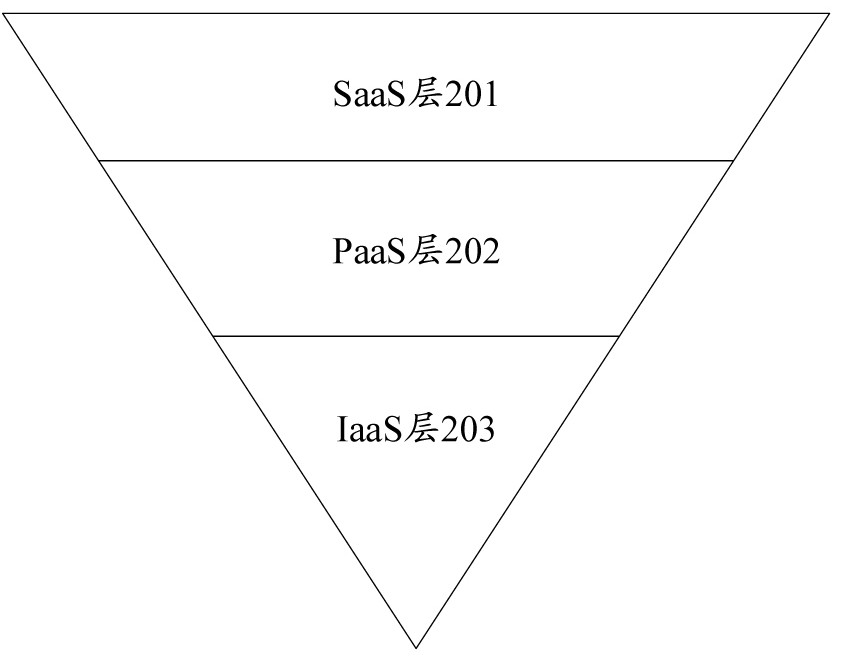

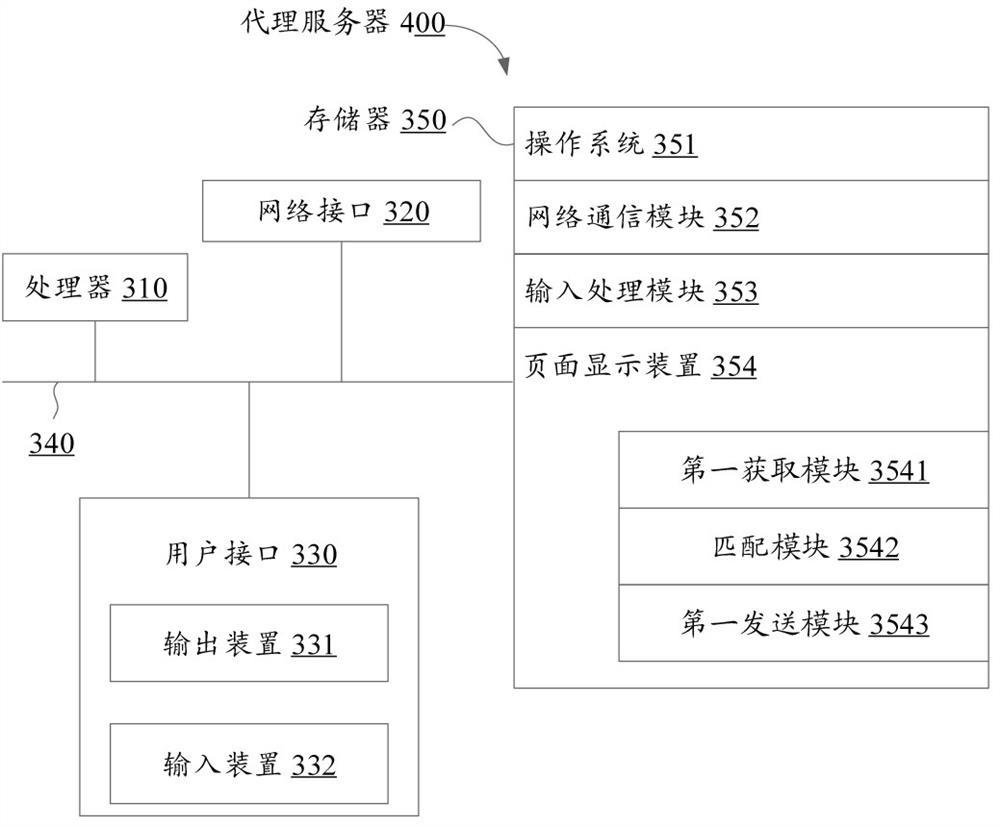

Page display method, device and equipment and computer readable storage medium

Active | CN111708600A | Improve experience; Reduce read; Website content management; Execution for user interfaces; Computer hardware; Engineering

The embodiment of the invention provides a page display method, device and equipment and a computer readable storage medium, and relates to the technical field of cloud technology. The method comprises the following steps: when a client sends a page access request containing a page identifier to a server, a proxy server obtains the page access request; a rendered page corresponding to the page identifier is matched in a preset memory; and the rendered page is taken as the access result of the page access request and sent to the client, so that the client displays the rendered page. With this embodiment, the reading and rendering time during page access can be greatly shortened, the white-screen display time is shortened, and the user experience is improved.

Owner:TENCENT TECH (SHENZHEN) CO LTD
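
The proxy behaviour can be sketched in Python as a lookup of pre-rendered pages keyed by page identifier. The abstract does not say what happens when no rendered page matches; this sketch assumes the page is rendered once via a hypothetical `render` callback and kept for later requests.

```python
from typing import Callable, Dict


class RenderedPageProxy:
    """Proxy that answers page requests from a store of pre-rendered pages."""

    def __init__(self, render: Callable[[str], str]) -> None:
        self._render = render                # hypothetical origin-side renderer
        self._rendered: Dict[str, str] = {}  # "preset memory": page id -> rendered page
        self.hits = 0

    def handle(self, page_id: str) -> str:
        page = self._rendered.get(page_id)
        if page is not None:
            self.hits += 1  # served without reading or rendering again
            return page
        # Miss handling is not described in the abstract; here the page is
        # rendered once and kept for later requests (assumption).
        page = self._render(page_id)
        self._rendered[page_id] = page
        return page
```

A repeated request for the same page identifier is then answered directly from memory, which is the step that shortens the white-screen time.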

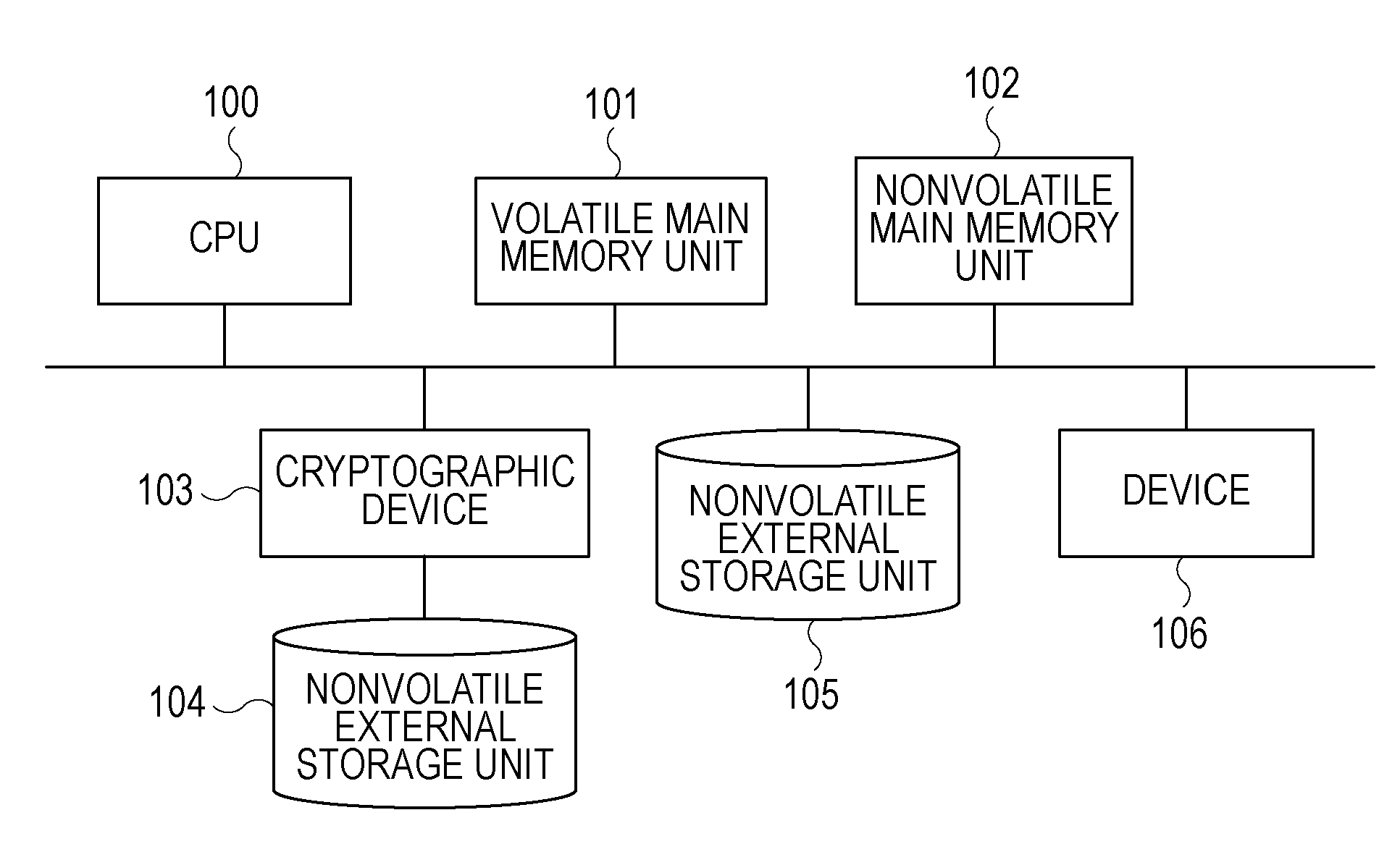

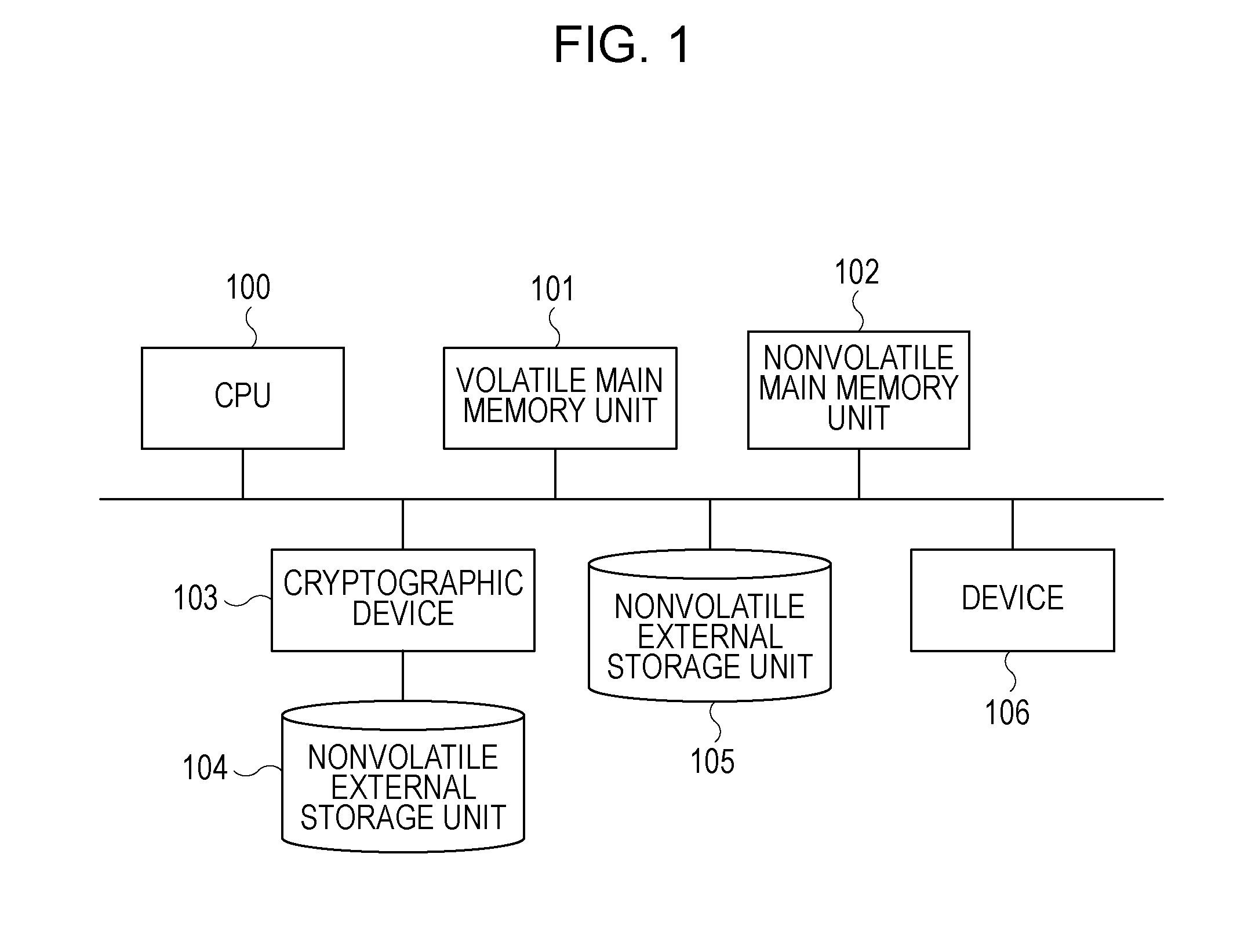

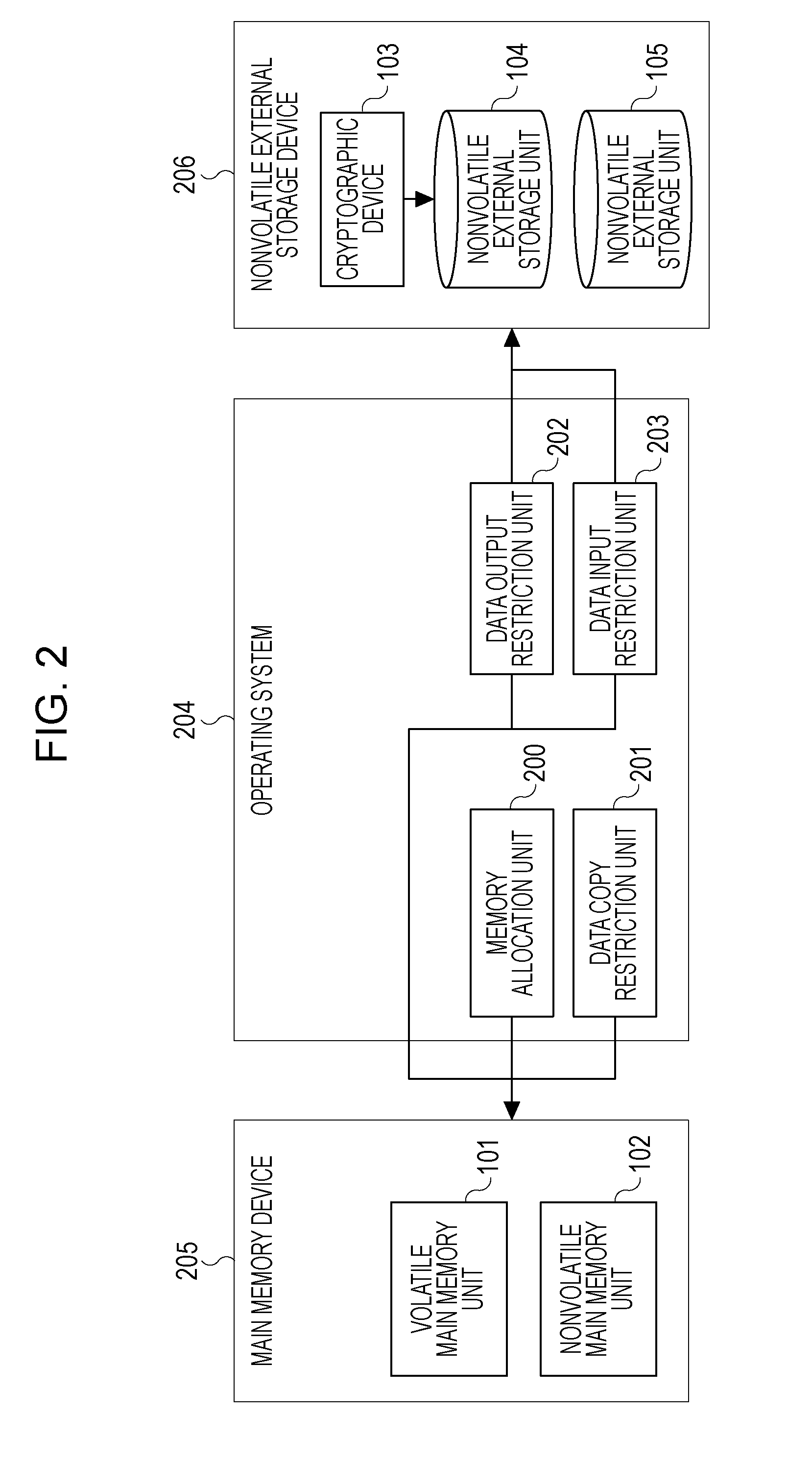

Information processing apparatus, information processing method, and storage medium

Active | US20150370704A1 | Prevent leakage; Reduce processing speed; Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Information processing; Information handling

An information processing apparatus for processing data using a main memory device and a nonvolatile secondary storage device includes a nonvolatile main memory unit, a volatile main memory unit, a determination unit that determines whether the data is designated as confidential data, and a control unit that stores the data in the volatile main memory unit if the determination unit determines that the data is designated as confidential data, and stores the data in the nonvolatile main memory unit if the determination unit determines that the data is not designated as confidential data.

Owner:CANON KK
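
The routing rule can be sketched in Python with two dictionaries standing in for the volatile and non-volatile main memory units. The determination unit is reduced to a boolean `confidential` flag, since the abstract does not say how data is designated as confidential; the class and method names are hypothetical.

```python
from typing import Any, Dict


class HybridMainMemory:
    """Routes data by confidentiality between volatile and non-volatile main memory."""

    def __init__(self) -> None:
        self.volatile: Dict[str, Any] = {}     # e.g. DRAM: contents lost at power-off
        self.nonvolatile: Dict[str, Any] = {}  # contents survive power-off

    def store(self, key: str, value: Any, confidential: bool) -> None:
        target = self.volatile if confidential else self.nonvolatile
        target[key] = value

    def power_off(self) -> None:
        # Only volatile contents disappear, so confidential data cannot linger.
        self.volatile.clear()
```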

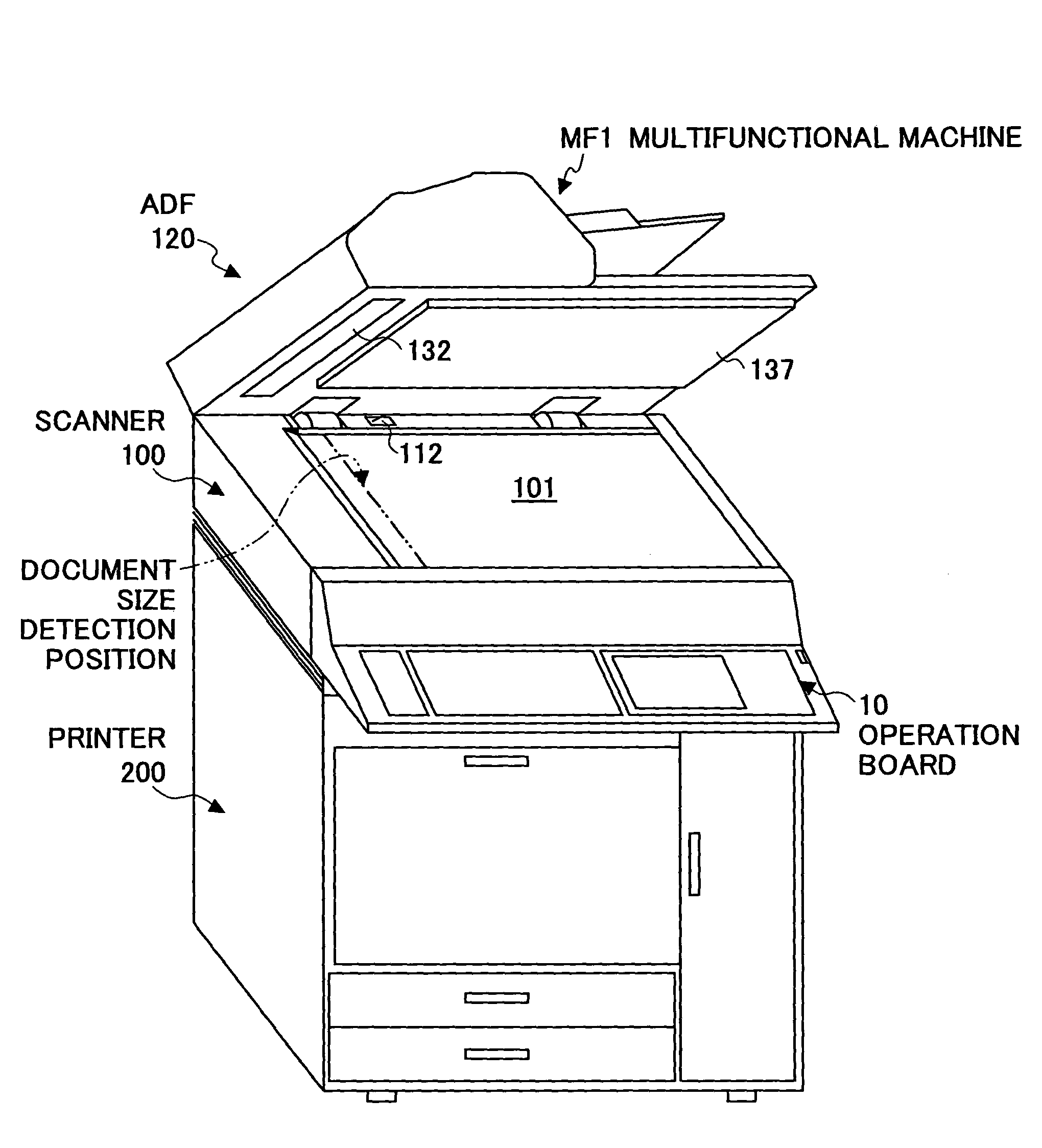

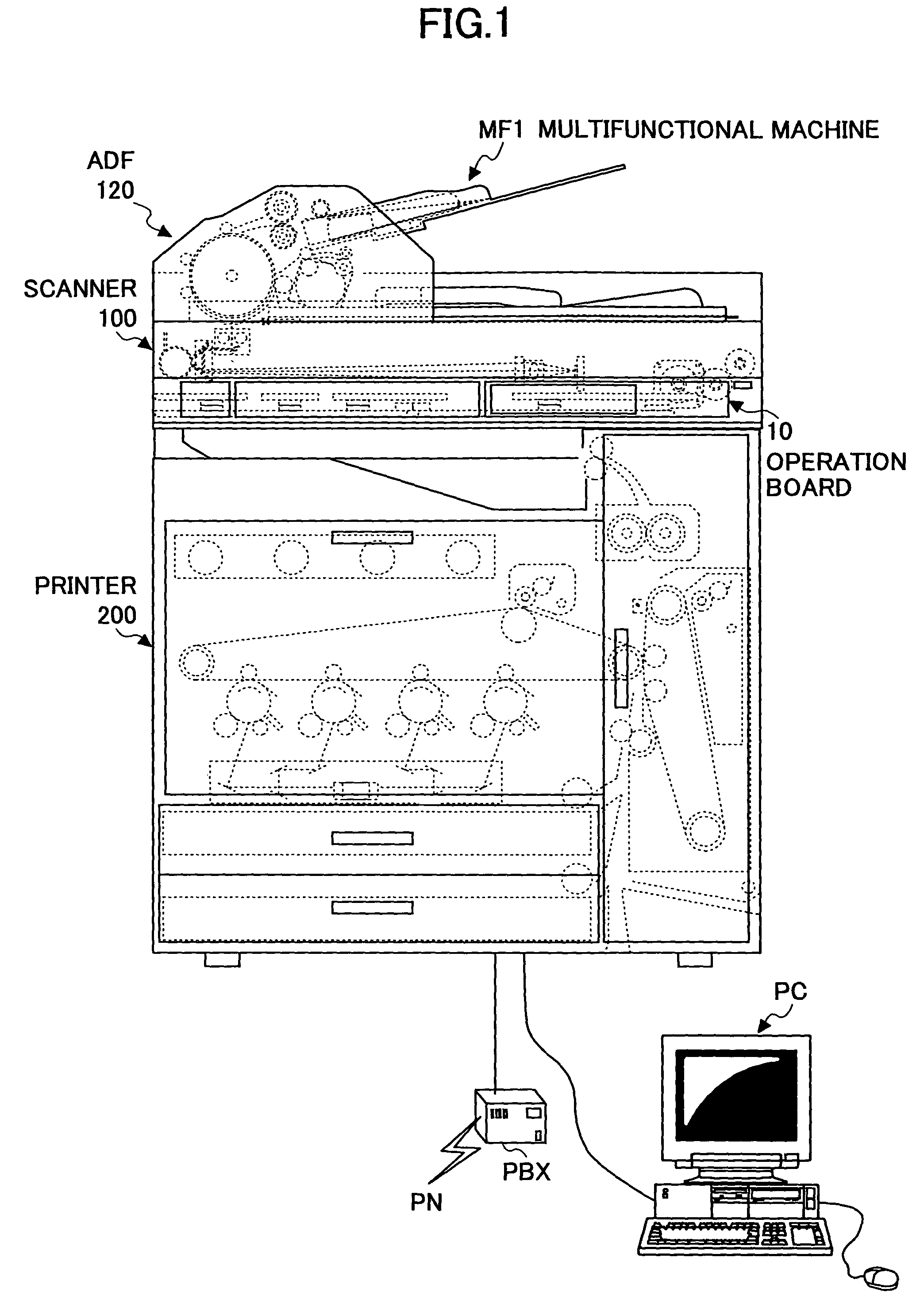

Document reading apparatus and an image formation apparatus

Inactive · US7843610B2 · Reduce the burden on · Reduce read · Digitally marking record carriers · Digital computer details · Operation mode · Time data

A document reading apparatus is disclosed. It includes a scanner; an energy-saving power supply unit; an energy-saving control unit; a clock IC; an output compensation unit that updates digital conversion parameters, including the image signal amplification gain, so that image data of a reference white board read by the scanner's CCD take a proper value; and an output compensation controlling unit. The output compensation controlling unit reads time data when the operation mode shifts from pause mode to waiting mode. It stores the digital conversion parameters updated by the output compensation unit in a non-volatile memory and, if the elapsed time since the previous operation time stored in the non-volatile memory is equal to or greater than a setup value, updates the operation time with the present time. If the elapsed time is less than the setup value, it uses the digital conversion parameters stored in the non-volatile memory as they are.

Owner:RICOH KK
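
The Ricoh abstract's reuse-or-recalibrate decision can be sketched as below. Assumptions, none taken from the source: the amplification gain is the only stored parameter, times are plain seconds, and `calibrate` is a hypothetical callback standing in for the output compensation unit reading the reference white board.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class NonVolatileStore:
    """Hypothetical contents of the non-volatile memory."""

    gain: Optional[float] = None  # stored digital conversion parameter
    last_operation_time: Optional[float] = None  # seconds (assumed unit)


def on_pause_to_waiting(
    store: NonVolatileStore,
    now: float,
    setup_value: float,
    calibrate: Callable[[], float],
) -> float:
    """Return the gain to use after shifting from pause mode to waiting mode."""
    needs_update = (
        store.gain is None
        or store.last_operation_time is None
        or now - store.last_operation_time >= setup_value
    )
    if needs_update:
        # Elapsed time >= setup value (or nothing stored yet): recalibrate,
        # persist the new parameter, and record the present time.
        store.gain = calibrate()
        store.last_operation_time = now
    # Otherwise the stored parameter is used as it is.
    return store.gain
```

Skipping calibration for short pauses is what saves the white-board read; the setup value trades that saving against drift in the stored gain.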

Method of forming a CPP magnetic recording head with a self-stabilizing vortex configuration

Inactive · US7356909B1 · Eliminating side-reading · Effective shielding · Nanomagnetism · Magnetic measurements · Magnetization · Vertical field

A method is provided for forming a CPP MTJ or GMR read sensor in which the free layer is self-stabilized by a magnetization in a circumferential vortex configuration. This magnetization permits the pinned layer to be magnetized in a direction parallel to the ABS plane, which thereby makes the pinned layer directionally more stable as well. The lack of lateral horizontal bias layers or in-stack biasing allows the formation of closely configured shields, thereby providing protection against side-reading. The vortex magnetization is accomplished by first magnetizing the free layer in a uniform vertical field, then applying a vertical current while the field is still present.

Owner:HEADWAY TECH INC

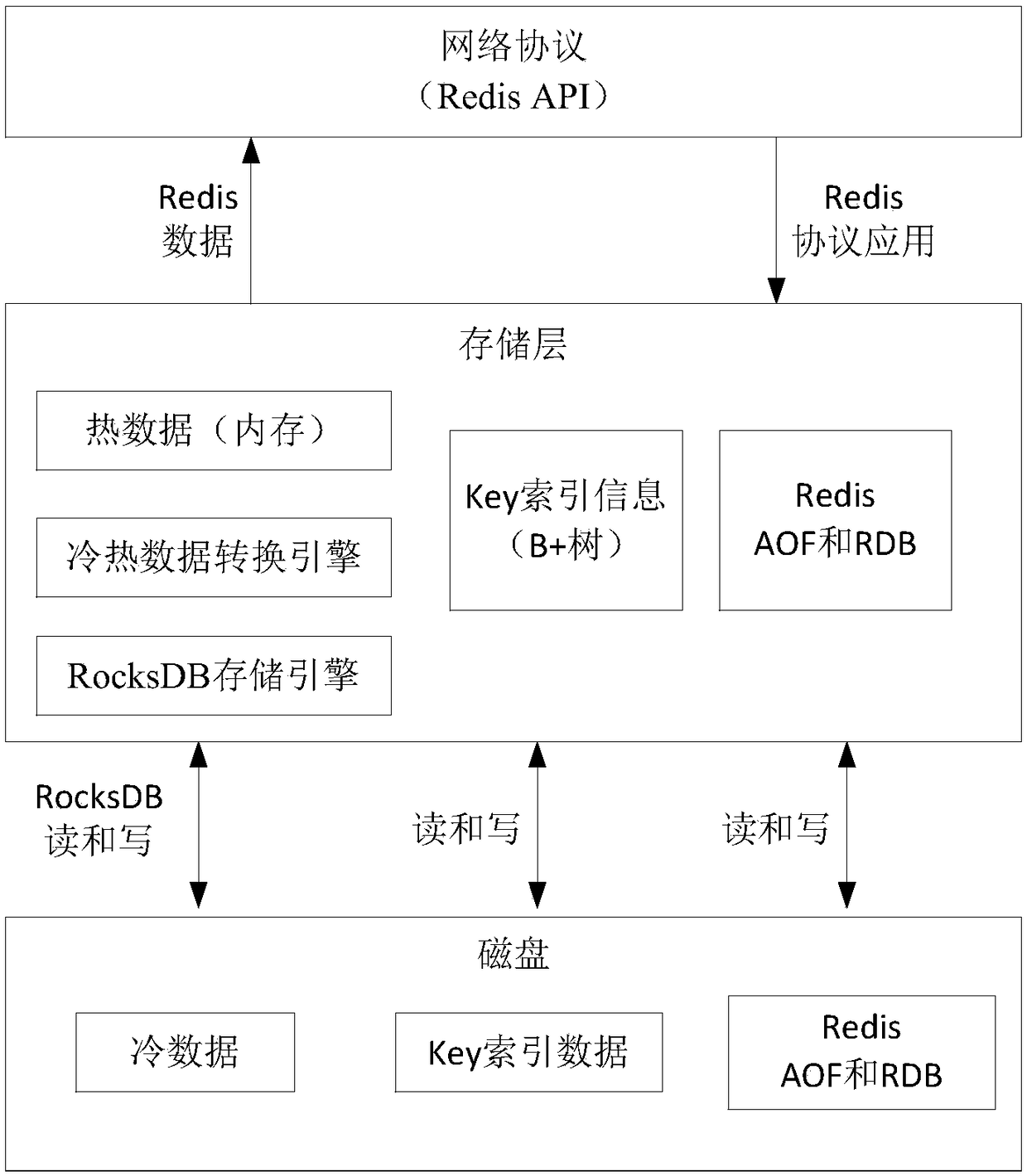

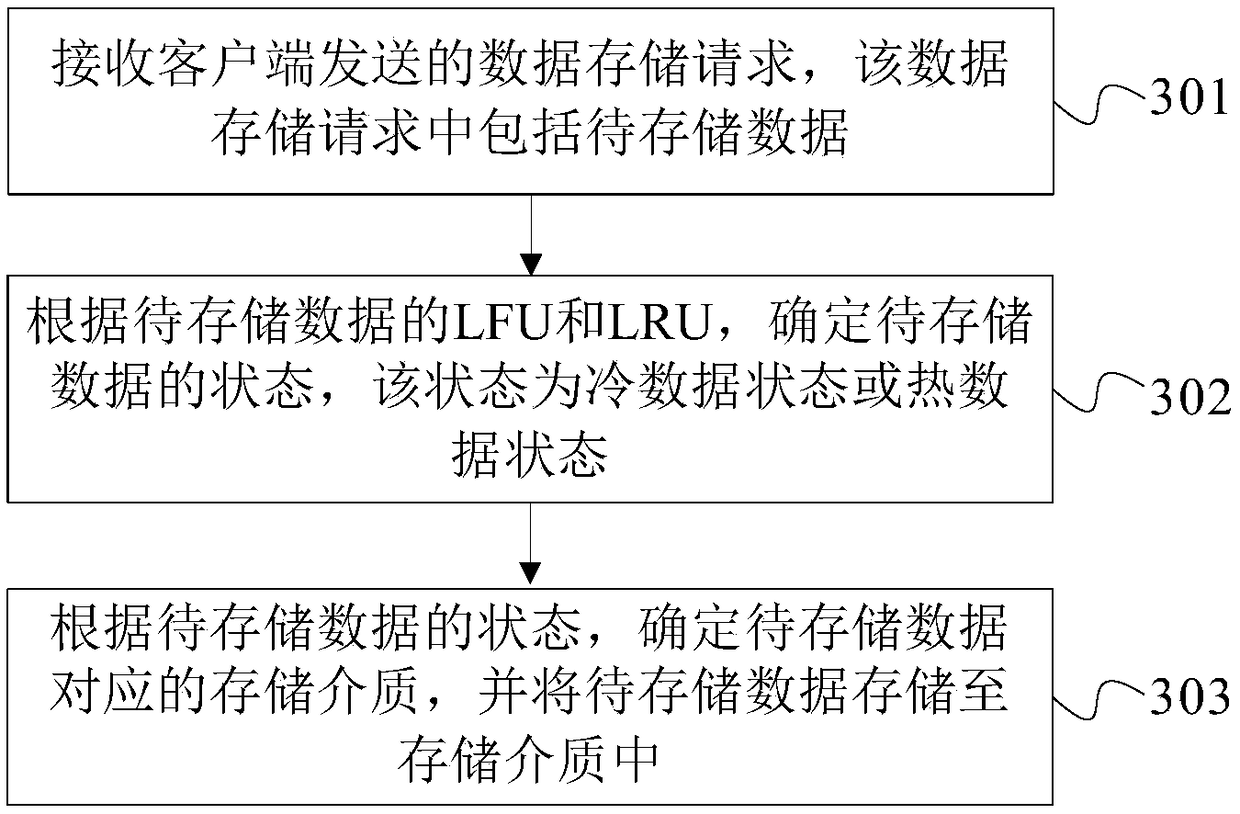

Data storage method and device, and storage medium

Inactive · CN108829344A · Improve accuracy · Reduce read · Memory architecture accessing/allocation · Input/output to record carriers · Client-side · Data store

The invention provides a data storage method and device, and a storage medium. The method comprises: receiving a data storage request sent by a client, the request containing to-be-stored data; determining, according to the LFU (least frequently used) and LRU (least recently used) metrics of the to-be-stored data, whether the data is in a cold data state or a hot data state; and, according to that state, determining the storage medium corresponding to the data and storing the data in it. The method, device, and storage medium reduce the probability of reading a magnetic disk and improve system stability.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
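
The abstract does not say how the LFU and LRU values are combined, so the following is only one plausible reading, sketched in Python: data counts as hot when it has been accessed at least `min_count` times and was last accessed within `max_idle` seconds, and as cold otherwise. Hot data goes to an in-memory tier and cold data to a dictionary standing in for the magnetic disk; the class name and both thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class AccessStats:
    count: int = 0  # LFU signal: number of recorded accesses
    last_access: float = 0.0  # LRU signal: time of the most recent access


class TieredStore:
    """Hypothetical hot/cold router; thresholds are illustrative."""

    def __init__(self, min_count: int = 3, max_idle: float = 60.0) -> None:
        self.min_count = min_count
        self.max_idle = max_idle
        self.stats: Dict[str, AccessStats] = {}
        self.memory: Dict[str, bytes] = {}  # fast tier for hot data
        self.disk: Dict[str, bytes] = {}  # stand-in for the magnetic disk

    def record_access(self, key: str, now: float) -> None:
        s = self.stats.setdefault(key, AccessStats())
        s.count += 1
        s.last_access = now

    def state(self, key: str, now: float) -> str:
        s = self.stats.get(key)
        if s is None:
            return "cold"  # never accessed
        frequent = s.count >= self.min_count
        recent = now - s.last_access <= self.max_idle
        return "hot" if frequent and recent else "cold"

    def store(self, key: str, data: bytes, now: float) -> str:
        state = self.state(key, now)
        target, other = (
            (self.memory, self.disk) if state == "hot" else (self.disk, self.memory)
        )
        other.pop(key, None)  # keep a single copy in the chosen tier
        target[key] = data
        return state
```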

Techniques for operating non-volatile memory systems with data sectors having different sizes than the sizes of the pages and/or blocks of the memory

Inactive · US7171513B2 · Efficient use of · Improve performance · Memory architecture accessing/allocation · Memory addressing/allocation/relocation · Computer science · Non-volatile memory

A non-volatile memory system, such as a flash EEPROM system, is disclosed in which the memory is divided into a plurality of blocks and each block into one or more pages, with the sectors of data stored therein differing in size from both the pages and the blocks. One specific technique packs more sectors into a block than the block has pages. Error correction codes and other attribute data for a number of user data sectors are preferably stored together, in pages and blocks separate from the user data.

Owner:SANDISK TECH LLC
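
To make the sector-packing idea concrete, the arithmetic below uses a hypothetical geometry (528-byte pages, 32 pages per block, 512-byte user-data sectors) and assumes, as the abstract suggests, that ECC and attribute data live in separate pages or blocks so the data pages hold only user data. Packing sectors back to back then fits 33 sectors into a 32-page block, with some sectors straddling a page boundary. The function names are illustrative.

```python
from typing import List, Tuple


def sectors_per_block(page_bytes: int, pages_per_block: int, sector_bytes: int) -> int:
    # Sectors are packed back to back, so a sector may straddle two pages.
    return (page_bytes * pages_per_block) // sector_bytes


def sector_extents(
    sector_index: int, page_bytes: int, sector_bytes: int
) -> List[Tuple[int, int, int]]:
    """Return (page, start_offset, end_offset) pieces for one packed sector."""
    start = sector_index * sector_bytes
    end = start + sector_bytes
    pieces: List[Tuple[int, int, int]] = []
    while start < end:
        page = start // page_bytes
        page_base = page * page_bytes
        chunk_end = min(end, page_base + page_bytes)
        pieces.append((page, start - page_base, chunk_end - page_base))
        start = chunk_end
    return pieces
```

For example, `sector_extents(1, 528, 512)` returns `[(0, 512, 528), (1, 0, 496)]`: the second sector occupies the last 16 bytes of page 0 and continues into page 1.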

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com