Multi-target behavior action recognition and prediction method, electronic equipment and storage medium

An action recognition and prediction method, applied in the fields of character and pattern recognition, measurement devices, and instruments. It addresses the shortcomings of existing approaches, which recognize or predict only a few behavior categories, are costly, and are inaccurate, and it improves both the accuracy and the number of recognizable behavior categories.


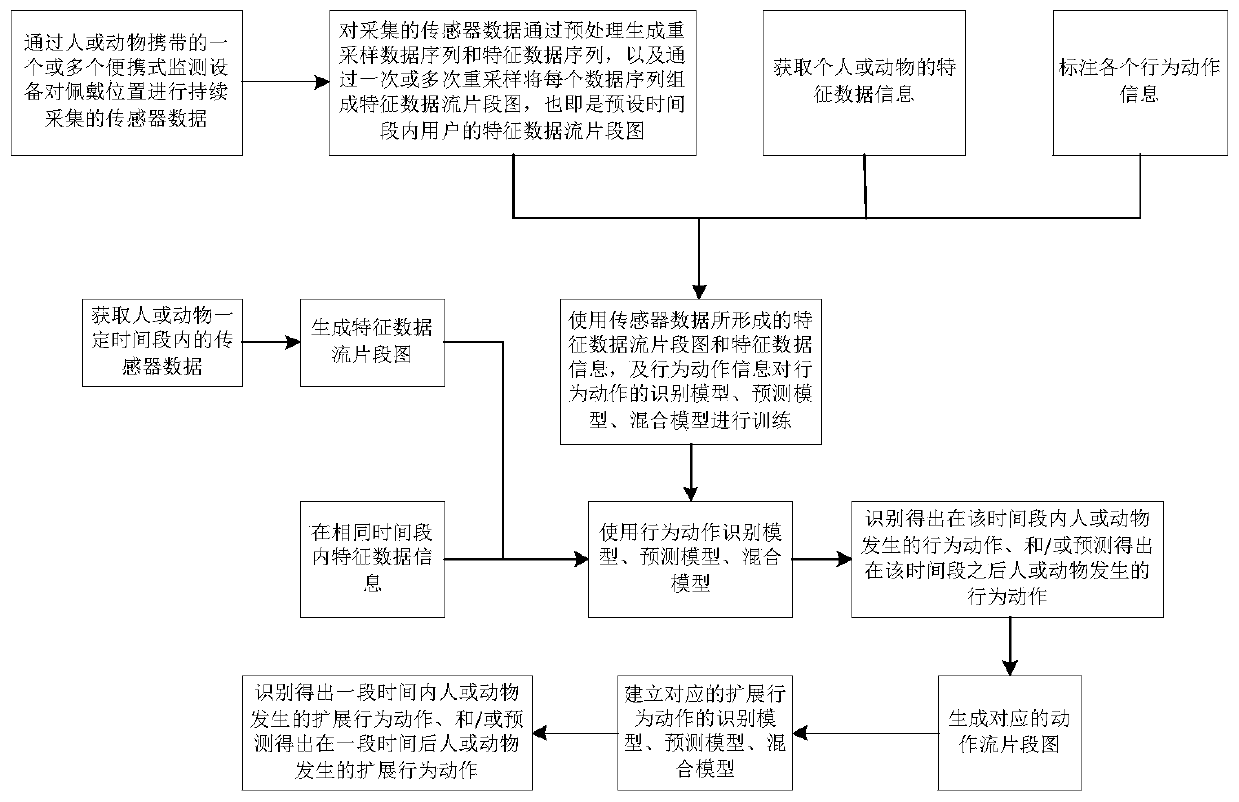


Examples

Embodiment 1

[0078] Example 1: Scratching an itch

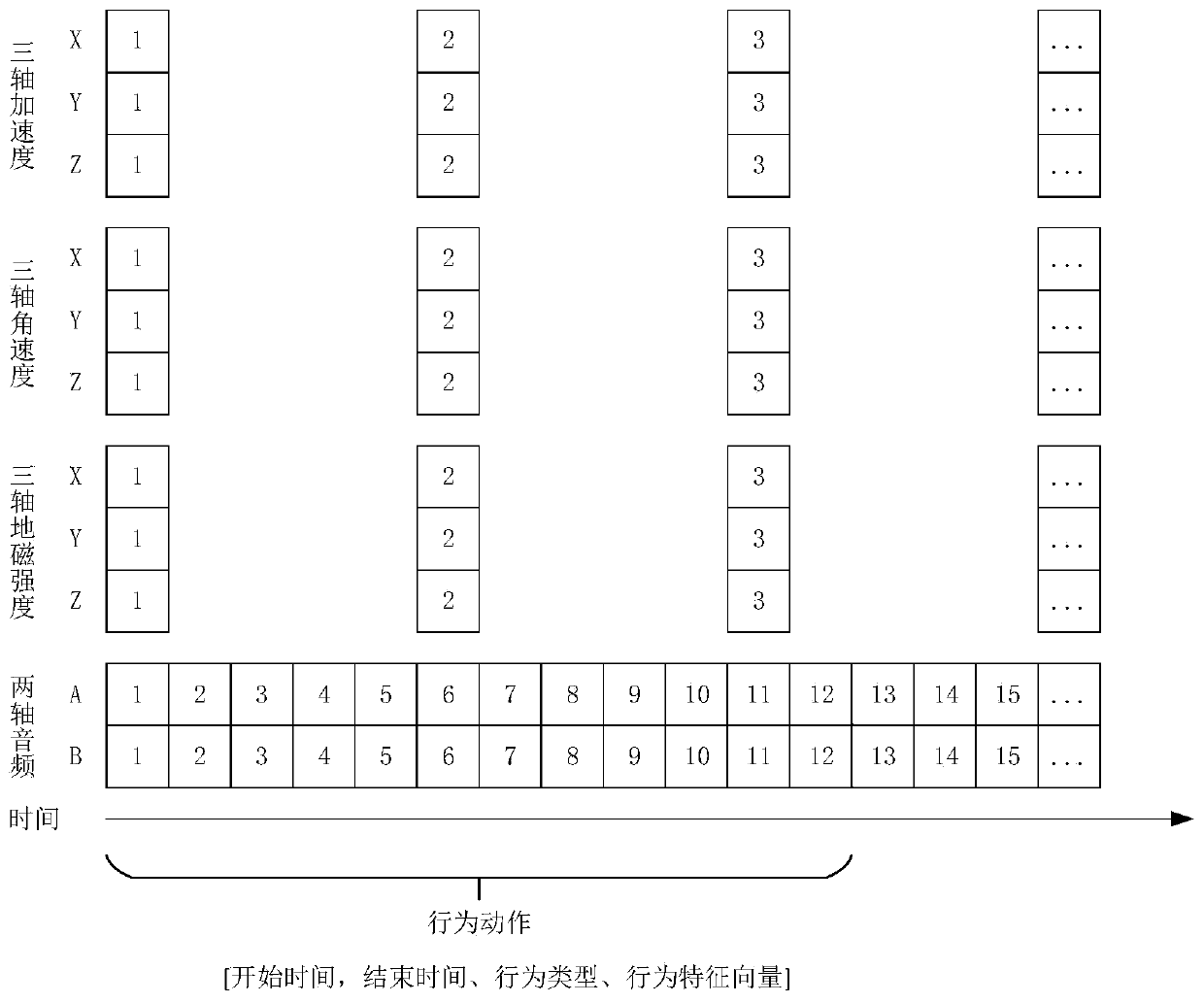

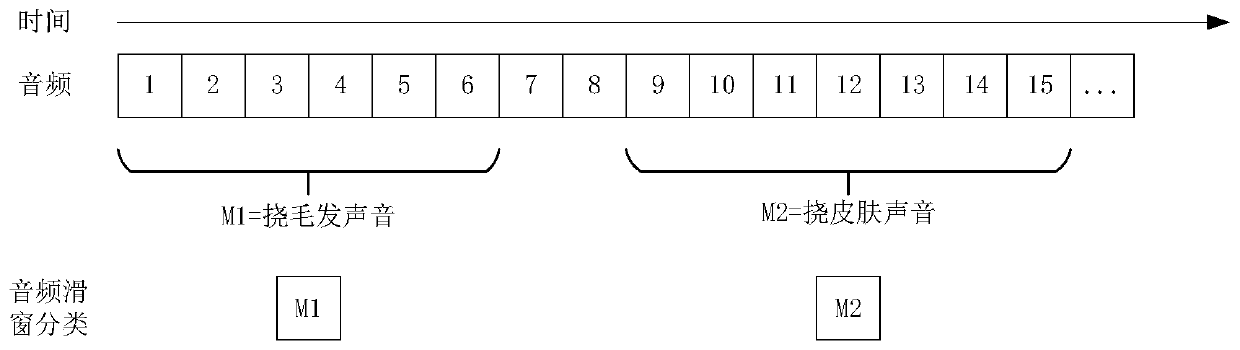

[0079] Mosquito bites, bacteria, trauma, nervous tension, and similar causes can produce local itching of the skin at different positions on the body. When itching occurs, humans and some animals respond by scratching. Recording the time, location, and frequency of this behavior makes later analysis of environmental, drug-related, and psychological conditions more valuable. This embodiment targets the identification and prediction of basic behavior actions, which have a short time span, so the user's behavior can be reflected directly in the data detected by a portable monitoring device carried by the person.
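The text does not disclose how scratching is detected from the portable device's data. Purely as an illustration, the sketch below assumes a wrist-worn 3-axis accelerometer and treats scratching as a short, strong, repetitive oscillation. The function `detect_scratch_windows`, the 1-second window, the energy threshold, and the 2 to 8 Hz band are all hypothetical choices, not the patented method.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Sample = Tuple[float, float, float]  # assumed (x, y, z) acceleration in m/s^2


@dataclass
class WindowResult:
    start: int           # index of the first sample in the window
    energy: float        # RMS of the magnitude after removing its mean
    crossings_hz: float  # oscillation frequency estimated from zero crossings
    is_scratch: bool     # True if the window looks like scratching


def magnitude(sample: Sample) -> float:
    """Orientation-independent acceleration magnitude."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)


def detect_scratch_windows(
    samples: Sequence[Sample],
    rate_hz: float,
    window_s: float = 1.0,
    min_energy: float = 0.5,
    freq_band: Tuple[float, float] = (2.0, 8.0),
) -> List[WindowResult]:
    """Hypothetical scratch detector over non-overlapping fixed windows.

    A window is flagged when the motion is both strong (RMS >= min_energy)
    and repetitive at a rate inside freq_band. Thresholds are illustrative.
    """
    win = int(rate_hz * window_s)
    if win < 2:
        raise ValueError("window must contain at least two samples")
    duration = win / rate_hz  # actual window length in seconds
    results: List[WindowResult] = []
    for start in range(0, len(samples) - win + 1, win):
        mags = [magnitude(s) for s in samples[start:start + win]]
        mean = sum(mags) / win
        centered = [m - mean for m in mags]  # remove gravity / posture offset
        energy = math.sqrt(sum(c * c for c in centered) / win)
        # Count sign changes; each full oscillation produces two of them.
        crossings = sum(
            1 for a, b in zip(centered, centered[1:]) if (a < 0) != (b < 0)
        )
        freq = crossings / 2.0 / duration
        is_scratch = energy >= min_energy and freq_band[0] <= freq <= freq_band[1]
        results.append(WindowResult(start, energy, freq, is_scratch))
    return results
```

For example, on a 50 Hz stream, one still second followed by one second of a 4 Hz, 3 m/s^2 oscillation produces the flags `[False, True]`. Using the magnitude rather than a single axis keeps the sketch insensitive to how the device is worn.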

[0080] With existing technology, the most direct approach is to film the user with a camera and judge the behavior through human posture recognition. However, this requires the user to stay within the camera frame, and multiple cameras shooting from different angles are needed in order to accurately obtain the scrat...

Embodiment 2

[0122] Example 2: Going to the toilet

[0123] Actions such as washing, urinating, and defecating are extended behavior actions (hereinafter also referred to as long-term behavior actions), which people with normal physical function repeat every day. Whether a person washes their hands before meals, and how many times they urinate and defecate each day, are closely related to their health. Washing and urinating are generally performed in the bathroom, and these actions usually last a long time, from tens of seconds to tens of minutes. They are difficult to identify directly from sensor data, or even from extracted feature data. The main reasons are, first, that these actions have no fixed duration and, second, that their long time span produces a huge volume of raw data. Recording the time, location, and frequency of these behaviors will greatly help later analysis of diet, exercise, and health status.
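The passage names two obstacles (no fixed duration and a large volume of raw data) but this excerpt does not say how the method overcomes them. One common way to sidestep both, shown here only as an assumption, is to first classify short fixed windows into basic labels and then merge runs of the same label into variable-length episodes. The `Episode` type, `merge_into_episodes`, and the `max_gap` / `min_length` parameters are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Episode:
    label: str
    start: int  # index of the first window in the episode
    end: int    # index of the last window (inclusive)

    @property
    def length(self) -> int:
        """Number of windows spanned, including bridged unlabeled gaps."""
        return self.end - self.start + 1


def merge_into_episodes(
    labels: Sequence[Optional[str]], max_gap: int = 2, min_length: int = 3
) -> List[Episode]:
    """Group per-window labels into variable-length episodes (hypothetical).

    Consecutive windows with the same label are merged. Up to max_gap
    unlabeled (None) windows are bridged if the same label resumes; a
    different label ends the current episode. Episodes shorter than
    min_length windows are discarded as noise.
    """
    episodes: List[Episode] = []
    current: Optional[Episode] = None
    for i, label in enumerate(labels):
        if label is None:
            continue  # no confident label for this window
        gap = i - current.end - 1 if current is not None else None
        if current is not None and label == current.label and gap <= max_gap:
            current.end = i  # extend the running episode
        else:
            if current is not None and current.length >= min_length:
                episodes.append(current)
            current = Episode(label, i, i)
    if current is not None and current.length >= min_length:
        episodes.append(current)
    return episodes
```

Because only one label per window is kept, the long raw sensor stream is reduced to a short list of (label, start, end) records whose duration is free to vary.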

[...

Embodiment 3

[0200] The present invention also provides an electronic device comprising a memory, a processor, and a computer program stored in the memory and executable on the processor, wherein the processor, when executing the program, implements the steps of the multi-target behavior action recognition and prediction method described herein.
