Autonomous unmanned system position identification and positioning method based on sequence image features

The method applies sequence-image recognition and positioning technology to the field of mobile robots. It addresses problems such as heavy computation, very large maps, and high time consumption, and it improves robustness.

Embodiment 1

[0054] Embodiment 1: The present invention is further described below with reference to the drawings and embodiments.

[0055] Visual position recognition is a method based on two-dimensional images. The images used in the present invention are all RGB images acquired by an ordinary monocular camera, and each data set includes at least two groups of images collected along the same route at different times and from different perspectives. Position recognition from an image sequence relies on the fact that the robot's motion is continuous in time and space, so images collected at nearby times can be assumed to be highly similar. Consequently, the matches for the frames adjacent to the current frame can be found within the neighborhood of the current frame's best-matching image.
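The continuity assumption in [0055] can be illustrated with a minimal sketch. It is not the patented procedure, whose details are not given in this excerpt. The sketch assumes that each image has already been reduced to a feature vector, that cosine similarity is the comparison measure, and that a hypothetical `window` parameter bounds how far the match may move between consecutive frames. The names `cosine_similarity` and `match_sequence` are illustrative only.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length feature vectors (assumed measure)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = sqrt(sum(x * x for x in a))
    norm_b = sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def match_sequence(query_feats, ref_feats, window=2):
    """Return, for each query frame, the index of its best reference frame.

    The first query frame is matched against the whole reference sequence.
    Every later frame is matched only against reference frames within
    `window` positions of the previous frame's best match, which is the
    neighborhood search that motion continuity makes plausible.
    """
    matches = []
    prev = None
    for q in query_feats:
        if prev is None:
            candidates = range(len(ref_feats))  # global search for frame 0
        else:
            lo = max(0, prev - window)
            hi = min(len(ref_feats), prev + window + 1)
            candidates = range(lo, hi)  # local search near previous match
        best = max(candidates, key=lambda j: cosine_similarity(q, ref_feats[j]))
        matches.append(best)
        prev = best
    return matches
```

Restricting the search to a neighborhood reduces the number of comparisons per frame from the full reference length to at most `2 * window + 1`. It also prevents a jump to a distant reference frame that merely looks similar.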

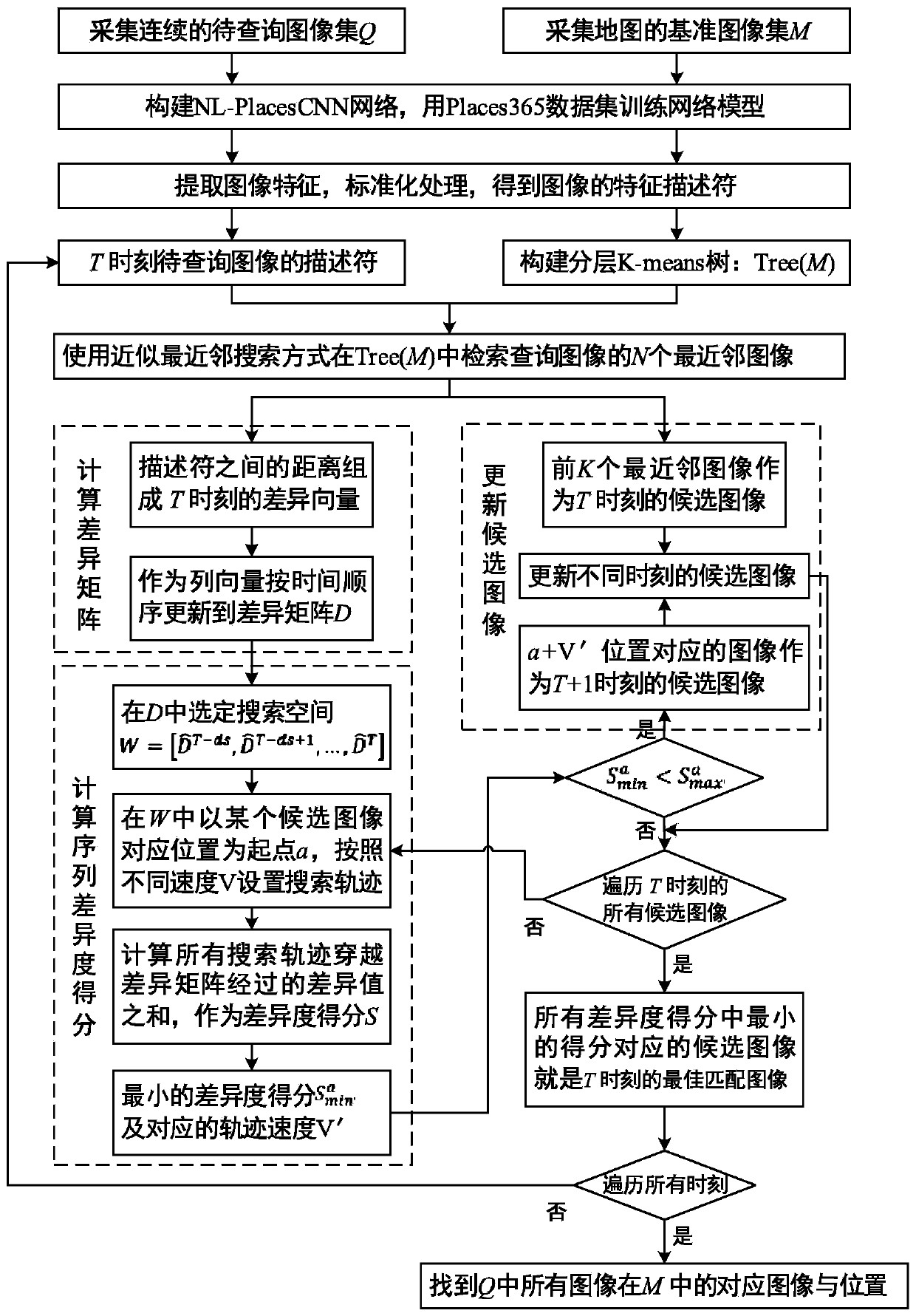

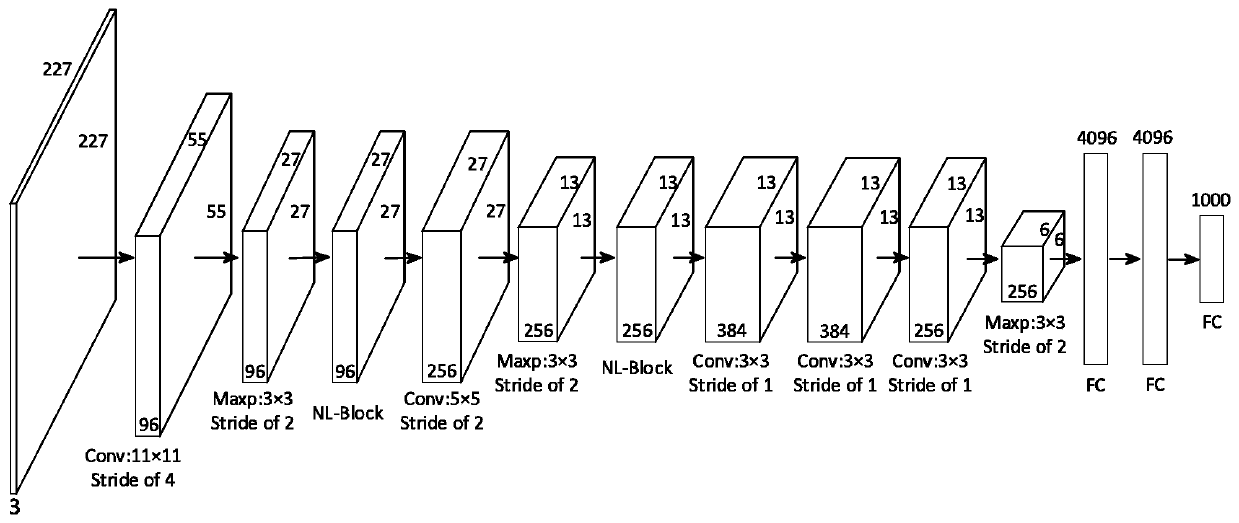

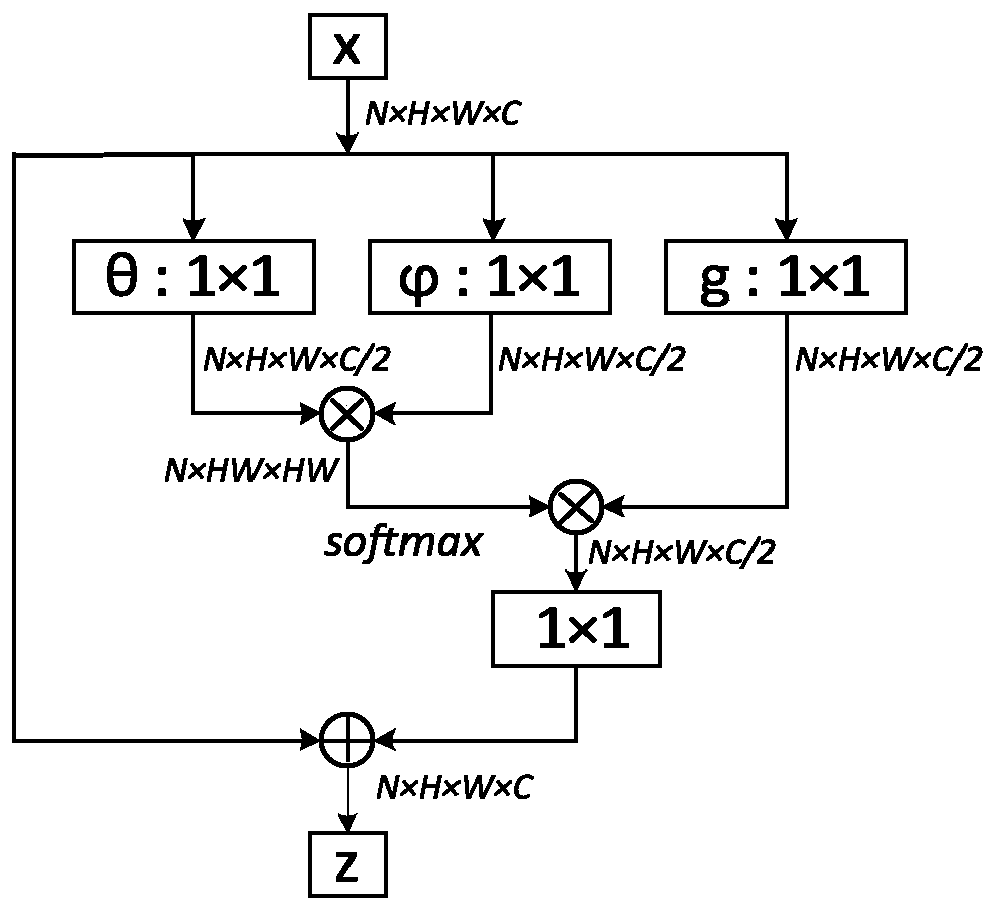

[0056] Figure 1 shows a flow chart of the present invention, a method for identifying and locating the position of an autonomous unmanned system based on sequen...
