Breast cancer image recognition method and system based on multi-stage multi-feature deep fusion

An image recognition and multi-feature technology, applied in the field of information processing. It addresses problems such as high complexity, high time cost, and easy loss of identification information, with the effects of lowering machine hardware configuration requirements, reducing time and equipment costs, and reducing the number of model parameters.

Examples

Embodiment 1

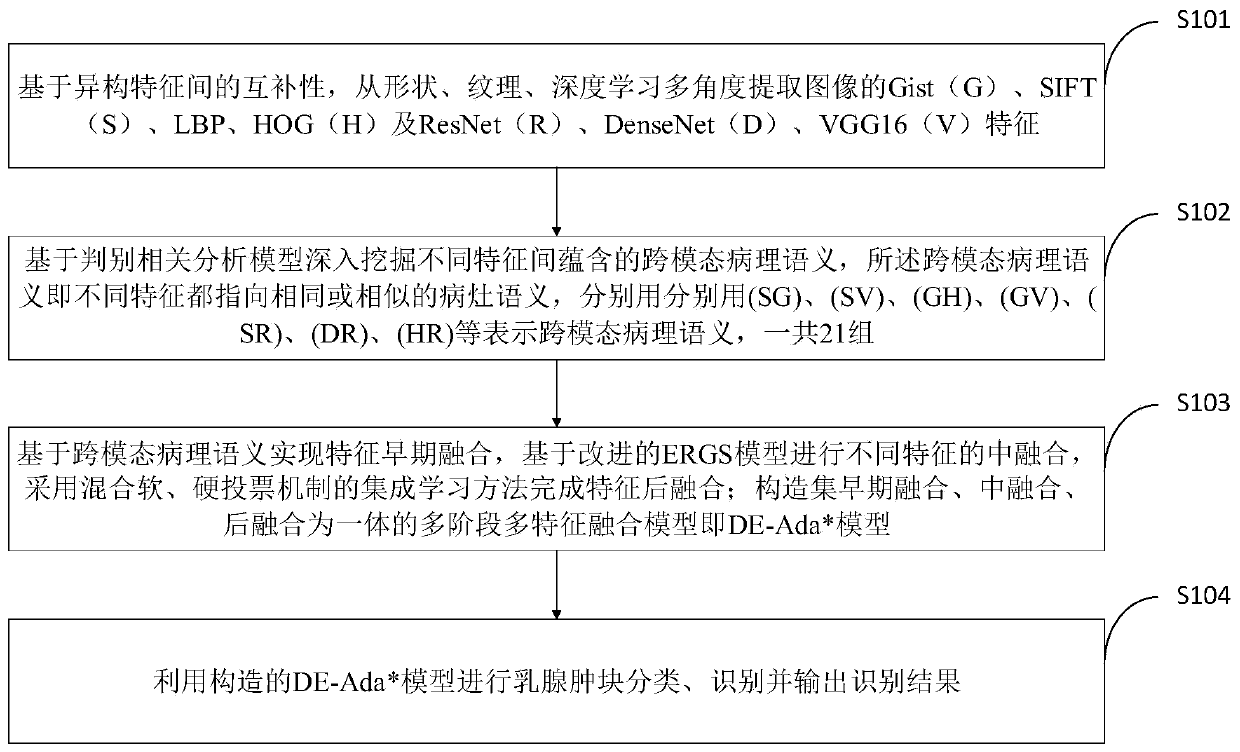

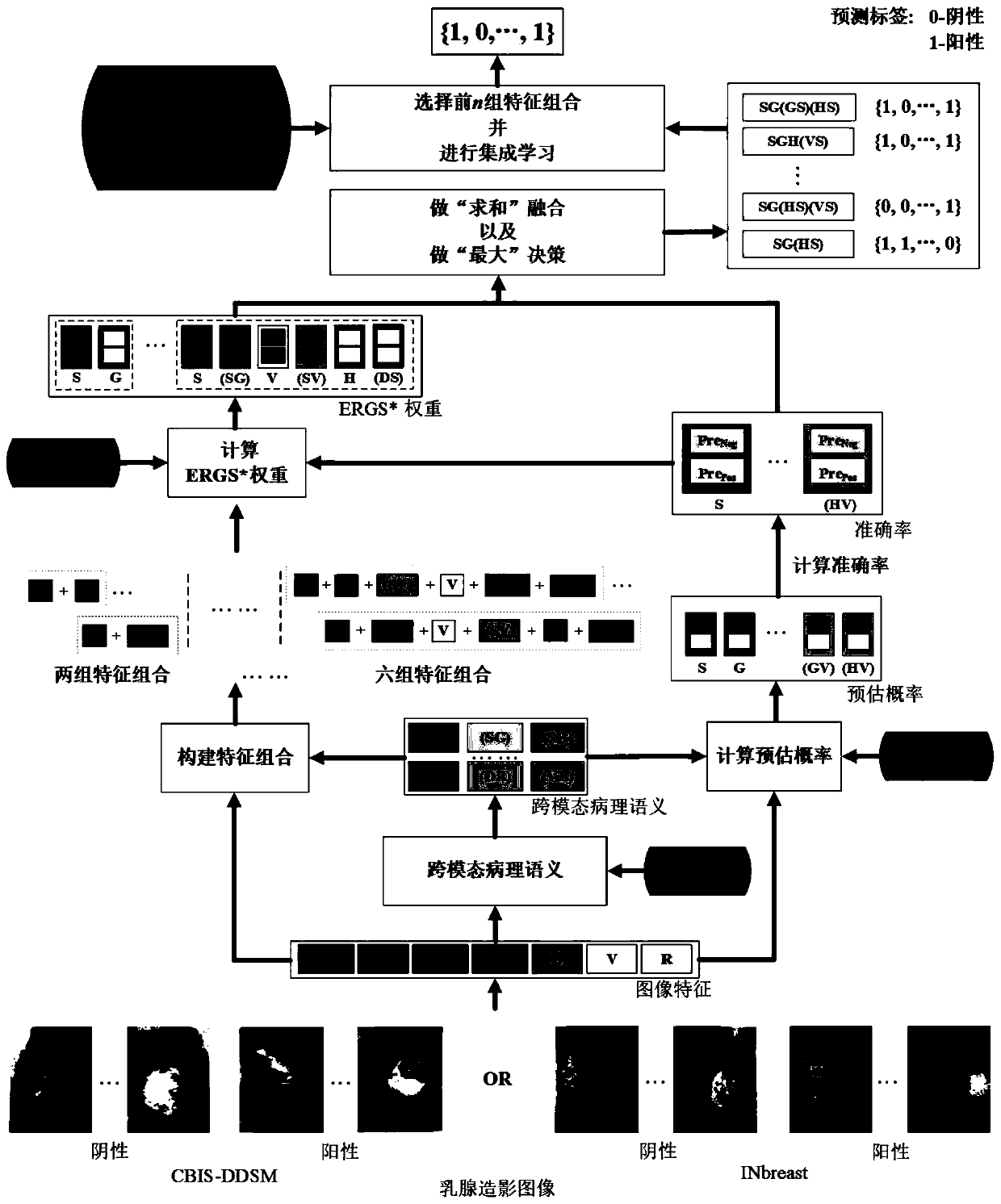

[0083] Multi-stage multi-feature deep fusion refers to two things: 1) Multiple complementary features are used, namely four traditional features and three deep learning features. Because their extraction methods differ and the features are heterogeneous, they describe mammography images from different visual angles. 2) Several simple and effective feature fusion methods are applied: early fusion, mid-fusion, and post-fusion (late fusion). Each stage can contribute to improving the final breast cancer diagnosis performance.
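As a rough illustration of the early and post (late) fusion stages named in [0083], the sketch below uses their conventional meanings: early fusion concatenates per-descriptor feature vectors before classification, and late fusion combines per-descriptor classifier scores afterwards. The patent text shown here does not spell out its exact fusion rules, so the function names, the L2 normalization step, and the weighted-average scoring are all assumptions made for illustration. Mid-fusion is left out because it depends on an intermediate representation that this excerpt does not describe.

```python
from typing import Dict, List, Sequence

Vector = List[float]


def l2_normalize(v: Sequence[float]) -> Vector:
    """Scale a vector to unit L2 norm (assumed preprocessing, not from the patent)."""
    norm = sum(x * x for x in v) ** 0.5
    return [x / norm for x in v] if norm > 0 else list(v)


def early_fusion(features: Dict[str, Sequence[float]], order: Sequence[str]) -> Vector:
    """Early fusion: concatenate normalized descriptor vectors in a fixed order."""
    fused: Vector = []
    for name in order:
        fused.extend(l2_normalize(features[name]))
    return fused


def late_fusion(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Late fusion: weighted average of per-descriptor classifier scores."""
    total = sum(weights[name] for name in scores)
    return sum(weights[name] * scores[name] for name in scores) / total


# Toy vectors standing in for Gist (G), HOG (H) and VGG16 (V) descriptors.
toy_features = {"G": [3.0, 4.0], "H": [0.0, 2.0], "V": [1.0, 0.0, 0.0]}
fused_vector = early_fusion(toy_features, order=["G", "H", "V"])

# Hypothetical per-descriptor malignancy scores from separate classifiers.
toy_scores = {"G": 0.2, "H": 0.6, "V": 0.9}
equal_weights = {"G": 1.0, "H": 1.0, "V": 1.0}
fused_score = late_fusion(toy_scores, equal_weights)
```

Normalizing each descriptor before concatenation keeps a high-dimensional or large-magnitude feature from dominating the fused vector; whether the patented method does this is not stated in the excerpt.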

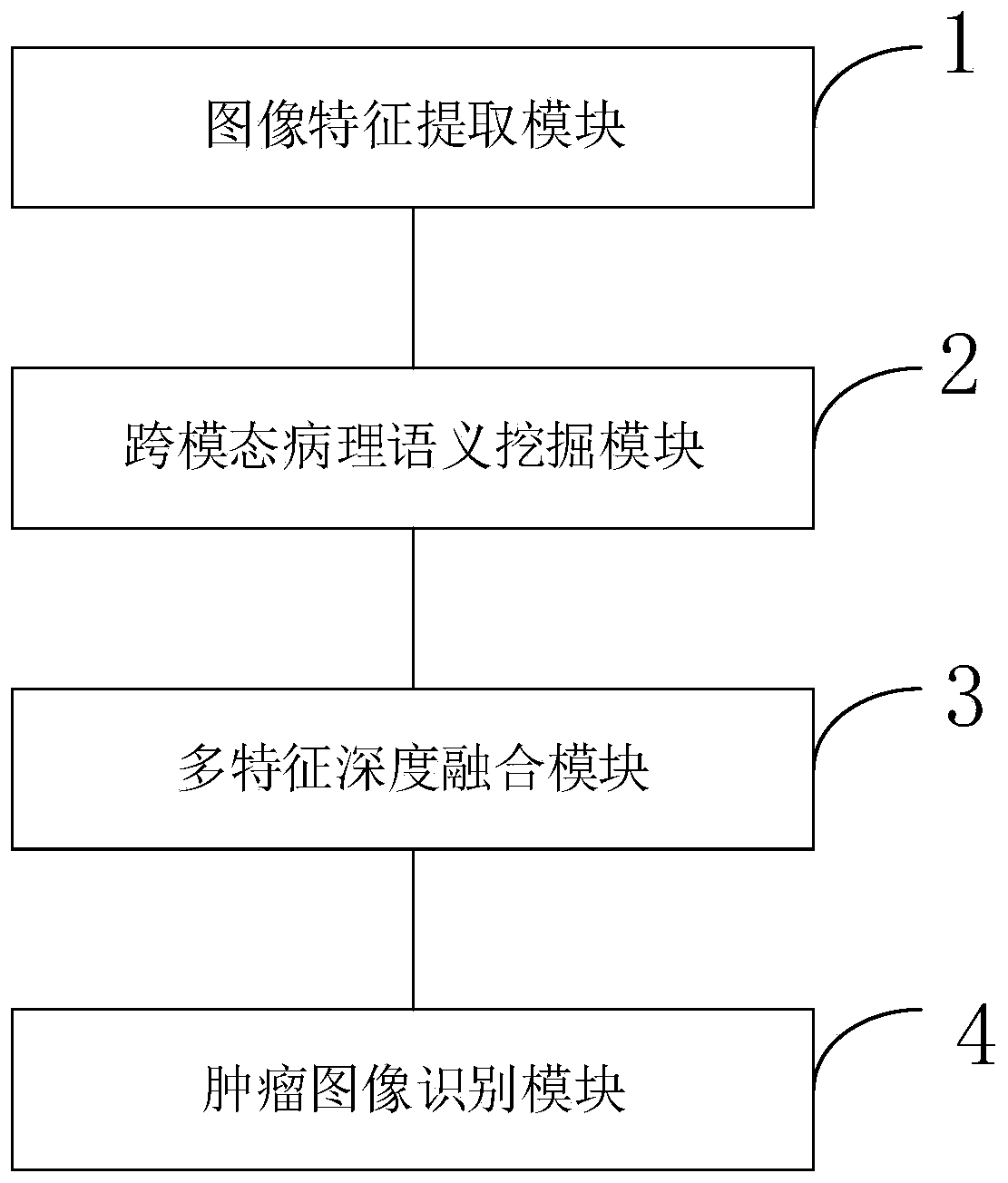

[0084] Based on the idea of multi-stage multi-feature deep fusion, a new DE-Ada* breast cancer diagnosis model is proposed, which includes image feature extraction, cross-modal pathology semantic mining, multi-feature fusion, and breast cancer classification. Gist (G), SIFT (S), HOG (H), LBP (L), VGG16 (V), ResNet (R), and DenseNet (D) features of the images are extracted from the angles of shape, ...
