Hair reconstruction method based on adaptive octree hair convolutional neural network

The method applies a convolutional neural network with adaptive octree technology to the field of 3D reconstruction. It addresses the problems of large 3D convolution modules that are costly to run, wasted storage space, and increased computing overhead.

Embodiment Construction

[0043] The present invention will be further described below in conjunction with specific examples.

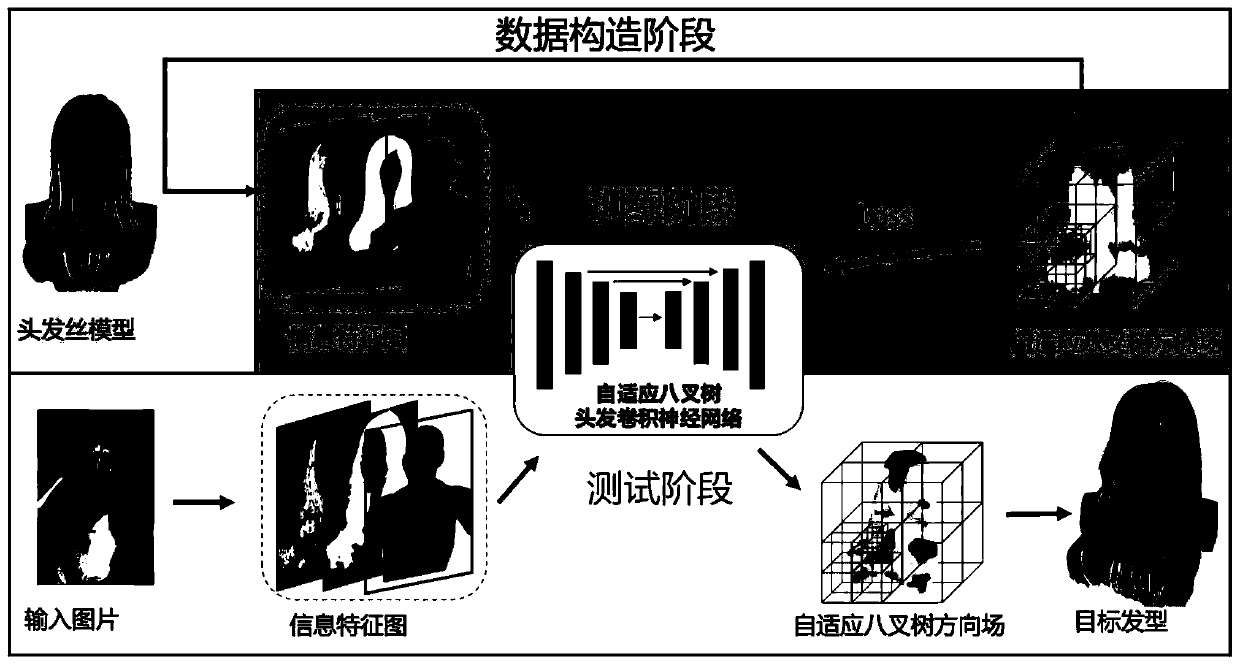

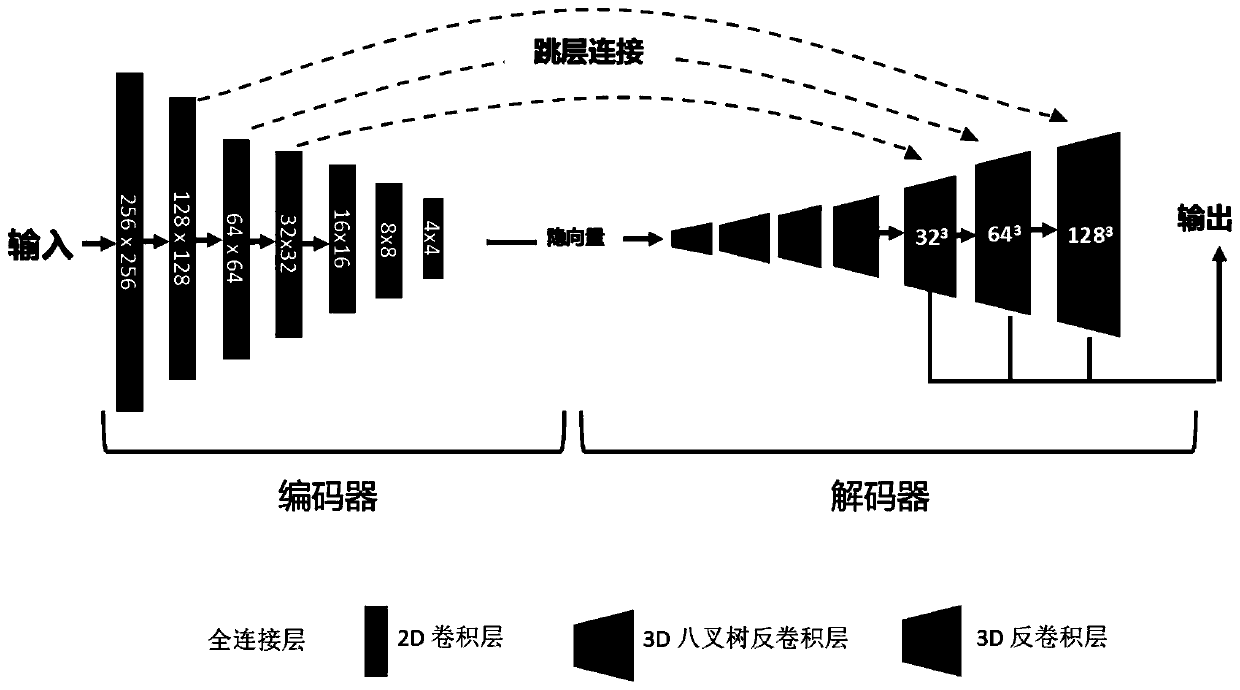

[0044] As shown in Figures 1 to 3, the hair reconstruction method based on the adaptive octree hair convolutional neural network provided in this embodiment specifically includes the following steps:

[0045] 1) Prepare training data. The publicly available USC-HairSalon hair model database serves as the base data and is further processed. The database contains 343 hair models; each model consists of 10,000 hair strands, each strand consists of 100 points, and all models are aligned to the same head template. First, the hair strand directions of each 3D model in the database are rasterized to form a hair strand direction map. Specifically, the direction of the hair strands is mapped to different colors, and the colors are attached to the triangles of the hair mesh. At the same time, prepare an upper-body mesh for the hair mesh, and send i...
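The mapping from strand direction to color described in step 1) can be illustrated with a minimal sketch. The text does not state which color encoding is used, so the sketch assumes a common one: each component of the unit tangent vector is shifted from [-1, 1] into [0, 1] and read as an RGB channel. The function names `strand_directions` and `direction_to_rgb` are hypothetical, and attaching the colors to mesh triangles and rasterizing them is not shown.

```python
import math


def strand_directions(points):
    """Return one unit tangent vector per consecutive pair of strand points."""
    directions = []
    for (x0, y0, z0), (x1, y1, z1) in zip(points, points[1:]):
        dx, dy, dz = x1 - x0, y1 - y0, z1 - z0
        length = math.sqrt(dx * dx + dy * dy + dz * dz)
        if length == 0.0:
            # Degenerate segment: reuse the previous direction if there is one,
            # otherwise fall back to the zero vector.
            directions.append(directions[-1] if directions else (0.0, 0.0, 0.0))
        else:
            directions.append((dx / length, dy / length, dz / length))
    return directions


def direction_to_rgb(direction):
    """Assumed encoding: map each component from [-1, 1] to a channel in [0, 1]."""
    return tuple(0.5 * c + 0.5 for c in direction)


# Toy strand with three points; a strand in the database has 100 points.
strand = [(0.0, 0.0, 0.0), (0.0, -1.0, 0.0), (1.0, -1.0, 0.0)]
colors = [direction_to_rgb(d) for d in strand_directions(strand)]
```

A strand of 100 points yields 99 segment directions under this scheme. With the assumed encoding, the zero vector maps to mid-gray, so a degenerate strand remains distinguishable from any real direction of unit length.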
