Mobile robot positioning method and device based on particle filter and vision assistance

This technology, which combines a mobile robot with particle filtering, is applied in the field of robotics and navigation. It addresses the low efficiency and long failure recovery time that result from distributing particles randomly over a large global map, and it achieves shorter recovery time, higher efficiency, and better positioning accuracy.


Embodiment

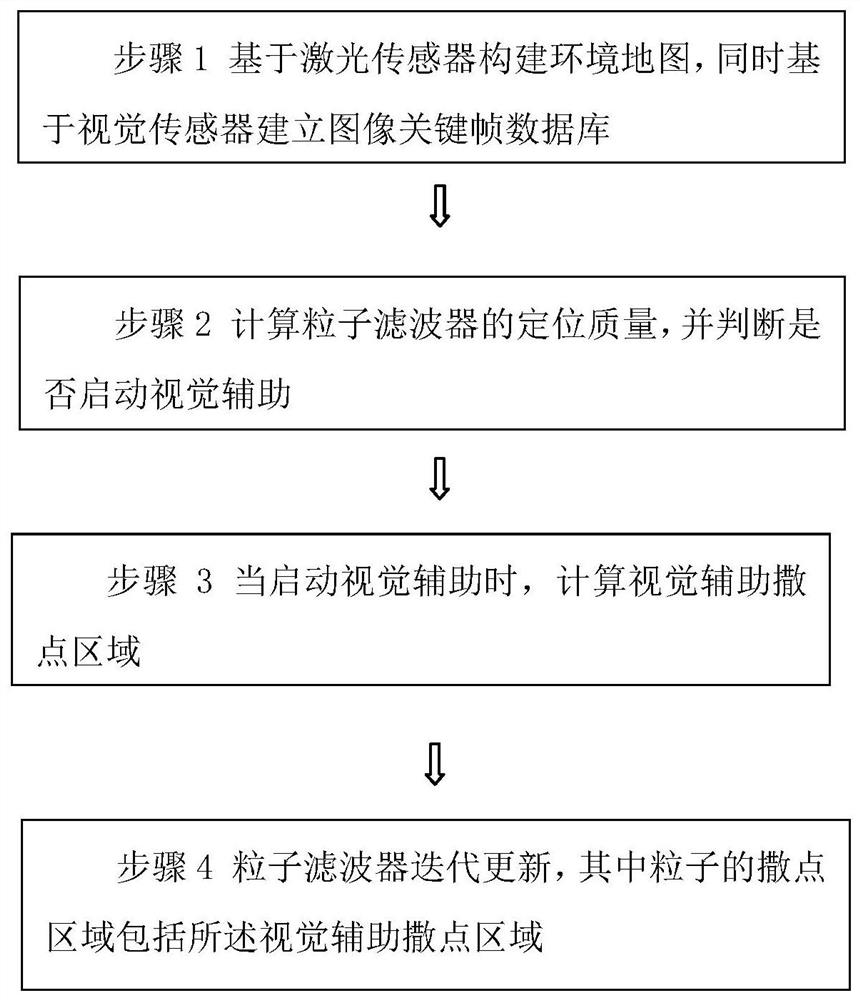

[0039] As shown in Figure 2, a mobile robot localization method based on particle filtering and visual assistance includes:

[0040] Step 1. Build an environmental map based on the laser sensor, and build an image key frame database based on the visual sensor. The image key frame database stores the information of all image key frames, and this information includes at least the global position information and depth information of each image key frame. The depth information may be dense or sparse.
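The key frame database described in Step 1 can be pictured as a simple keyed store. The following is a minimal sketch only, not the patent's implementation: the class names `KeyFrame` and `KeyFrameDatabase`, all field names, the planar `(x, y, yaw)` pose layout, and the pixel-keyed depth dictionary are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class KeyFrame:
    """One image key frame (all field names are hypothetical)."""
    frame_id: int
    # Global position in the map frame; a planar (x, y, yaw) pose is assumed.
    global_position: Tuple[float, float, float]
    # Depth in metres keyed by pixel (u, v); may cover a few or all pixels.
    depth: Dict[Tuple[int, int], float]
    image_size: Tuple[int, int]  # (width, height) in pixels

    def is_dense(self) -> bool:
        """True when every pixel has a depth value, False when sparse."""
        width, height = self.image_size
        return len(self.depth) == width * height


@dataclass
class KeyFrameDatabase:
    """Stores all key frames, indexed by frame id."""
    frames: Dict[int, KeyFrame] = field(default_factory=dict)

    def add(self, key_frame: KeyFrame) -> None:
        self.frames[key_frame.frame_id] = key_frame

    def get(self, frame_id: int) -> Optional[KeyFrame]:
        return self.frames.get(frame_id)


# Usage: a 2x2 image with a single depth sample is a sparse key frame.
db = KeyFrameDatabase()
db.add(KeyFrame(0, (1.0, 2.0, 0.0), {(0, 0): 1.5}, (2, 2)))
sparse = db.get(0)
```

Storing depth per pixel lets the same record hold either dense or sparse depth, which matches the text's statement that both are allowed.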

[0041] The environmental map can be built from laser sensor data as a two-dimensional grid map, using algorithms such as Gmapping, HectorSLAM, or Cartographer.
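To make the two-dimensional grid map concrete, here is a rough sketch of how such a map is often stored and queried once a SLAM algorithm has produced it. It does not call Gmapping, HectorSLAM, or Cartographer. The `GridMap` class, its fields, and the cell values (-1 for unknown, 0 for free, 100 for occupied) are assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

UNKNOWN = -1  # assumed marker for cells that were never observed


@dataclass
class GridMap:
    """A 2D occupancy grid (hypothetical layout, row-major storage)."""
    resolution: float            # metres per cell
    origin: Tuple[float, float]  # world coordinates of the corner of cell (0, 0)
    width: int                   # number of columns
    height: int                  # number of rows
    cells: List[int]             # length width * height

    def world_to_cell(self, x: float, y: float) -> Tuple[int, int]:
        """Convert world coordinates in metres to (column, row) indices."""
        col = math.floor((x - self.origin[0]) / self.resolution)
        row = math.floor((y - self.origin[1]) / self.resolution)
        return col, row

    def occupancy_at(self, x: float, y: float) -> int:
        """Return the cell value at a world position, or UNKNOWN if off-map."""
        col, row = self.world_to_cell(x, y)
        if not (0 <= col < self.width and 0 <= row < self.height):
            return UNKNOWN
        return self.cells[row * self.width + col]


# Usage: a 10x10 map at 5 cm resolution with one occupied cell.
grid = GridMap(0.05, (0.0, 0.0), 10, 10, [0] * 100)
grid.cells[5 * grid.width + 2] = 100  # mark column 2, row 5 as occupied
hit = grid.occupancy_at(0.12, 0.26)
outside = grid.occupancy_at(-1.0, 0.0)
```

A lookup of this kind is what a localization step would typically use to compare a candidate pose against the map.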

[0042] The method for establishing an image key frame database based on a visual sensor at least includes: fusing an IMU with a depth camera or a binocular camera or a monocular camera to obtain global position information and depth information of the image key frame. The usual practice is to use the SLAM al...

