Sparse neural network-oriented system on chip

A system-on-chip technology for neural networks, applicable to fields such as biological neural network models, complex mathematical operations, and instruments. It addresses the difficulty of reusing data in accelerators, low CPU utilization, and mediocre acceleration, and it achieves improved data utilization, higher efficiency, and fewer memory accesses.


Examples

Embodiment 1

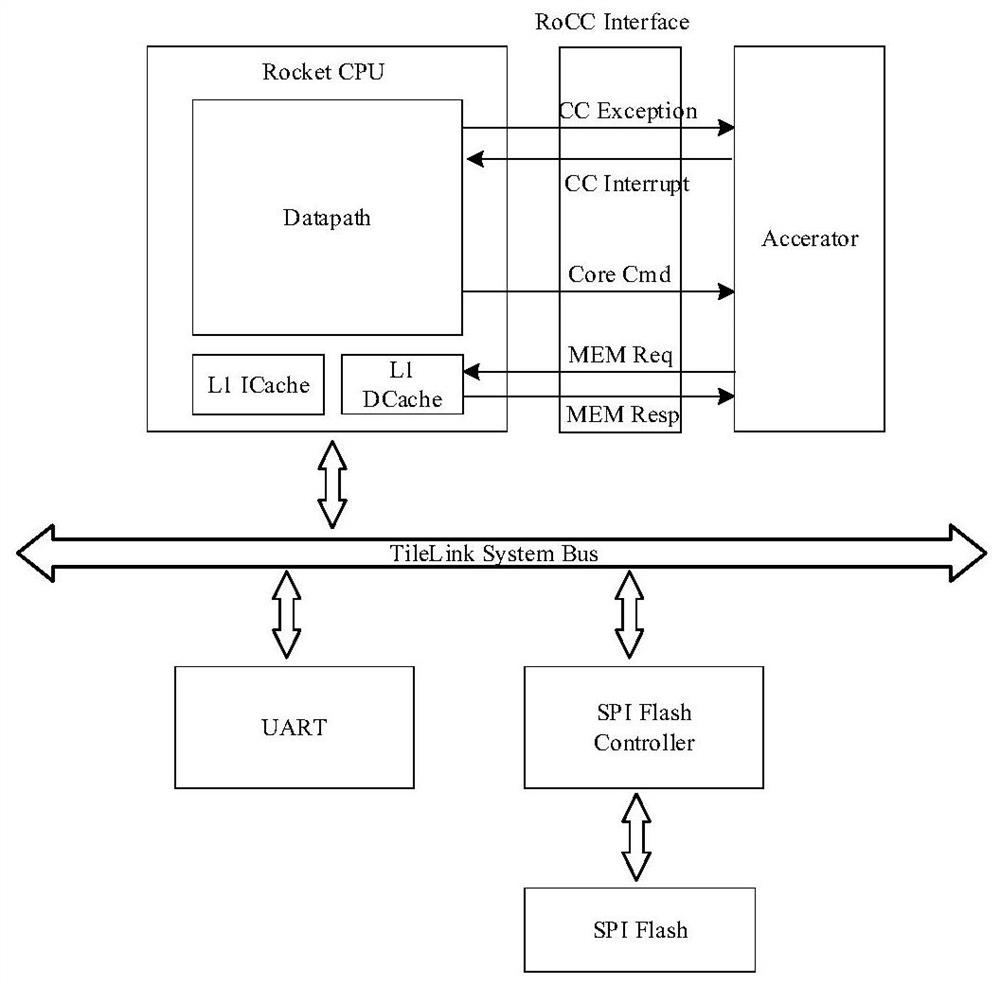

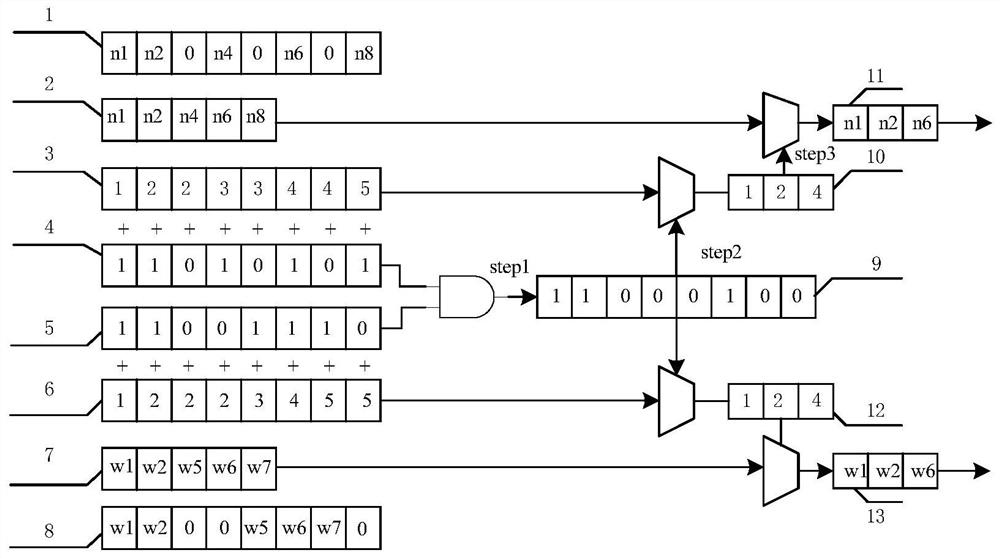

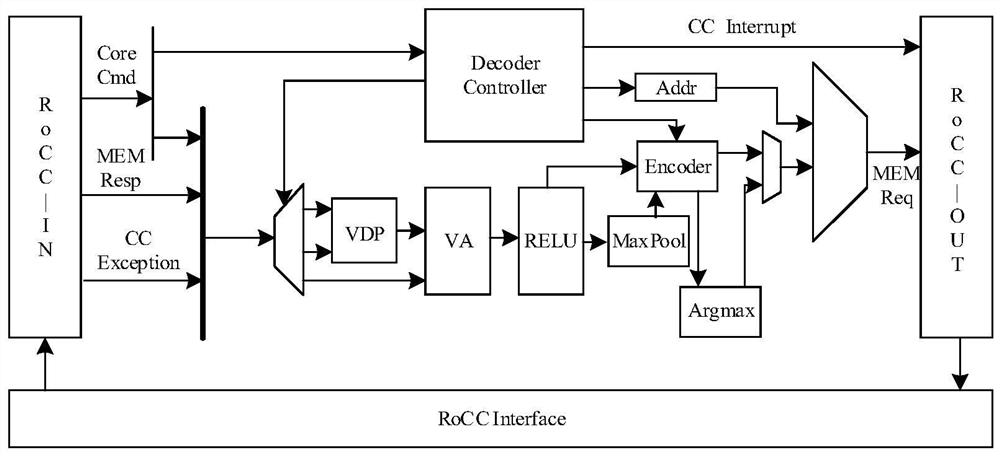

[0049] In this embodiment, a system-on-chip for sparse neural networks includes the open-source Rocket processor as the main processor, a neural network accelerator as a coprocessor, the TileLink system bus, a UART debug interface, an SPI Flash controller, and an off-chip SPI Flash memory. The processor is the master device of the system. The neural network accelerator is the coprocessor of the main processor and connects to it through the RoCC interface. The UART and the SPI Flash controller are slave devices of the system and connect to the main processor through the TileLink bus. The SPI Flash is integrated into the system as off-chip storage through the SPI interface of the SPI Flash controller. The processor is mainly responsible for decomposing the matrix calculation in the neural network algorithm into vector calculation, and then filtering out the non-zero data participating in the calculati...
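The division of work described above can be illustrated with a minimal software sketch. It assumes the matrix calculation is a matrix-vector product that is split into one dot product per weight row, and that "filtering" means keeping only positions where both the neuron and the weight are non-zero. The function names (`compress_pair`, `dense_dot`, `sparse_matvec`) are hypothetical, and `dense_dot` merely stands in for the accelerator; none of this is taken from the patent text.

```python
from typing import List, Sequence, Tuple


def compress_pair(neurons: Sequence[float],
                  weights: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Keep only the positions where both operands are non-zero (assumed filter rule)."""
    kept_n, kept_w = [], []
    for n, w in zip(neurons, weights):
        if n != 0 and w != 0:
            kept_n.append(n)
            kept_w.append(w)
    return kept_n, kept_w


def dense_dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Stand-in for the coprocessor's dense multiply-accumulate (hypothetical)."""
    return sum(x * y for x, y in zip(a, b))


def sparse_matvec(weight_rows: Sequence[Sequence[float]],
                  neurons: Sequence[float]) -> Tuple[List[float], int]:
    """Split a matrix-vector product into per-row vector products.

    Returns the output vector and the number of multiply-accumulates
    actually issued after zero pairs were removed.
    """
    outputs, macs = [], 0
    for row in weight_rows:
        n, w = compress_pair(neurons, row)  # the CPU-side filtering step
        macs += len(n)
        outputs.append(dense_dot(n, w))     # the accelerator-side step
    return outputs, macs


W = [[0, 2, 0, 1],
     [3, 0, 0, 0],
     [0, 0, 0, 0]]
x = [1, 0, 4, 5]
y, macs = sparse_matvec(W, x)
print(y, macs)
```

In this toy input a dense implementation would issue 12 multiply-accumulates, while the filtered version issues only those whose operands are both non-zero.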

Embodiment 2

[0051] As shown in Figure 1, this embodiment is a heterogeneous system-on-chip built on an open-source RISC-V processor. The system integrates a Rocket CPU, a coprocessor accelerator, an SPI Flash controller, a UART, and an off-chip SPI Flash. The Rocket CPU serves as the main processor of the system, and the accelerator that executes the neural network algorithm serves as its coprocessor. The main processor and the coprocessor are tightly coupled through the RoCC interface and share the L1 DCache. The SPI Flash is an off-chip program memory attached to the SPI Flash controller, and it stores the binary file of the program. After the system is powered on and reset, the CPU reads a block of instructions from the base address of the SPI Flash into the L1 ICache. Then, the CPU executes the program to filter and reorganize the non-zero neurons and non-zero weights involved in the calculation in the sparse neural network, and convert the sparse vector in the spa...
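One way to picture the "filter and reorganize" step is as packing the surviving neuron/weight pairs into fixed-width dense chunks that a vector unit could consume. The sketch below is an assumption-laden illustration: the lane width of 4, the zero padding of the last chunk, and the name `pack_dense_chunks` are all hypothetical and do not come from the patent.

```python
from typing import List, Sequence, Tuple

Chunk = Tuple[List[float], List[float]]


def pack_dense_chunks(neurons: Sequence[float],
                      weights: Sequence[float],
                      lanes: int = 4) -> List[Chunk]:
    """Filter zero pairs, then regroup the rest into dense chunks of `lanes` elements.

    `lanes` is an assumed accelerator vector width; the final chunk is
    padded with zeros so every chunk has the same length.
    """
    # Filter: keep a pair only if both the neuron and the weight are non-zero.
    pairs = [(n, w) for n, w in zip(neurons, weights) if n != 0 and w != 0]

    chunks: List[Chunk] = []
    for start in range(0, len(pairs), lanes):
        group = pairs[start:start + lanes]
        group += [(0, 0)] * (lanes - len(group))  # pad the tail chunk
        chunks.append(([n for n, _ in group], [w for _, w in group]))
    return chunks


neurons = [1, 0, 2, 3, 0, 4, 5, 6]
weights = [1, 1, 0, 2, 3, 1, 1, 1]
chunks = pack_dense_chunks(neurons, weights, lanes=4)

# The packed chunks give the same dot product as the original sparse vectors.
packed_total = sum(n * w for ns, ws in chunks for n, w in zip(ns, ws))
direct_total = sum(n * w for n, w in zip(neurons, weights))
print(chunks, packed_total, direct_total)
```

Padding with zeros keeps the result unchanged because a zero pair contributes nothing to the accumulated sum.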
