Static gesture recognition method based on watershed transformation

A static gesture recognition technique based on the watershed transform, applied in the fields of image processing and human-computer interaction. It addresses segmentation errors and over-segmentation, both of which degrade the accuracy of gesture segmentation, and achieves good adaptability and high accuracy.

Examples

Specific Embodiment 1

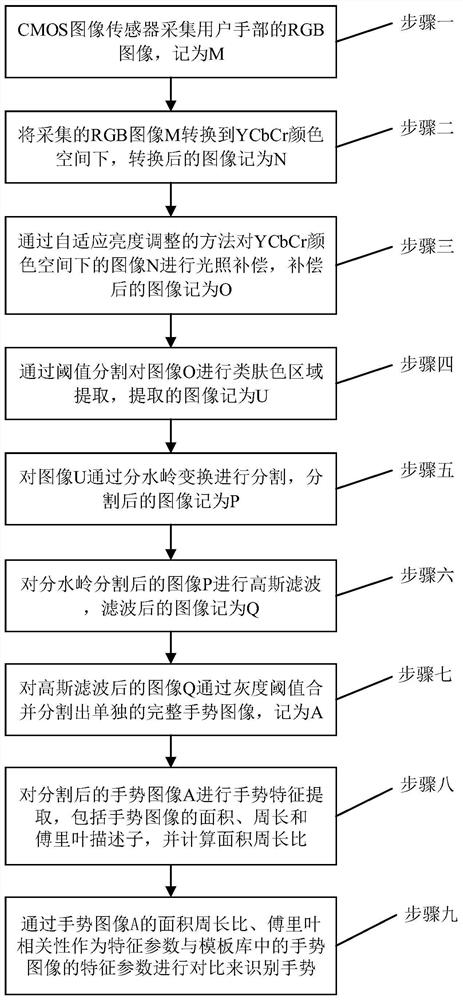

[0083] The present invention proposes a static gesture recognition method based on the watershed transform. As shown in Figure 1, the method proceeds as follows:

[0084] Step 1: a CMOS image sensor captures an RGB image of the user's hand, denoted M;

[0085] Step 2: the captured RGB image M is converted to the YCbCr color space, and the converted image is denoted N;

[0086] Step 3: illumination compensation is applied to the image N in the YCbCr color space using an adaptive brightness adjustment method, and the compensated image is denoted O;

[0087] Step 4: the skin-like region of the image O is extracted by threshold segmentation, and the extracted image is denoted U;

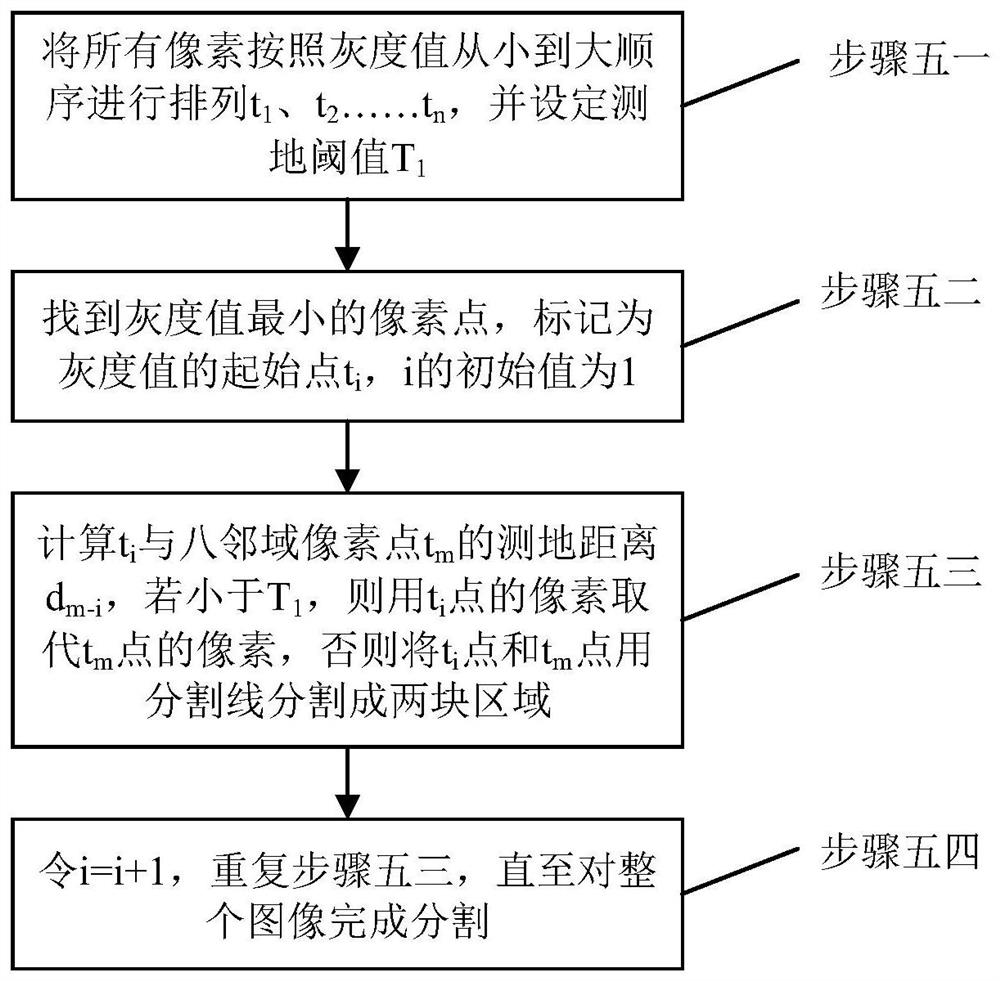

[0088] Step 5: the image U is segmented by the watershed transform, and the segmented image is denoted P;

[0089] Step 6: the watershed-segmented image P is Gaussian-filtered using two Gaussian filter kernels, and the filtered image is denoted Q;

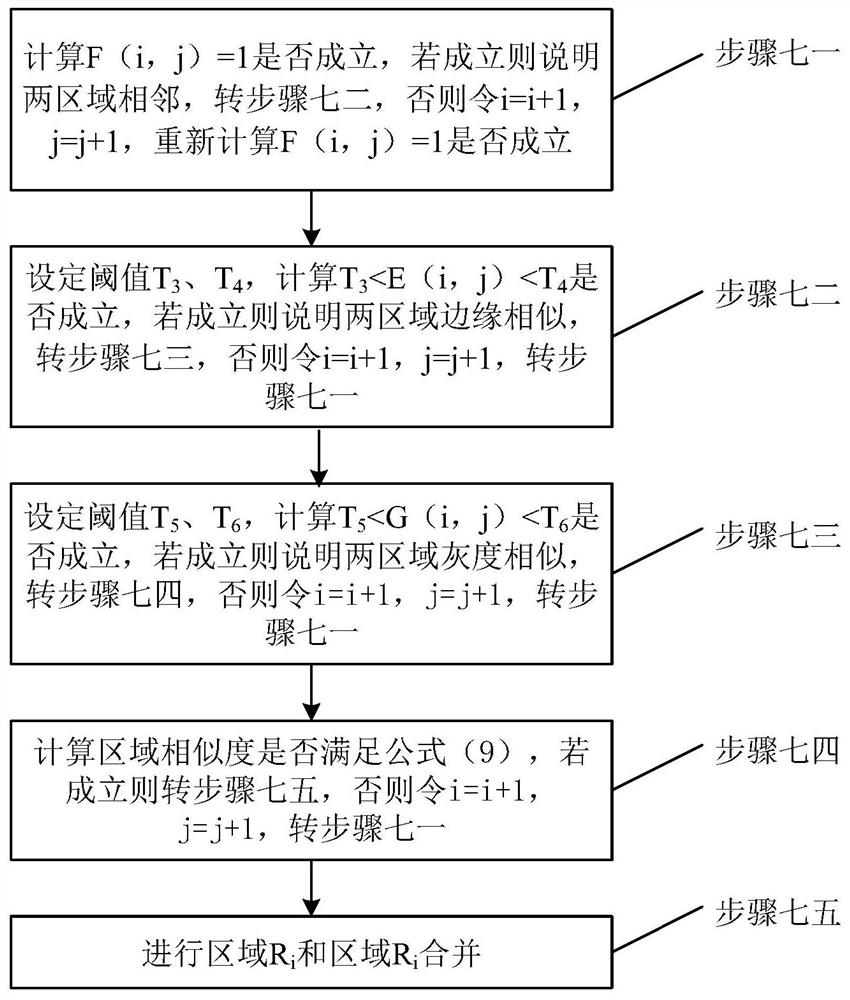

[0090] Ste...
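Step 5 names the watershed transform, but this excerpt does not detail how it is applied. As a rough illustration only, the following sketch shows one common variant, a marker-based watershed computed by priority flooding. It is not taken from the patent: the function name `flood_watershed`, the use of seed markers, the 4-connectivity, and the small height map are all assumptions made for the example. Seeding the flood from a few markers is one general way to limit over-segmentation, since each seed grows into exactly one region.

```python
import heapq


def flood_watershed(height, markers):
    """Marker-based watershed by priority flooding (illustrative sketch).

    height  : 2D list of numbers, e.g. a gradient-magnitude map (assumed input).
    markers : 2D list of ints, 0 = unlabeled pixel, >0 = seed label.
    Returns a 2D list in which every pixel connected to a seed carries a label.
    """
    rows, cols = len(height), len(height[0])
    labels = [row[:] for row in markers]  # copy so the caller's markers stay intact
    heap = []
    counter = 0  # tie-breaker: equal heights are expanded in insertion order

    # Start the flood from every seed pixel.
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] > 0:
                heapq.heappush(heap, (height[r][c], counter, r, c))
                counter += 1

    # Always expand the lowest frontier pixel first; each unlabeled
    # 4-neighbor inherits the label of the pixel that reaches it.
    while heap:
        _, _, r, c = heapq.heappop(heap)
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and labels[nr][nc] == 0:
                labels[nr][nc] = labels[r][c]
                heapq.heappush(heap, (height[nr][nc], counter, nr, nc))
                counter += 1
    return labels


if __name__ == "__main__":
    # Two low basins separated by a high ridge in the middle column.
    height = [
        [1, 2, 9, 2, 1],
        [0, 2, 9, 2, 0],
        [1, 2, 9, 2, 1],
    ]
    markers = [
        [0, 0, 0, 0, 0],
        [1, 0, 0, 0, 2],
        [0, 0, 0, 0, 0],
    ]
    for row in flood_watershed(height, markers):
        print(row)
```

In this simplified variant no explicit watershed line is drawn; a ridge pixel is assigned to whichever basin reaches it first, so its label depends on the tie-breaking order.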

Specific Embodiment 2

[0094] Building on Specific Embodiment 1, in Step 1 of the static gesture recognition method based on the watershed transform, when the CMOS image sensor captures the image of the user's hand, the center of the subject's palm or the center of the back of the hand must face the camera so that a complete hand image can be captured.

Specific Embodiment 3

[0096] Building on Specific Embodiment 1, in Step 2 of the static gesture recognition method based on the watershed transform, the captured RGB image is converted to the YCbCr color space. Skin color clusters well in the YCbCr color space, which allows skin-like regions to be segmented more reliably. The color space conversion is performed according to formula (1):

[0097]

[0098] where Y represents luminance, and Cb and Cr represent the blue-difference and red-difference chroma components, respectively.
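Formula (1) itself is not reproduced in this text. For orientation, the sketch below uses one widely used RGB to YCbCr conversion, assuming the full-range ITU-R BT.601 coefficients as used in JFIF/JPEG; the patent may use a different variant (for example the studio-range form with a luma offset of 16). The function names are hypothetical, and the Cb/Cr box used for the skin test holds placeholder values of the kind often quoted in skin-detection work, not the patent's thresholds.

```python
def rgb_to_ycbcr(r, g, b):
    """Convert one 8-bit RGB pixel to full-range YCbCr (BT.601 / JFIF coefficients)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def is_skin_like(cb, cr, cb_range=(77, 127), cr_range=(133, 173)):
    """Box threshold in the Cb-Cr plane; the default ranges are assumed placeholders."""
    return cb_range[0] <= cb <= cb_range[1] and cr_range[0] <= cr <= cr_range[1]


def skin_mask(image):
    """Map an image (list of rows of (r, g, b) tuples) to a 0/1 skin-like mask."""
    mask = []
    for row in image:
        out = []
        for r, g, b in row:
            _, cb, cr = rgb_to_ycbcr(r, g, b)  # luminance Y is ignored by the test
            out.append(1 if is_skin_like(cb, cr) else 0)
        mask.append(out)
    return mask


if __name__ == "__main__":
    sample = [
        [(224, 172, 105), (0, 0, 255)],
        [(255, 255, 255), (0, 255, 0)],
    ]
    for row in skin_mask(sample):
        print(row)
```

Because the test looks only at Cb and Cr, it is less sensitive to overall brightness than a threshold applied directly to RGB values, which is the practical benefit of the clustering property described in [0096].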
