Method for extracting three-dimensional coordinate values from image in robot scene

Relating to three-dimensional coordinates and robot technology, and applied in image enhancement, image analysis, image data processing and related fields, the method addresses problems such as shaking, insufficient value accuracy, and low efficiency.


Examples

Embodiment 1

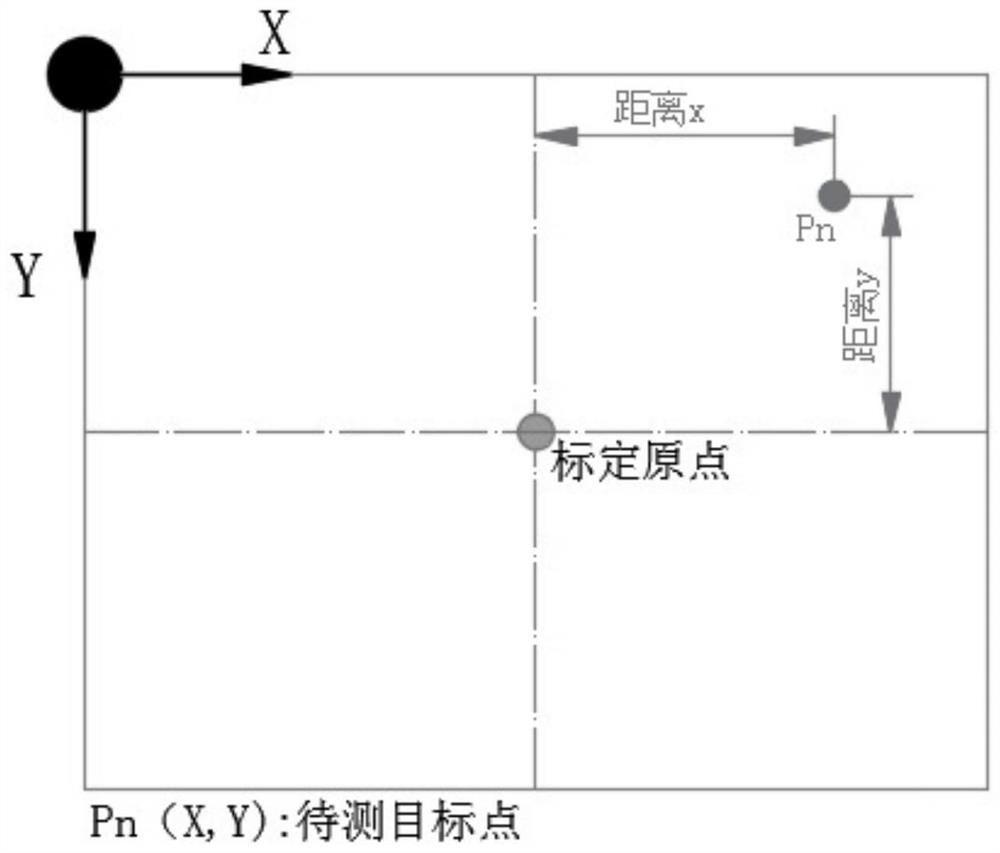

[0044] Referring to Figures 1-3, a method for extracting three-dimensional coordinate values from an image in a robot scene comprises the following steps:

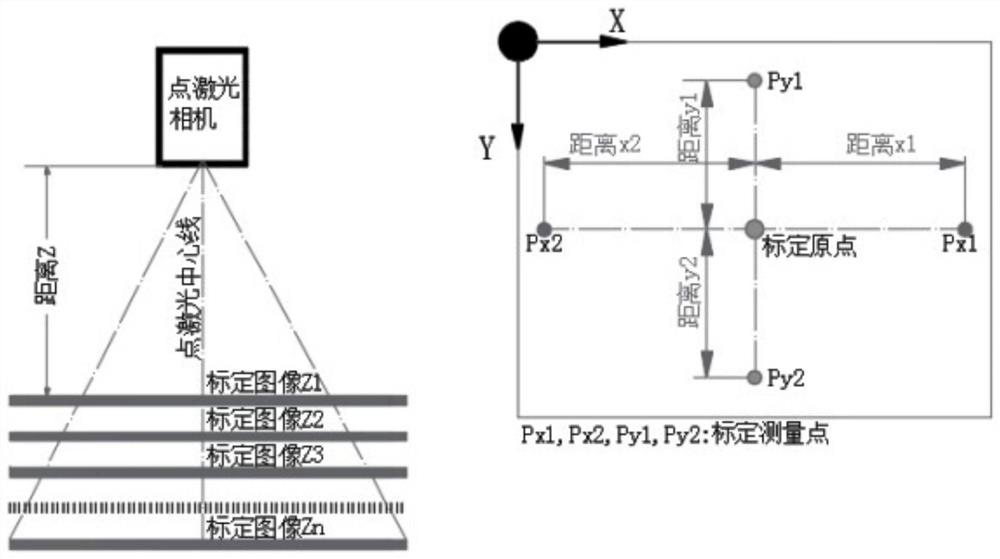

[0045] S1. Layered modeling is performed on XY-plane images captured at different heights (Z) between the camera and the target surface. The Z-direction sampling interval is made finer as the application requires; generally, ten levels are sampled to form an image interpolation model. The interpolation model's data structure mainly includes the distance Z between the camera lens and the target surface, the pixel XY position of the origin on the image, the X-direction and Y-direction proportional coefficients between pixels and physical length, etc.;

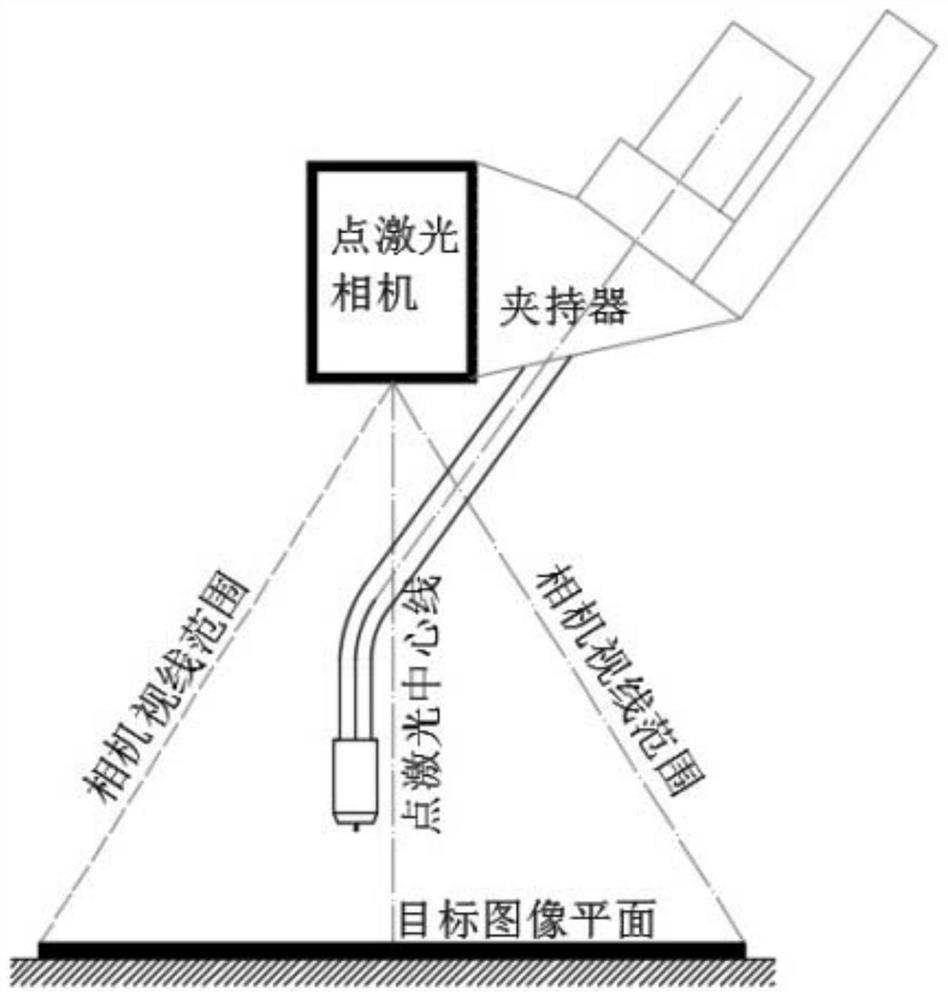

[0046] S2. The camera and the point laser are fixedly installed at a suitable position at the end of the robot, and the direction of the point laser is kept parallel to the line of sight of the camera.

[0047] The camera and poi...
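The layered interpolation model of step S1 can be illustrated with a minimal sketch. It is not the patent's implementation: the names `Layer`, `interpolate_layer` and `pixel_to_xyz` are hypothetical, the proportional coefficients are assumed to be physical length per pixel, and linear interpolation between neighbouring layers is an assumption, since the visible text does not name the interpolation scheme. The distance Z is taken as an input from some range measurement.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass(frozen=True)
class Layer:
    """One calibrated XY-plane layer (field names are illustrative)."""
    z: float          # distance from camera lens to target surface
    origin_u: float   # pixel X position of the origin on the image
    origin_v: float   # pixel Y position of the origin on the image
    scale_x: float    # assumed: physical length per pixel along X
    scale_y: float    # assumed: physical length per pixel along Y


def interpolate_layer(layers, z):
    """Linearly interpolate layer parameters at distance z.

    `layers` must be sorted by increasing z; linear interpolation
    is an assumption, not something the source specifies.
    """
    zs = [layer.z for layer in layers]
    if not zs[0] <= z <= zs[-1]:
        raise ValueError("z is outside the calibrated range")
    i = bisect_left(zs, z)          # first layer with layer.z >= z
    if zs[i] == z:
        return layers[i]            # exact hit on a sampled layer
    lo, hi = layers[i - 1], layers[i]
    t = (z - lo.z) / (hi.z - lo.z)  # fractional position between layers

    def lerp(a, b):
        return a + t * (b - a)

    return Layer(
        z=z,
        origin_u=lerp(lo.origin_u, hi.origin_u),
        origin_v=lerp(lo.origin_v, hi.origin_v),
        scale_x=lerp(lo.scale_x, hi.scale_x),
        scale_y=lerp(lo.scale_y, hi.scale_y),
    )


def pixel_to_xyz(layers, u, v, z):
    """Convert pixel (u, v) at measured distance z to physical (X, Y, Z)."""
    m = interpolate_layer(layers, z)
    x = (u - m.origin_u) * m.scale_x
    y = (v - m.origin_v) * m.scale_y
    return (x, y, z)


# Two made-up layers; a real model would hold about ten, per step S1.
example_layers = [
    Layer(z=100.0, origin_u=320.0, origin_v=240.0, scale_x=0.1, scale_y=0.1),
    Layer(z=200.0, origin_u=320.0, origin_v=240.0, scale_x=0.2, scale_y=0.2),
]
example_point = pixel_to_xyz(example_layers, u=420.0, v=240.0, z=150.0)
```

Storing one record per sampled height keeps the lookup cheap: a binary search finds the two bracketing layers, and only their parameters are blended. Sampling more densely in Z, as S1 suggests, reduces the error introduced by whichever interpolation scheme is used.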

Embodiment 2

[0074] Referring to Figure 4 and Figure 6, a camera docking bracket auxiliary device for extracting information from a robot scene includes a support plate mechanism 1. The support plate mechanism 1 includes a base 111; a stacked plate 112 is connected to the top of the base 111, and a support frame 113 passes through the side wall of the stacked plate 112. A movable ring 2 is connected through the top of the support plate mechanism 1, and a tank pushing mechanism 3 is slidably connected to the inner wall of the movable ring 2. The tank pushing mechanism 3 includes an expansion tube 311; a superimposed net 312 runs through the axis of the inner side wall of the expansion tube 311, a central column 315 runs through the axis of the inner side wall of the superimposed net 312, balls 314 run through the side walls of the expansion tube 311, and support rods 313 are connected through the top and side walls of the balls 314, the bottom end o...

Embodiment 3

[0079] Referring to Figures 4-5, a camera docking bracket auxiliary device for extracting information from a robot scene comprises a support plate mechanism 1, a movable ring 2 connected through the top of the support plate mechanism 1, and a tank pushing mechanism 3 slidably connected to the inner wall of the movable ring 2. The tank pushing mechanism 3 includes an expansion tube 311; a superimposed net 312 is connected along the axis of the inner side wall of the expansion tube 311, and a central column 315 is connected along the axis of the inner side wall of the superimposed net 312. Balls 314 are connected between the side walls of the expansion tube 311, and support rods 313 are connected to the top and side walls of the balls 314. A connecting ring 9 is connected to the bottom end of the side wall of the movable ring 2, and a movable disc 6 is connected to the middle of the inner side wall of the movable ring 2. A movable rod 7 is connected to the side wall of the movable disc 6, an...
