Cache processing method and protocol processor cache control unit

A cache processing method and a protocol processor cache control unit, applied in the computer field. The technology addresses the problems of large system delay and low processing efficiency; its stated effects are improved efficiency, improved throughput, and elimination of the Cache access delay problem.



Examples

Embodiment 1

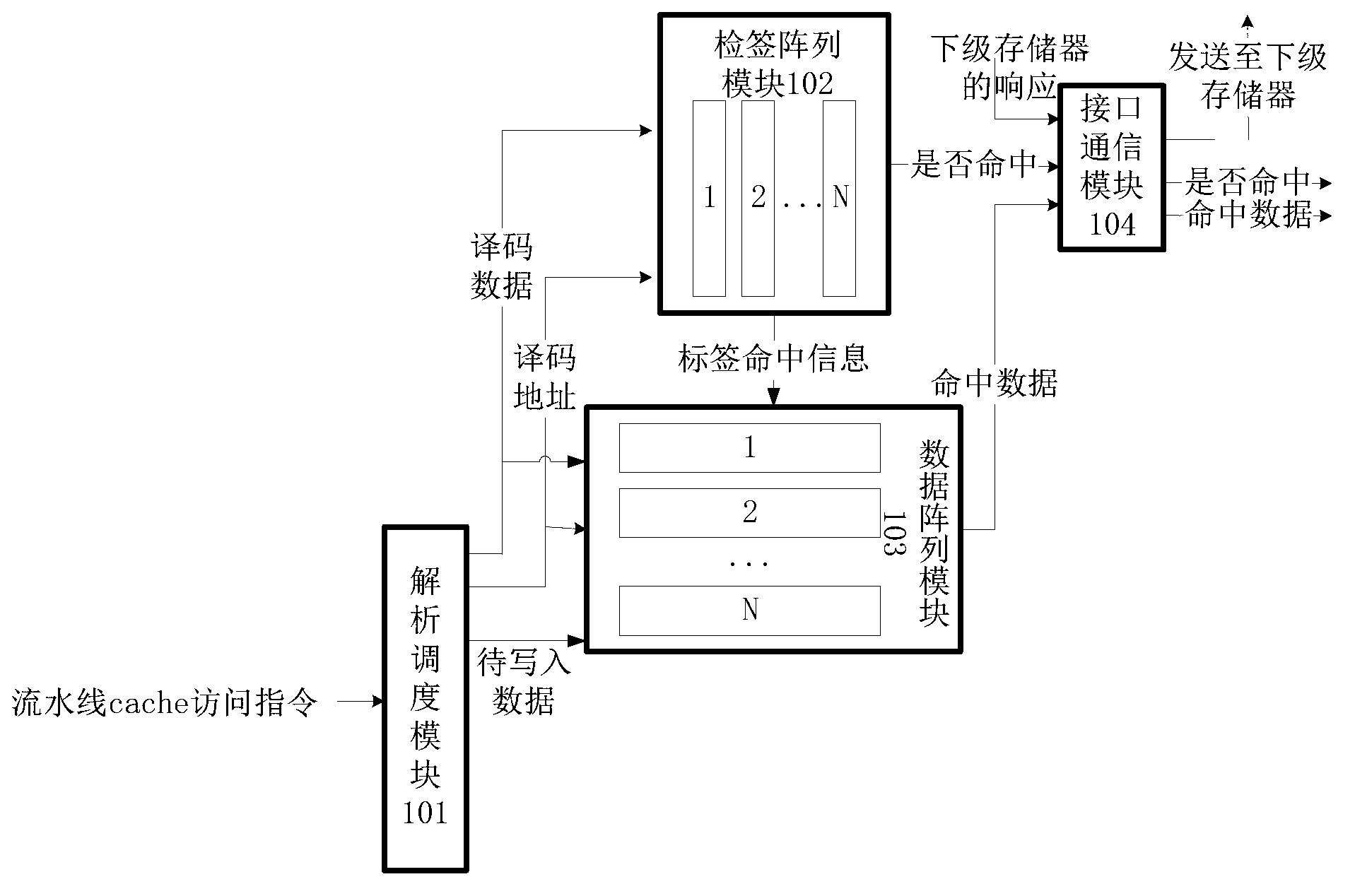

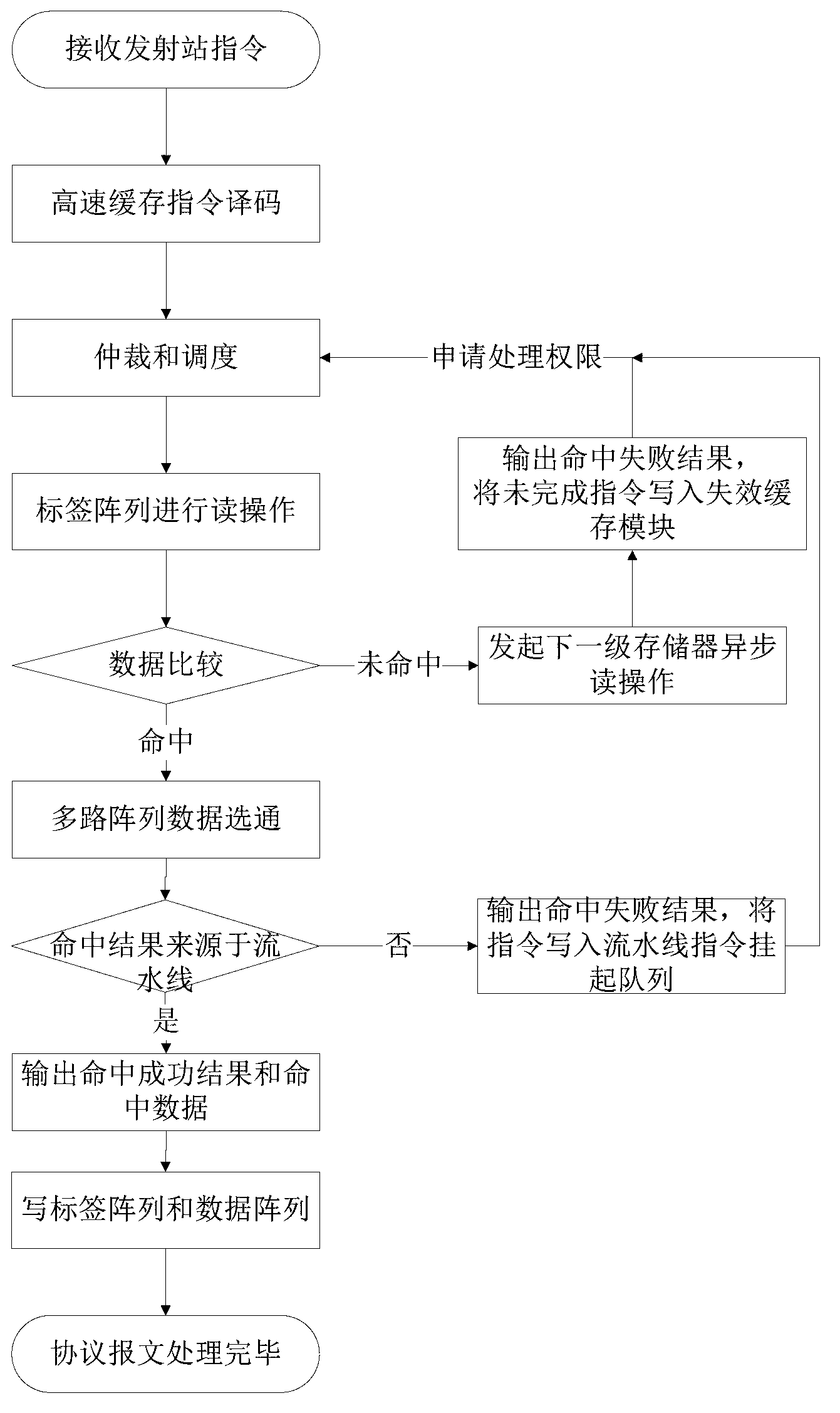

[0053] As shown in Figure 1, the protocol processor cache control unit includes a parsing scheduling module 101, a tag array module 102, a data array module 103, and an interface communication module 104. The tag array module 102 and the data array module 103 have the same number of channels, N, where N is a positive integer; for example, N is 8.
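The pairing of tag array and data array described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it reads the N "channels" as the ways of a set-associative cache, and the names `TagArray`, `DataArray`, and `NUM_SETS`, along with the lookup logic, are hypothetical and do not come from the patent text.

```python
from dataclasses import dataclass
from typing import List, Optional

N_WAYS = 8    # example value of N given in the text
NUM_SETS = 4  # assumed for illustration; not stated in the text


@dataclass
class TagEntry:
    valid: bool = False
    tag: int = 0


class TagArray:
    """Hypothetical stand-in for tag array module 102."""

    def __init__(self, num_sets: int = NUM_SETS, n_ways: int = N_WAYS) -> None:
        self.entries: List[List[TagEntry]] = [
            [TagEntry() for _ in range(n_ways)] for _ in range(num_sets)
        ]

    def lookup(self, index: int, tag: int) -> Optional[int]:
        # Return the matching way on a hit, or None on a miss.
        for way, entry in enumerate(self.entries[index]):
            if entry.valid and entry.tag == tag:
                return way
        return None

    def install(self, index: int, way: int, tag: int) -> None:
        self.entries[index][way] = TagEntry(valid=True, tag=tag)


class DataArray:
    """Hypothetical stand-in for data array module 103, with the same N ways."""

    def __init__(self, num_sets: int = NUM_SETS, n_ways: int = N_WAYS) -> None:
        self.lines: List[List[Optional[bytes]]] = [
            [None] * n_ways for _ in range(num_sets)
        ]

    def read(self, index: int, way: int) -> Optional[bytes]:
        return self.lines[index][way]

    def write(self, index: int, way: int, data: bytes) -> None:
        self.lines[index][way] = data
```

Because both arrays share the same set and way geometry, a way number returned by the tag lookup can be used directly to address the data array.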

[0054] The parsing scheduling module 101 is used to receive instructions from different sources and, after scheduling and arbitrating among them, to grant processing authority to one or more of the instructions. When the instruction granted processing authority is a pipeline cache access instruction, the module parses it, sends the decoded data to the tag array module 102, and sends the decoded data and the decoded address to the tag array module 102 and the data array module 103; it is also used to send the data to be written when the pipeline cache access instruction is a wr...
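The arbitration step can be illustrated with a small Python sketch. The text does not state which arbitration policy module 101 uses, so the fixed-priority scheme, the source names in `PRIORITY`, and the `arbitrate` function are all assumptions made for illustration; the sketch also grants processing authority to only one instruction per call.

```python
from collections import deque
from typing import Deque, Dict, Optional, Tuple

# Hypothetical source names and priority order; the patent text does not
# specify either.
PRIORITY = ("backfill", "pipeline")


def arbitrate(queues: Dict[str, Deque[str]]) -> Optional[Tuple[str, str]]:
    """Grant processing authority to one pending instruction.

    Returns (source, instruction) for the highest-priority non-empty
    source, or None when nothing is pending.
    """
    for source in PRIORITY:
        queue = queues.get(source)
        if queue:
            return source, queue.popleft()
    return None
```

A round-robin or age-based policy would fit the same interface; fixed priority is used here only because it is the shortest to write down.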

Embodiment 2

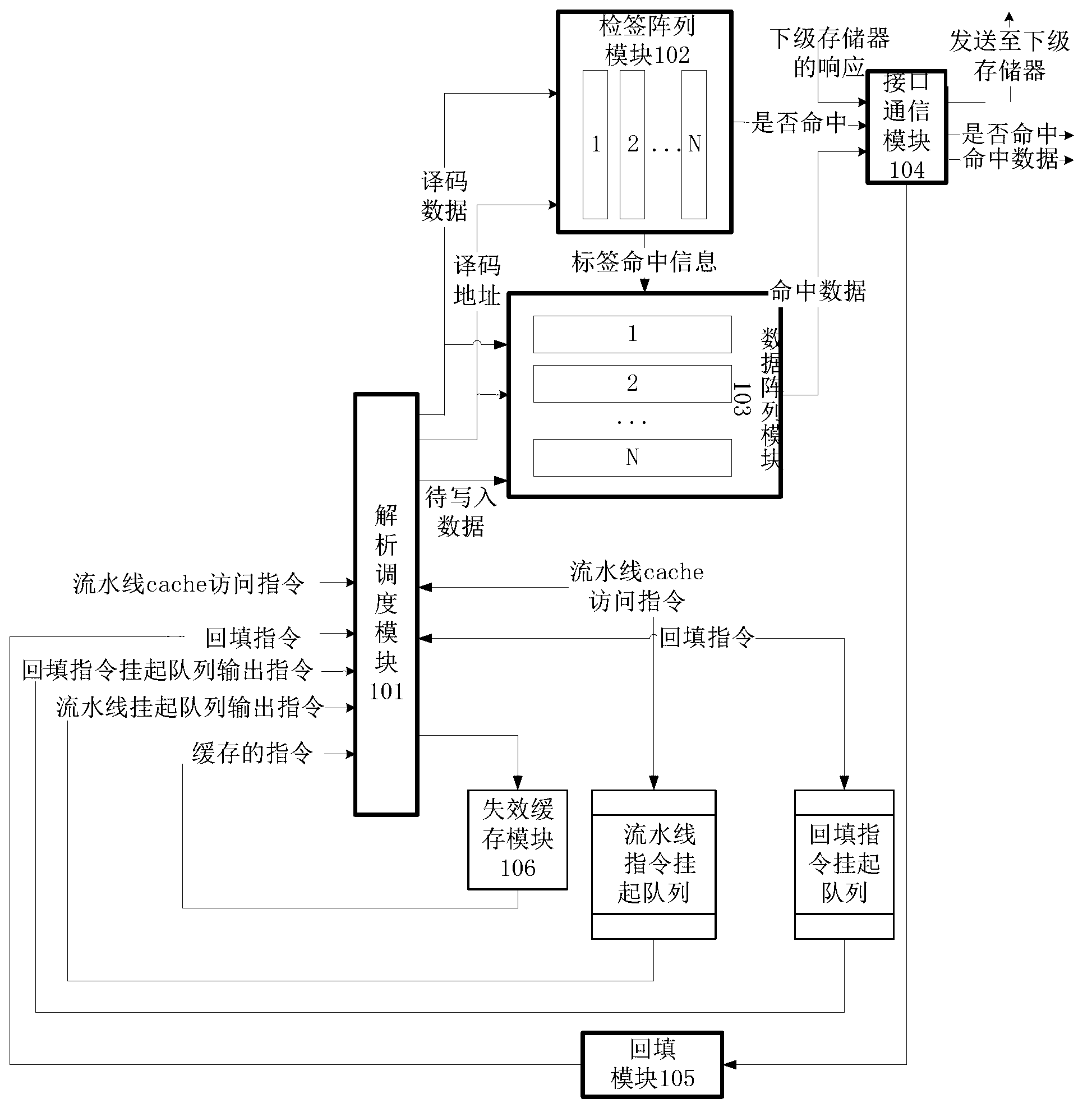

[0064] As shown in Figure 2, the protocol processor cache control unit includes a backfill module 105 and an invalidation cache module 106 in addition to the parsing scheduling module 101, tag array module 102, data array module 103, and interface communication module 104 described in Embodiment 1.

[0065] During backfill processing:

[0066] The interface communication module 104 is also configured to notify the backfill module 105 after receiving the data response from the lower-level storage.

[0067] The backfill module 105 is used for initiating a cache backfill instruction to the parsing scheduling module 101 .

[0068] The parsing scheduling module 101 is also used to execute the backfill instruction and perform the cache backfill when the backfill instruction is granted processing authority, and to place the backfill instruction into the backfill instruction suspension queue when it is not granted processing authority. After assig...
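The two outcomes for a backfill instruction described in paragraph [0068] can be sketched as follows. This is a minimal illustration, not the patented implementation: the class `BackfillScheduler`, its method names, and the dictionary used in place of the tag and data arrays are hypothetical. What happens to suspended instructions afterwards is cut off in the text, so the sketch deliberately stops at queueing them.

```python
from collections import deque
from typing import Deque, Dict, Tuple


class BackfillScheduler:
    """Hypothetical model of how module 101 handles a backfill instruction."""

    def __init__(self) -> None:
        # Assumed stand-in for the tag and data arrays: address -> line data.
        self.cache: Dict[int, bytes] = {}
        # Backfill instruction suspension queue named in the text.
        self.suspended: Deque[Tuple[int, bytes]] = deque()

    def handle_backfill(self, address: int, data: bytes, granted: bool) -> bool:
        """Execute the backfill if authority was granted, else suspend it."""
        if granted:
            self.cache[address] = data
            return True
        self.suspended.append((address, data))
        return False
```

For example, `BackfillScheduler().handle_backfill(0x20, b"line", granted=False)` returns `False` and leaves the instruction in `suspended` without touching the cache contents.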

