Voice separating method based on auditory center system under multi-sound-source environment

A speech separation technology based on the central auditory system, applied in speech analysis and instruments, which addresses problems such as complex processing stages and the difficulty of computer processing.


Embodiment Construction

[0030] A non-limiting embodiment is given below in conjunction with the accompanying drawings to further illustrate the present invention.

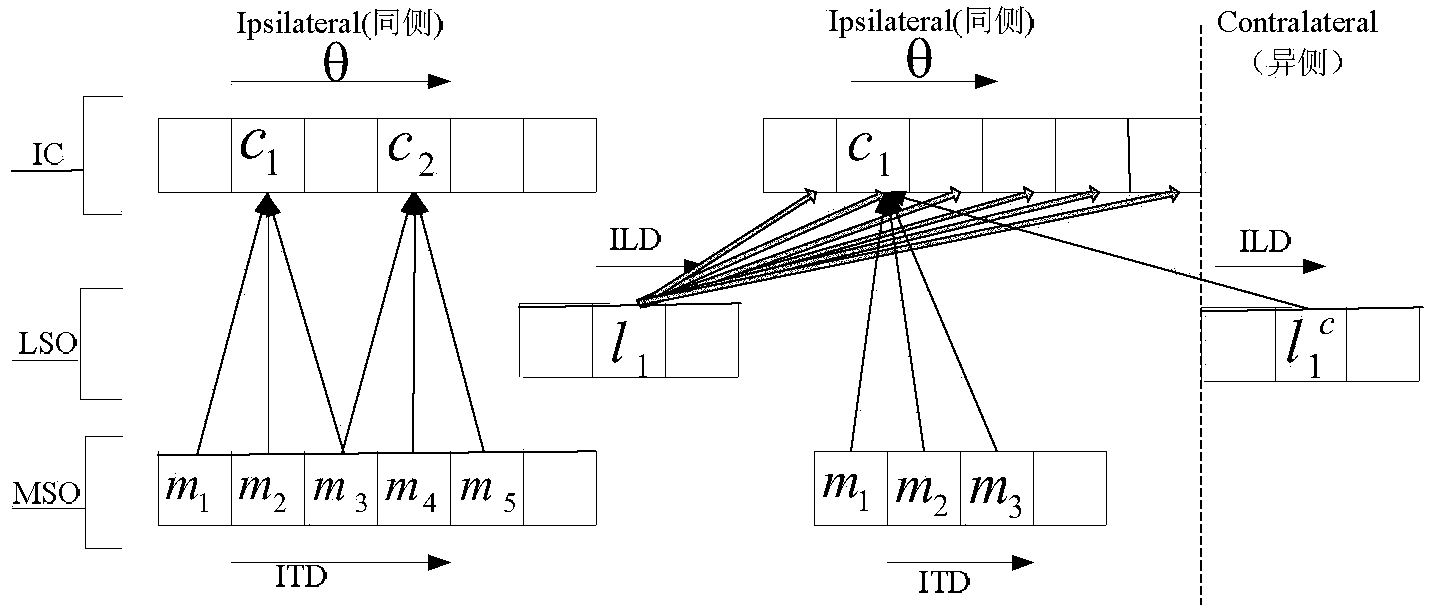

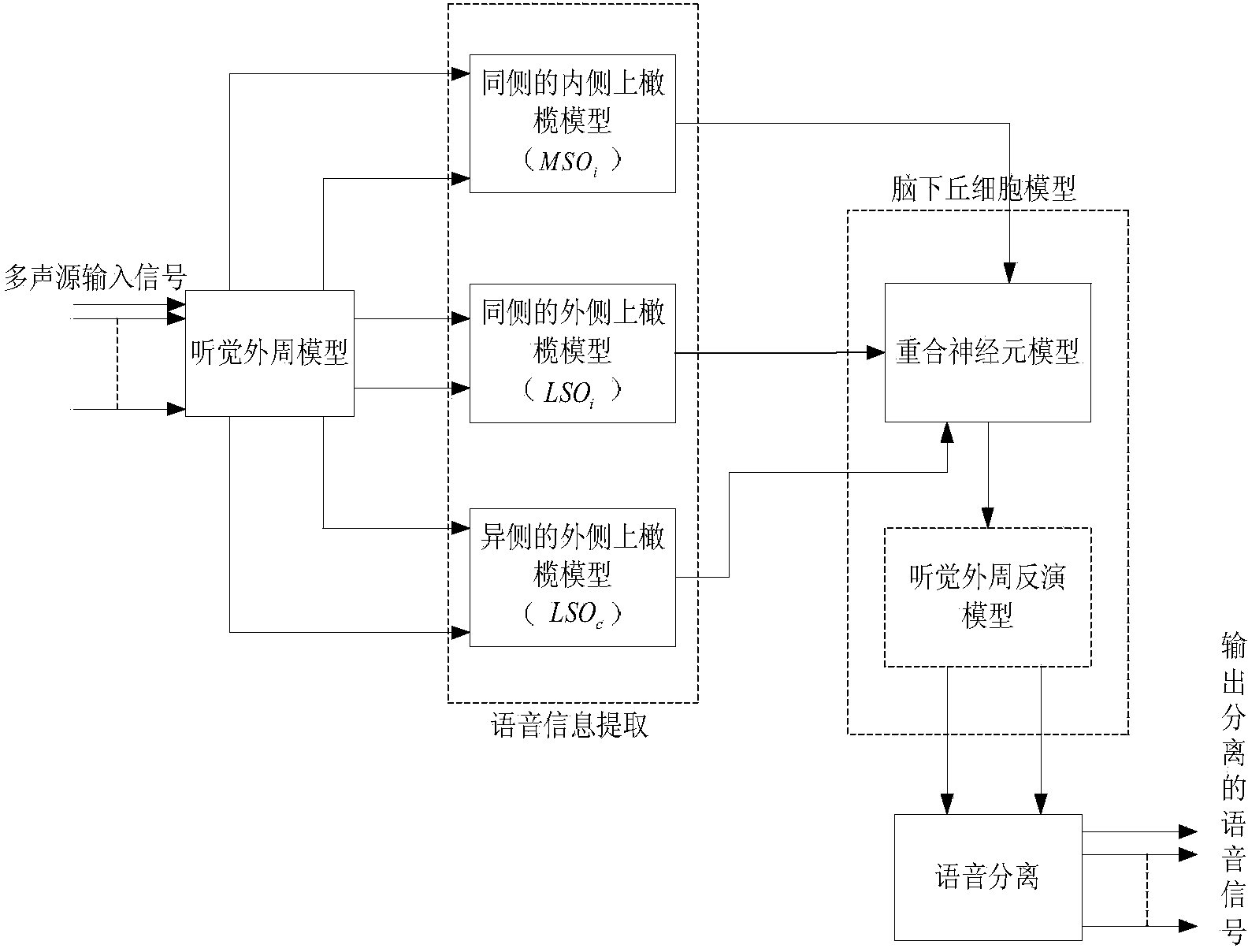

[0031] Figure 2 is a structural diagram of the principle of speech separation based on the central auditory system in a multi-sound-source environment, as proposed here. Multiple speech signals first pass through the peripheral auditory model, which divides them into frequency channels according to frequency. They then pass through the superior olivary complex, where speech information is extracted. Finally, an inferior colliculus cell model separates the multiple sound sources into individual speech signals, one per source.
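The text available here does not say how the superior olivary complex (SOC) or the final separation stage is modeled. The sketch below assumes a common binaural approach: the SOC stage estimates the interaural time difference (ITD) of each time-frequency unit by cross-correlating the left- and right-ear channel signals (in the spirit of the Jeffress coincidence model), and the final stage groups units into sources with a binary mask. The function names, the fixed-length frames used as units, and the assumption that a reference ITD is already known for each source are illustrative choices, not the patent's method.

```python
import math


def cross_correlation_itd(left, right, max_lag):
    """SOC-stage sketch: return the lag (in samples) that maximizes the
    interaural cross-correlation. Positive lag = right ear lags left ear."""
    best_lag, best_score = 0, -math.inf
    n = len(left)
    for lag in range(-max_lag, max_lag + 1):
        # Coincidence score for this internal delay (Jeffress-style).
        score = sum(left[i] * right[i + lag]
                    for i in range(n) if 0 <= i + lag < n)
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def separate(left_channels, right_channels, source_itds, frame_len, max_lag):
    """Final-stage sketch: cut each frequency channel into frames
    (time-frequency units), estimate each unit's ITD, assign the unit to
    the source with the nearest reference ITD (a binary mask), and sum
    the left-ear units assigned to each source across channels."""
    n = len(left_channels[0])
    outputs = [[0.0] * n for _ in source_itds]
    for left, right in zip(left_channels, right_channels):
        for start in range(0, n, frame_len):
            seg_l = left[start:start + frame_len]
            seg_r = right[start:start + frame_len]
            itd = cross_correlation_itd(seg_l, seg_r, max_lag)
            nearest = min(range(len(source_itds)),
                          key=lambda k: abs(itd - source_itds[k]))
            for i, x in enumerate(seg_l):
                outputs[nearest][start + i] += x
    return outputs
```

Summing the kept units directly is a crude resynthesis. In a real mixture each channel carries several sources at once, and the per-source reference ITDs would themselves have to be estimated, for example from peaks of the cross-correlation pooled over all channels.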

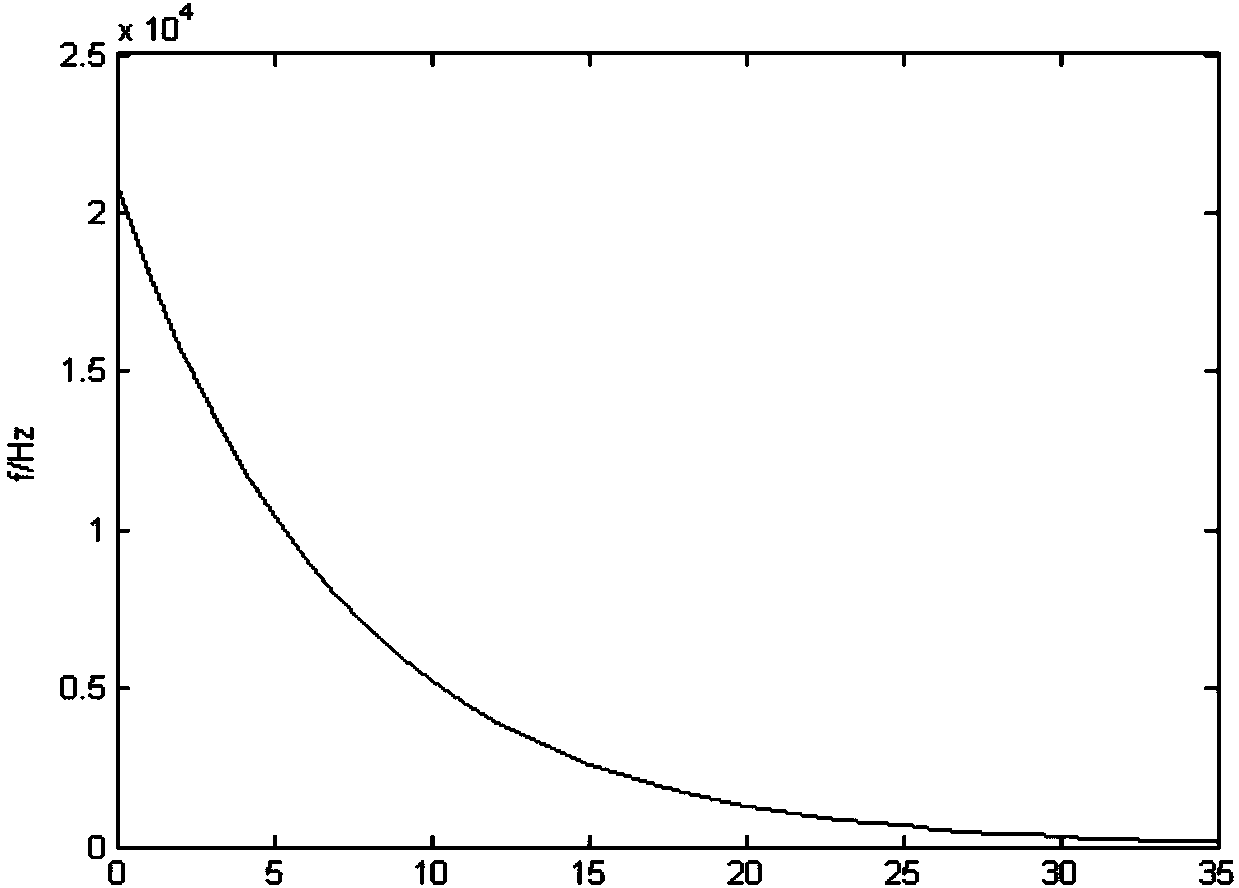

[0032] Acoustic studies have shown that the external auditory canals of the two ears respond differently to signals of different frequencies. The basilar membrane, located inside the cochlea, is an important link in the processing chain that leads to the central auditory system.
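The description is truncated before it specifies the peripheral auditory model. A widely used way to imitate the basilar membrane's frequency analysis is a gammatone filterbank whose center frequencies are spaced uniformly on the ERB-rate scale of Glasberg and Moore (1990); the sketch below assumes that model. The bandwidth factor 1.019, the 25 ms impulse-response length, the peak normalization, and all function names are illustrative assumptions, not values taken from the patent.

```python
import math


def erb_hz(f_hz):
    """Equivalent rectangular bandwidth in Hz (Glasberg & Moore, 1990)."""
    return 24.7 * (4.37 * f_hz / 1000.0 + 1.0)


def erb_rate(f_hz):
    """Position of f_hz on the ERB-rate (ERB-number) scale."""
    return 21.4 * math.log10(4.37 * f_hz / 1000.0 + 1.0)


def inverse_erb_rate(e):
    """Frequency in Hz whose ERB-rate value is e."""
    return (10.0 ** (e / 21.4) - 1.0) * 1000.0 / 4.37


def center_frequencies(low_hz, high_hz, num_channels):
    """Channel centers spaced uniformly on the ERB-rate scale, a rough
    stand-in for the place-frequency map of the basilar membrane."""
    lo, hi = erb_rate(low_hz), erb_rate(high_hz)
    step = (hi - lo) / (num_channels - 1)
    return [inverse_erb_rate(lo + k * step) for k in range(num_channels)]


def gammatone_ir(fc_hz, fs_hz, duration_s=0.025, order=4, b=1.019):
    """Sampled gammatone impulse response
    t**(order-1) * exp(-2*pi*b*ERB(fc)*t) * cos(2*pi*fc*t), scaled to peak 1."""
    bandwidth = b * erb_hz(fc_hz)
    ir = []
    for i in range(int(duration_s * fs_hz)):
        t = i / fs_hz
        ir.append(t ** (order - 1)
                  * math.exp(-2.0 * math.pi * bandwidth * t)
                  * math.cos(2.0 * math.pi * fc_hz * t))
    peak = max(abs(x) for x in ir)
    return [x / peak for x in ir]


def filter_channel(signal, ir):
    """Direct FIR convolution, output truncated to the input length."""
    return [sum(ir[k] * signal[i - k] for k in range(min(len(ir), i + 1)))
            for i in range(len(signal))]


def peripheral_model(signal, fs_hz, centers_hz, duration_s=0.025):
    """Split one ear's signal into one band-limited channel per center."""
    return [filter_channel(signal, gammatone_ir(fc, fs_hz, duration_s))
            for fc in centers_hz]
```

Running both ears through `peripheral_model` with the same centers yields the paired per-channel signals that a binaural stage would compare. With ERB spacing the centers crowd together at low frequencies and spread out at high ones, mirroring the finer frequency resolution of the cochlea at the low end.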

[0033] The basilar membrane has the functio...
