Multi-exposure image fusion method

A multi-exposure image fusion technique, applied in the field of computer vision, that addresses the problems of large image size and high time complexity.

Embodiment Construction

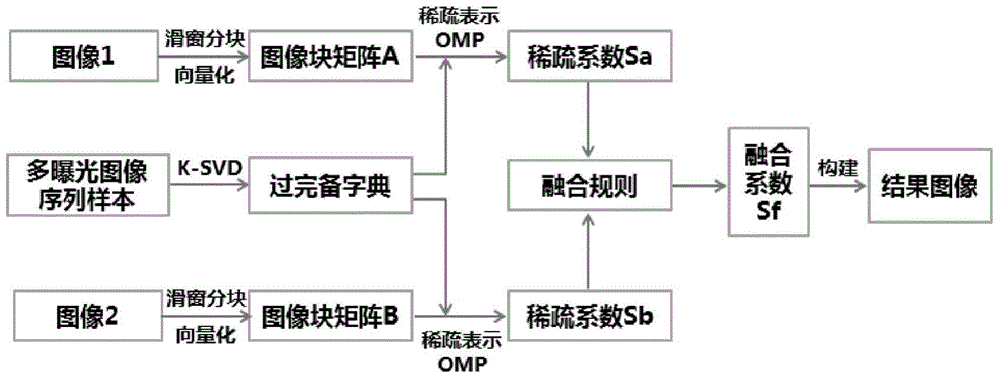

[0014] The invention constructs learning samples from multi-exposure image sequences and uses the K-SVD dictionary learning algorithm to generate a dictionary matrix D. Sparsity allows the sparse representation to select, more accurately and adaptively, the atoms most relevant to the signal being processed, which enhances the adaptability of the signal processing method. For this reason, the present invention uses a sparse matrix to represent image features.
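The dictionary learning step described in [0014] can be illustrated with a minimal NumPy sketch. It is not the patented implementation: the patch size, stride, atom count, sparsity level `k`, and the helper names `patch_matrix`, `omp`, and `ksvd` are all assumptions made for illustration. The sparse coding stage uses greedy Orthogonal Matching Pursuit, a common companion to K-SVD that the excerpt does not name.

```python
import numpy as np

def patch_matrix(images, size=8, step=4):
    """Stack flattened size x size patches from every exposure as columns.
    Patch size and stride are illustrative assumptions."""
    cols = []
    for img in images:
        h, w = img.shape
        for r in range(0, h - size + 1, step):
            for c in range(0, w - size + 1, step):
                cols.append(img[r:r + size, c:c + size].ravel())
    return np.asarray(cols, dtype=float).T

def omp(D, x, k):
    """Greedy Orthogonal Matching Pursuit: code x with at most k atoms of D."""
    residual, support = x.astype(float), []
    sol = np.zeros(0)
    for _ in range(k):
        if np.linalg.norm(residual) < 1e-10:
            break
        idx = int(np.argmax(np.abs(D.T @ residual)))
        if idx in support:
            break
        support.append(idx)
        # Least-squares refit on the atoms selected so far
        sol, *_ = np.linalg.lstsq(D[:, support], x, rcond=None)
        residual = x - D[:, support] @ sol
    coef = np.zeros(D.shape[1])
    if support:
        coef[support] = sol
    return coef

def ksvd(X, n_atoms, k, n_iter=10, seed=0):
    """Minimal K-SVD: alternate sparse coding and rank-1 atom updates.
    Requires n_atoms <= number of training columns in X."""
    rng = np.random.default_rng(seed)
    # Initialise the dictionary with randomly chosen, normalised samples
    D = X[:, rng.choice(X.shape[1], n_atoms, replace=False)].astype(float)
    D /= np.linalg.norm(D, axis=0, keepdims=True) + 1e-12
    A = np.zeros((n_atoms, X.shape[1]))
    for _ in range(n_iter):
        A = np.column_stack([omp(D, X[:, i], k) for i in range(X.shape[1])])
        for j in range(n_atoms):
            users = np.nonzero(A[j])[0]  # samples that use atom j
            if users.size == 0:
                continue
            A[j, users] = 0.0
            # Error without atom j, restricted to the samples that use it
            E = X[:, users] - D @ A[:, users]
            U, s, Vt = np.linalg.svd(E, full_matrices=False)
            D[:, j] = U[:, 0]
            A[j, users] = s[0] * Vt[0]
    return D, A
```

Calling `ksvd(patch_matrix(exposures), n_atoms, k)` on a list of grayscale arrays returns a dictionary with unit-norm columns and a coefficient matrix with at most `k` non-zero entries per column. Production code would more likely use an optimised solver than this per-column loop.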

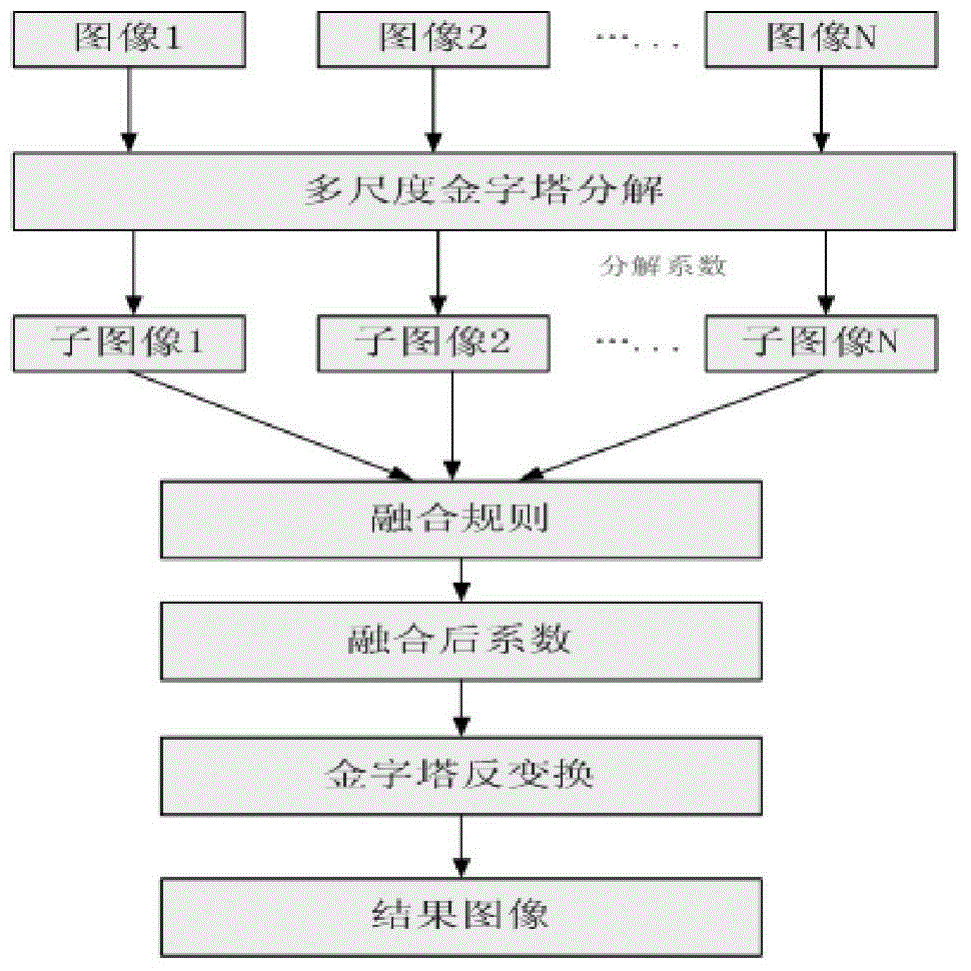

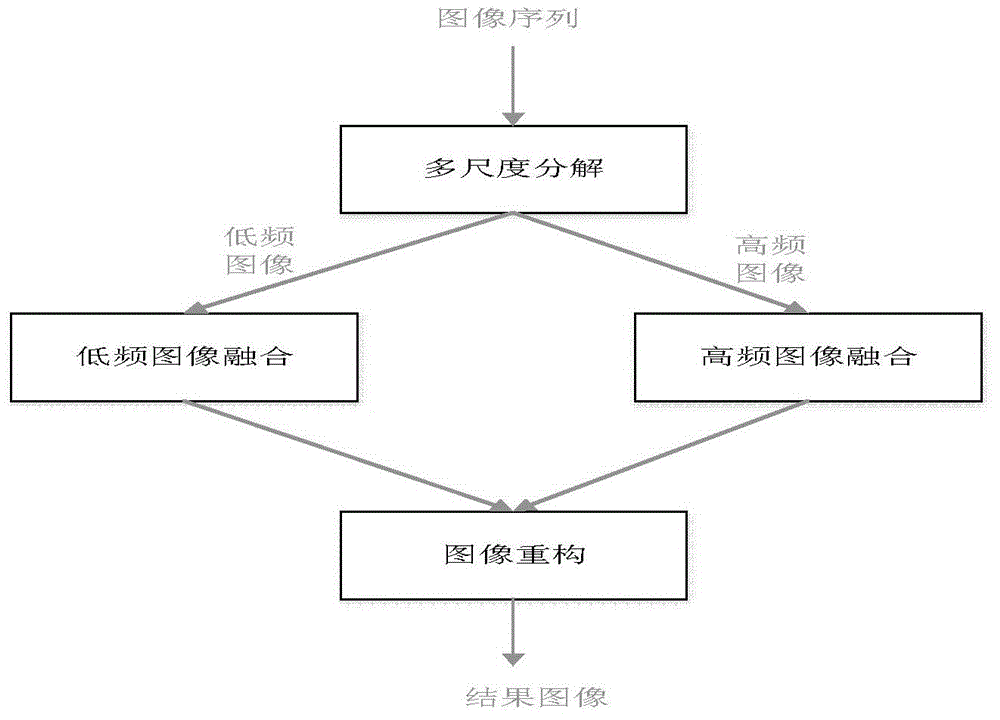

[0015] In addition, the human visual system responds to image features at different scales. This observation motivates image fusion algorithms in the frequency domain. In the fusion process, the image is decomposed into different frequency layers using a multi-scale decomposition method, and fusion is carried out on each frequency layer separately. In this way, different fusion rules can be adopted for the characteristics and details of different frequency layers, thereby achieving ...
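The layer-wise fusion idea in [0015] can be sketched as follows. The excerpt does not say which multi-scale decomposition or which fusion rules the invention uses, so everything specific here is an assumption: a simple Laplacian-style pyramid built from 2x2 block averaging, a maximum-absolute-value rule for the detail layers, and plain averaging for the coarse base layer. Function names are hypothetical, and image sides are assumed to be divisible by `2 ** levels`.

```python
import numpy as np

def downsample(img):
    """Halve resolution by averaging 2x2 blocks (even height and width assumed)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def upsample(img):
    """Double resolution by pixel repetition."""
    return np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)

def decompose(img, levels):
    """Split img into `levels` detail layers plus one coarse base layer."""
    layers, current = [], img.astype(float)
    for _ in range(levels):
        coarse = downsample(current)
        layers.append(current - upsample(coarse))  # high-frequency residual
        current = coarse
    layers.append(current)  # low-frequency base
    return layers

def reconstruct(layers):
    """Invert decompose by adding detail layers back from coarse to fine."""
    current = layers[-1]
    for detail in reversed(layers[:-1]):
        current = upsample(current) + detail
    return current

def fuse(images, levels=3):
    """Fuse same-sized exposures with a separate rule per frequency layer."""
    pyramids = [decompose(img, levels) for img in images]
    fused = []
    for depth in range(levels):
        stack = np.stack([p[depth] for p in pyramids])
        # Detail layers: keep the coefficient with the largest magnitude
        winner = np.argmax(np.abs(stack), axis=0)
        fused.append(np.take_along_axis(stack, winner[None], axis=0)[0])
    # Base layer: average across exposures
    fused.append(np.mean([p[-1] for p in pyramids], axis=0))
    return reconstruct(fused)
```

Because each detail layer stores the exact residual of its level, `reconstruct(decompose(img, levels))` recovers the input up to floating-point error, so any change in the fused result comes from the per-layer rules alone.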
