Indoor scene 3D reconstruction method based on Kinect

An indoor scene 3D reconstruction technology, applied in the field of computer vision, that addresses the problems of point cloud models with many redundant points, computationally complex algorithms, and high configuration requirements. It avoids point cloud redundancy, yields few redundant points, and keeps cost low.


Examples

Embodiment 1

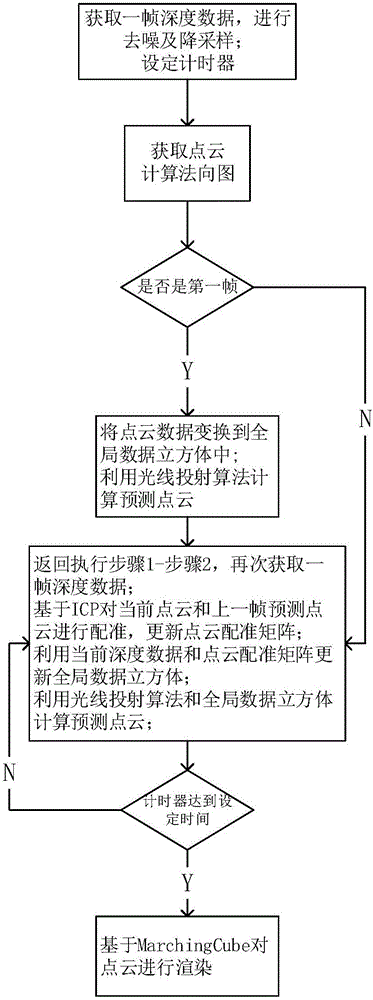

[0038] Existing 3D reconstruction technology is widely used in robot navigation, industrial measurement, virtual interaction, and other fields. In pursuit of better results, most prior-art algorithms still suffer from heavy computation, slow speed, and redundant points. To address this situation, the present invention proposes a Kinect-based method for 3D reconstruction of indoor scenes. Referring to Figure 1, the method comprises the following steps:

[0039] Step 1. Denoising and downsampling of depth data: First, set the timer t and start timing. The timer determines when to stop acquiring point cloud data and perform global point cloud rendering; its duration can be chosen according to the size of the scene. The Kinect camera is used to obtain one frame of depth data of objects in the indoor scene, and the joint bilateral filtering meth...
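The downsampling part of step 1 can be illustrated with a minimal sketch. The source text is cut off before it states how the downsampling is done, so the scheme here (2×2 block averaging that ignores invalid zero-depth pixels) is an assumption, and the function names `downsample_depth` and `build_depth_pyramid` are hypothetical. Denoising is not shown.

```python
import numpy as np

def downsample_depth(depth: np.ndarray) -> np.ndarray:
    """Halve the resolution by averaging each 2x2 block.

    Assumption: a depth of 0 marks an invalid pixel and is left out of
    the average, so holes do not drag neighbouring depths toward zero.
    """
    h2, w2 = depth.shape[0] // 2, depth.shape[1] // 2
    # Axes after reshape: (block row, row in block, block col, col in block)
    blocks = depth[:h2 * 2, :w2 * 2].astype(np.float64).reshape(h2, 2, w2, 2)
    valid = blocks > 0
    count = valid.sum(axis=(1, 3))
    total = np.where(valid, blocks, 0.0).sum(axis=(1, 3))
    out = np.zeros((h2, w2))
    # Blocks with no valid pixel stay at 0 (invalid)
    np.divide(total, count, out=out, where=count > 0)
    return out

def build_depth_pyramid(depth: np.ndarray, levels: int = 3) -> list:
    """Return [full resolution, half, quarter, ...] depth maps."""
    pyramid = [depth.astype(np.float64)]
    for _ in range(levels - 1):
        pyramid.append(downsample_depth(pyramid[-1]))
    return pyramid
```

Called on a 640×480 depth frame with `levels=3`, this would return maps of 640×480, 320×240, and 160×120 pixels, which is the kind of multi-resolution data that a coarse-to-fine registration step can consume.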

Embodiment 2

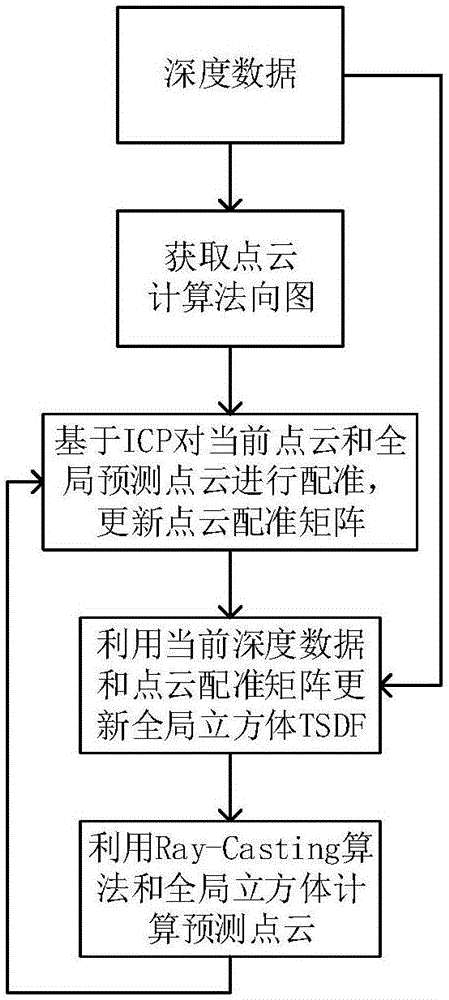

[0053] The Kinect-based indoor scene 3D reconstruction method is the same as in Embodiment 1. The multi-resolution depth data obtained by downsampling in step 1 is used to calculate the point cloud registration transformation matrix in step 4.2, specifically as follows:

[0054] 4.2.1 Use the ICP algorithm to calculate the point cloud registration matrix from the lowest-resolution depth data and the predicted point cloud data.

[0055] 4.2.2 Then, starting from this point cloud registration matrix, use progressively higher-resolution depth data and the predicted point cloud data to compute, level by level, a more accurate point cloud registration transformation matrix, and use it to update the current point cloud registration matrix.

[0056] In the calculation, the present invention first uses low-resolution depth data to compute an initial point cloud registration matrix, and then, based on this matrix, uses higher-resolution depth data to...
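The coarse-to-fine idea in steps 4.2.1 and 4.2.2 can be sketched as follows. This is an illustration under stated assumptions, not the patent's implementation: it uses plain point-to-point ICP with brute-force nearest-neighbour matching, the point clouds at each level are assumed to be already derived from the depth data, and the names `best_rigid_transform`, `icp`, and `coarse_to_fine_icp` as well as the iteration counts are hypothetical.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares R, t with R @ src[i] + t ~= dst[i] (Kabsch method)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection so that R is a proper rotation
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, dst_c - R @ src_c

def icp(src, dst, T_init, iterations):
    """Point-to-point ICP that refines the 4x4 matrix T_init."""
    T = T_init.copy()
    for _ in range(iterations):
        moved = src @ T[:3, :3].T + T[:3, 3]
        # Brute-force nearest neighbour in dst for every moved source point
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nearest = dst[d2.argmin(axis=1)]
        R, t = best_rigid_transform(src, nearest)
        T = np.eye(4)
        T[:3, :3], T[:3, 3] = R, t
    return T

def coarse_to_fine_icp(src_levels, dst_levels, iterations=(10, 5, 4)):
    """Levels are ordered from lowest to highest resolution."""
    T = np.eye(4)
    for src, dst, n in zip(src_levels, dst_levels, iterations):
        # Each level starts from the matrix found at the coarser level
        T = icp(src, dst, T, n)
    return T
```

The benefit of this ordering is that most iterations run on the smallest point sets, and the expensive high-resolution level only has to correct a small residual error.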

Embodiment 3

[0059] The Kinect-based indoor scene 3D reconstruction method is the same as in Embodiments 1-2. In step 4.3, the TSDF algorithm is used for point cloud fusion, as follows:

[0060] 4.3.1 When using the TSDF algorithm, a cubic grid represents the 3D space, and each cell of the grid stores the distance D from that cell to the surface of the object model and a weight W.

[0061] The present invention adopts the TSDF algorithm. Its main idea is to set up a virtual cube (Volume) of side length L on the graphics card. In this example, L is set to 2 meters. The cube is then divided into N×N×N voxels (Voxel); in this example N is set to 512, so each voxel has side length L/N (about 3.9 mm). Each voxel stores its distance D to the nearest surface of the object and its weight W. This example performs 3D reconstruction of a cabinet in the room.
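The volume layout described above can be sketched in a few lines. The voxel size L/N and the per-voxel D and W come from the text; everything else is an assumption for illustration: the class name `TSDFVolume`, the `truncation` parameter, and the weighted running-average update, which is the form commonly used in TSDF fusion and is not confirmed by the source. The sketch runs on the CPU with NumPy, whereas the text places the volume on the graphics card.

```python
import numpy as np

def voxel_size(side_length: float, resolution: int) -> float:
    """Side length of one voxel: L / N."""
    return side_length / resolution

class TSDFVolume:
    def __init__(self, side_length, resolution, truncation):
        self.size = voxel_size(side_length, resolution)
        self.truncation = truncation  # assumed truncation distance in metres
        shape = (resolution,) * 3
        # D: normalised signed distance, initialised to +1 (far from surface)
        self.D = np.ones(shape, dtype=np.float32)
        # W: accumulated weight, 0 means the voxel was never observed
        self.W = np.zeros(shape, dtype=np.float32)

    def index_of(self, point):
        """Voxel index containing a point given in volume coordinates (m)."""
        return tuple(np.floor(np.asarray(point) / self.size).astype(int))

    def integrate(self, index, signed_distance, weight=1.0):
        """Assumed update: weighted running average of truncated distances."""
        d = np.clip(signed_distance / self.truncation, -1.0, 1.0)
        w_old = self.W[index]
        self.D[index] = (w_old * self.D[index] + weight * d) / (w_old + weight)
        self.W[index] = w_old + weight
```

With the values from the example, `voxel_size(2.0, 512)` evaluates to 0.00390625 m. A 512×512×512 volume holding two 32-bit floats per voxel needs 1 GiB, so a small `resolution` is advisable when experimenting on the CPU.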

[0062] 4.3.2 At the same time, positive and negative are used t...
