Task scheduling method based on deep reinforcement learning in an Internet of Vehicles environment

A task scheduling technology based on reinforcement learning, applied in neural learning methods, biological neural network models, program startup/switching, etc., that addresses problems such as tasks that cannot be completed in time.

Embodiment

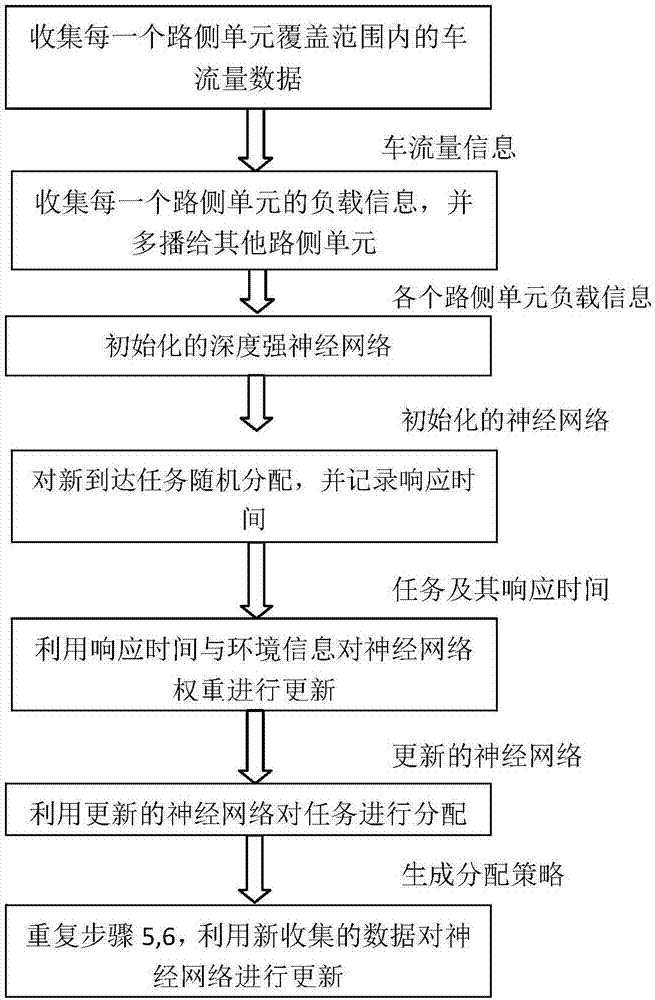

[0100] In this embodiment, an area of city A is used for the experiment.

[0101] First, this area contains 10 roadside units. Count the number of vehicles (unit: vehicles) at each roadside unit within a certain period of time, $\{Q_1, Q_2, \dots, Q_{10}\}$, and obtain the task queue length of each roadside unit, $\{L_1, L_2, \dots, L_{10}\}$.

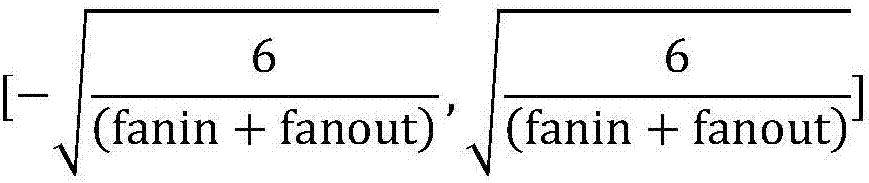

[0102] Second, initialize the neural network for task assignment with an input layer of 20 neurons, a first hidden layer of 7 neurons, a second hidden layer of 7 neurons, and an output layer of 10 neurons.
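The 20-7-7-10 layout described above can be illustrated with a minimal pure-Python sketch. Only the layer sizes come from the embodiment. The rest is assumed for illustration: that the 20 inputs are the 10 vehicle counts followed by the 10 queue lengths, the tanh hidden activations, the softmax output read as assignment probabilities over the 10 roadside units, the initialization scale, and the sample numbers.

```python
import math
import random

# Layer sizes from the embodiment: input, hidden 1, hidden 2, output.
LAYER_SIZES = [20, 7, 7, 10]


def init_network(sizes, seed=0):
    """Return one (weights, biases) pair per layer with small random weights."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        bound = 1.0 / math.sqrt(n_in)  # assumed initialization scale
        weights = [[rng.uniform(-bound, bound) for _ in range(n_in)]
                   for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def forward(layers, state):
    """Forward pass: tanh on hidden layers, softmax on the output layer."""
    activation = list(state)
    for index, (weights, biases) in enumerate(layers):
        pre = [sum(w * a for w, a in zip(row, activation)) + b
               for row, b in zip(weights, biases)]
        is_output = index == len(layers) - 1
        activation = softmax(pre) if is_output else [math.tanh(p) for p in pre]
    return activation


# Hypothetical observation: Q_1..Q_10 followed by L_1..L_10, crudely rescaled.
vehicle_counts = [12, 8, 15, 3, 9, 20, 7, 11, 5, 14]
queue_lengths = [4, 2, 6, 1, 3, 9, 2, 5, 1, 6]
state = [q / 20.0 for q in vehicle_counts] + [l / 10.0 for l in queue_lengths]

network = init_network(LAYER_SIZES)
probabilities = forward(network, state)  # one probability per roadside unit
```

Under these assumptions, `probabilities` holds 10 values that sum to 1, and a task could be assigned by sampling a roadside unit index from them.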

[0103] Third, warm up the neural network: assign tasks according to a random assignment strategy for a period of time, and record the response time of each task together with the environment variables.
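A rough sketch of such a warm-up phase follows. The response-time model, the queue service model, and the function names `simulated_response_time` and `warm_up` are hypothetical stand-ins for measurements taken in the real environment. Only the random assignment and the recording of response time and environment variables follow the text.

```python
import random

NUM_RSUS = 10  # roadside units in the embodiment


def simulated_response_time(queue_lengths, rsu, rng):
    """Hypothetical stand-in for a measured response time (grows with queue)."""
    return 1.0 + 0.5 * queue_lengths[rsu] + rng.uniform(0.0, 0.2)


def warm_up(num_tasks, seed=0):
    """Assign tasks at random and record (state, action, response_time)."""
    rng = random.Random(seed)
    queue_lengths = [0] * NUM_RSUS
    records = []
    for _ in range(num_tasks):
        # Environment variables: vehicle counts plus current queue lengths.
        vehicle_counts = [rng.randint(0, 20) for _ in range(NUM_RSUS)]
        state = vehicle_counts + list(queue_lengths)
        action = rng.randrange(NUM_RSUS)  # random assignment strategy
        response_time = simulated_response_time(queue_lengths, action, rng)
        records.append((state, action, response_time))
        queue_lengths[action] += 1
        # Assumed service model: each unit finishes one task with probability 0.5.
        queue_lengths = [max(0, q - (1 if rng.random() < 0.5 else 0))
                         for q in queue_lengths]
    return records


records = warm_up(200)
```

The resulting `records` list plays the role of the warm-up log: each entry pairs the observed environment with the randomly chosen roadside unit and the response time that followed.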

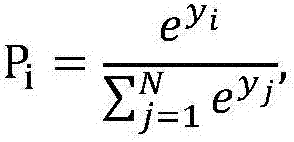

[0104] Then, the profit value of each strategy is calculated from the response time, and the profit values are standardized so that good and bad strategies can be clearly distinguished.
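The exact profit formula is not given in this excerpt, so the sketch below makes two assumptions: the profit is the negative of the response time, and standardization means subtracting the mean and dividing by the standard deviation (a z-score). With that choice, a positive score marks a better-than-average assignment and a negative score a worse one. The function name and sample values are hypothetical.

```python
import statistics


def standardized_profits(response_times):
    """Map response times to z-scored profits (assumed: profit = -time)."""
    profits = [-t for t in response_times]
    mean = statistics.fmean(profits)
    spread = statistics.pstdev(profits)
    if spread == 0.0:
        # All assignments performed identically; none is better or worse.
        return [0.0 for _ in profits]
    return [(p - mean) / spread for p in profits]


response_times = [1.2, 0.8, 2.5, 1.0, 1.5]  # hypothetical measurements
scores = standardized_profits(response_times)
```

Here the fastest task (0.8) receives the highest score and the slowest (2.5) the lowest, and the scores average to zero.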

[0105] Next, the neural network is updated based on the BP algorithm us...

