Programming model oriented to neural network heterogeneous computing platform

A programming model oriented to neural network heterogeneous computing platforms, in the field of neural network and computing platform technology. It addresses the problem that existing approaches cannot adequately meet performance requirements, and achieves high flexibility, good computing performance, and strong scalability.


Examples

Example 1

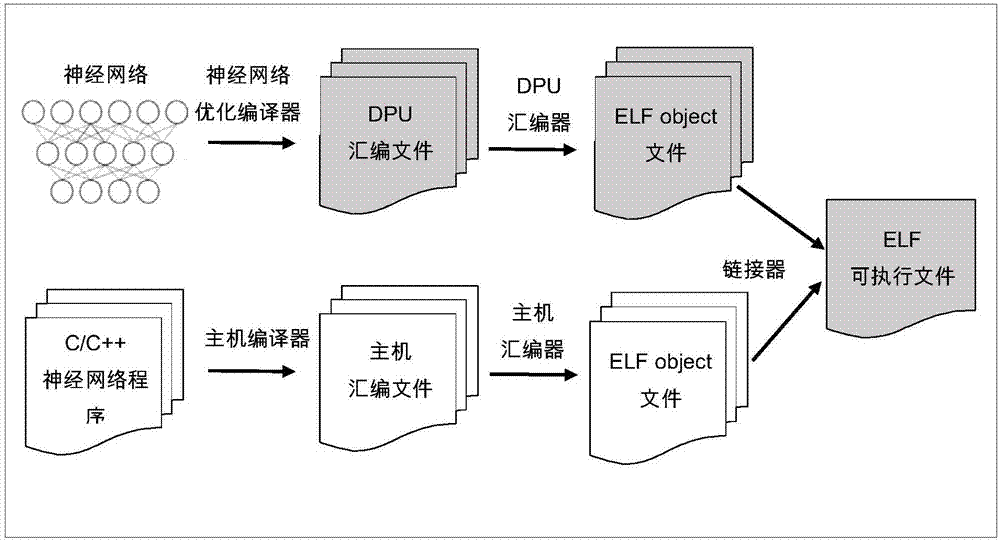

[0030] The functions of the constituent parts of the first embodiment are first described below with reference to Figure 1.

[0031] Figure 1 shows a hybrid compilation model for a heterogeneous computing platform consisting of a CPU and a neural network special-purpose processor.

[0032] In Figure 1, the neural network (NN) optimization compiler takes a neural network model as input and analyzes the network topology to obtain the control-flow and data-flow information in the model. Based on this information, it applies various optimization transformations to the model. Specifically, the compiler merges computational operations across different network layers of the model, reducing the computational intensity. For structured and unstructured sparse networks, the compiler eliminates the unnecessary computation and data movement caused by sparse (zero) values. In addition, the compiler will fully reuse the network...
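The two transformations named in [0032], merging operations across layers and eliminating work caused by sparse values, can be illustrated with a minimal sketch. It is not the compiler described in this document: the dense-plus-ReLU layer pair, the function names, and the CSR-like `(column, value)` row layout are all hypothetical choices made for illustration.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]
SparseRows = List[List[Tuple[int, float]]]  # hypothetical CSR-like layout


def dense_then_relu(w: Matrix, b: Vector, x: Vector) -> Vector:
    """Unfused: layer 1 (dense) materializes its whole output vector,
    then layer 2 (ReLU) reads that vector back in a second pass."""
    y = [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(w, b)]
    return [max(0.0, v) for v in y]


def dense_relu_fused(w: Matrix, b: Vector, x: Vector) -> Vector:
    """Fused: bias add and ReLU are applied to each accumulator as soon as
    it is complete, so no intermediate vector is stored and re-read."""
    out: Vector = []
    for row, bi in zip(w, b):
        acc = bi
        for wij, xj in zip(row, x):
            acc += wij * xj
        out.append(acc if acc > 0.0 else 0.0)
    return out


def compress_rows(w: Matrix) -> SparseRows:
    """Keep only non-zero weights, each paired with its column index."""
    return [[(j, v) for j, v in enumerate(row) if v != 0.0] for row in w]


def sparse_dense_relu_fused(cw: SparseRows, b: Vector, x: Vector) -> Vector:
    """Fused dense+ReLU over compressed rows: zero weights are never
    loaded or multiplied, and x is read only where a weight is non-zero."""
    out: Vector = []
    for row, bi in zip(cw, b):
        acc = bi
        for j, v in row:
            acc += v * x[j]
        out.append(acc if acc > 0.0 else 0.0)
    return out


if __name__ == "__main__":
    w = [[1.0, 0.0, -2.0], [0.0, 0.0, 3.0]]
    b = [0.5, -1.0]
    x = [2.0, 1.0, 1.0]
    cw = compress_rows(w)
    print(dense_then_relu(w, b, x))           # [0.5, 2.0]
    print(dense_relu_fused(w, b, x))          # [0.5, 2.0]
    print(sparse_dense_relu_fused(cw, b, x))  # [0.5, 2.0]
    print(sum(len(r) for r in cw), "of", sum(len(r) for r in w), "weights kept")  # 3 of 6
```

All three variants compute the same result; the fused versions simply avoid writing the intermediate dense output and reading it back, and the compressed version additionally skips the three zero weights in this example. A real compiler would choose fusion patterns and sparse formats to suit the target processor, which this sketch does not attempt.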

Example 2

[0056] The programming environment of the present invention, that is, the program execution support model, is described below with reference to Figure 4.

[0057] Figure 4 shows the program execution support model of the heterogeneous computing platform according to the second embodiment of the present invention.

[0058] In Figure 4, the neural network dedicated processor is abbreviated as DPU to distinguish it from the host CPU. Those skilled in the art will understand that this naming does not limit the generality of the neural network special-purpose processor. That is, in this specification and the accompanying drawings, "neural network dedicated processor" and "DPU" are used interchangeably to denote the processor on the heterogeneous computing platform that is distinct from the CPU.

[0059] As shown in Figure 4, the neural network dedicated processor driver (shown as "DPU driver" in Figure 4)...
