A load balancing method and apparatus based on CPU-GPU

A load-balancing technique for the computer field that distributes work according to the current load. It addresses problems such as limited GPU memory capacity, unbalanced distribution of tasks between the CPU and the GPU, and the inability to process large data sets, with the aim of improving overall system performance.

Embodiment Construction

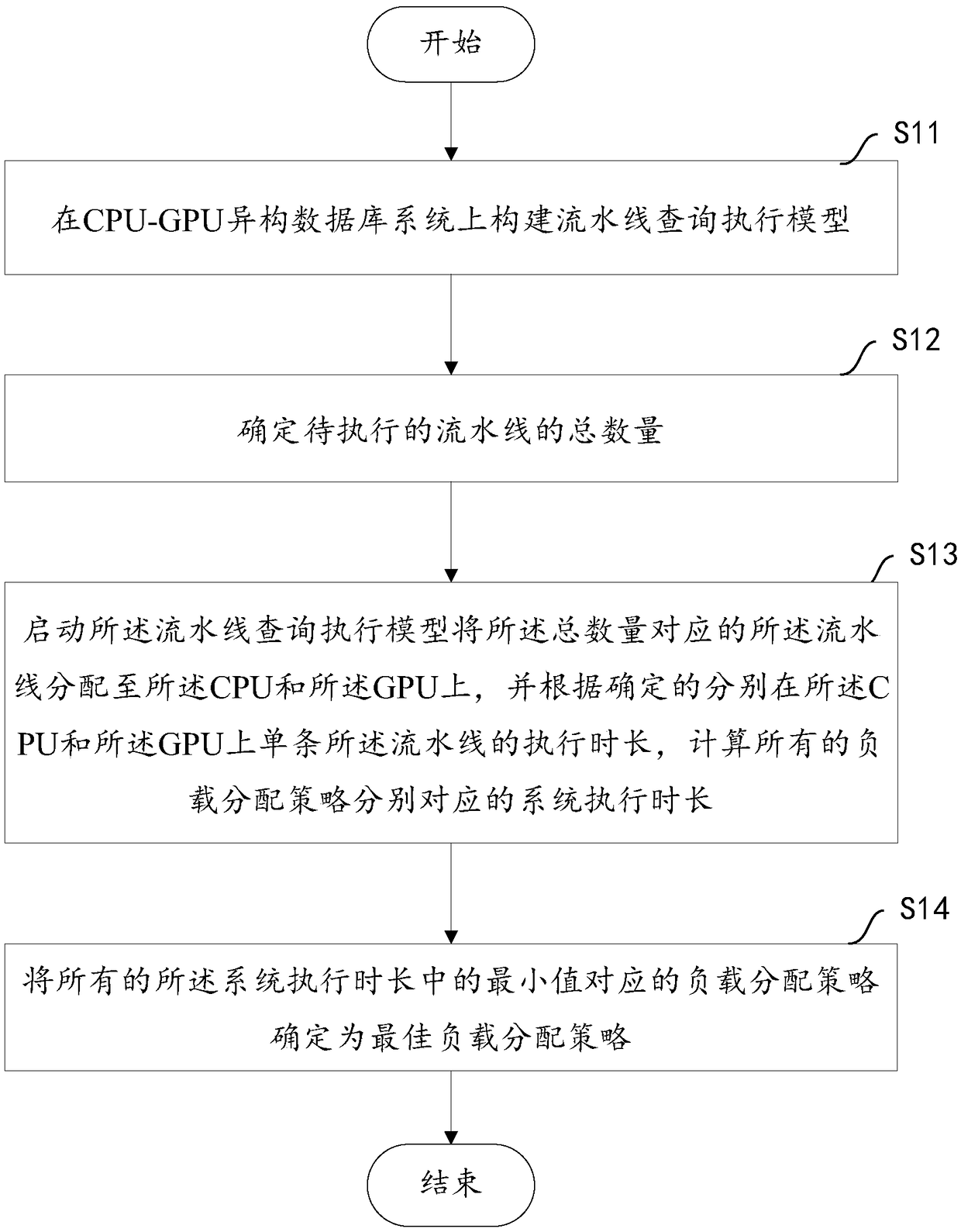

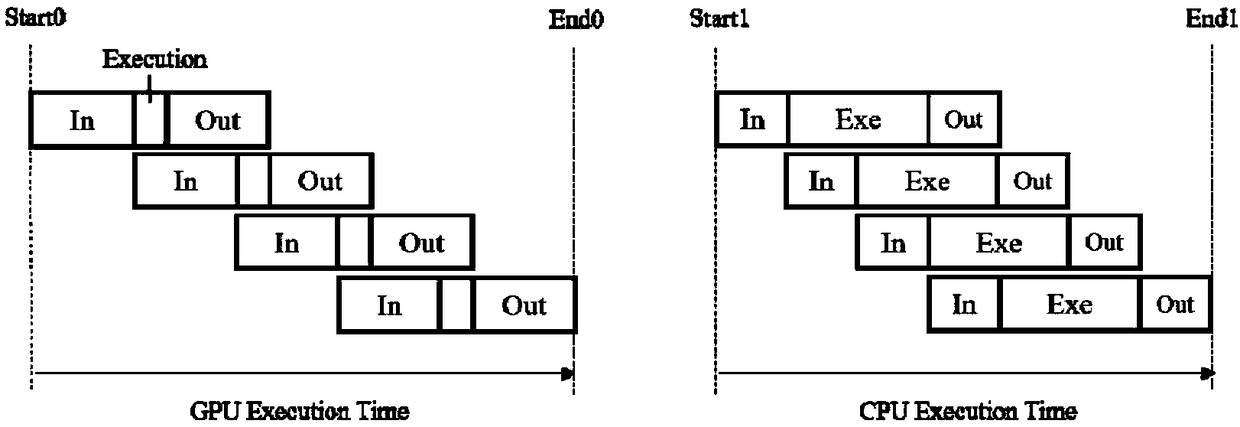

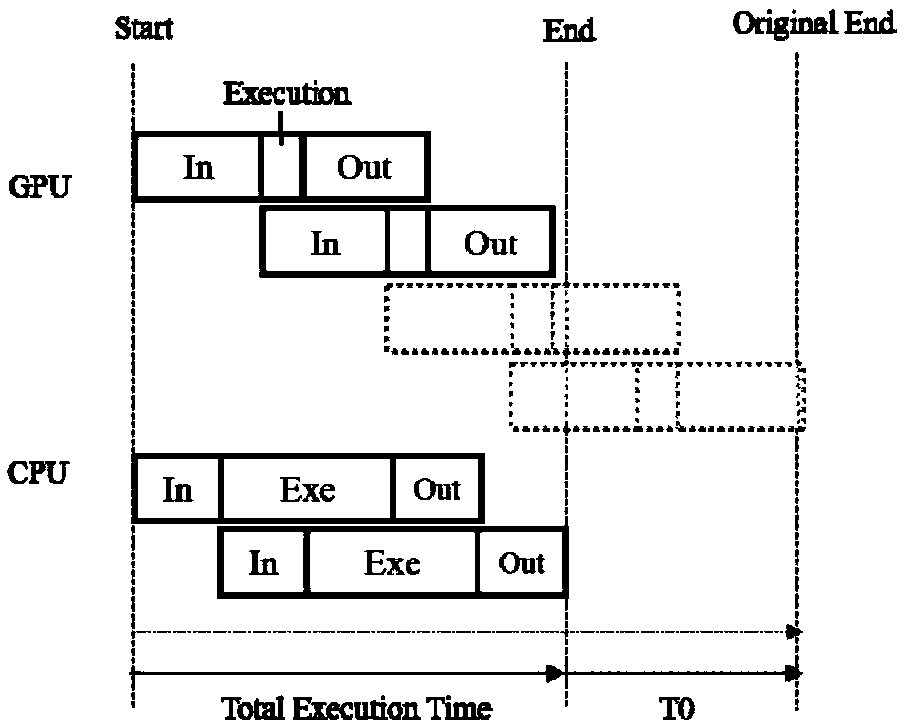

[0055] The application will be described in further detail below in conjunction with the accompanying drawings.

[0056] In a typical configuration of the present application, the terminal, the device serving the network, and the trusted party each include one or more processors (CPUs), input/output interfaces, network interfaces, and memory.

[0057] Memory may include volatile memory in computer-readable media, such as random access memory (RAM), and/or non-volatile memory, such as read-only memory (ROM) or flash memory. Memory is an example of computer-readable media.

[0058] Computer-readable media include permanent and non-permanent, removable and non-removable media, and may store information by any method or technology. The information may be computer-readable instructions, data structures, program modules, or other data. Examples of computer storage media include, but are not limited to, phase change memory (PRAM), static random acce...
