51 results about how to "Make full use of computing resources" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

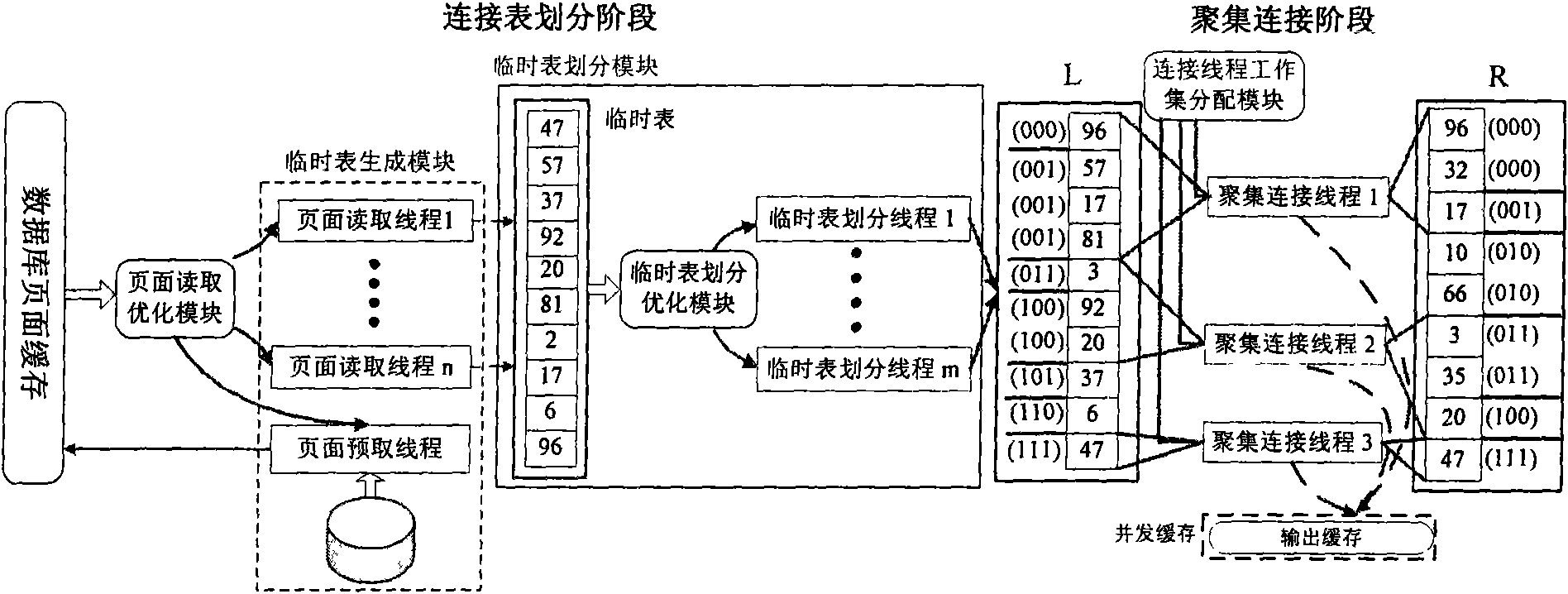

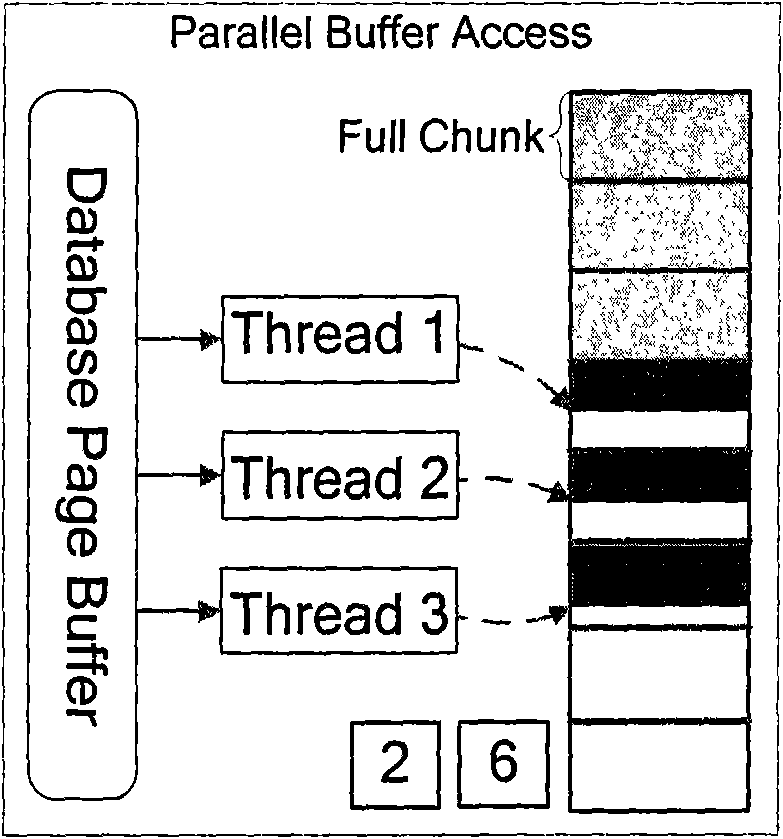

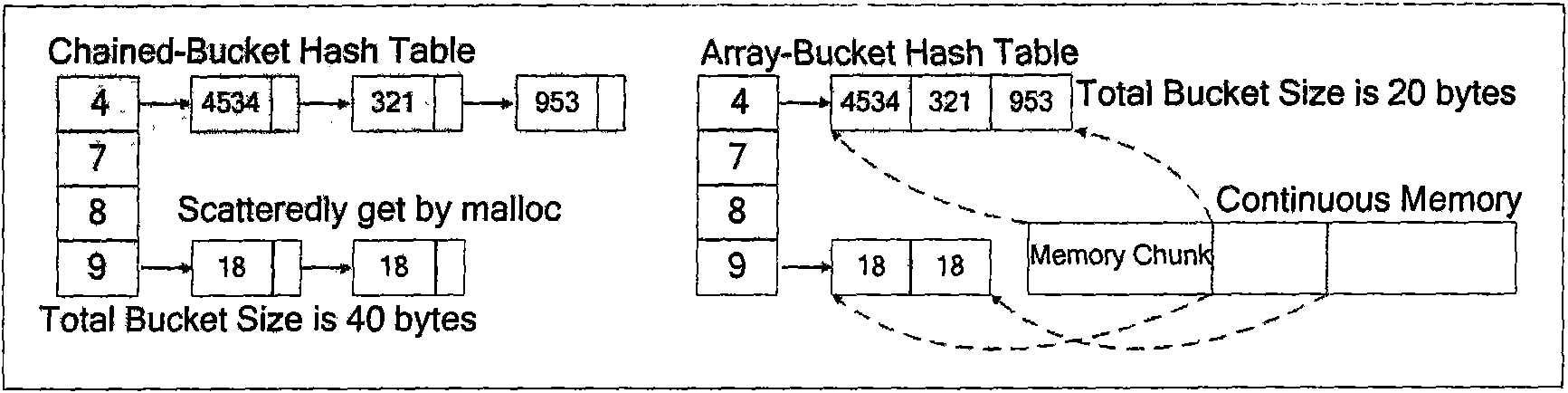

Hash connecting method for database based on shared Cache multicore processor

Inactive | CN101593202A | Reduce Cache access conflicts; Load balancing; Memory addressing/allocation/relocation; Concurrent instruction execution; Cache access; Linked list

The invention discloses a hash connection (hash join) method for databases based on a shared-Cache multicore processor. The method is divided into a list partitioning phase and an aggregation connection phase. List partitioning comprises the following steps: first, a temporary list is generated by a temporary list generation module; second, temporary-list partitioning threads partition the temporary list; third, before partitioning, a suitable data partitioning strategy is chosen according to the size of the temporary list; and fourth, during partitioning, a suitable start time for the partitioning threads is chosen to reduce Cache access conflicts. In the aggregation connection phase, an execution method that classifies aggregations by size is adopted, and memory access during the hash connection is optimized. The method lets the hash connection make full use of the multicore processor's computing resources, bringing its speedup close to the number of processor cores and greatly shortening its execution time.

Owner:NAT UNIV OF DEFENSE TECH +2
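
The partition-then-join structure above can be illustrated with a generic partitioned hash join. This is a minimal Python sketch, not the patented method: it omits the cache-aware thread start timing and the size-based aggregation classification, and the `rows_per_partition` sizing rule is a hypothetical stand-in for choosing a partitioning strategy from the temporary list's size. Because of CPython's global interpreter lock, the threads here only demonstrate that partitions are independent; a real speedup would need native threads with one partition per core.

```python
from concurrent.futures import ThreadPoolExecutor


def choose_partitions(n_rows, rows_per_partition=4096):
    """Hypothetical sizing rule: enough partitions that each build-side
    partition holds at most rows_per_partition rows (a stand-in for
    "fits in the shared cache"), rounded up to a power of two."""
    needed = max(1, -(-n_rows // rows_per_partition))  # ceiling division
    parts = 1
    while parts < needed:
        parts *= 2
    return parts


def partition(rows, key, num_partitions):
    """Partition phase: scatter rows into buckets by the hash of the join key."""
    buckets = [[] for _ in range(num_partitions)]
    for row in rows:
        buckets[hash(key(row)) % num_partitions].append(row)
    return buckets


def join_partition(build_part, probe_part, build_key, probe_key):
    """Join phase for one partition: build a hash table, then probe it."""
    table = {}
    for row in build_part:
        table.setdefault(build_key(row), []).append(row)
    return [match + row
            for row in probe_part
            for match in table.get(probe_key(row), ())]


def partitioned_hash_join(build, probe, build_key, probe_key, workers=4):
    p = choose_partitions(len(build))
    build_parts = partition(build, build_key, p)
    probe_parts = partition(probe, probe_key, p)
    # Matching keys always land in the same partition index, so the p joins
    # are independent and can be handed to separate workers.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(join_partition, build_parts, probe_parts,
                           [build_key] * p, [probe_key] * p)
        return [row for part in results for row in part]
```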

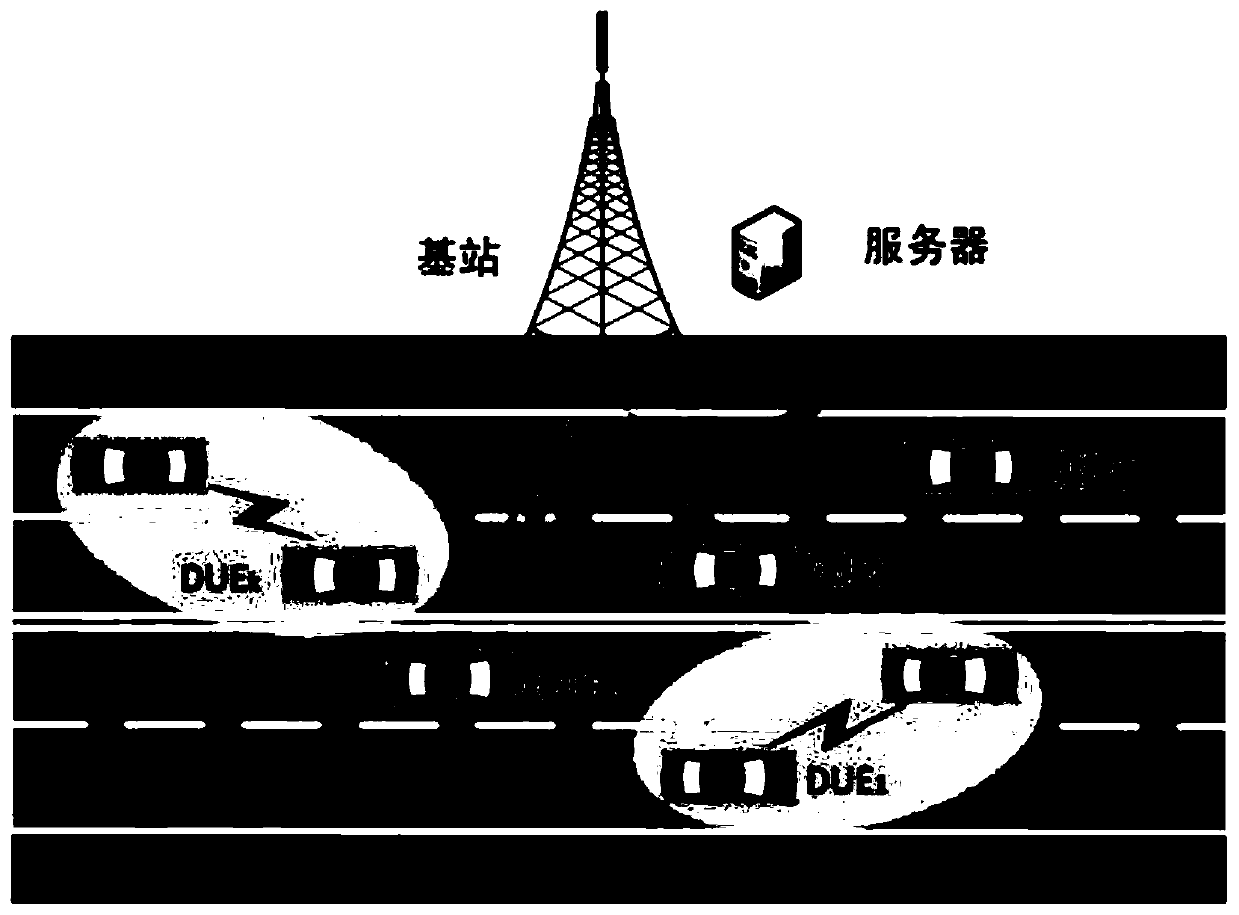

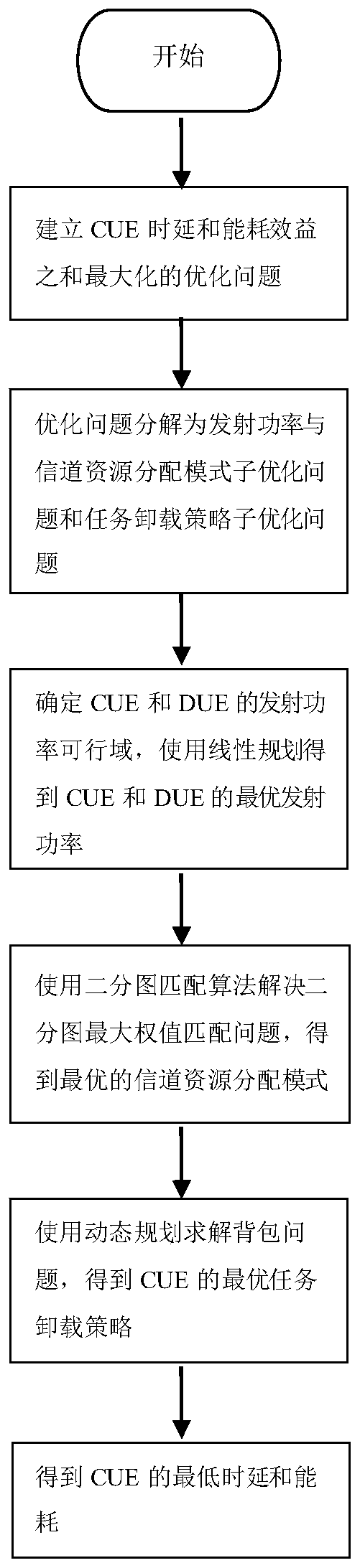

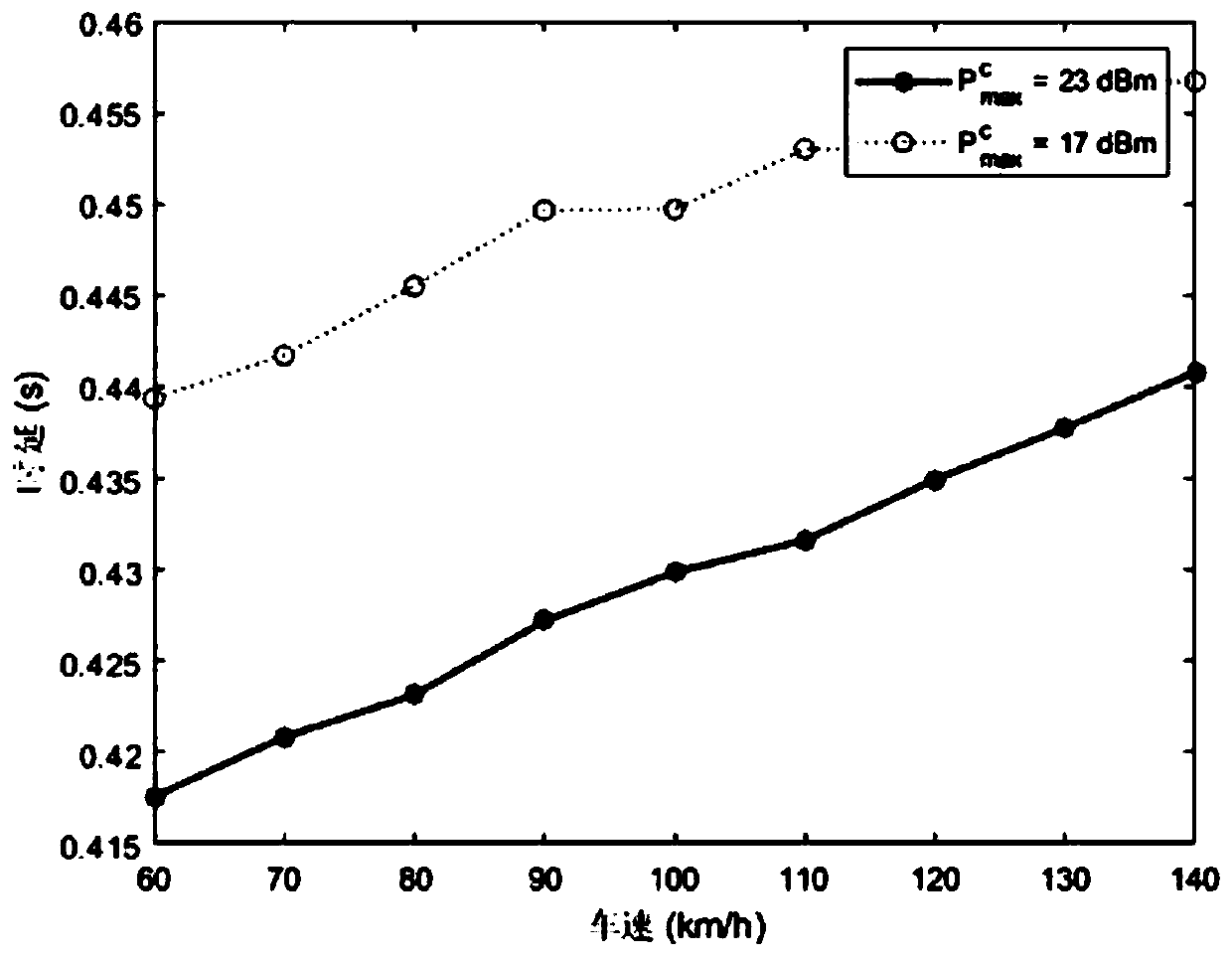

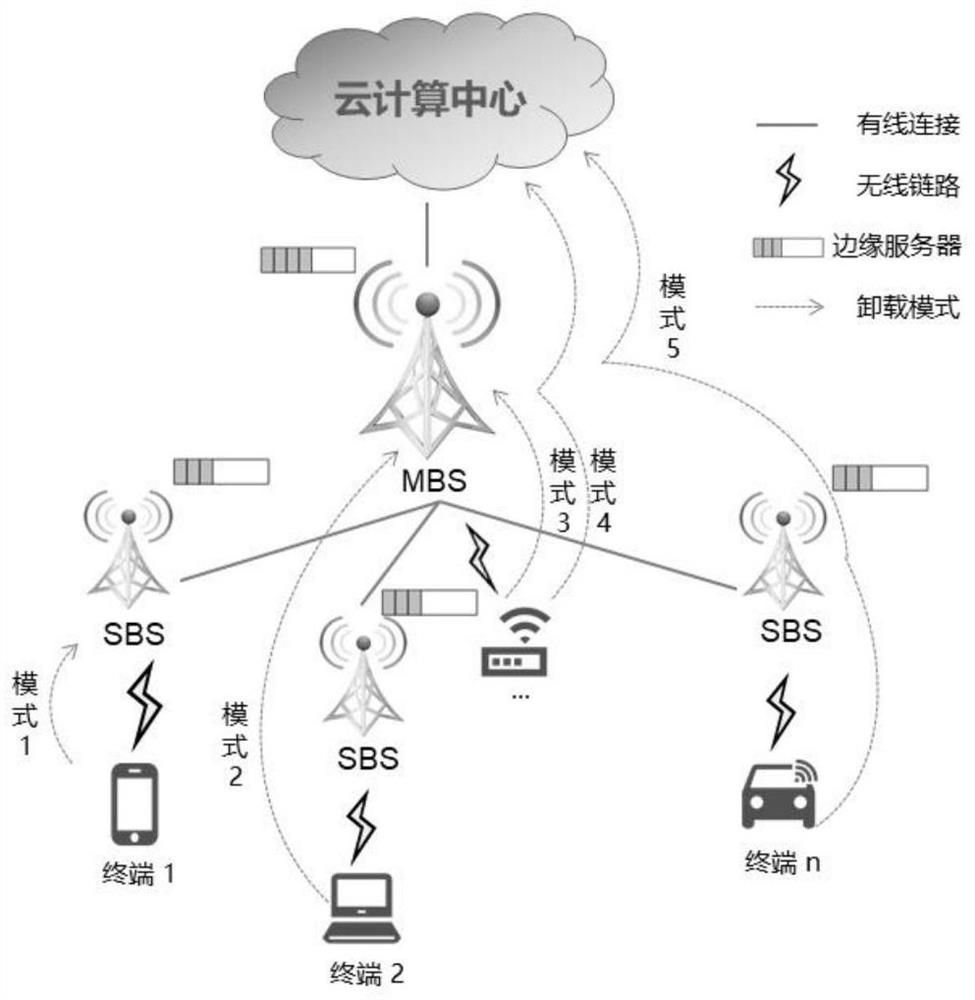

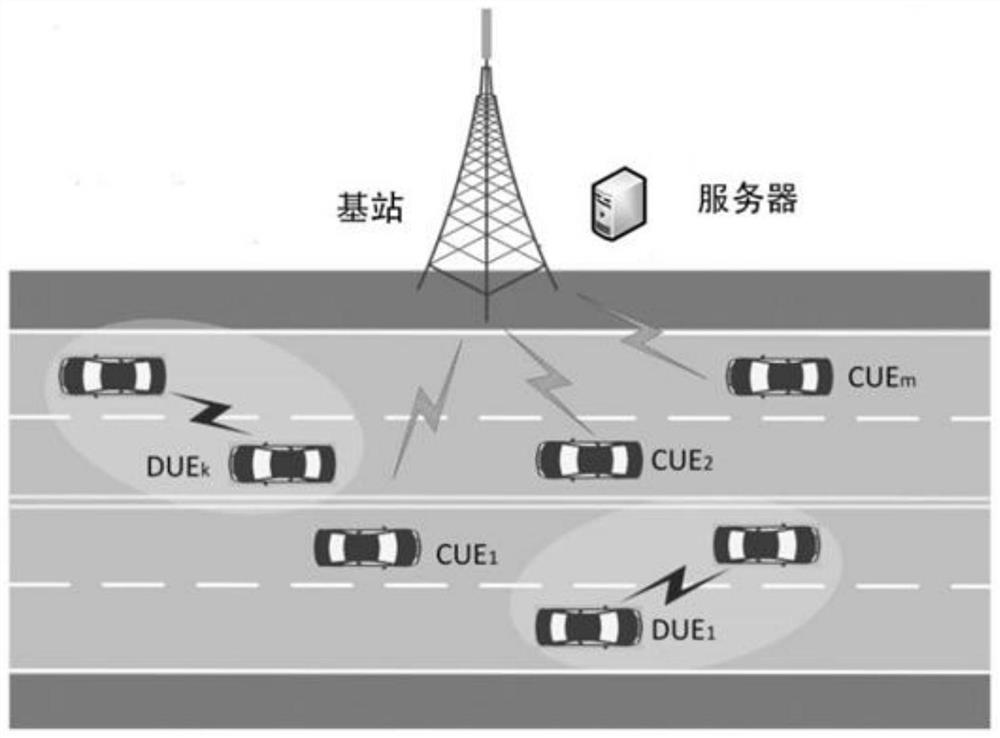

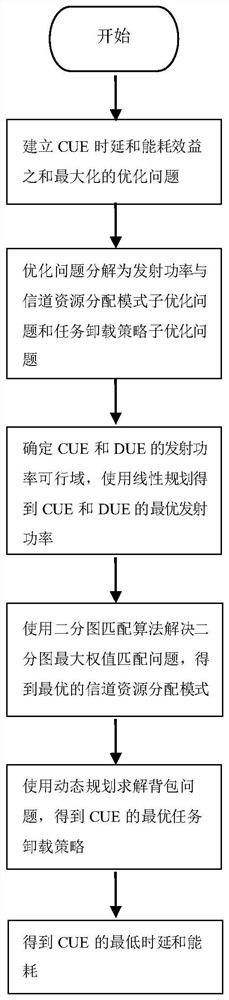

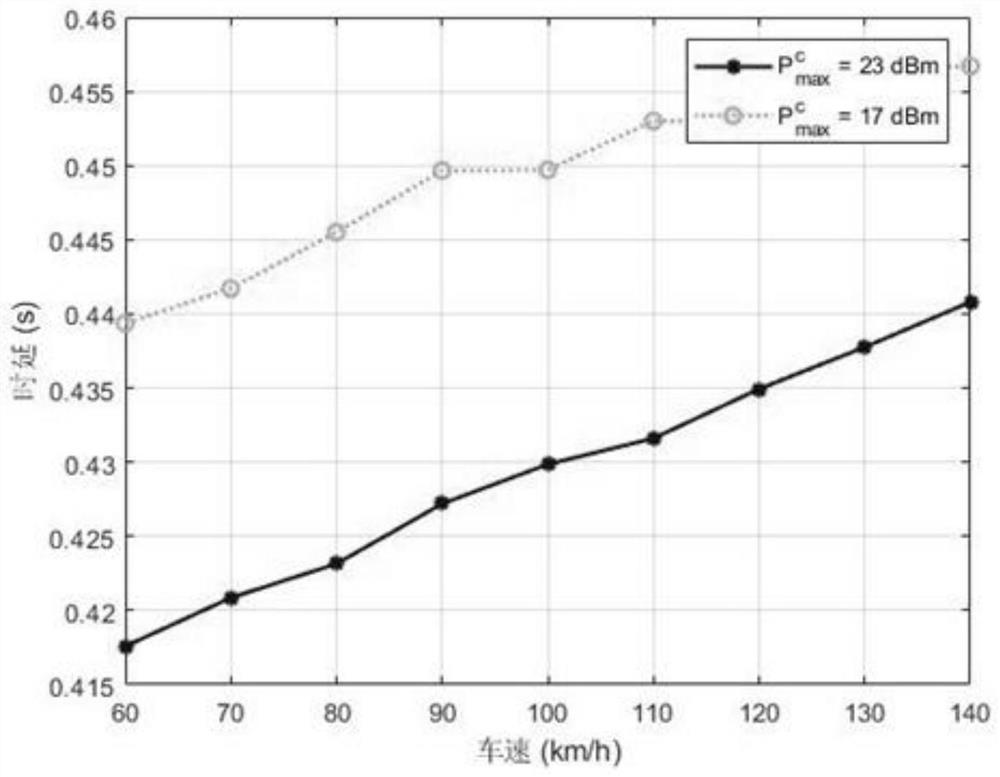

D2D-based multi-access edge computing task unloading method in Internet of Vehicles environment

Active | CN111132077A | Make full use of computing resources; Satisfy the delay reliability condition; Power management; Particular environment based services; Transmitted power; Simulation

The invention discloses a D2D-based multi-access edge computing task offloading method for an Internet of Vehicles environment. The task offloading strategy, the transmit power, and the channel resource allocation mode are modeled as a mixed-integer nonlinear programming problem whose objective is to maximize the sum of the delay and energy-consumption benefits of all cellular users (CUEs) communicating with the base station in the Internet of Vehicles system. The method has low time complexity and makes effective use of the system's channel resources. It guarantees the delay reliability of the D2D users (DUEs) that exchange V2V data locally in D2D mode, while keeping the delay and energy consumption of the CUEs close to their minimum, thus meeting the low-latency and high-reliability requirements of the Internet of Vehicles. Verification on a purpose-built simulation platform shows that the total delay and energy consumption of the CUEs are approximately minimized and that the method outperforms a traditional task offloading method.

Owner:SOUTH CHINA UNIV OF TECH

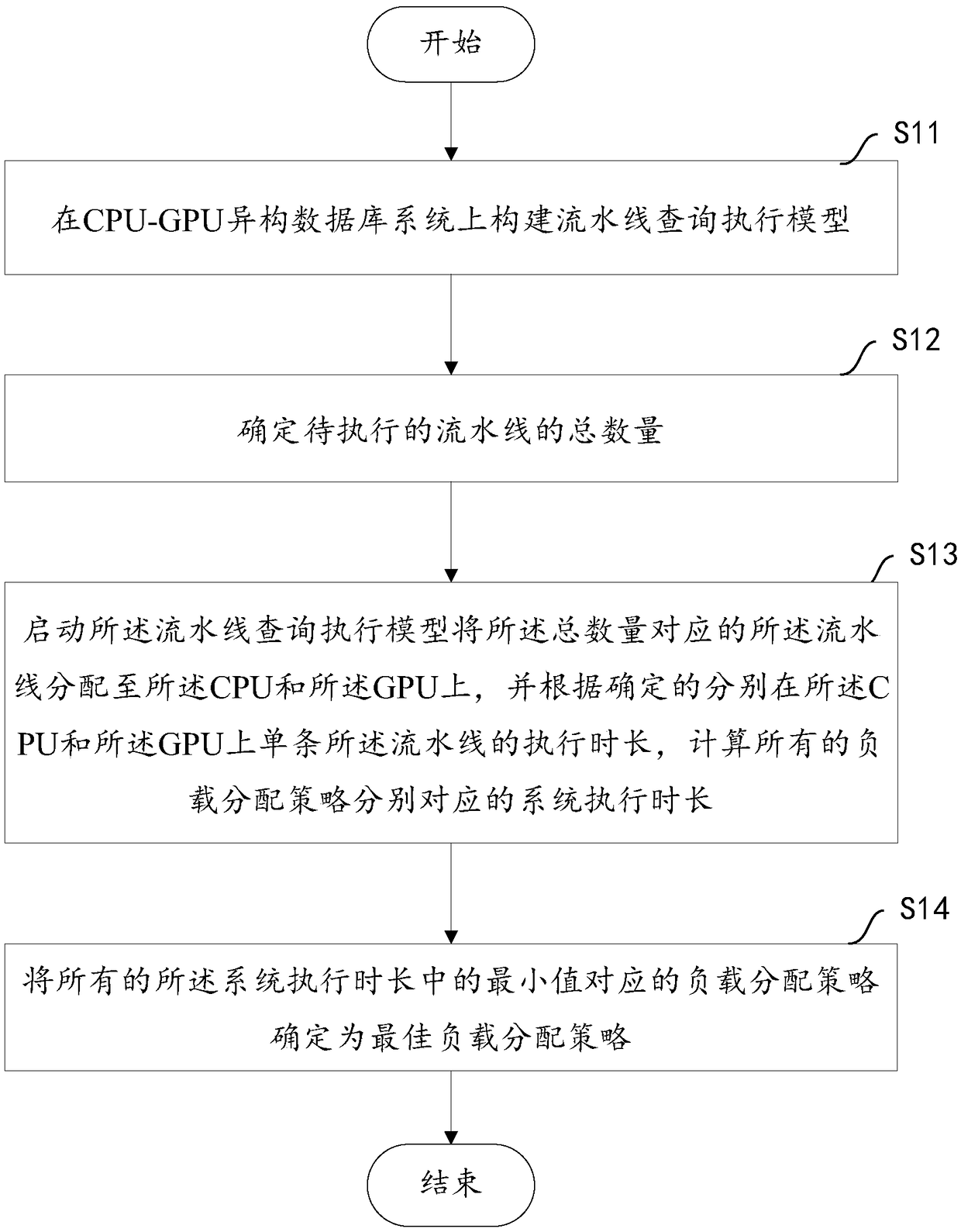

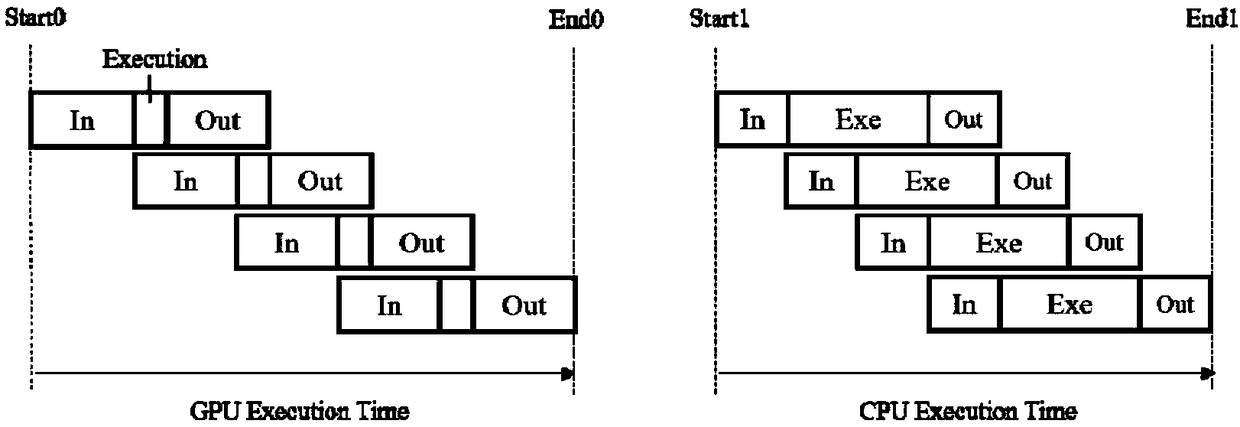

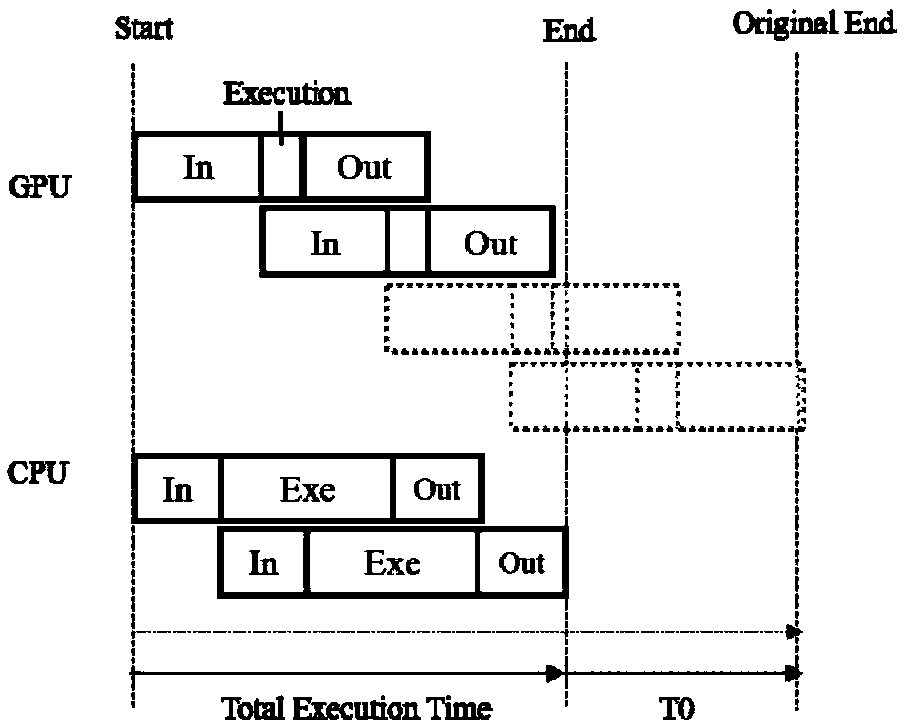

A load balancing method and apparatus based on CPU-GPU

Active | CN109213601A | Improve performance; Make full use of computing resources; Resource allocation; Processor architectures/configuration; Query analysis; Data analysis system

The present application provides a CPU-GPU based load balancing method and apparatus. A pipelined query execution model is built on a CPU-GPU heterogeneous database system so that the CPU-GPU heterogeneous data analysis system can support query analysis in big data scenarios. The total number of pipelines to be executed is determined, and the pipelined query execution model is started to allocate that many pipelines between the CPU and the GPU. The system execution time of every load distribution strategy is calculated from the previously determined execution time of a single pipeline on the CPU and on the GPU, and the strategy with the minimum system execution time is chosen as the optimal CPU-GPU allocation strategy. This load balancing strategy distributes the pipeline load sensibly across the different processors and makes full use of their computing resources, improving system performance and bringing the system to its best overall performance.

Owner:EAST CHINA NORMAL UNIV
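
The strategy search described above amounts to trying every split of N pipelines and keeping the one with the smallest system time. The sketch below assumes (this is not stated in the abstract) that the CPU and the GPU run concurrently, that each processes its share of pipelines one after another, and that the per-pipeline times `t_cpu` and `t_gpu` are constants measured beforehand, so the system time of a split is the larger of the two sides.

```python
def best_split(total_pipelines, t_cpu, t_gpu):
    """Enumerate every CPU/GPU split and return (on_cpu, on_gpu, system_time)
    for the split with the smallest system time."""
    best = None
    for on_gpu in range(total_pipelines + 1):
        on_cpu = total_pipelines - on_gpu
        # Both processors work in parallel, so the slower side decides.
        system_time = max(on_cpu * t_cpu, on_gpu * t_gpu)
        if best is None or system_time < best[2]:
            best = (on_cpu, on_gpu, system_time)
    return best
```

With `t_cpu = 3` and `t_gpu = 1` for ten pipelines, the search settles on two pipelines for the CPU and eight for the GPU (system time 8), because moving one pipeline in either direction makes the slower side take 9.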

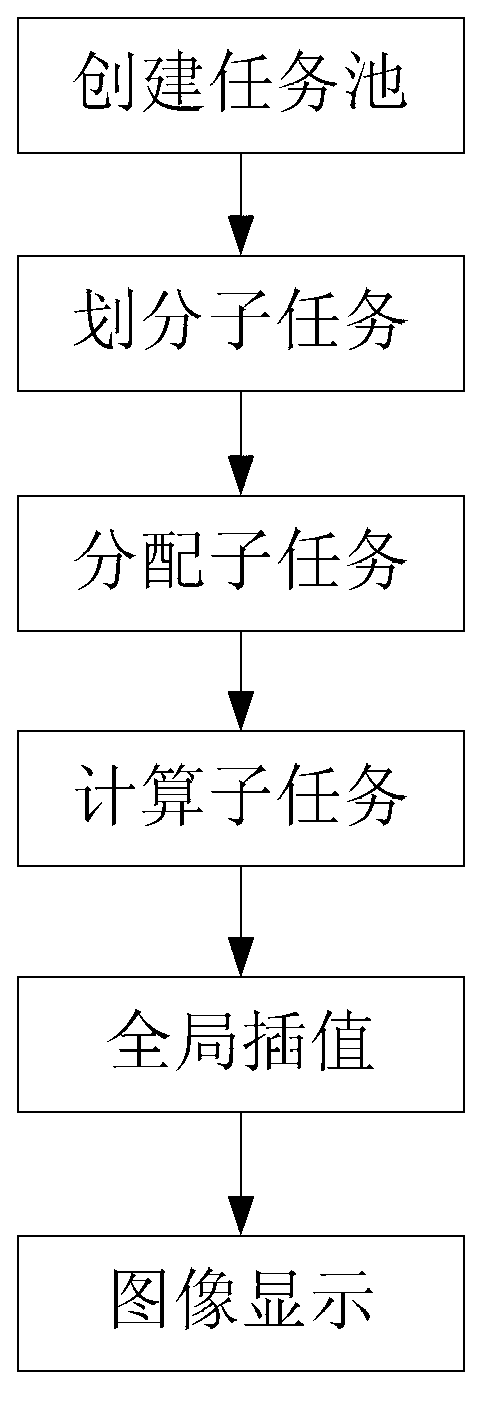

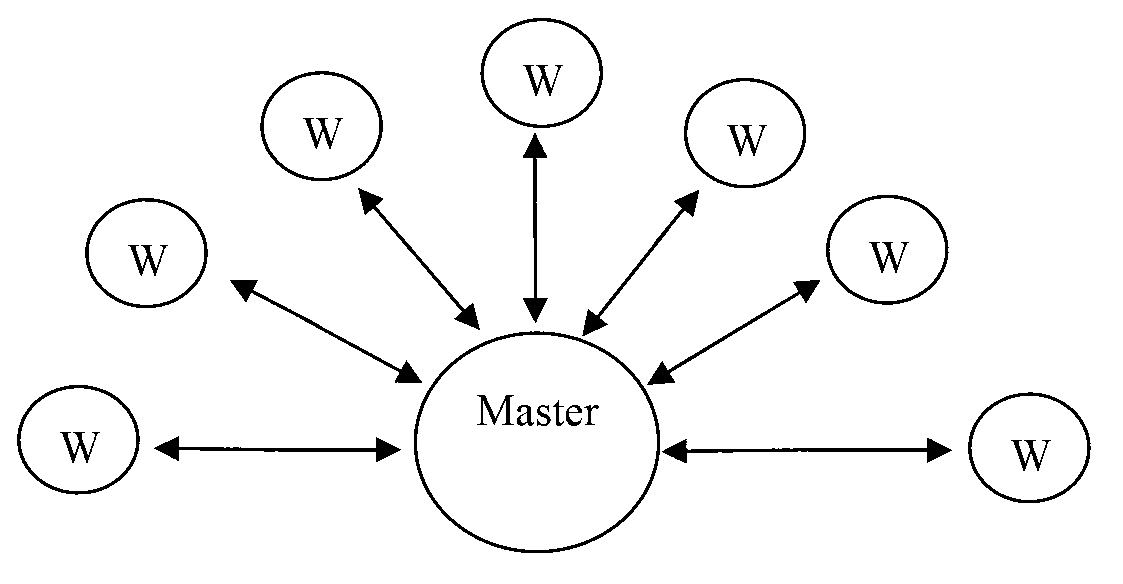

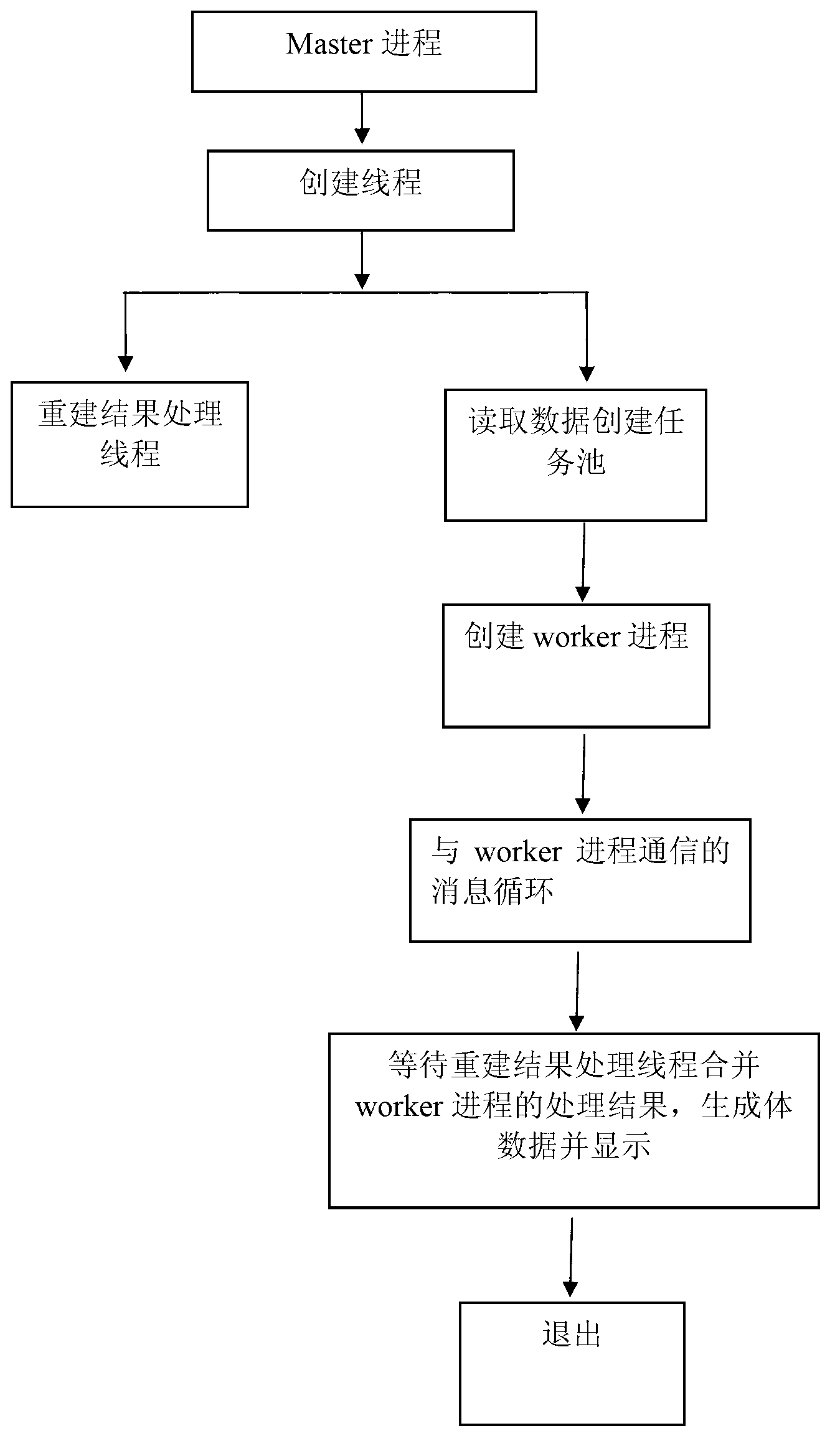

Method for medical ultrasound three-dimensional imaging based on parallel computer

Inactive | CN102835974A | Meet real-time requirements; Fast imaging; Ultrasonic/sonic/infrasonic diagnostics; Infrasonic diagnostics; Ultrasonography; Sonification

The invention discloses a method for medical ultrasound three-dimensional imaging based on parallel computers. The method includes the following steps: S1, task pool creation: reading image data according to user-set parameters and constructing a task pool; S2, partitioning into sub-tasks: using a domain partitioning method to split the acquired ultrasound image sequence into multiple sub-tasks of a defined granularity, each sub-task performing interpolation over S adjacent image frames; S3, sub-task distribution: distributing the sub-tasks to different processors; S4, sub-task computation: the processors execute their sub-tasks cooperatively and in parallel; S5, overall interpolation: performing an overall interpolation over the sub-task results reconstructed by each processor to obtain a three-dimensional image; and S6, image display: displaying the reconstructed three-dimensional image. The method addresses the long computation time of ultrasound three-dimensional imaging, and because the parallel computing environment is built from low-cost computers, the computing resources are fully used at low cost.

Owner:SOUTH CHINA UNIV OF TECH
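
Steps S2 and S3 can be sketched as follows. The one-frame overlap between consecutive sub-tasks is an assumption added here so that the gap between the last frame of one sub-task and the first frame of the next is still interpolated; round-robin assignment is likewise only one possible distribution rule.

```python
def partition_frames(num_frames, s):
    """Split frame indices into sub-tasks of S adjacent frames; consecutive
    sub-tasks share one boundary frame so no inter-frame gap is skipped."""
    if s < 2:
        raise ValueError("each sub-task needs at least two frames to interpolate")
    tasks, start = [], 0
    while start < num_frames - 1:
        end = min(start + s, num_frames)
        tasks.append(range(start, end))
        start = end - 1  # overlap by one frame
    return tasks


def assign(tasks, num_processors):
    """Round-robin distribution of sub-tasks to processors (step S3)."""
    plan = [[] for _ in range(num_processors)]
    for i, task in enumerate(tasks):
        plan[i % num_processors].append(task)
    return plan
```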

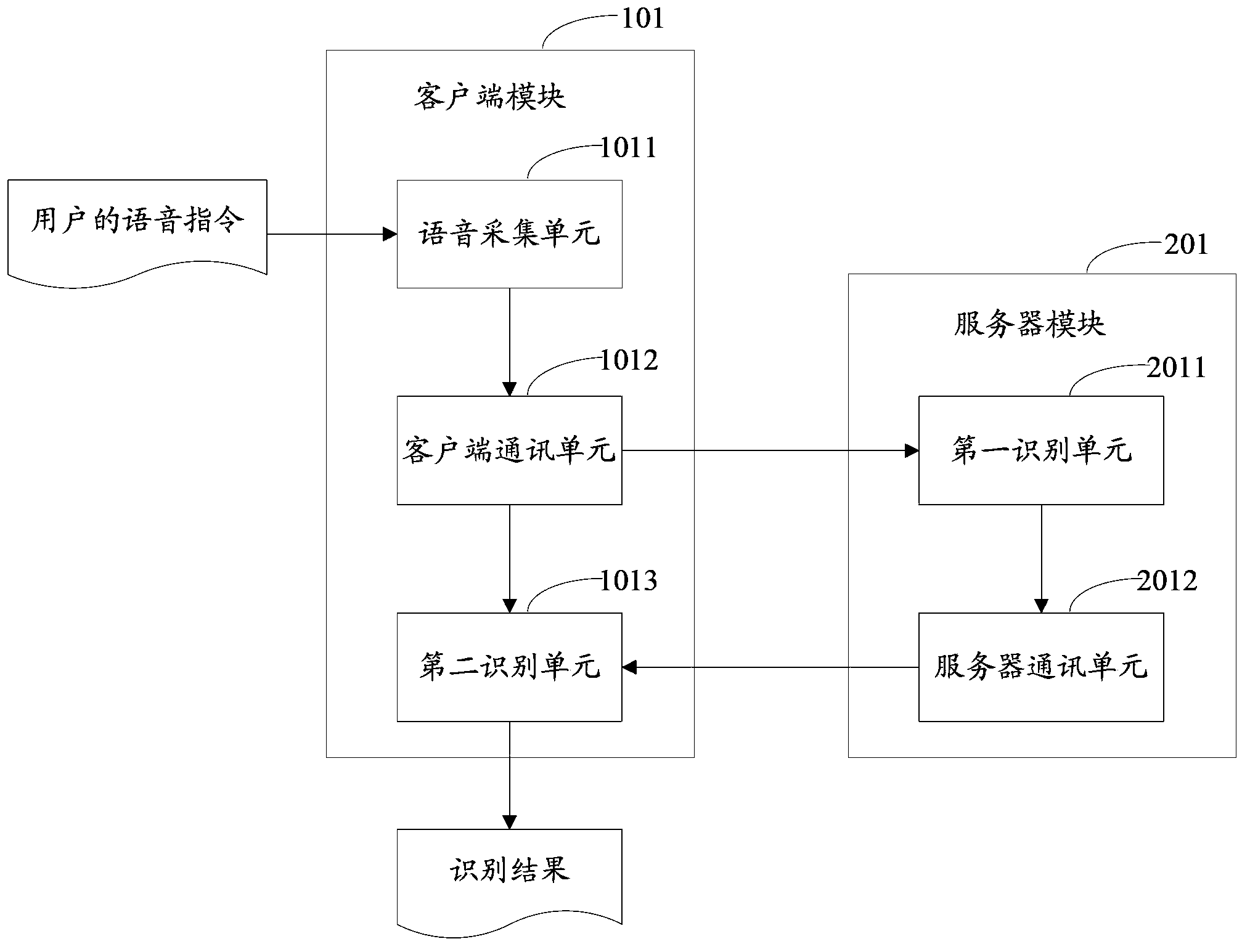

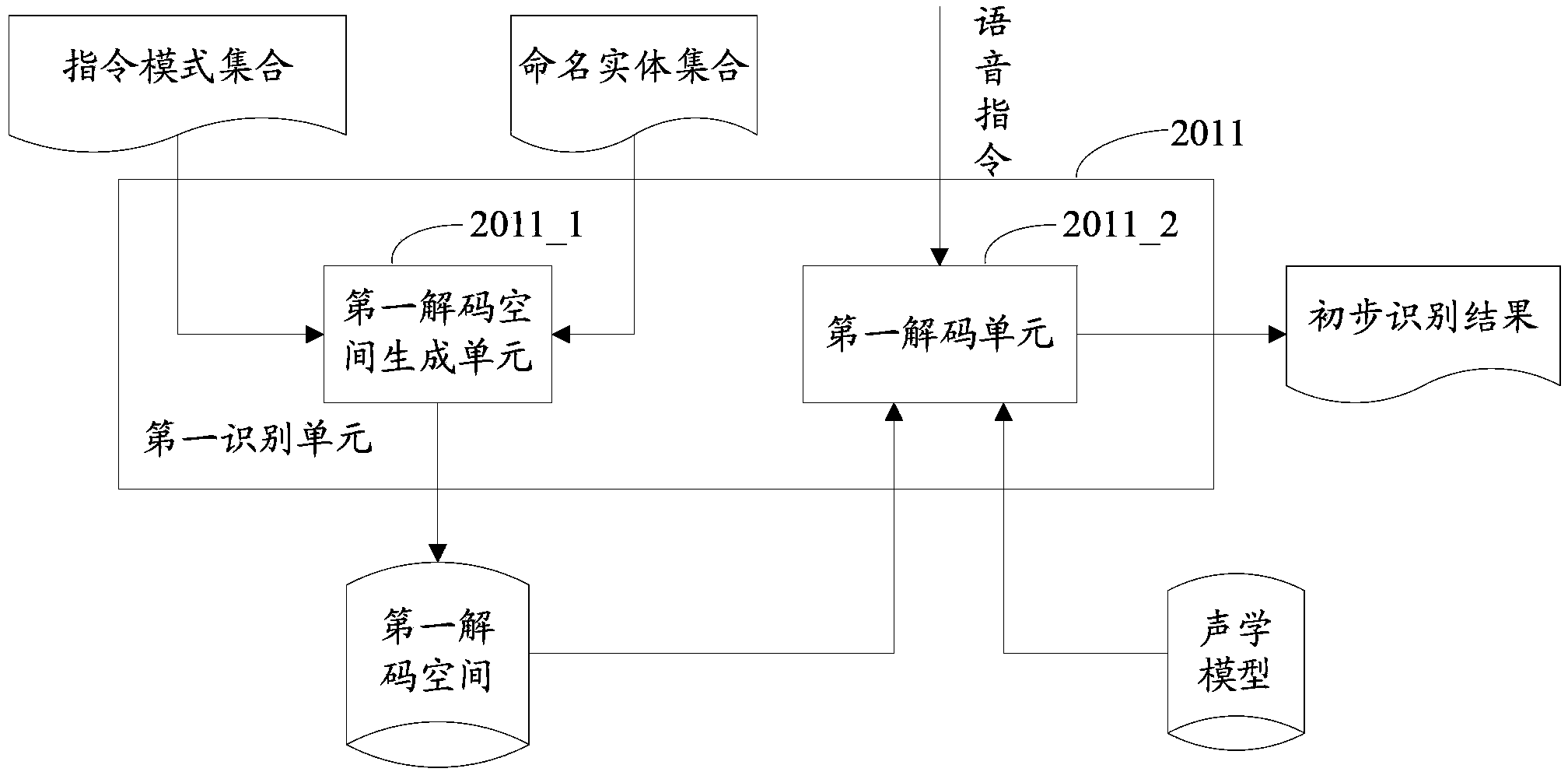

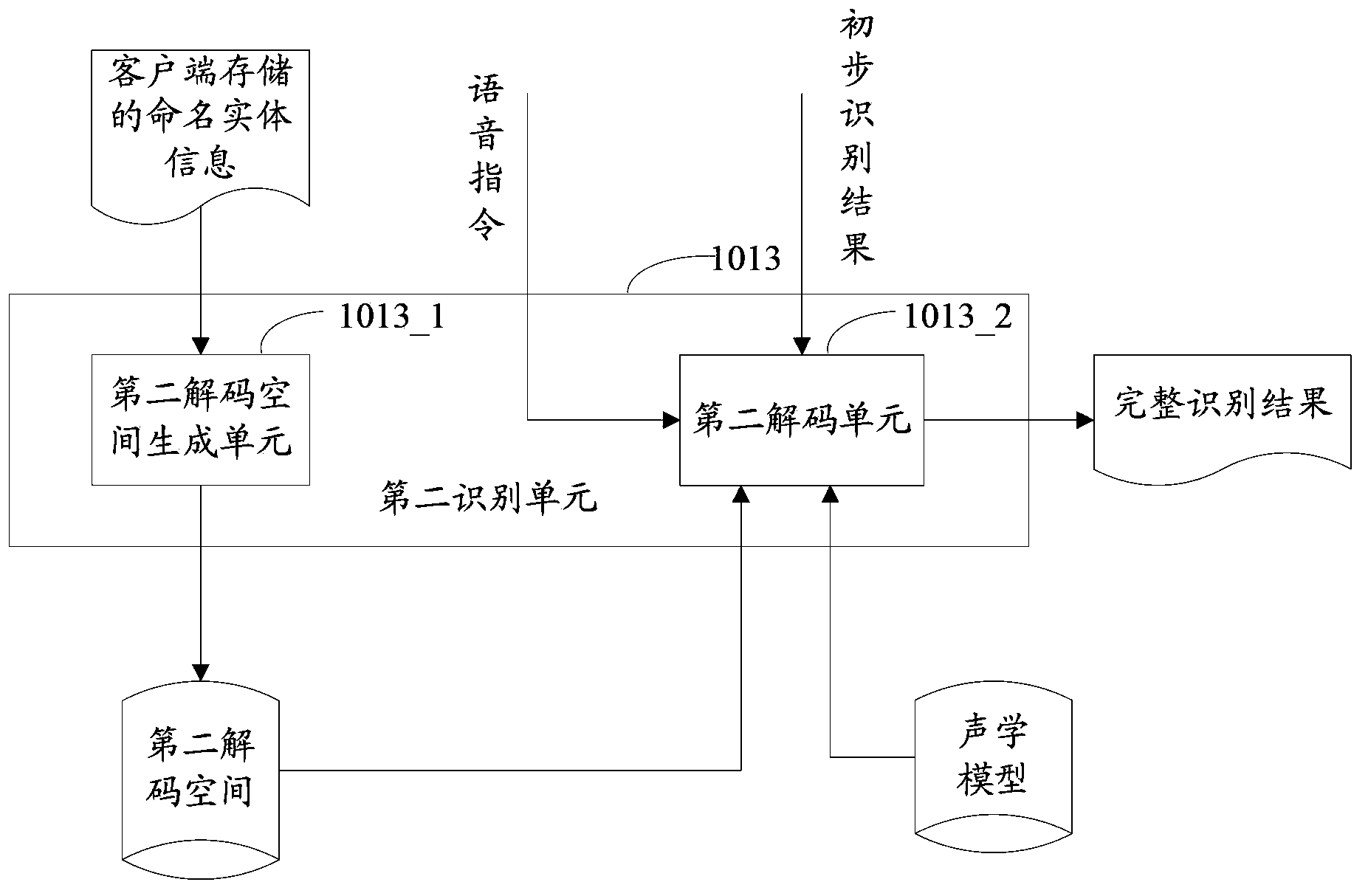

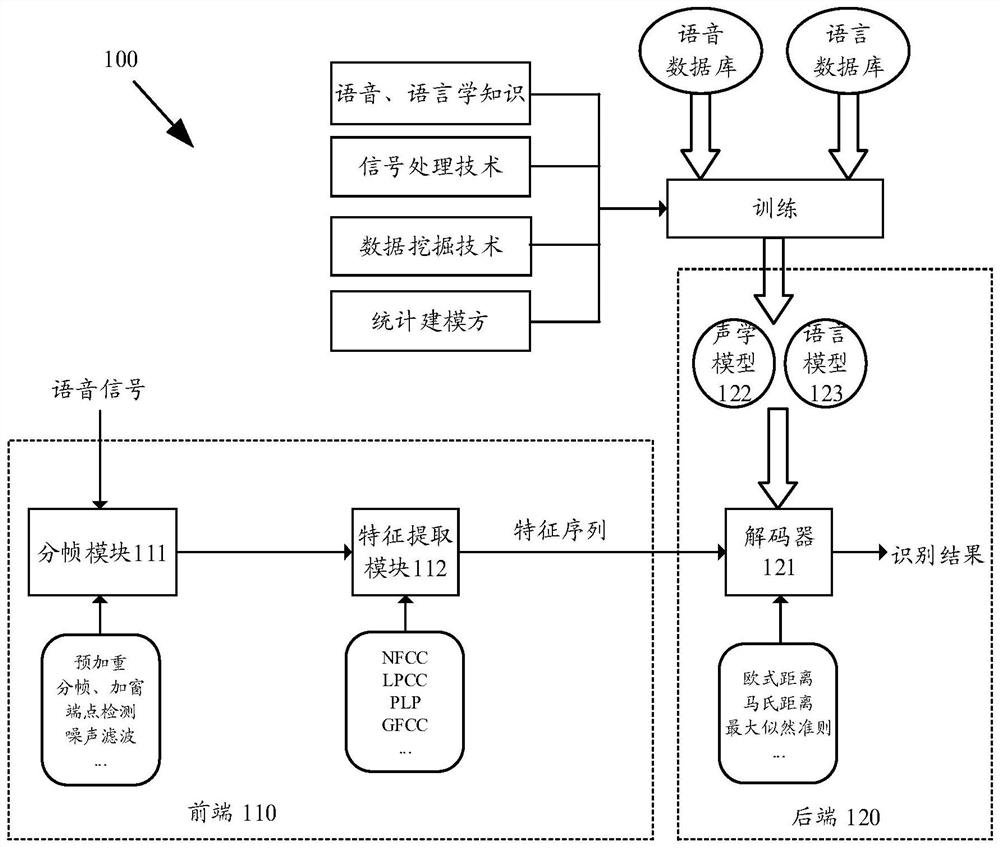

Voice identification method and system

Active | CN103514882A | Make full use of computing resources; High precision; Speech recognition; Speech identification; Client-side

The invention provides a voice identification method and system. The method comprises: A, a client terminal module sends an acquired user voice instruction to a server module; B, the server module performs preliminary recognition of the voice instruction using an instruction template set and a named entity set, and sends the preliminary recognition result, which contains unknown-variable information, to the client terminal module; C, the client terminal module resolves the unknown variables using the named entity information stored locally, obtaining the complete recognition result of the voice instruction. In this way, the computing resources of the server are fully utilized and voice recognition accuracy is improved.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
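
The server/client split in steps B and C can be illustrated with a template whose variable part is left open by the server and filled on the client from locally stored entities. In this hypothetical sketch the voice instruction is assumed to be already transcribed to text, and the regular-expression templates, the `slot` group name and the entity dictionary are all illustrative.

```python
import re


def server_preliminary(text, templates):
    """Server side (step B): match the transcribed instruction against
    instruction templates; the variable part stays unresolved."""
    for pattern, template in templates:
        m = re.fullmatch(pattern, text)
        if m:
            return template, m.group("slot")
    return None


def client_complete(preliminary, local_entities):
    """Client side (step C): resolve the unknown variable against named
    entities stored on the device, e.g. a local contact list."""
    if preliminary is None:
        return None
    template, raw = preliminary
    entity = local_entities.get(raw.strip().lower())
    return None if entity is None else template.format(slot=entity)
```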

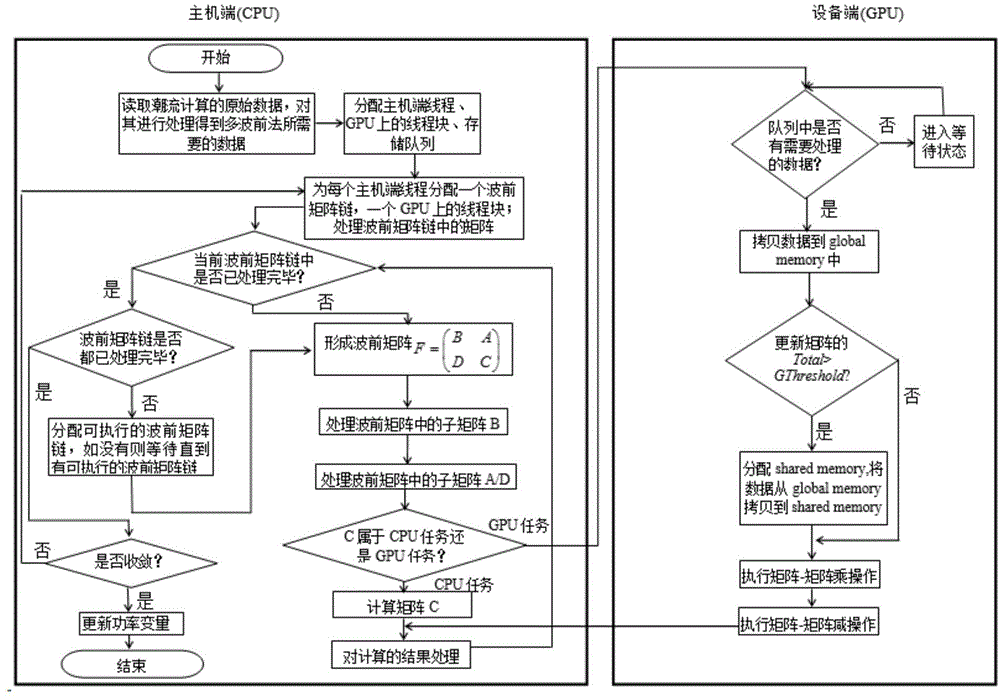

Multi-front load flow calculation method and system based on GPU (graphics processing unit)

Inactive | CN104484234A | Make full use of computing resources; Shorten the time; Resource allocation; Main processing unit; Decomposition

The invention discloses a multi-front load flow calculation method and system based on a GPU (graphics processing unit). The method comprises: 1) executing the wavefront matrix chain asynchronously and concurrently; 2) distributing tasks between the CPU (central processing unit) and the GPU; and 3) optimizing the matrix multiplication and subtraction algorithms on the GPU. Processing the wavefront matrix chain asynchronously and concurrently makes full use of CPU resources and shortens the idle waiting time of the CPU and the GPU as far as possible; choosing the CPU or the GPU for each matrix according to its size minimizes the processing time of each individual matrix; and timely use of shared memory further optimizes program performance. Combining the three measures significantly improves matrix decomposition performance.

Owner:CHINA ELECTRIC POWER RES INST +1

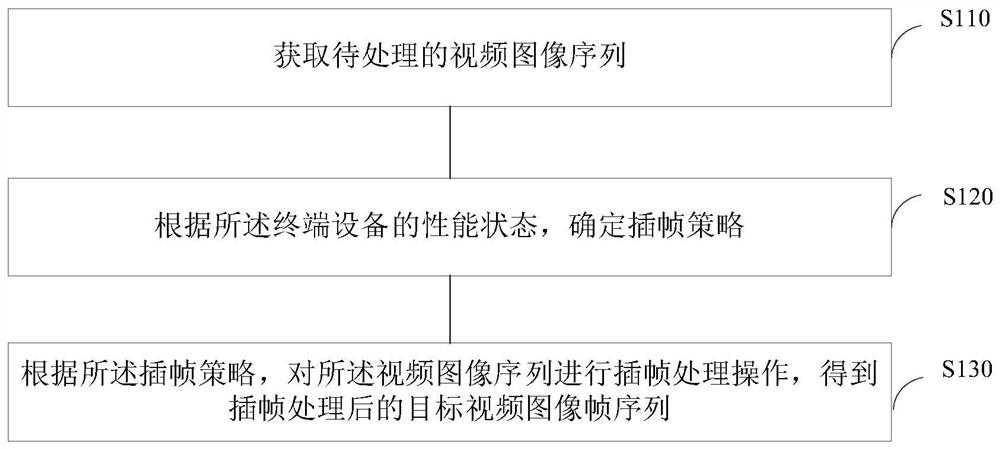

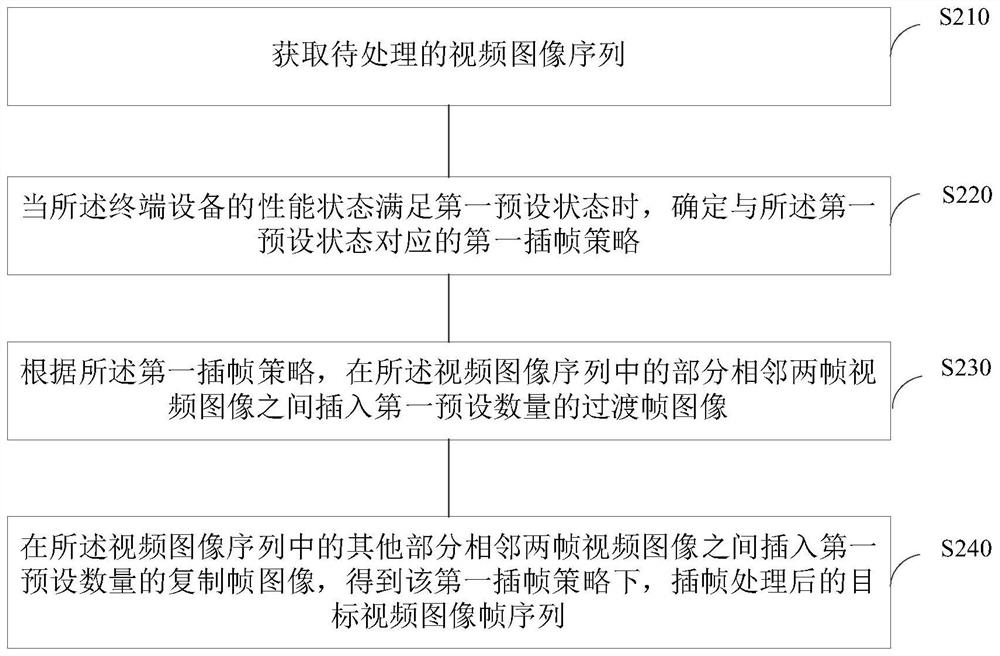

Image processing method and device, terminal equipment and computer readable storage medium

Active | CN112788235A | Make full use of computing resources; Improve efficiency; Television system details; Color television details; Imaging processing; Frame sequence

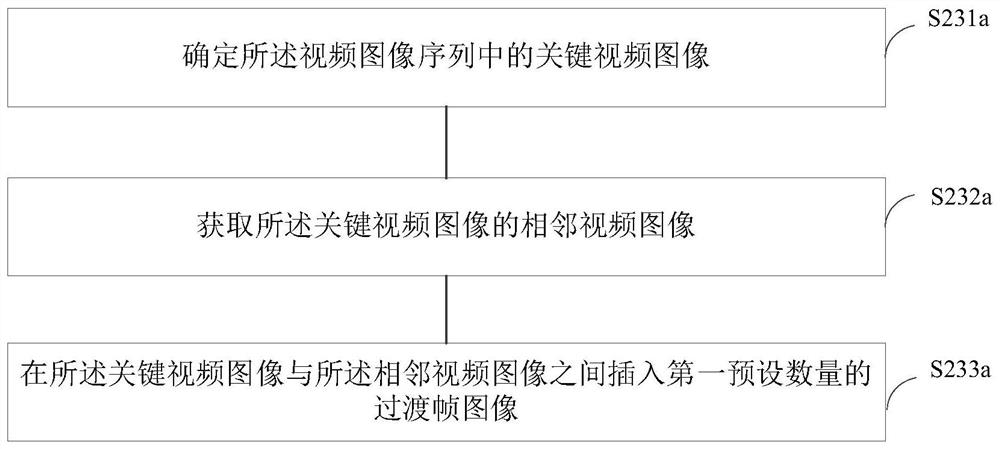

The invention discloses an image processing method and device, terminal equipment and a computer readable storage medium, and relates to the technical field of computer vision. The image processing method is applied to the terminal equipment, and comprises the following steps: acquiring a video image sequence to be processed, the video image sequence comprising multiple frames of video images; determining a frame insertion strategy according to the performance state of the terminal equipment; and according to the frame insertion strategy, performing frame insertion processing operation on the video image sequence to obtain a target video image frame sequence after frame insertion processing. According to the method, video frame insertion can be effectively realized.

Owner:SHENZHEN ZHUIYI TECH CO LTD

Neural network searching method and device and electronic equipment

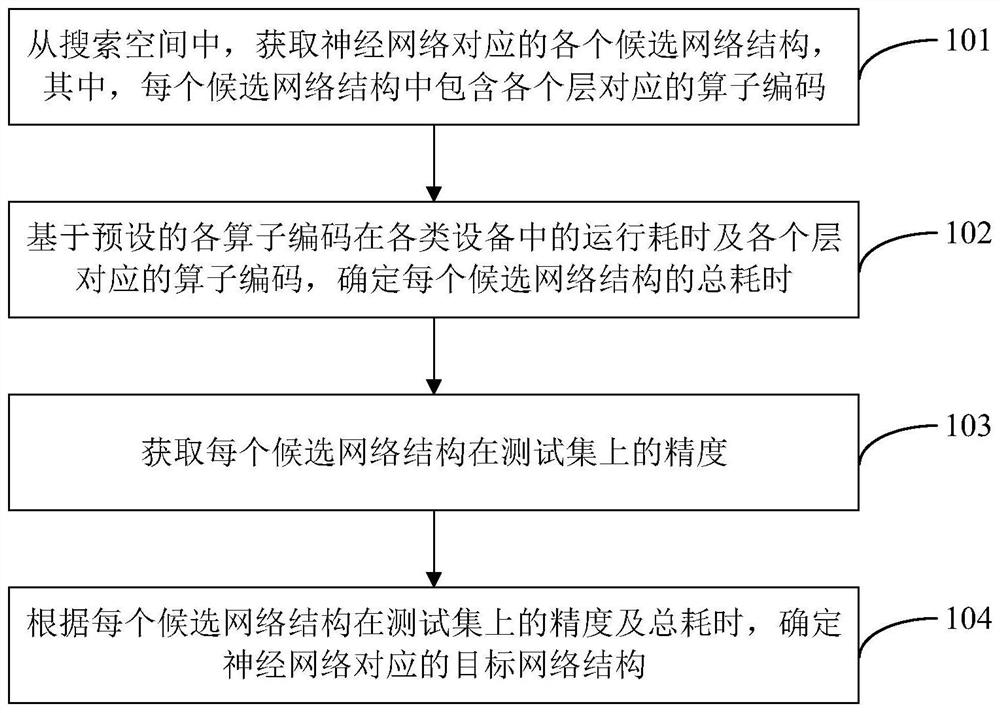

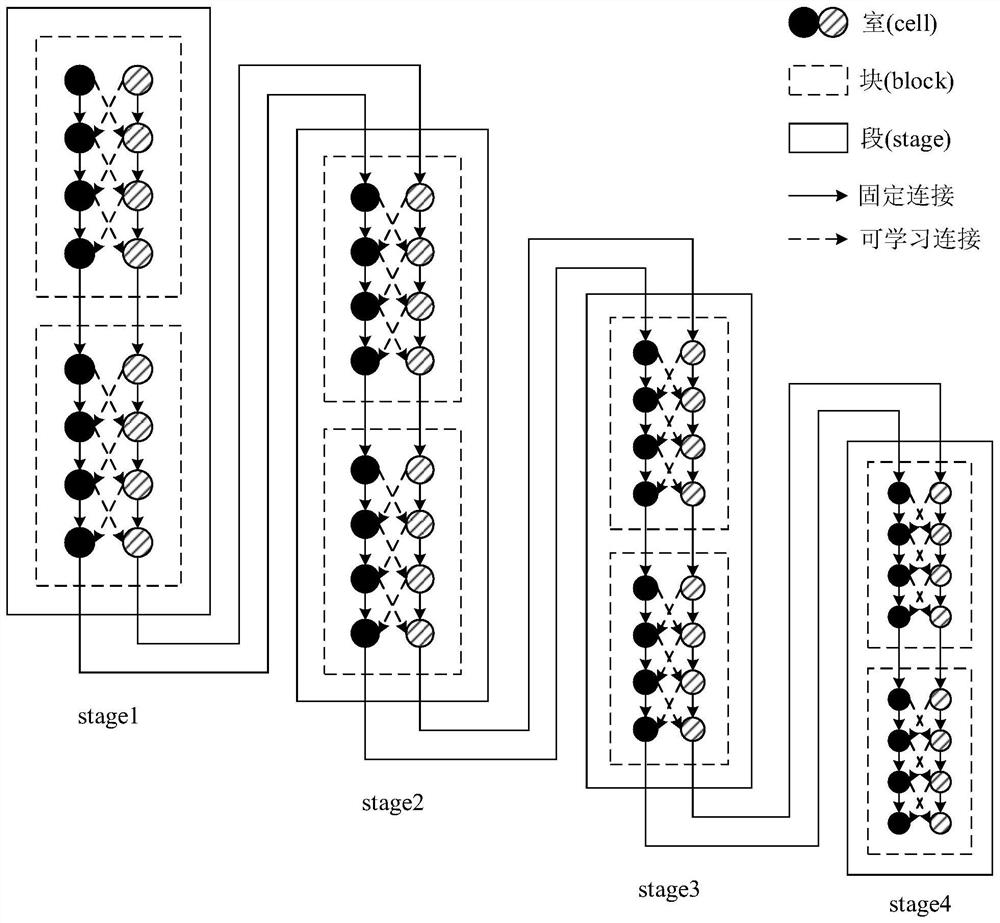

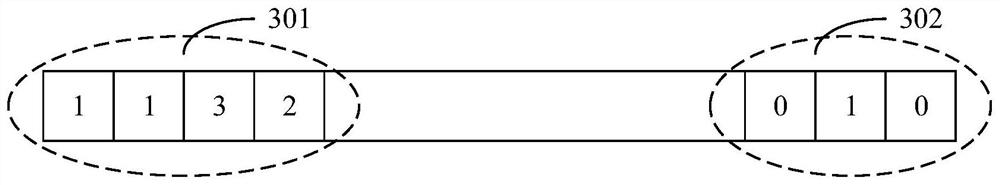

Pending | CN112560985A | Low efficiency; Improve build efficiency; Character and pattern recognition; Neural architectures; Network structure; Engineering

The invention discloses a neural network search method and device and electronic equipment, relating to the field of artificial intelligence, in particular deep learning and computer vision. The method comprises: obtaining all candidate network structures of the neural network from a search space, each candidate structure containing the operator code of every layer; determining the total time consumption of each candidate structure from the preset time consumption of each operator code on each type of device and the operator code of each layer; obtaining the precision of each candidate structure on a test set; and determining the target network structure from each candidate's test-set precision and total time consumption. The method improves the efficiency of constructing neural network structures, makes full use of the computing resources of heterogeneous devices, and improves the computing efficiency and precision of the neural network.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
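
The total-time-consumption step is a table lookup summed over layers. The sketch below assumes a per-device latency table keyed by operator code and, since the abstract does not say how precision and time are combined, picks the most precise candidate whose total time fits a latency budget; the table values and the `budget_ms` rule are hypothetical.

```python
def total_latency(arch, latency_table, device):
    """Sum the preset per-operator time of every layer on one device type."""
    return sum(latency_table[device][op] for op in arch)


def select_structure(candidates, precision, latency_table, device, budget_ms):
    """Hypothetical selection rule: highest test-set precision among the
    candidates whose total time on `device` fits within `budget_ms`."""
    feasible = [c for c in candidates
                if total_latency(c, latency_table, device) <= budget_ms]
    if not feasible:
        return None
    return max(feasible, key=lambda c: precision[c])
```

Keeping one latency column per device type is what lets the same candidate be judged differently for, say, a GPU and a CPU target.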

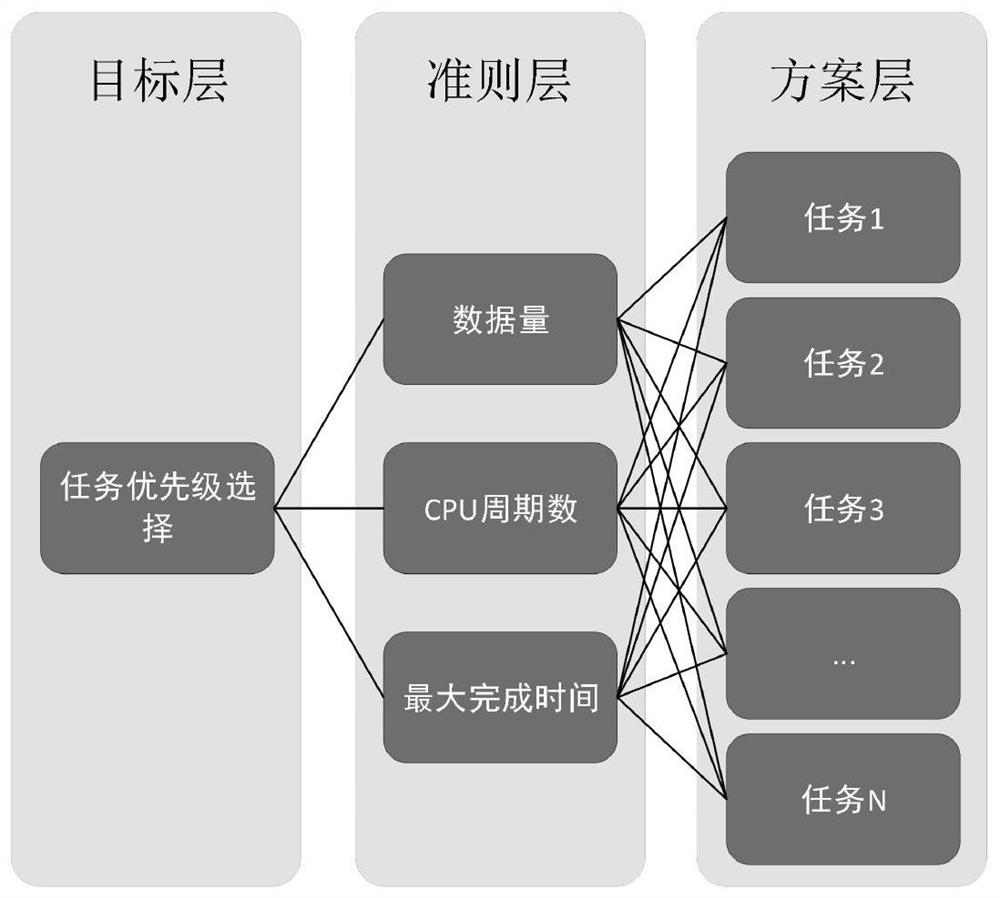

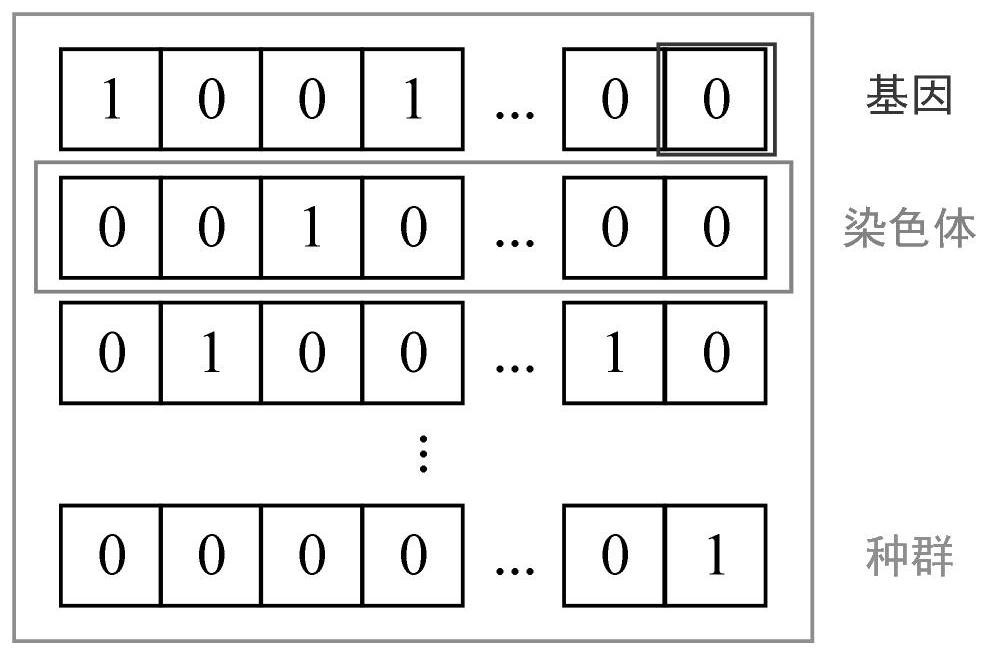

Mobile edge computing unloading time delay optimization method and device and storage medium

Pending | CN114599096A | Avoid congestion; Avoid data accumulation; High level techniques; Wireless communication; Communications system; Edge server

The invention discloses a mobile edge computing offloading delay optimization method and device and a storage medium. The method comprises: evaluating the task delay response priority of each user in a communication system with an analytic hierarchy process (AHP) model to obtain priority weights; constructing a task processing time model and calculating the task processing time; establishing an optimization problem model from the priority weights and the task processing time; and iteratively solving this model for the global optimum, which yields the optimal combination of channel selection coefficient and offloading coefficient for each user's task. The computing resources of heterogeneous edge servers are fully utilized, and the system delay is effectively reduced.

Owner:NANJING UNIV OF POSTS & TELECOMM
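
The abstract names an analytic hierarchy model for the priority weights. A common way to derive AHP weights from a pairwise comparison matrix is the normalized row geometric mean, checked with Saaty's consistency ratio; the sketch below shows that generic calculation. Which criteria the patent compares and how the weights enter its optimization target are not stated, so any example matrix is illustrative.

```python
import math

# Saaty's random consistency index for matrices of order n.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_weights(matrix):
    """Return (weights, consistency_ratio) for a positive reciprocal
    pairwise comparison matrix, using the row geometric-mean method."""
    n = len(matrix)
    geo = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo)
    weights = [g / total for g in geo]
    # Estimate the principal eigenvalue from (A w)_i / w_i.
    aw = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / weights[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1) if n > 1 else 0.0
    ri = RANDOM_INDEX.get(n, 1.49)
    return weights, (ci / ri if ri else 0.0)
```

A consistency ratio below about 0.1 is the usual rule of thumb for accepting the comparison matrix.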

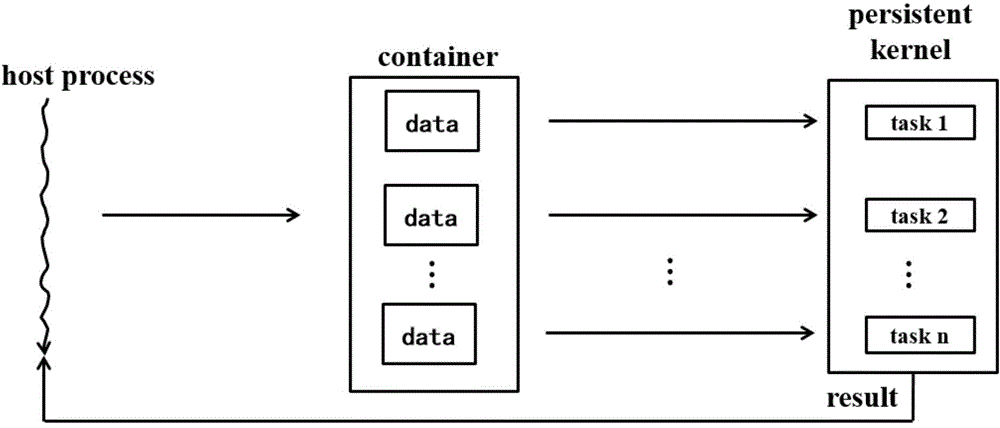

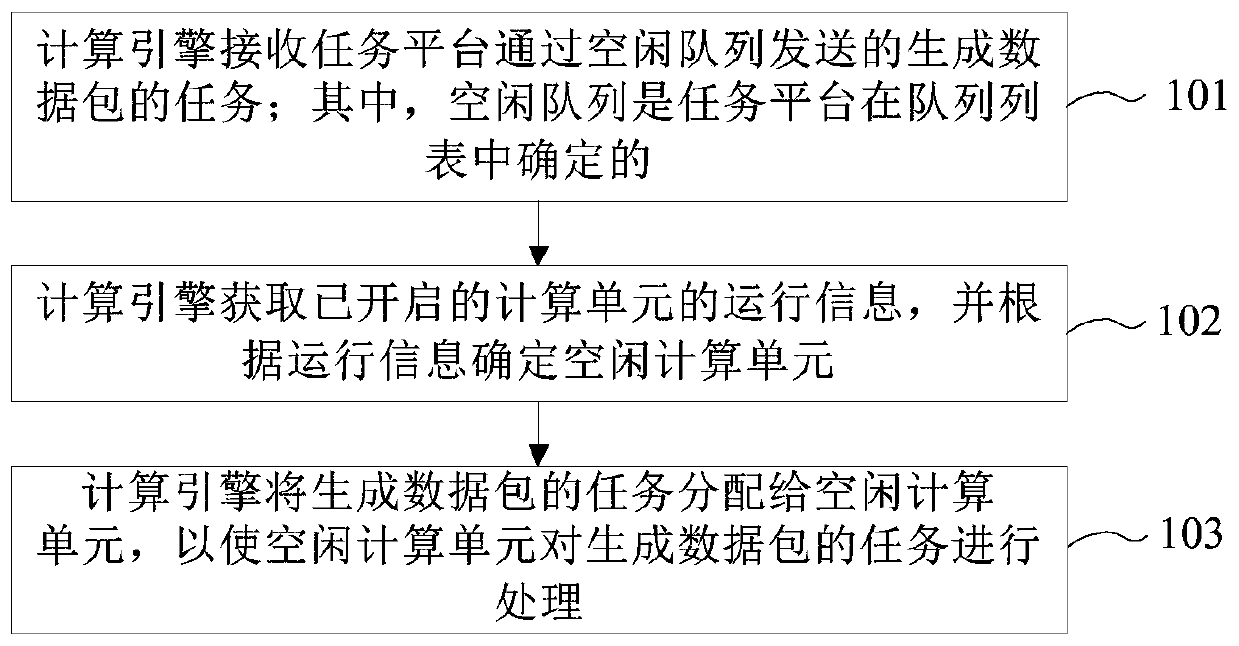

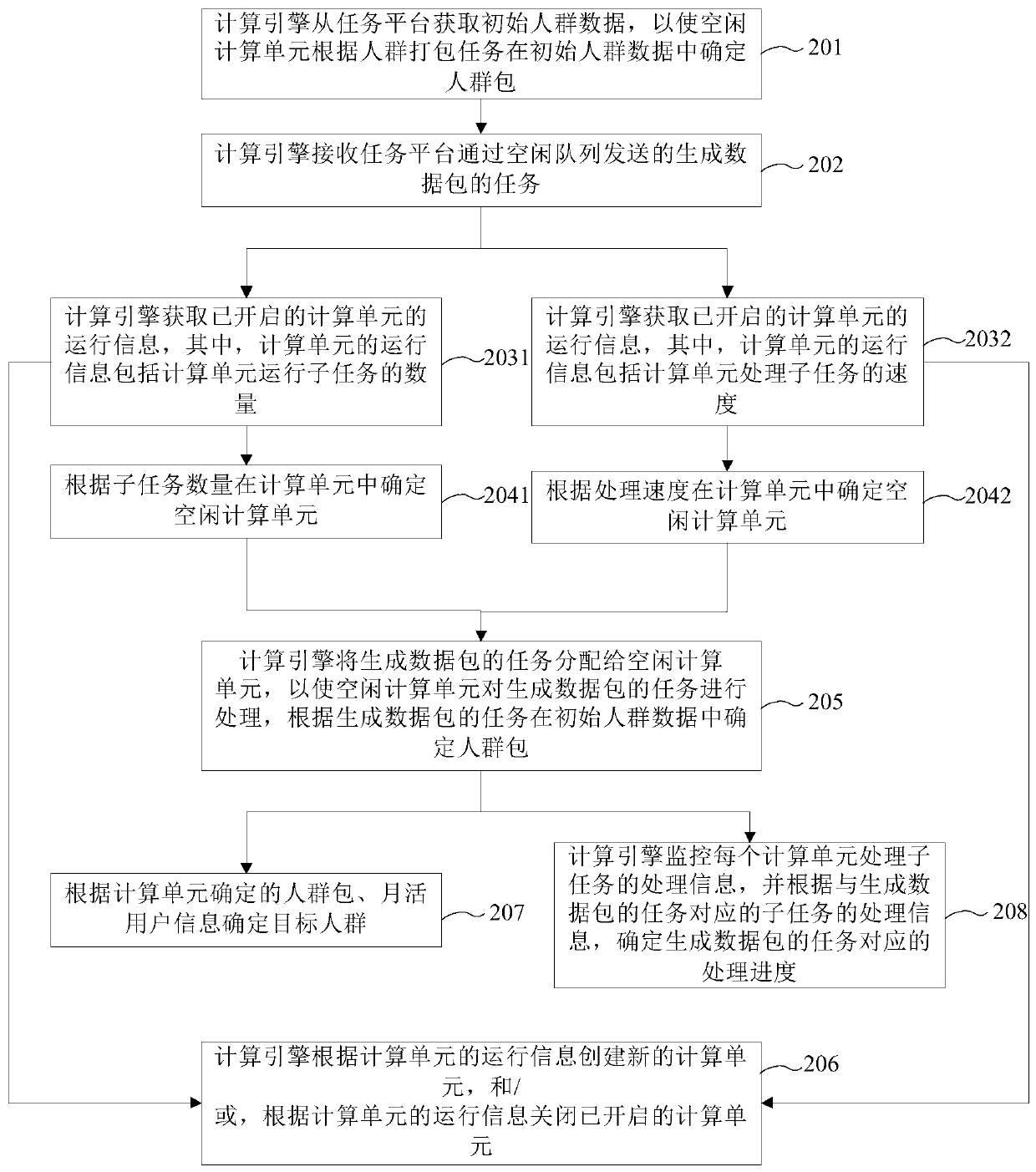

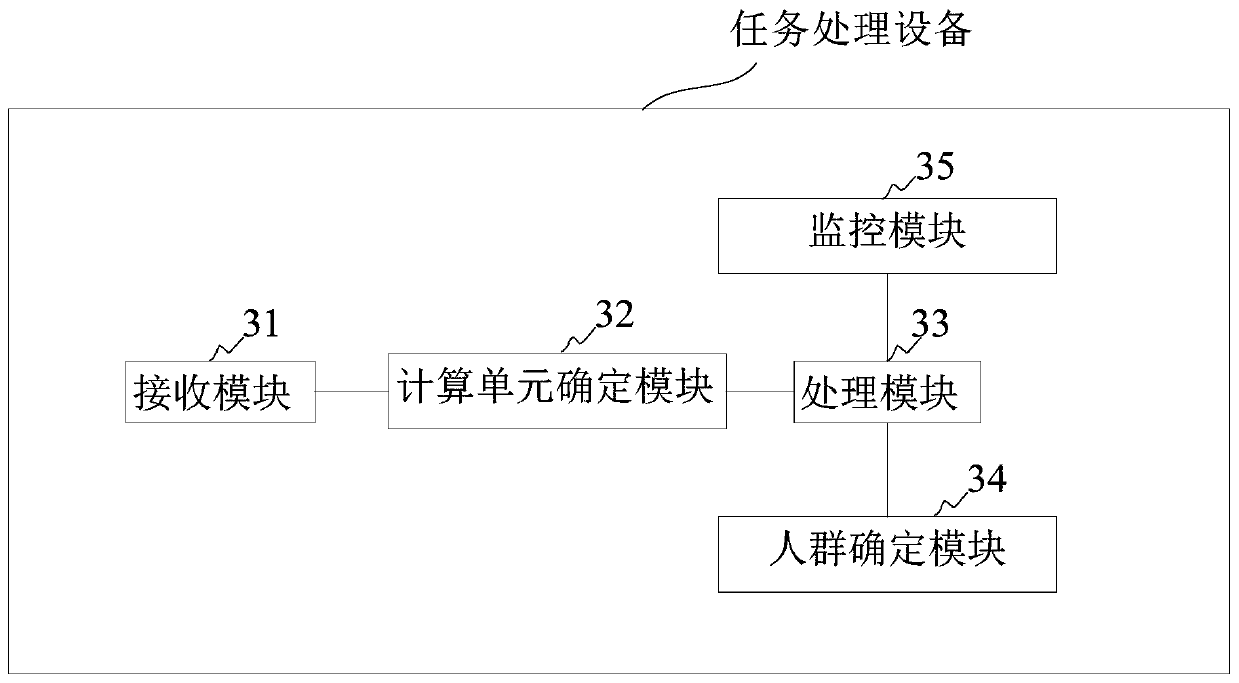

Task processing method and device and computer readable storage medium

Inactive | CN110764892A | Make full use of computing resources; Program initiation/switching; Resource allocation; Engineering; Real-time computing

The embodiments of the invention provide a task processing method and device and a computer readable storage medium. In the method, a computing engine receives, through an idle queue, a task for generating a data packet sent by a task platform, the idle queue having been selected by the task platform from a queue list; the computing engine obtains operation information of its started computing units and identifies idle computing units from that information; and the computing engine allocates the packet-generation task to an idle computing unit, which then processes it. Because the task platform sends packet-generation tasks through idle queues and the computing engine assigns them to idle computing units, tasks are scheduled sensibly and the computing resources of the computing engine are fully utilized.

Owner:BEIJING BYTEDANCE NETWORK TECH CO LTD
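
The two-level dispatch (the task platform picks an idle queue, the computing engine picks idle computing units) can be modeled with a few classes. This is a hypothetical sketch: treating the shortest queue as the "idle queue" and reducing each unit's operation information to a busy flag are assumptions made for illustration.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ComputeUnit:
    name: str
    busy: bool = False
    tasks: list = field(default_factory=list)


@dataclass
class ComputeEngine:
    units: list
    queue: deque = field(default_factory=deque)

    def idle_units(self):
        # Operation information is reduced to a busy flag in this sketch.
        return [u for u in self.units if not u.busy]

    def drain(self):
        """Hand queued packet-generation tasks to idle units; tasks left over
        stay queued until a unit frees up."""
        assigned = []
        for unit in self.idle_units():
            if not self.queue:
                break
            task = self.queue.popleft()
            unit.busy = True
            unit.tasks.append(task)
            assigned.append((task, unit.name))
        return assigned


def submit(engines, task):
    """Task platform: send the task through the shortest ('idle') queue."""
    engine = min(engines, key=lambda e: len(e.queue))
    engine.queue.append(task)
    return engine
```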

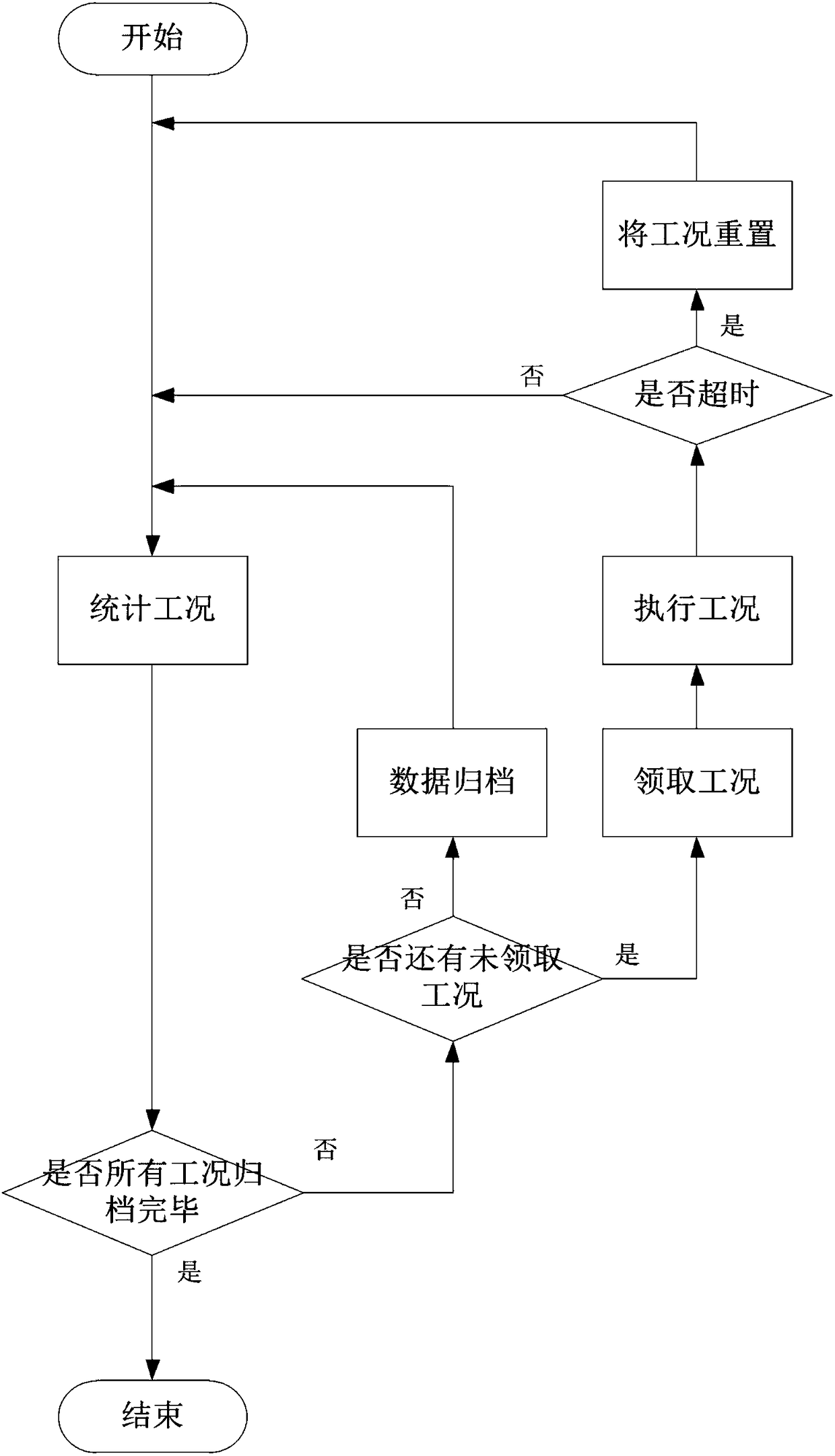

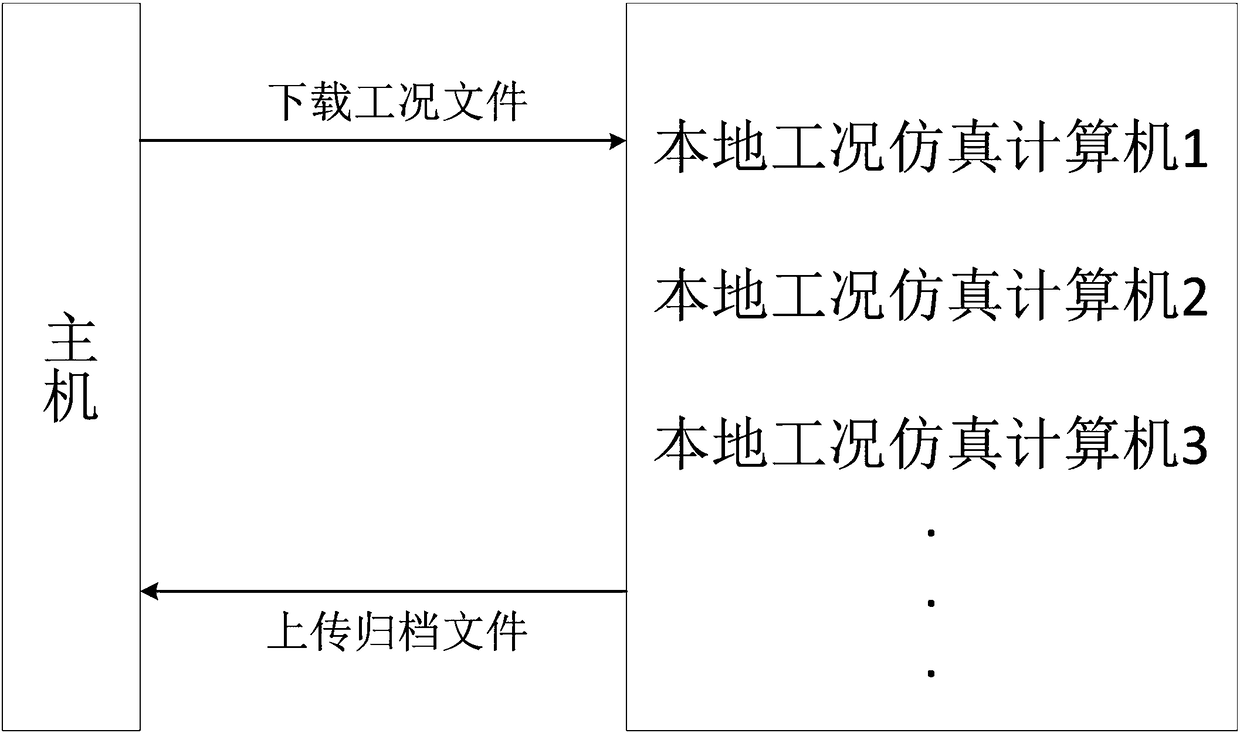

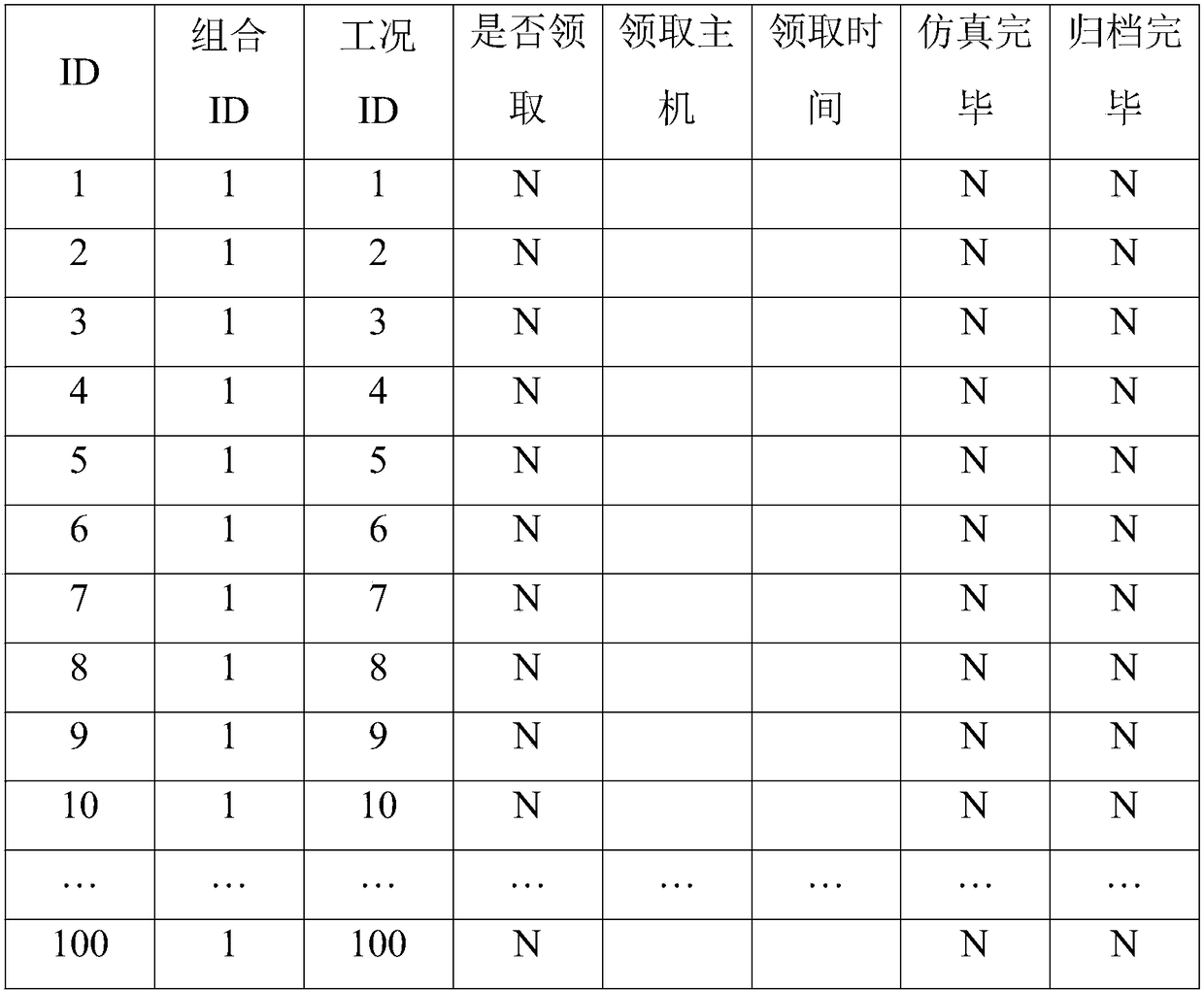

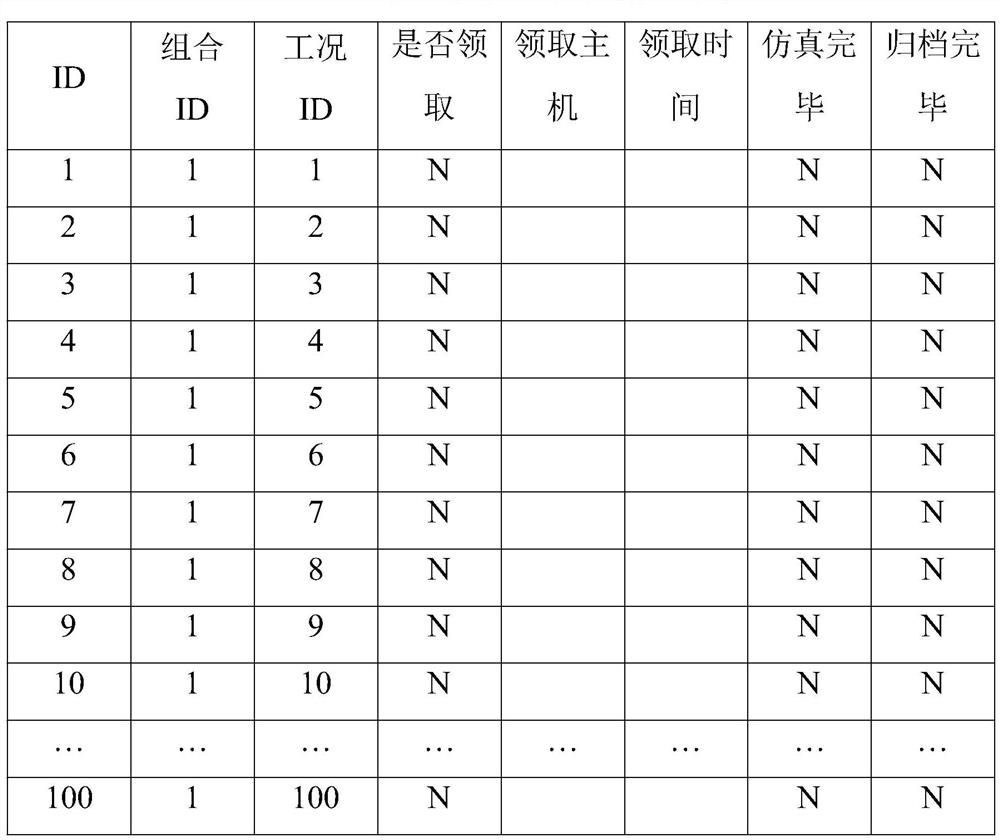

Artificial intelligence processing method and system of digital aircraft working condition set batch simulation

Active | CN108334675A | Reduce workload; Reduced simulation time; Design optimisation/simulation; File system administration; Parallel simulation; Workload

The invention discloses an artificial intelligence processing method and system for batch simulation of digital aircraft working condition sets. The method comprises: accessing a digital aircraft simulation working condition set and storing its working condition information in a batch processing task database; receiving at least one piece of working condition information from the batch processing task database and recording its reception status; downloading the configuration files corresponding to the received working condition information and starting digital aircraft simulation programs according to those files to perform digital simulation; and archiving all simulation data generated by the digital simulation in a batch processing archive database. The method achieves artificial intelligence processing of batch simulation of digital aircraft working condition sets: simulation and data archiving for large numbers of working conditions are performed automatically, reducing manual workload, and when many working conditions must be processed, simulation can run in parallel on multiple hosts, making full use of computing resources and saving simulation time.

Owner:BEIHANG UNIV

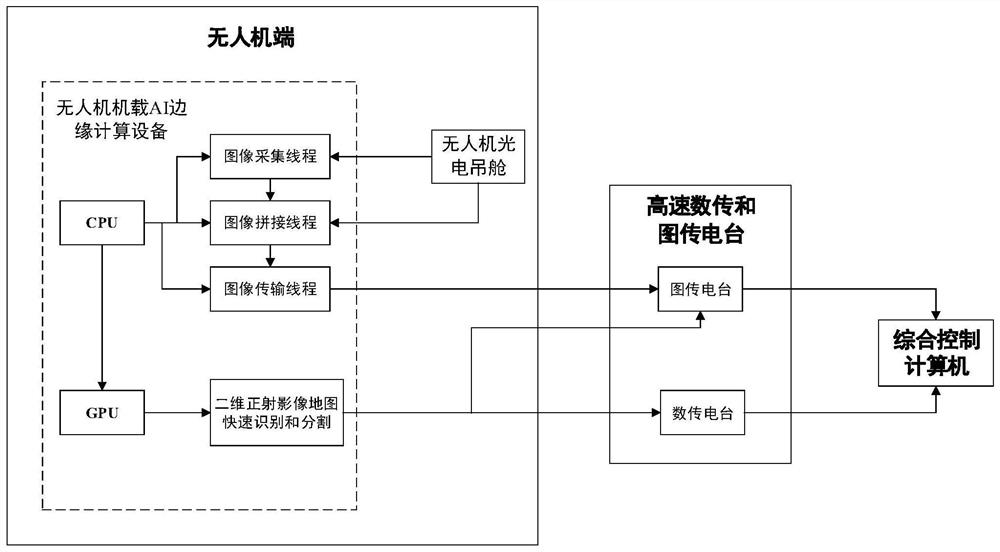

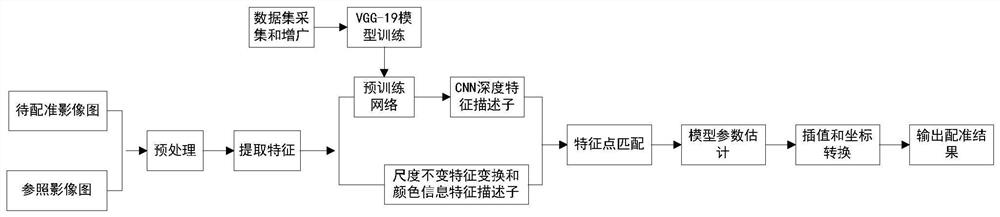

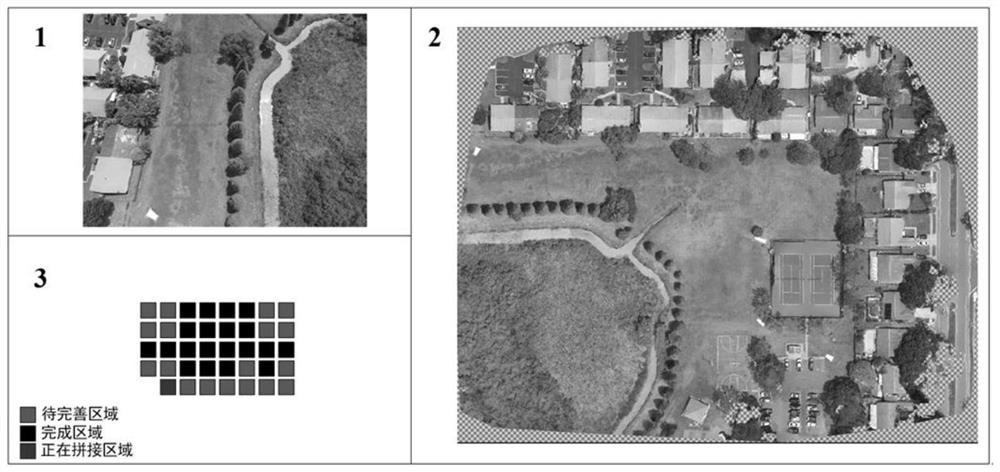

Multi-thread system for constructing orthoimage semantic map in real time

Pending | CN112991487A | Improve real-time performance; Improve timeliness; Drawing from basic elements; Image analysis; Edge computing; Uncrewed vehicle

The invention discloses a system for multithreading real-time construction of an orthoimage semantic map. The system comprises an unmanned aerial vehicle photoelectric pod, an AI edge computing device, a radio station and a comprehensive control computer. The unmanned aerial vehicle photoelectric pod is used for collecting aerial images of the ground terrain; the AI edge computing device is used for constructing a two-dimensional orthoimage map according to the aerial image and identifying and segmenting environmental elements; the radio station is used for transmitting the segmentation results of the two-dimensional orthoimage map and the environmental elements to the comprehensive control computer; and the comprehensive control computer is used for carrying out color separation marking on the two-dimensional orthoimage map according to the segmentation result to form a two-dimensional orthoimage semantic map. According to the multi-thread system for constructing the orthoimage semantic map in real time, the AI edge computing device is constructed on the unmanned aerial vehicle, and construction of the two-dimensional orthoimage map is transferred to the AI edge computing device from the ground workstation, so that the real-time performance and timeliness of image splicing are higher.

Owner:Automation Research Institute Co., Ltd. of China South Industries Group Corporation (中国兵器装备集团自动化研究所有限公司)

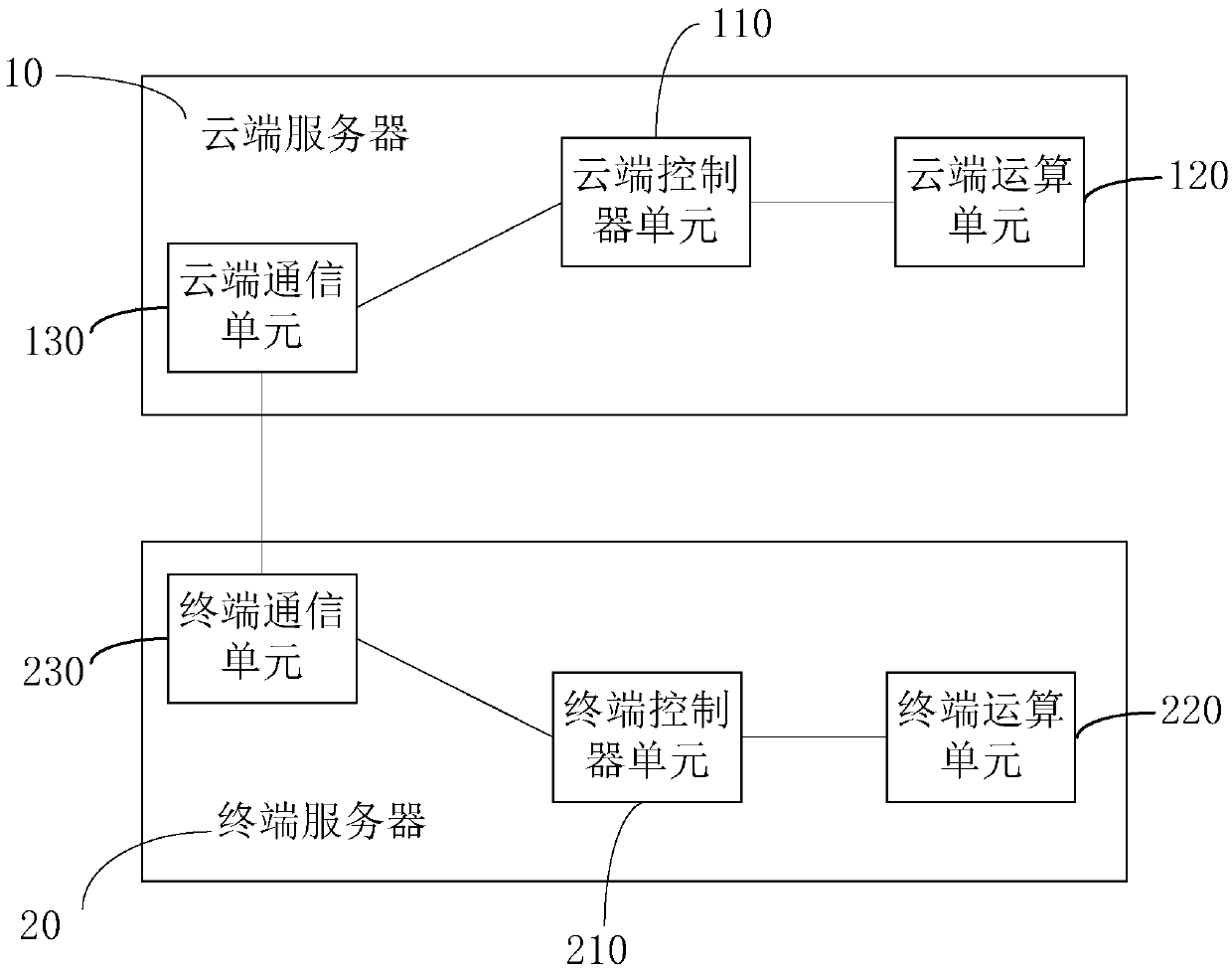

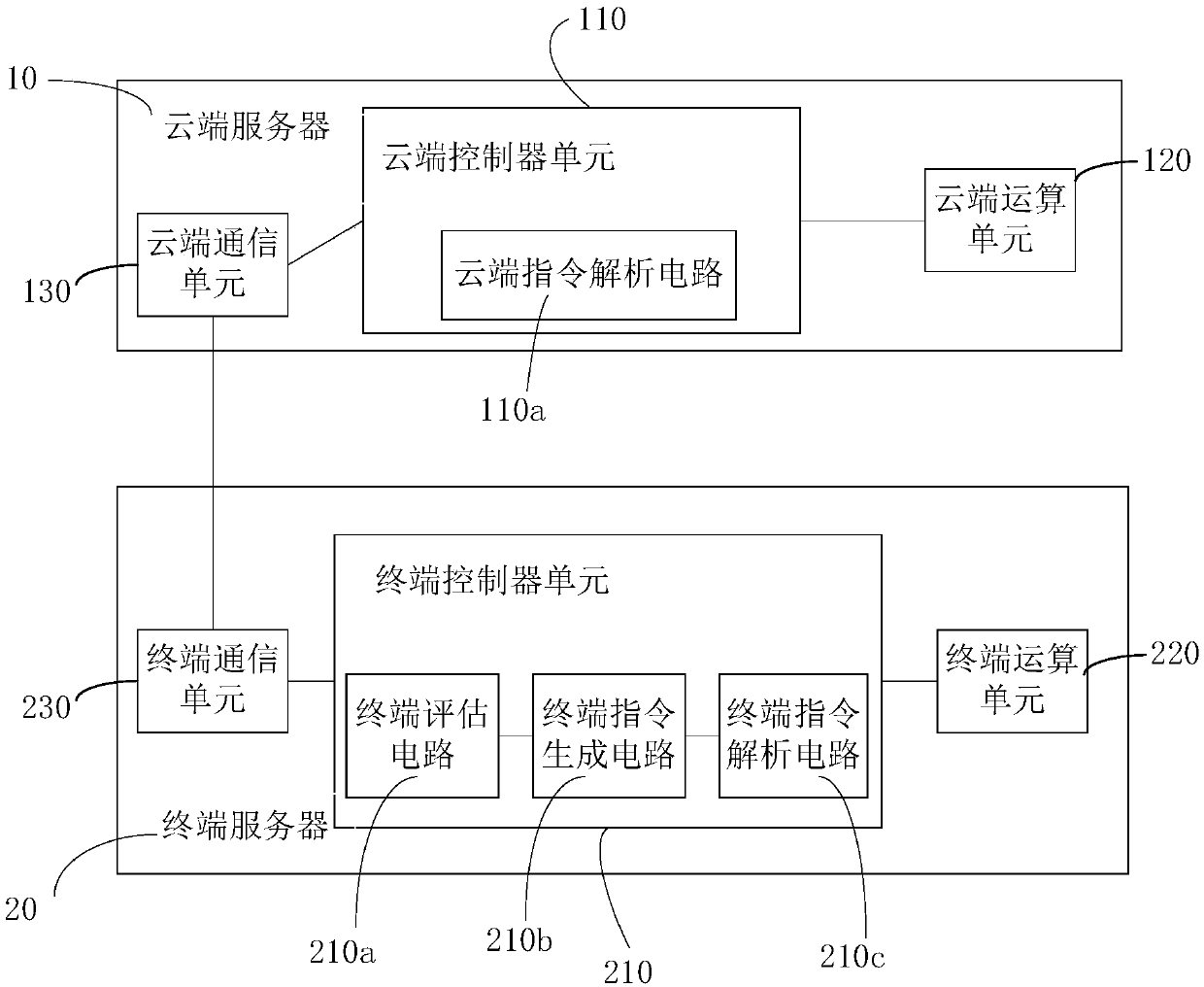

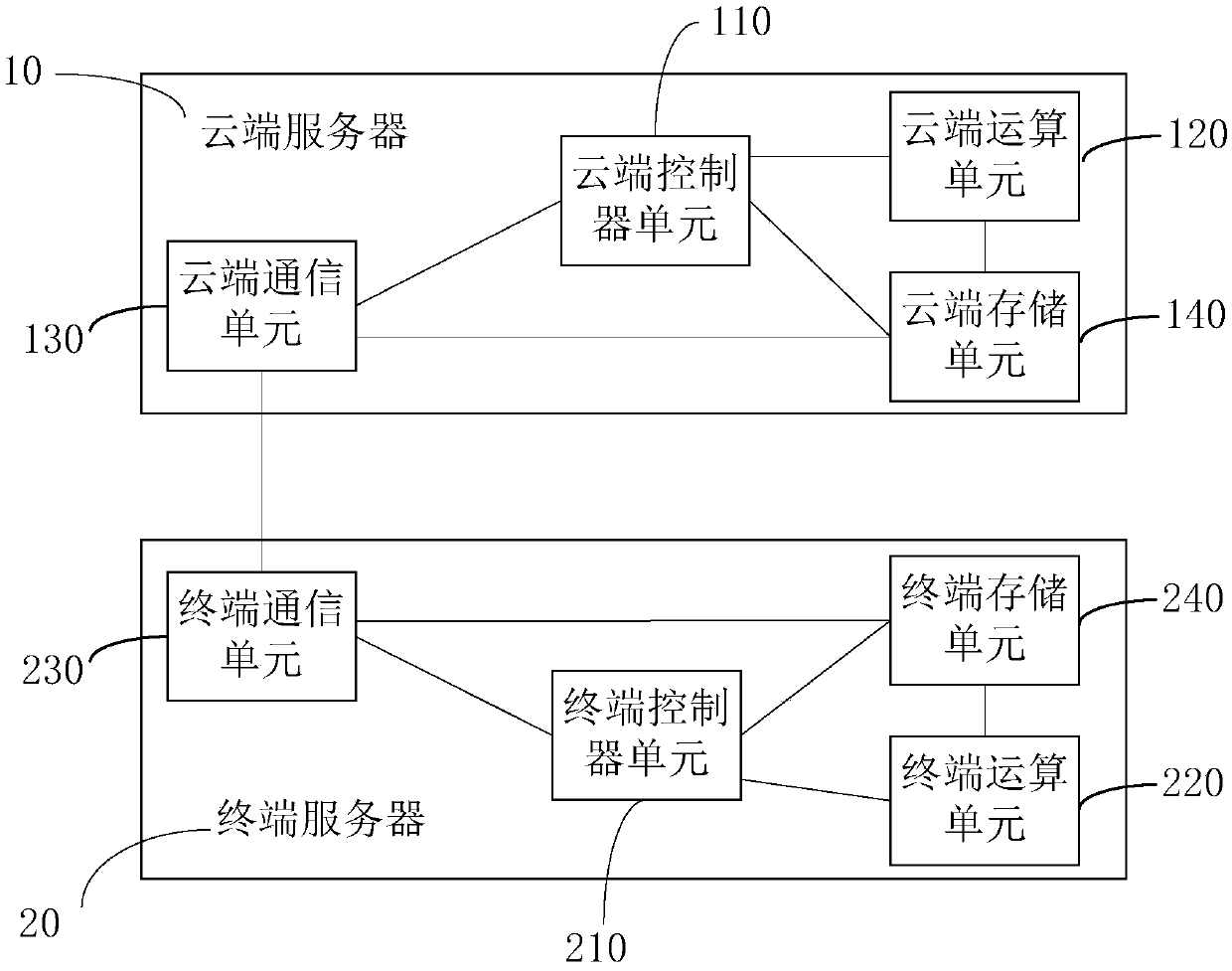

Machine learning operation distribution system and method

Active | CN111047045A | Improve accuracy; Avoid waiting; Machine learning; Machine execution arrangements; Terminal server; Distribution system

The invention relates to a distribution system for machine learning operations. According to a control instruction of the terminal server, the terminal server computes an operation task with a first machine learning algorithm of lower computing capability and obtains a lower-accuracy result. According to a control instruction of the cloud server, the cloud server computes the same operation task with a second machine learning algorithm of higher computing capability and obtains a higher-accuracy result. Different machine learning algorithms are thus used flexibly, according to user requirements, to execute the same operation task, so the user obtains both a lower-accuracy and a higher-accuracy result. Moreover, since the terminal side uses the lower-capability algorithm, its result can be output first, so the user does not need to wait long and processing efficiency is improved.

Owner:CAMBRICON TECH CO LTD

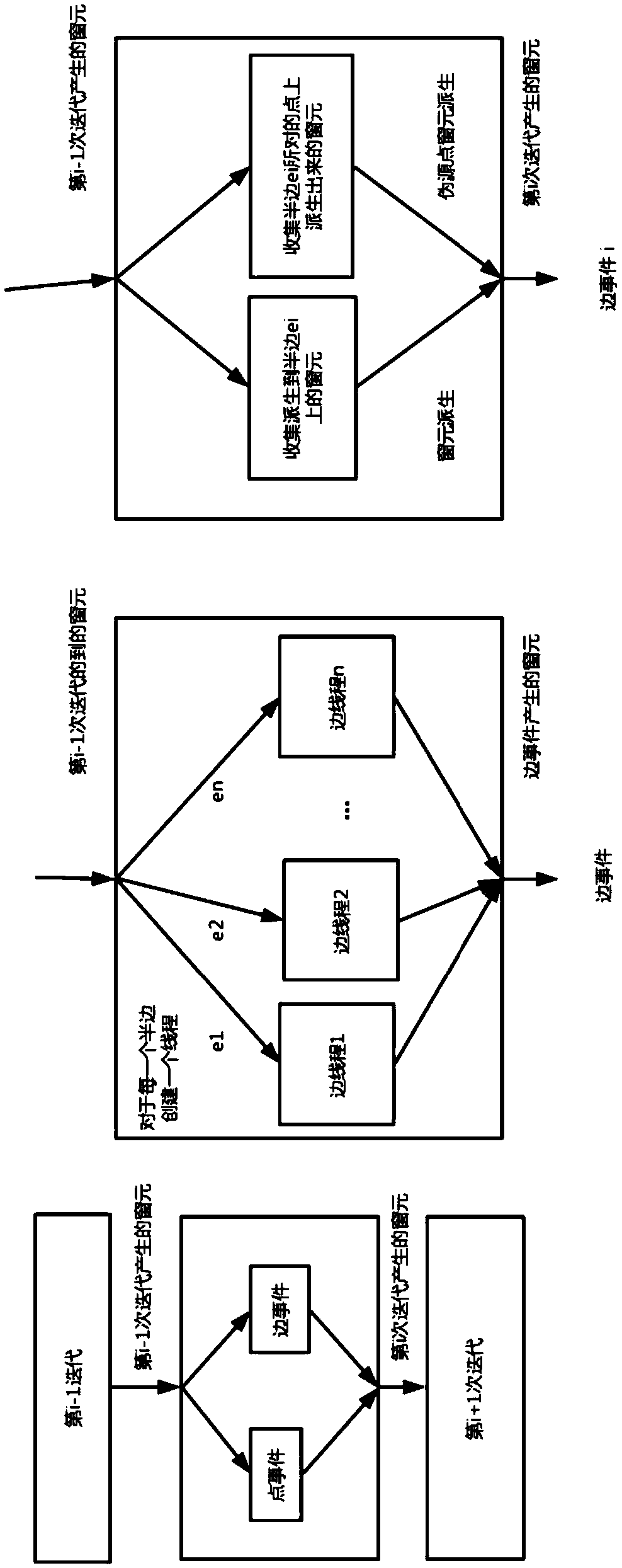

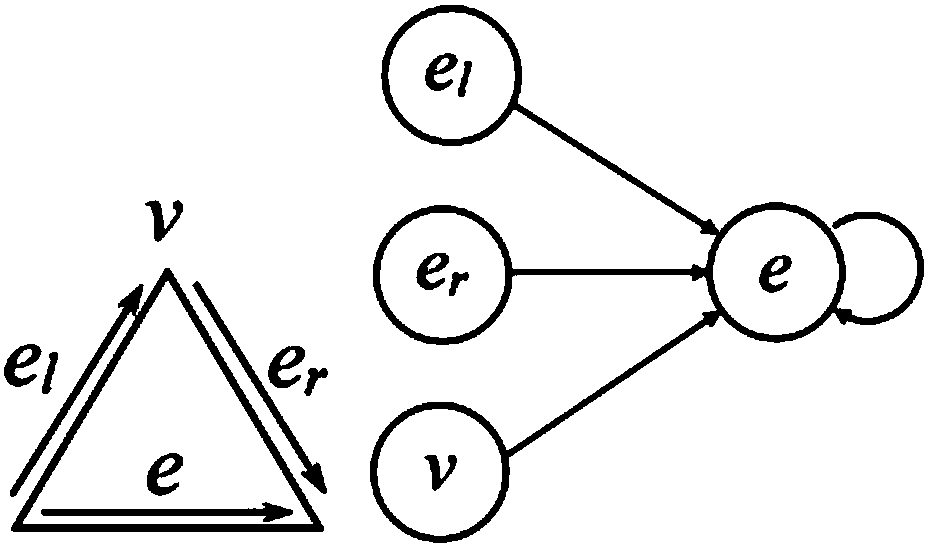

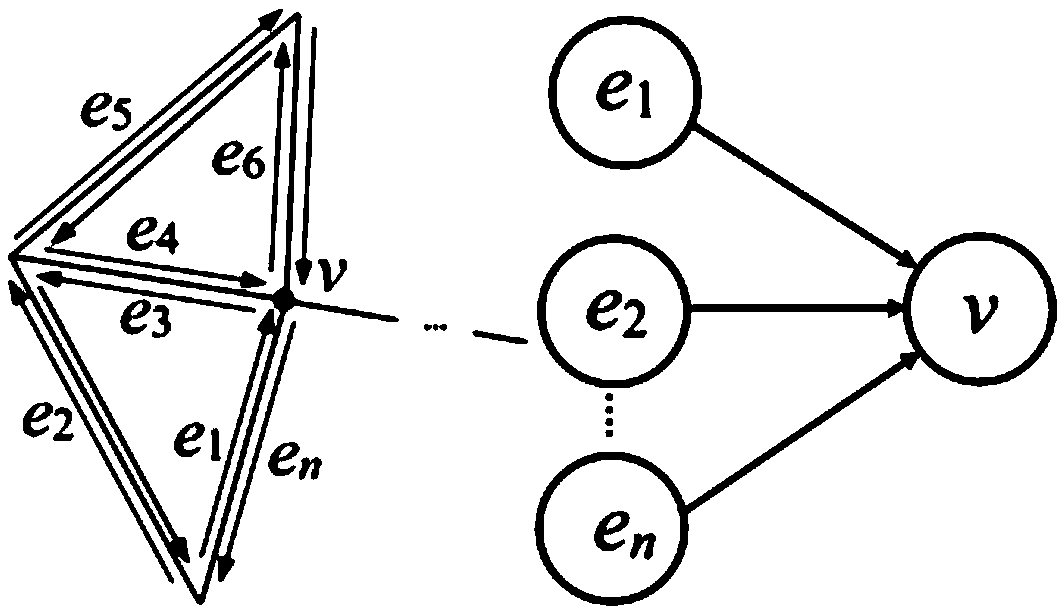

Local geometric feature-based efficient discrete geodesic line paralleling method

Active | CN108717731A | Achieve parallelization; Improve efficiency; Details involving image processing hardware; 3D modelling; Array data structure; Algorithm

The invention discloses an efficient parallel method for computing discrete geodesics based on local geometric features. The method comprises: constructing a local geodesic information transfer topology; inputting the mesh model, in which every vertex actively updates its own distance value and derives new pseudo-source elements, namely new second-type window elements; edge window elements among the first-type window elements derive new window elements, which are stored in the buffer corresponding to each edge; creating multiple edge threads to process the window-element derivation event on every edge in parallel; creating multiple edge threads to process the window-element collection event on every edge in parallel, in which each edge obtains its own sub-window elements according to its topological relationships, and each edge e collects the window elements in its buffer and writes them into the rolling array for the next state; swapping the rolling arrays; and, if updatable second-type window elements remain, inputting the mesh model again, otherwise terminating. The method improves algorithm efficiency, shortens running time, and achieves complete parallelization.

Owner:TIANJIN UNIV

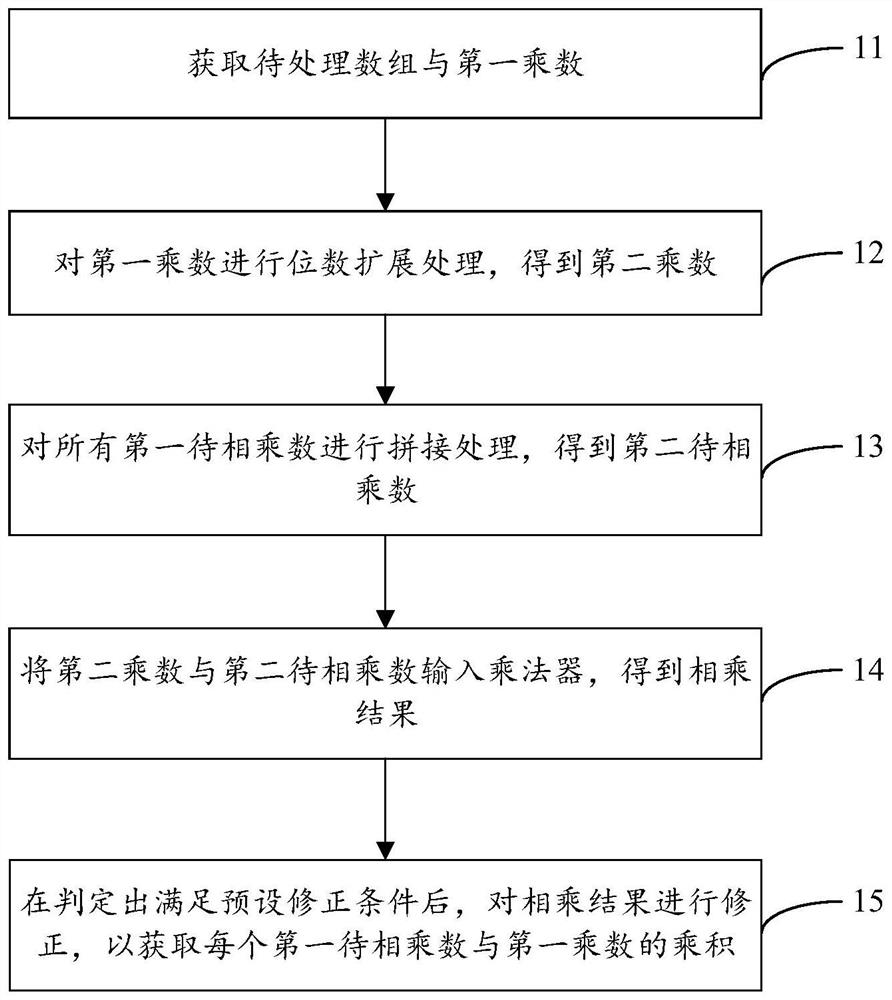

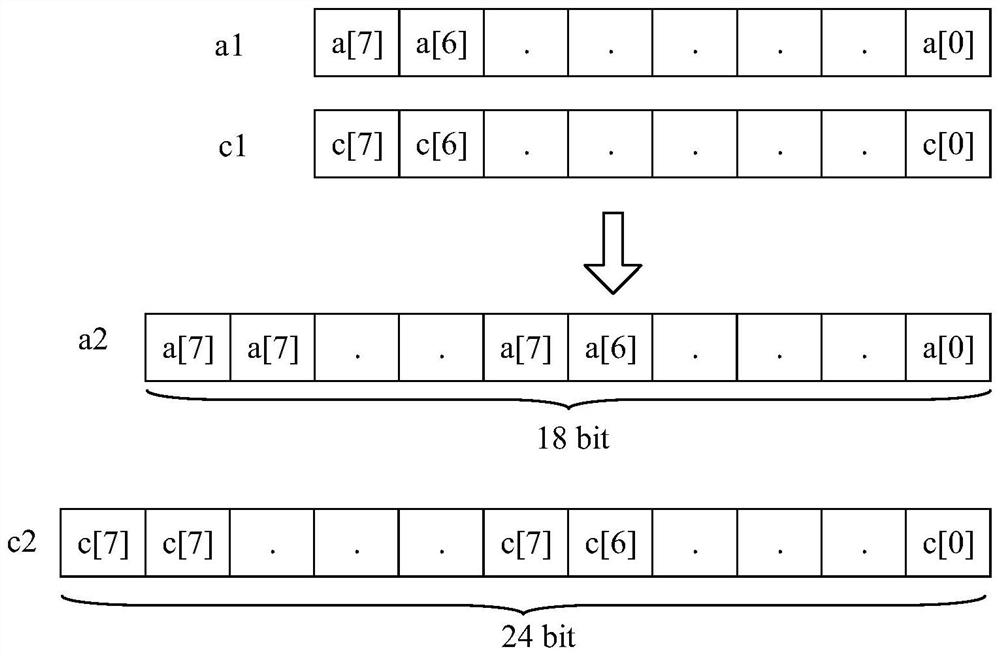

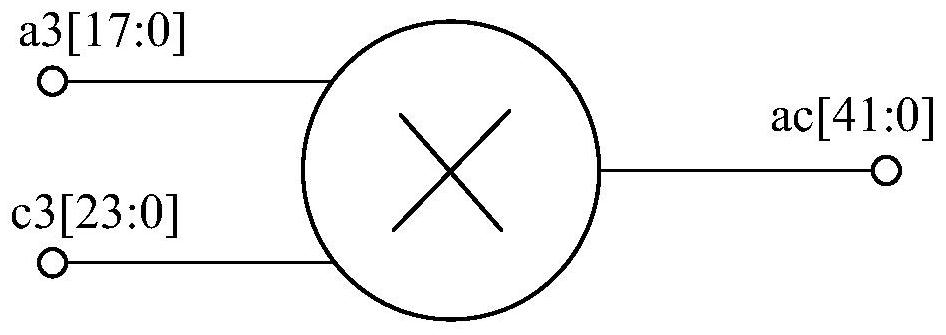

Operation method and device based on multiplier and computer readable storage medium

Pending | CN114003194A | Improve computing power; Make full use of computing resources; Digital data processing details; Binary multiplier; Computer science

The invention discloses a multiplier-based operation method and device and a computer readable storage medium. The method comprises: obtaining an array to be processed and a first multiplier, the array containing at least two first multiplicands; performing bit-width expansion on the first multiplier to obtain a second multiplier; concatenating all the first multiplicands to obtain a second multiplicand; feeding the second multiplier and the second multiplicand into the multiplier to obtain a multiplication result; and, when a preset correction condition is met, correcting the multiplication result to obtain the product of each first multiplicand and the first multiplier. The preset correction condition is that the exclusive-OR of the highest bit of the first multiplicand and the highest bit of the first multiplier equals a first value, and that neither the first multiplicand nor the first multiplier equals a second value. In this way, computing power is increased and the multiplier's resources are fully utilized.

Owner:ZHEJIANG DAHUA TECH CO LTD
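
The concatenate-multiply-correct idea resembles the well-known trick of packing several small integers into one wide multiplication. The sketch below is a generic version, not the patent's exact correction rule: it packs signed multiplicands into fixed-width lanes, multiplies once, and corrects each lane by sign-extending it and borrowing from the next lane, which is the adjustment a negative lane product requires. It assumes every product fits in `lane_bits` as a signed value; Python integers are unbounded, so the hardware multiplier's width is not modeled.

```python
def packed_multiply(values, b, lane_bits=16):
    """Multiply several small signed integers by the same signed multiplier
    with one wide multiplication, then unpack and correct each lane."""
    half = 1 << (lane_bits - 1)
    mask = (1 << lane_bits) - 1
    packed = 0
    for i, a in enumerate(values):
        packed += a << (i * lane_bits)  # concatenate the multiplicands
    product = packed * b                # single wide multiplication
    results = []
    for _ in values:
        low = product & mask
        if low >= half:
            # Negative lane product: sign-extend it; subtracting it below
            # then undoes the borrow it took from the next lane.
            low -= 1 << lane_bits
        results.append(low)
        product = (product - low) >> lane_bits  # exact shift to the next lane
    return results
```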

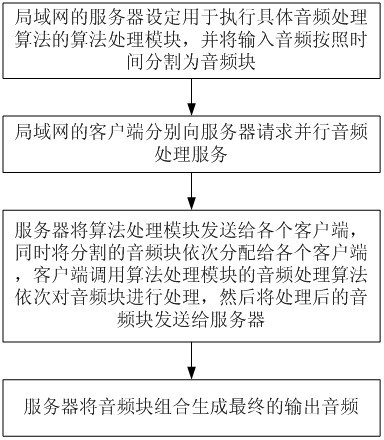

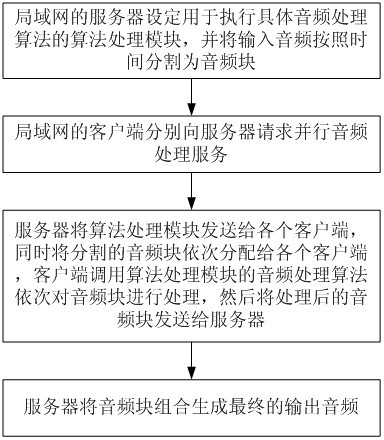

Parallel audio frequency processing method for multiple server nodes

Inactive | CN102622209A | Easy to handle; Implementing Parallel Audio Processing; Concurrent instruction execution; Transmission; Computer module; Client-side

The invention discloses a parallel audio processing method for multiple server nodes, implemented as follows: 1) algorithm processing modules that execute specific audio processing algorithms are set up in a server on a local area network, and the input audio is divided into audio blocks by time; 2) clients on the local area network each request parallel audio processing service from the server; 3) the server transmits the algorithm processing modules to each client and allocates the divided audio blocks to the clients in sequence, and each client invokes the audio processing algorithms of the modules to process its audio blocks in sequence and returns the processed blocks to the server; and 4) the server combines the audio blocks to generate the final output audio. The method achieves parallel audio processing, processes audio quickly and efficiently, and is convenient to use.

Owner:Suzhou Qikesi Information Technology Co., Ltd. (苏州奇可思信息科技有限公司)
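
The split, distribute and merge flow can be sketched on a single machine, with a thread pool standing in for the LAN clients. The per-block `apply_gain` algorithm is hypothetical; it can work on blocks independently because it is purely sample-wise.

```python
from concurrent.futures import ThreadPoolExecutor


def split_blocks(samples, block_len):
    """Step 1: divide the input audio into consecutive blocks by time."""
    return [samples[i:i + block_len] for i in range(0, len(samples), block_len)]


def apply_gain(block, gain=0.5):
    """Hypothetical per-block algorithm standing in for the distributed module."""
    return [s * gain for s in block]


def parallel_process(samples, block_len, workers, algorithm=apply_gain):
    blocks = split_blocks(samples, block_len)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        processed = list(pool.map(algorithm, blocks))  # map keeps block order
    # Step 4: recombine the processed blocks into the output audio.
    return [s for block in processed for s in block]
```

Algorithms with memory across samples, such as IIR filters or reverb, would need overlapping blocks or carried-over state, which the abstract does not describe.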

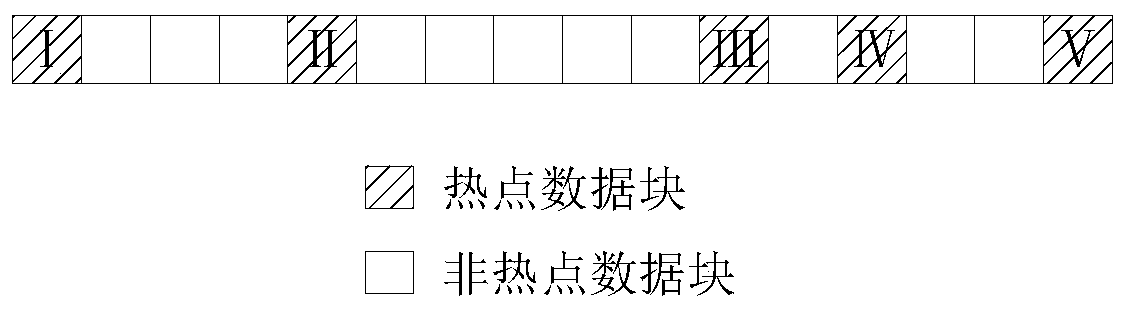

Index generation method and retrieval method for scientific big data

Active | CN110442575A | Reduce size; Improve retrieval efficiency; Database updating; Energy efficient computing; Original data; Data science

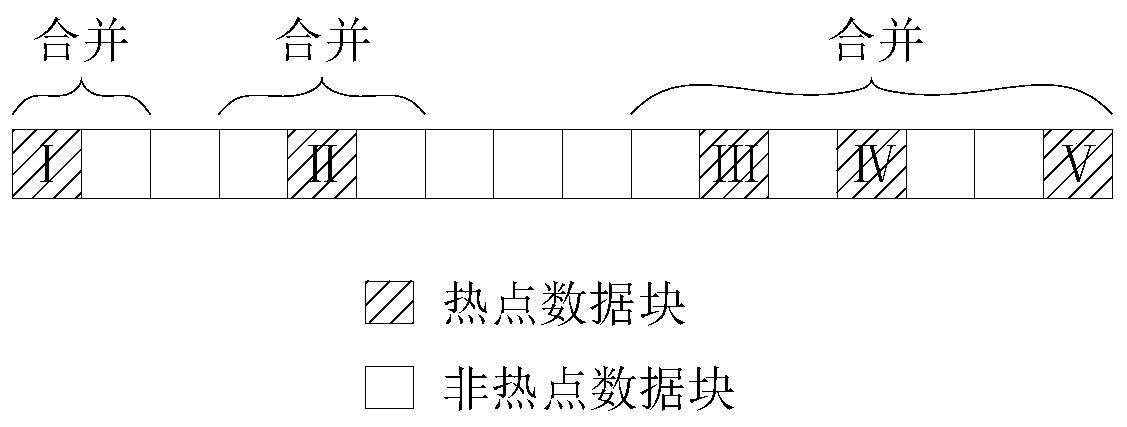

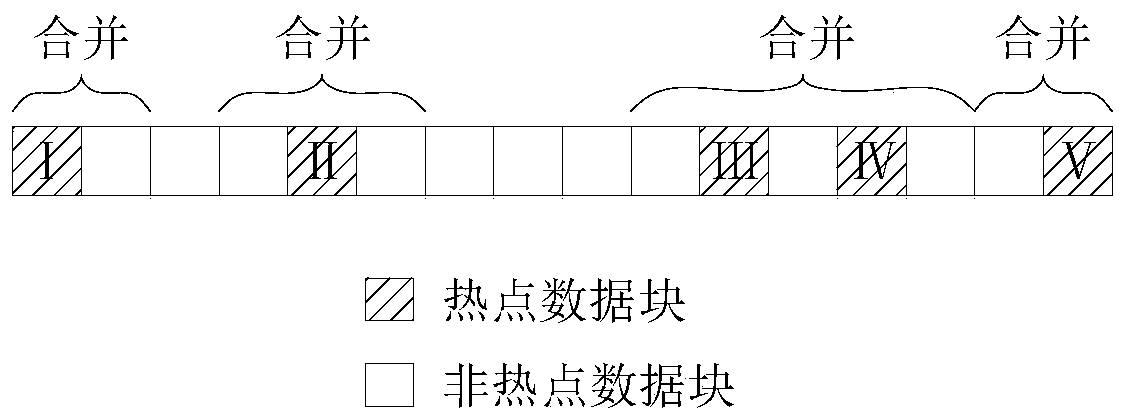

The invention relates to an index generation method and a retrieval method for scientific big data. The method comprises: designating several data blocks as hotspot data blocks according to the popularity of each data block; merging each hotspot data block with its adjacent data blocks according to how the hotspot blocks are contiguous; and generating a data index, or updating the original data index, from the merged data blocks. The method avoids both failure modes of block sizing: blocks that are too large cause excessive redundant data to be read from disk during retrieval and raise data filtering overhead, while blocks that are too small raise disk access overhead. Computing resources are used more fully, and the retrieval efficiency of scientific big data is greatly improved.

Owner:SUN YAT SEN UNIV
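
One reading of the merge rule is that runs of consecutive hotspot blocks are combined into a single index entry, with a cap so merged blocks do not grow too large. The sketch below follows that reading; the popularity threshold and the `max_run` cap are assumptions, since the abstract gives neither the exact hotspot criterion nor a size limit.

```python
def build_index(popularity, threshold, max_run=4):
    """Return index entries (first_block, last_block, is_hotspot).
    Consecutive hotspot blocks are merged into one entry, capped at
    max_run blocks; other blocks keep one entry each."""
    entries, i, n = [], 0, len(popularity)
    while i < n:
        hot = popularity[i] >= threshold
        j = i
        while (hot and j + 1 < n and popularity[j + 1] >= threshold
               and j - i + 1 < max_run):
            j += 1
        entries.append((i, j, hot))
        i = j + 1
    return entries
```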

Read-write system and method compatible with two-dimensional structure data

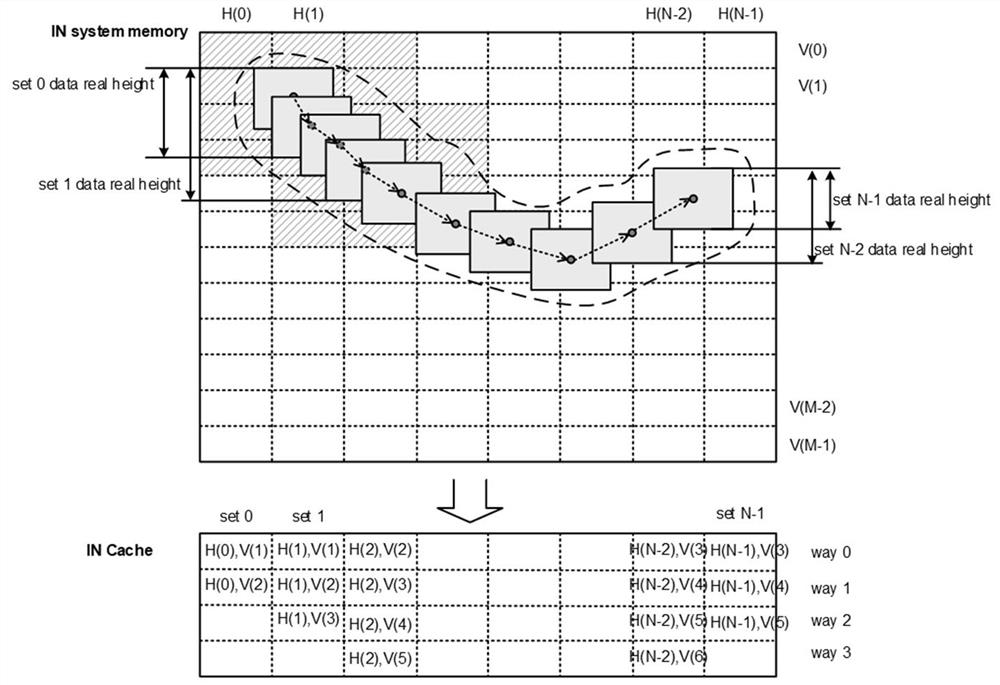

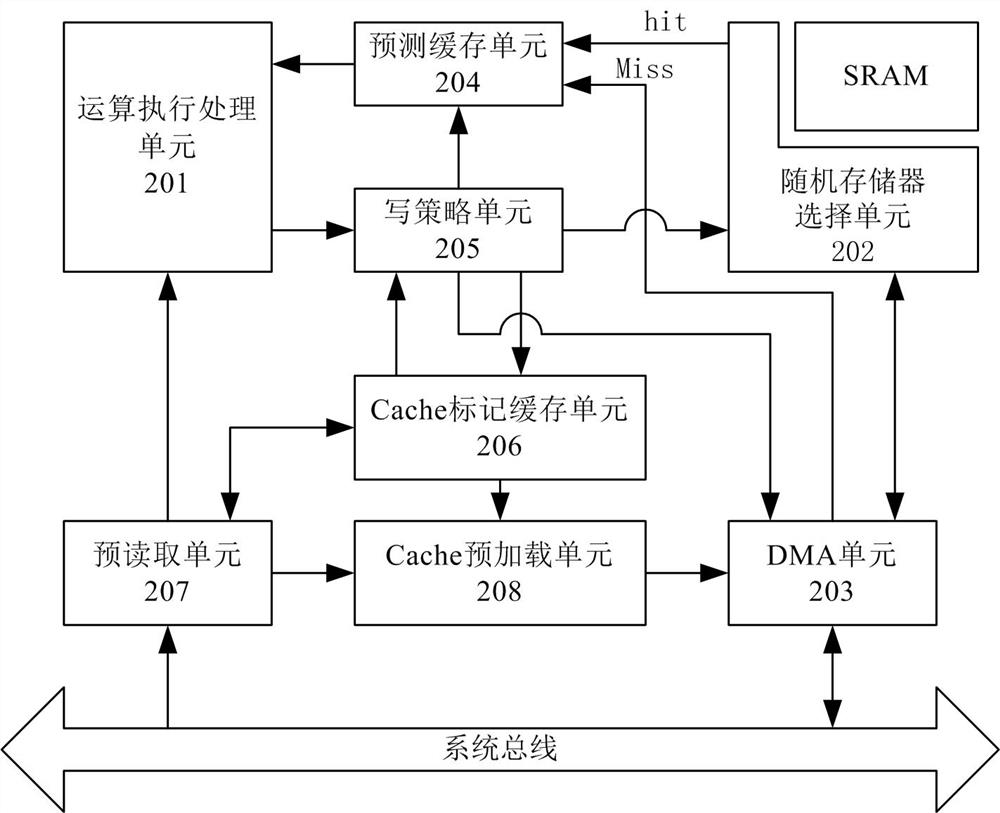

Active | CN113886286A | Efficient loading; Improve hit rate; Memory architecture accessing/allocation; Memory systems; Computer architecture; Static random-access memory

A read-write system compatible with two-dimensional structure data comprises a random access memory selection unit which reads and writes an SRAM (Static Random Access Memory) according to a read-write demand of instruction decoding of an operation execution unit; a prediction caching unit which is used for performing prediction caching on two-dimensional data required by instruction execution; a writing strategy unit which is used for formulating a strategy for writing data into the SRAM and writing back an external memory; a Cache mark caching unit which is used for caching the value mark of the Cache and the state value of the square block; a Cache preloading unit which is used for generating a corresponding block refreshing request according to an updating flag bit of the Cache mark cache unit, and putting the corresponding block refreshing request into the DMA unit request queue to update the Cache; and a pre-reading unit which is used for analyzing the operation tasks according to preset batches and marking the tasks. According to the system provided by the invention, the operation efficiency of the system with the vector processing capability is greatly improved.

Owner:NANJING SEMIDRIVE TECH CO LTD

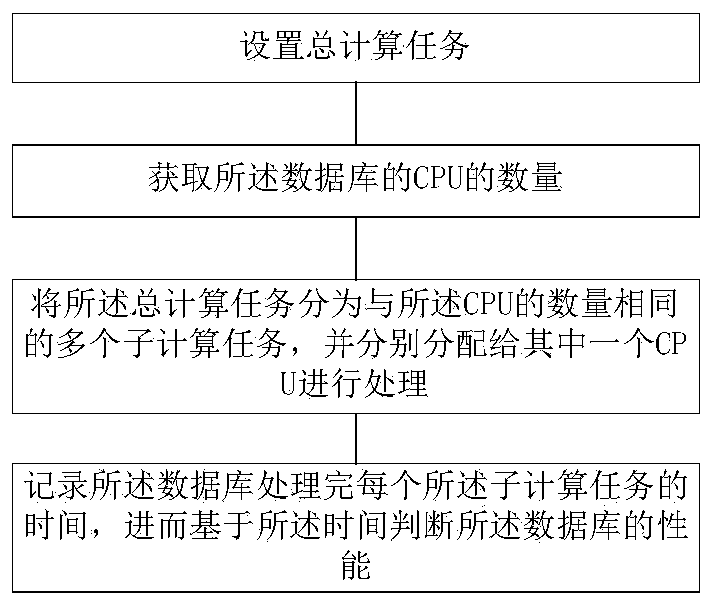

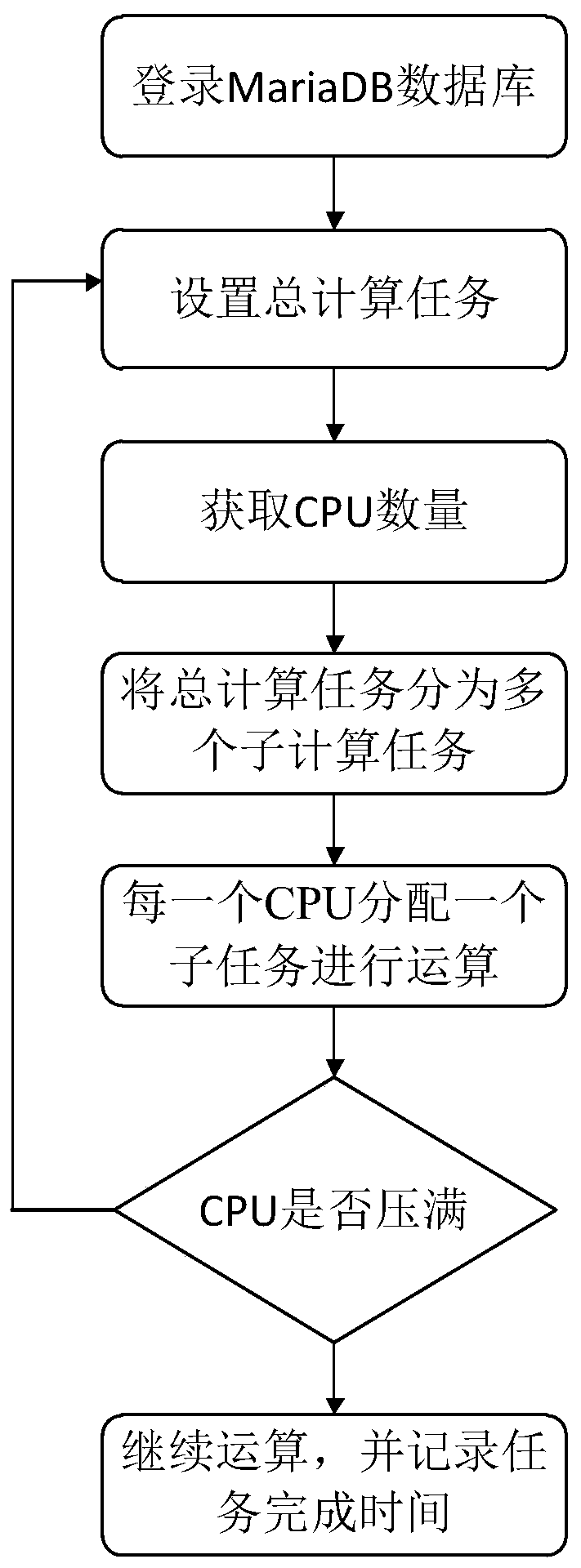

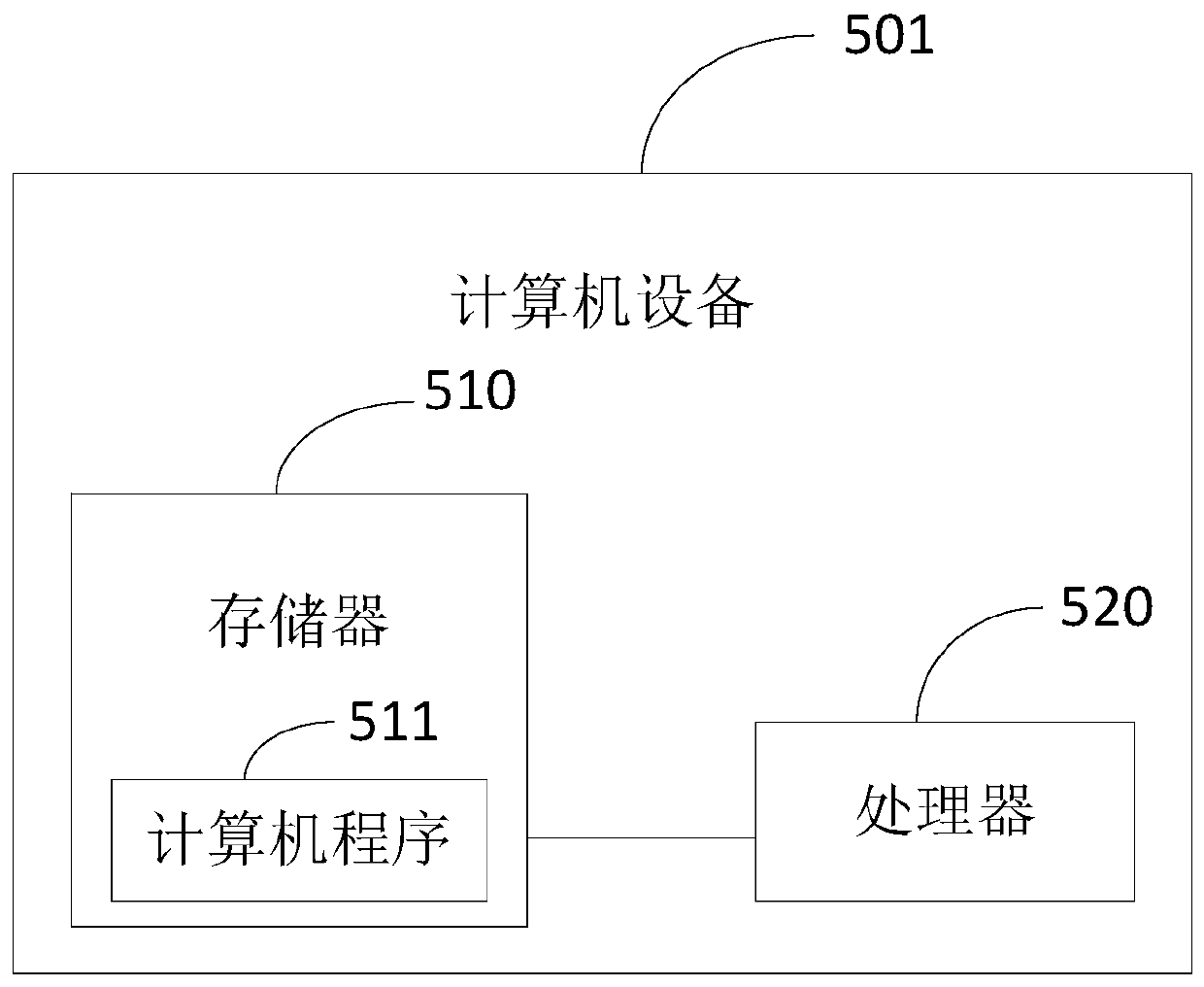

Database performance testing method and device and storage medium

Inactive | CN110427312A | Make full use of computing resources; Hardware monitoring; Test efficiency; Computer science

The invention discloses a database performance testing method. The method comprises the following steps: setting a total calculation task; obtaining the number of CPUs of the database; dividing the total computing task into a plurality of sub-computing tasks with the same number as the CPUs, and respectively distributing the sub-computing tasks to one CPU for processing; and recording the time when the database finishes processing each sub-computing task, and further judging the performance of the database based on the time. The invention further discloses computer equipment and a readable storage medium. According to the technical scheme, the performance of the database can be rapidly tested, the test efficiency is improved, and the hardware investment is reduced.

Owner:INSPUR SUZHOU INTELLIGENT TECH CO LTD
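
The benchmark procedure can be sketched as: split the total task into as many sub-tasks as the database has CPUs, run them concurrently, and record each sub-task's elapsed time. `run_subtask` is a hypothetical callable that would issue one sub-task's queries to the database; threads suffice on the client side because the actual work happens on the database server.

```python
import os
import time
from concurrent.futures import ThreadPoolExecutor


def split_task(total_items, parts):
    """Divide item indices into `parts` nearly equal contiguous ranges."""
    base, extra = divmod(total_items, parts)
    ranges, start = [], 0
    for i in range(parts):
        size = base + (1 if i < extra else 0)
        ranges.append(range(start, start + size))
        start += size
    return ranges


def run_benchmark(total_items, run_subtask, parts=None):
    """Run one sub-task per CPU concurrently; return each sub-task's duration."""
    parts = parts or os.cpu_count() or 1

    def timed(item_range):
        t0 = time.perf_counter()
        run_subtask(item_range)
        return time.perf_counter() - t0

    with ThreadPoolExecutor(max_workers=parts) as pool:
        return list(pool.map(timed, split_task(total_items, parts)))
```

`os.cpu_count()` reports the CPUs of the machine running the script, so when the client and the database server differ, pass the server's CPU count as `parts`.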

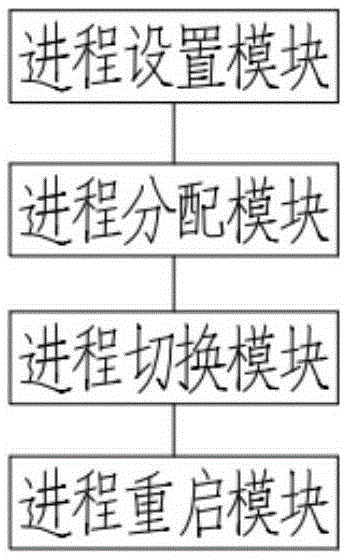

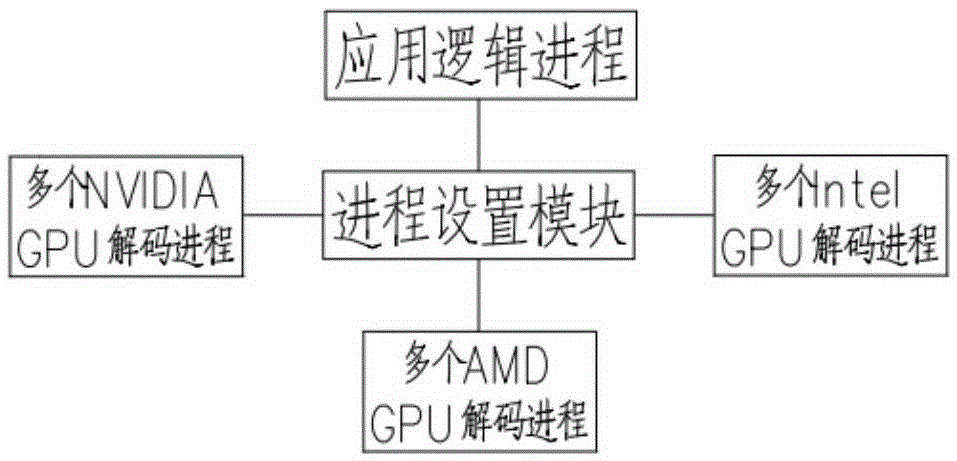

Method and system for decoding multi-process video

Inactive | CN105306948A | Make full use of computing resources; Make full use of memory; Digital video signal modification; Business process; Video decoding

The invention provides a multi-process video decoding method, comprising: creating multiple independent decoding processes corresponding to the different video cards of a video decoding system; and selecting the decoding process corresponding to a video card to decode video data. The invention further provides a multi-process video decoding system comprising a decoding process scheduler, which includes a process setting module and a process distribution module: the process setting module creates multiple decoding processes for the different video cards of the video decoding system, and the process distribution module selects the decoding process corresponding to a video card to decode the video data. With this scheme, the business logic and the decoding of the video decoding software run in separate processes, and decoding is spread over several decoding processes for load balancing, so system hardware resources are fully utilized and a crashed decoding process can be recovered quickly.

Owner:CHONGQING XUNMEI ELECTRONICS

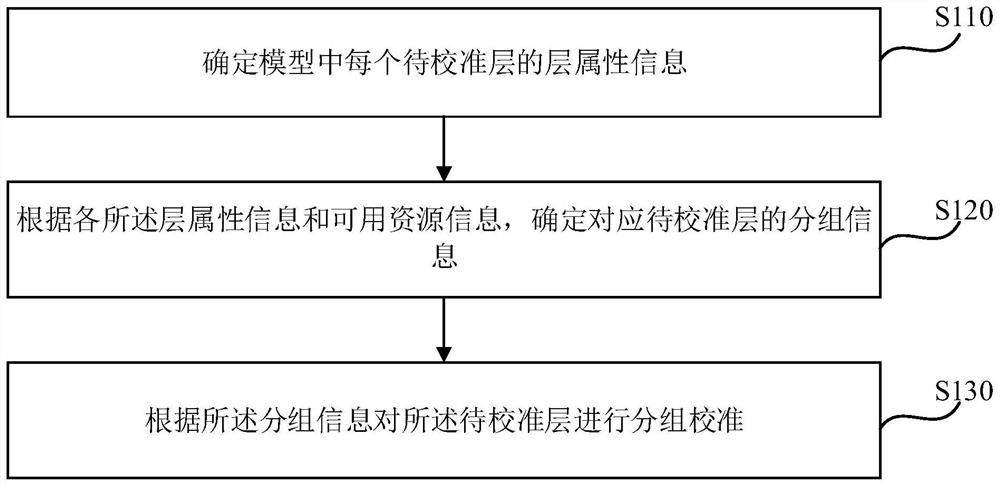

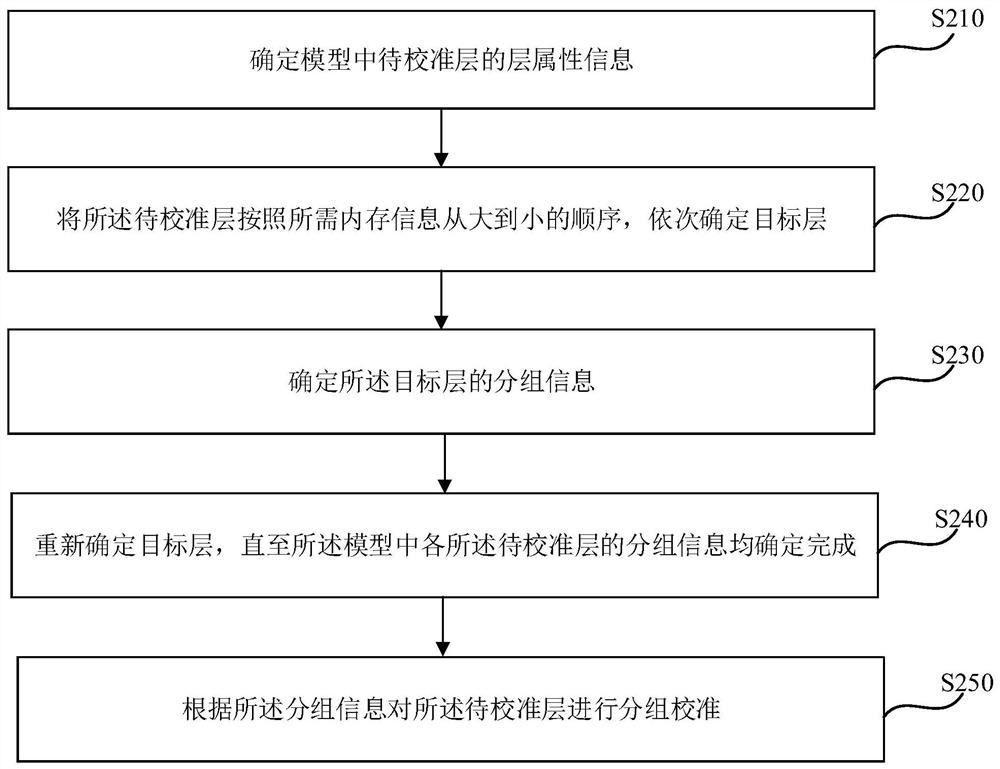

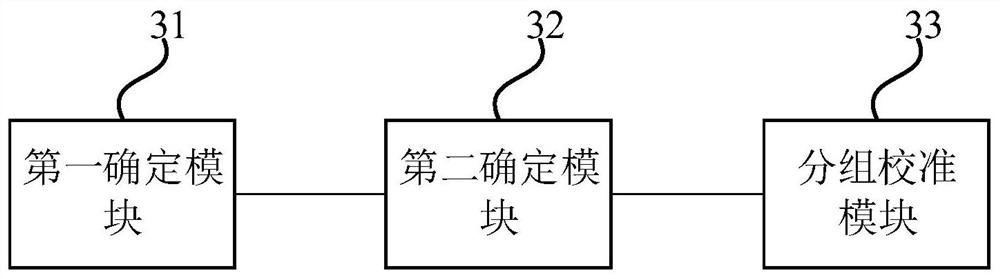

Calibration method and device, terminal equipment and storage medium

Pending | CN111915017A | Calculation speed; Reduce the number of times; Machine learning; Biological models; Engineering; Real-time computing

The invention discloses a calibration method and device, terminal equipment and a storage medium. The method comprises: determining layer attribute information for each layer to be calibrated in a model; determining grouping information for the layers to be calibrated according to the layer attribute information and the available resource information; and performing grouped calibration of the layers according to the grouping information. As long as the available resources allow, all layers to be calibrated are grouped so that several layers are calibrated at once in a single calibration operation; this reduces the number of calibration operations, makes full use of computing resources, and increases computing speed during model calibration.

Owner:LYNXI TECH CO LTD
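
The grouping step can be sketched as greedy packing of consecutive layers under a resource budget. Using a single numeric cost per layer as its "attribute information" and keeping layers in model order are assumptions; the abstract does not specify the grouping rule.

```python
def group_layers(layer_costs, budget):
    """Greedily pack consecutive (name, cost) layers into groups whose summed
    cost fits the available budget; each group is one calibration operation."""
    groups, current, used = [], [], 0
    for name, cost in layer_costs:
        if cost > budget:
            raise ValueError(f"layer {name} alone exceeds the available budget")
        if used + cost > budget:
            groups.append(current)
            current, used = [], 0
        current.append(name)
        used += cost
    if current:
        groups.append(current)
    return groups
```

For instance, four layers with costs 4, 3, 5 and 2 under a budget of 8 form two groups, so two calibration operations replace four.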

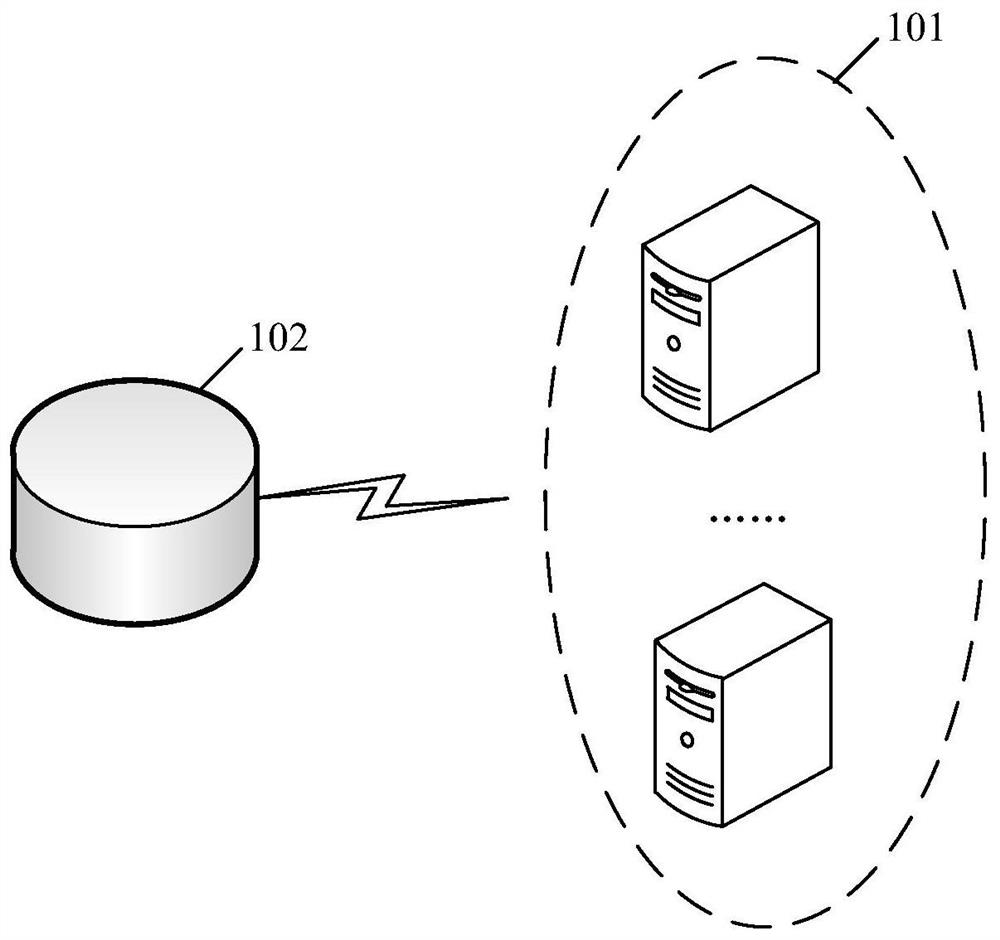

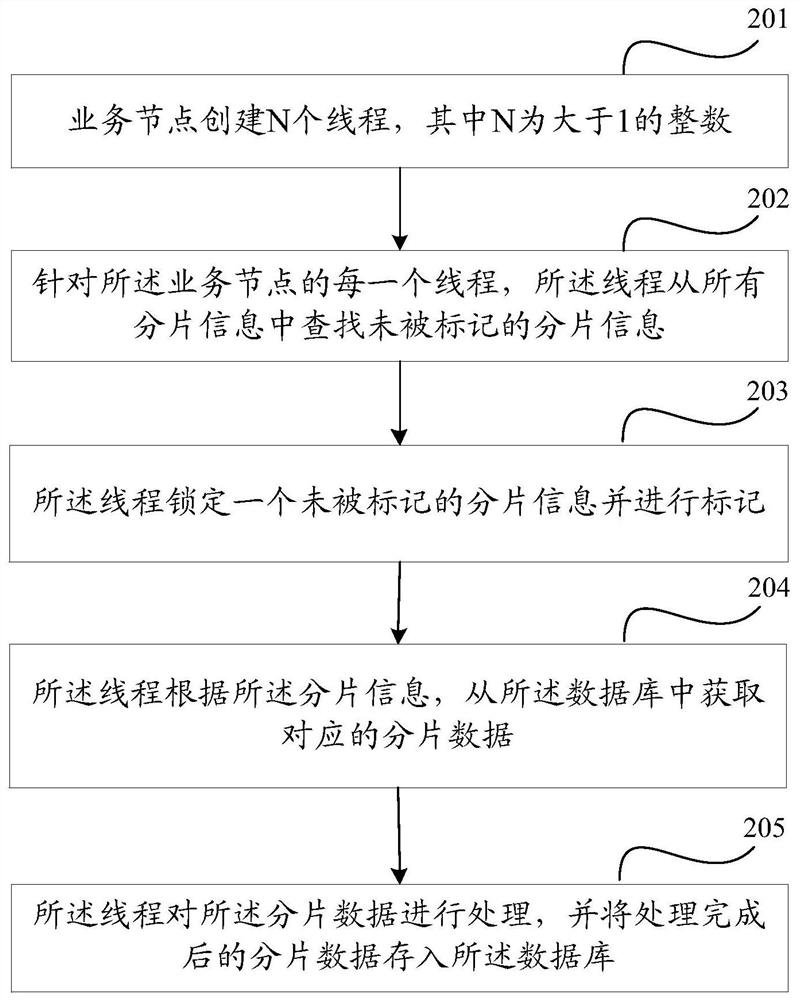

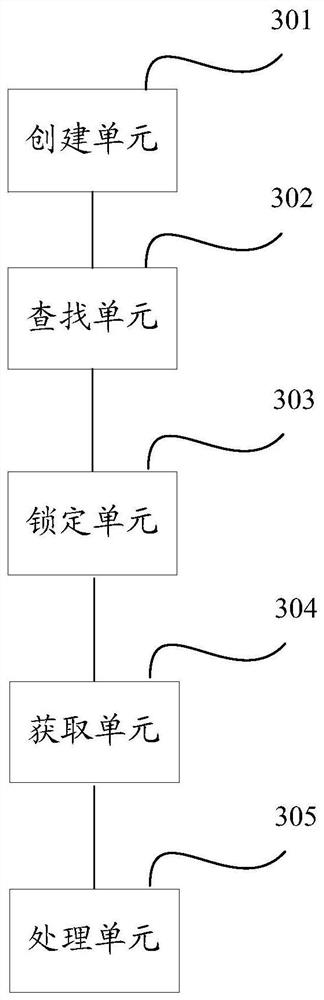

Data processing method and device

Pending | CN111752961A | Make full use of computing resources; Easy to handle; Database updating; Database management systems; Data information; Engineering

Owner:WEBANK (CHINA)

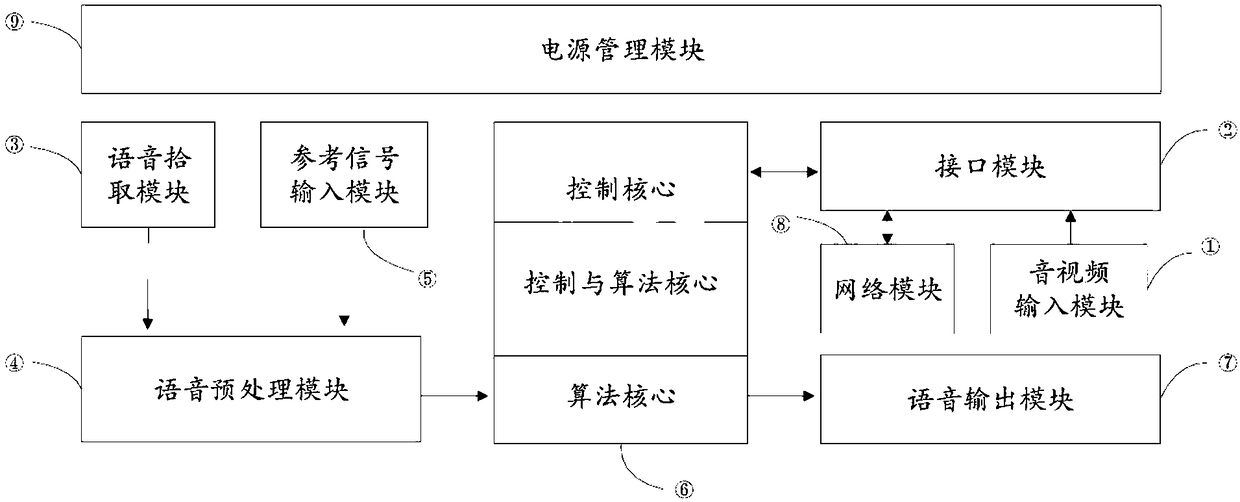

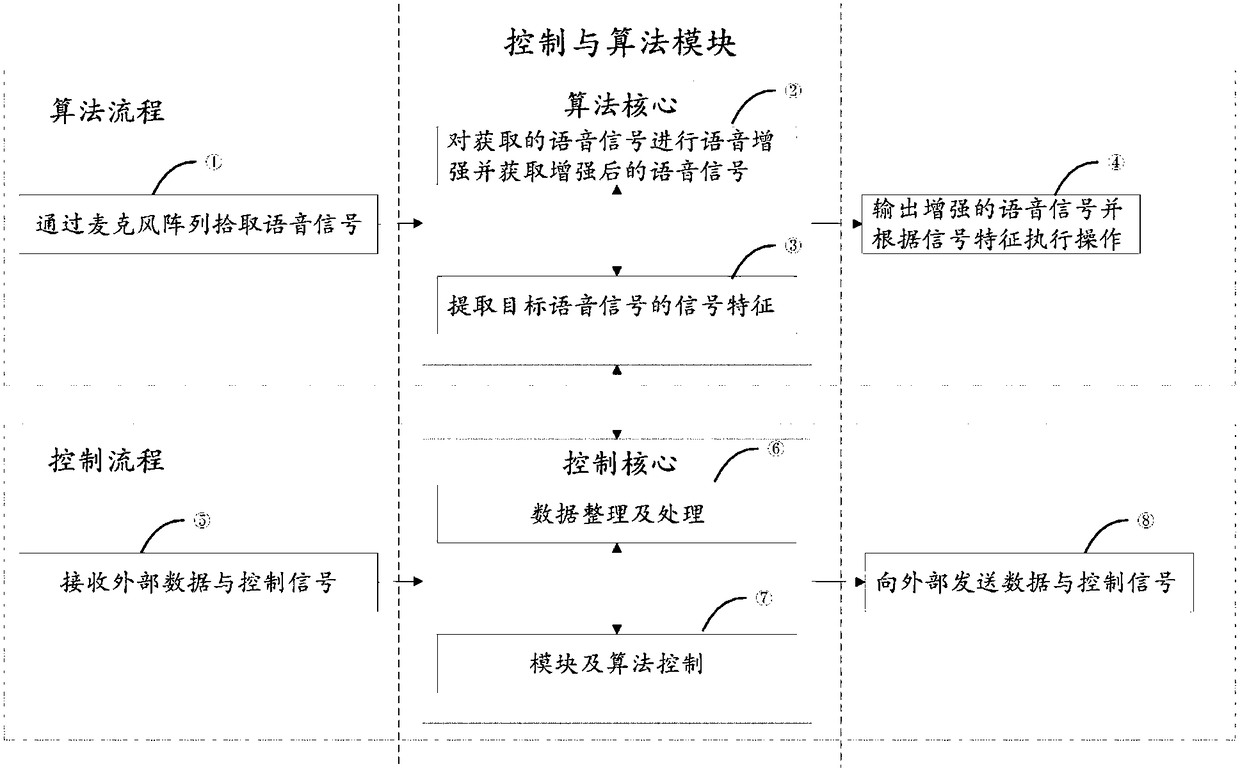

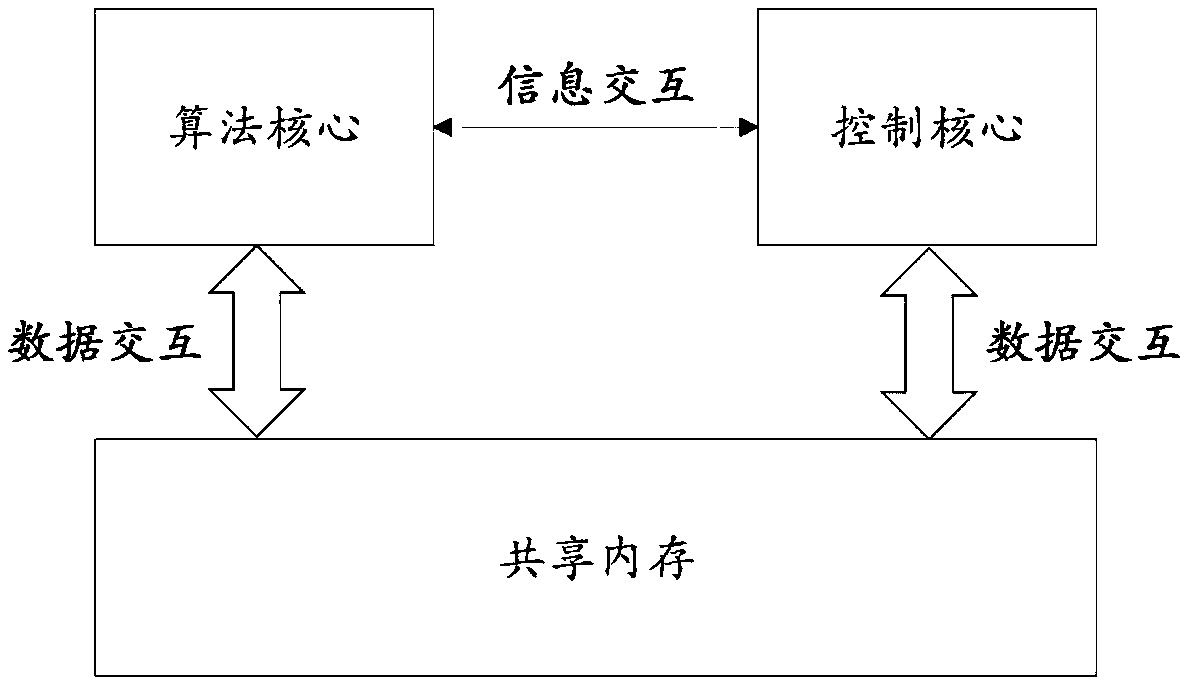

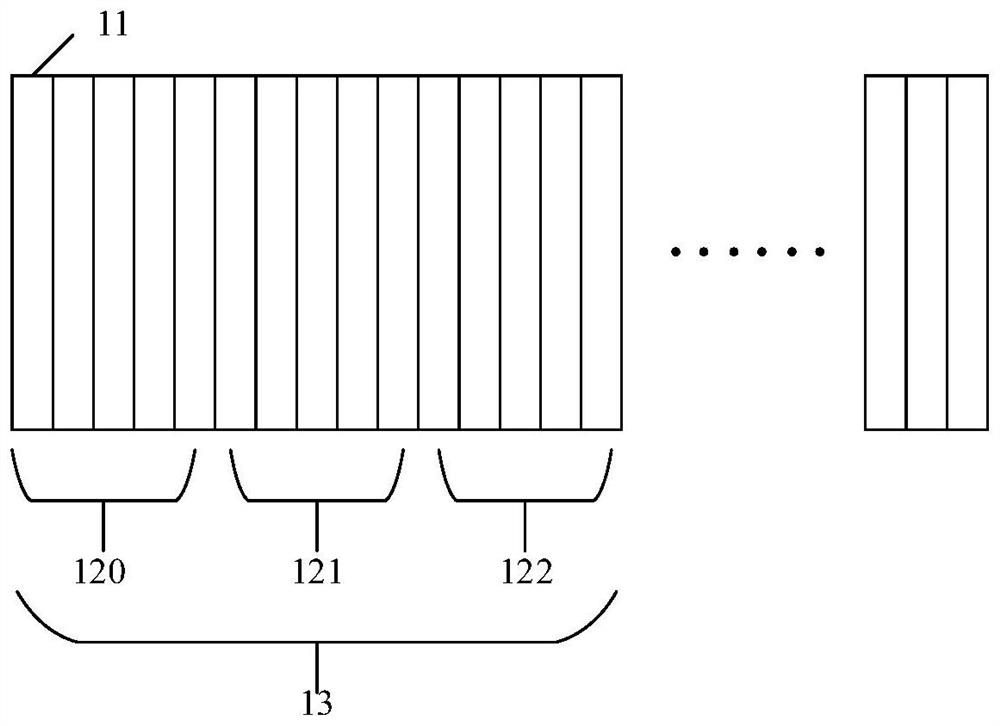

Acoustic processing device based on multi-core modular framework

Pending | CN108305631A | Make full use of computing resources; Low extension; Speech recognition; Energy efficient computing; Processing core; Computer module

The invention discloses an acoustic processing device based on a multi-core modular framework. The device comprises at least two processing cores, each comprising at least one algorithm core module and at least one control core module, and further comprises an audio and video input module, an interface module, a voice pickup module, a voice preprocessing module, a reference signal input module, a control and algorithm module, a voice output module, a network module and a power management module. The acoustic processing flow is divided into separate modules; the functional modules are independent of each other and are managed and controlled by different processing cores, which cooperate through inter-core communication and shared memory. Through sensible module control and system resource allocation, the processor's computing resources are fully used, the computing power and effective availability of the sound processing system are improved, processing delay is reduced, and system resource scheduling is improved.

Owner:西安合谱声学科技有限公司 (Xi'an Hepu Acoustic Technology Co., Ltd.)
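
The abstract splits acoustic processing into independent modules, each managed by its own processing core, with the cores cooperating through inter-core communication. The sketch below shows that module-per-worker structure under loose assumptions. Python threads stand in for processing cores, `queue.Queue` objects stand in for inter-core communication, and the stage functions `remove_dc` and `apply_gain` are hypothetical placeholders rather than the patent's modules.

```python
import queue
import threading
from typing import Callable, List, Sequence

Frame = List[float]
SENTINEL = None  # marks the end of the frame stream


def run_stage(fn: Callable[[Frame], Frame], inbox: queue.Queue, outbox: queue.Queue) -> None:
    """One module: process frames from its inbox until the sentinel arrives."""
    while True:
        frame = inbox.get()
        if frame is SENTINEL:
            outbox.put(SENTINEL)  # propagate shutdown to the next module
            return
        outbox.put(fn(frame))


def run_pipeline(frames: Sequence[Frame], stages: Sequence[Callable[[Frame], Frame]]) -> List[Frame]:
    # queues[i] feeds stage i; the last queue collects the pipeline output
    queues = [queue.Queue() for _ in range(len(stages) + 1)]
    workers = [
        threading.Thread(target=run_stage, args=(fn, queues[i], queues[i + 1]))
        for i, fn in enumerate(stages)
    ]
    for w in workers:
        w.start()
    for frame in frames:
        queues[0].put(frame)
    queues[0].put(SENTINEL)
    results = []
    while (frame := queues[-1].get()) is not SENTINEL:
        results.append(frame)
    for w in workers:
        w.join()
    return results


# Hypothetical stand-ins for a preprocessing module and an output module.
def remove_dc(frame: Frame) -> Frame:
    mean = sum(frame) / len(frame)
    return [x - mean for x in frame]


def apply_gain(frame: Frame, gain: float = 2.0) -> Frame:
    return [gain * x for x in frame]
```

For example, `run_pipeline([[1.0, 2.0, 3.0]], [remove_dc, apply_gain])` returns `[[-2.0, 0.0, 2.0]]`. Because each module reads only from its own queue, the modules stay decoupled. On real hardware the workers would be separate cores or processes; Python threads share one interpreter lock and do not run CPU-bound stages in parallel.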

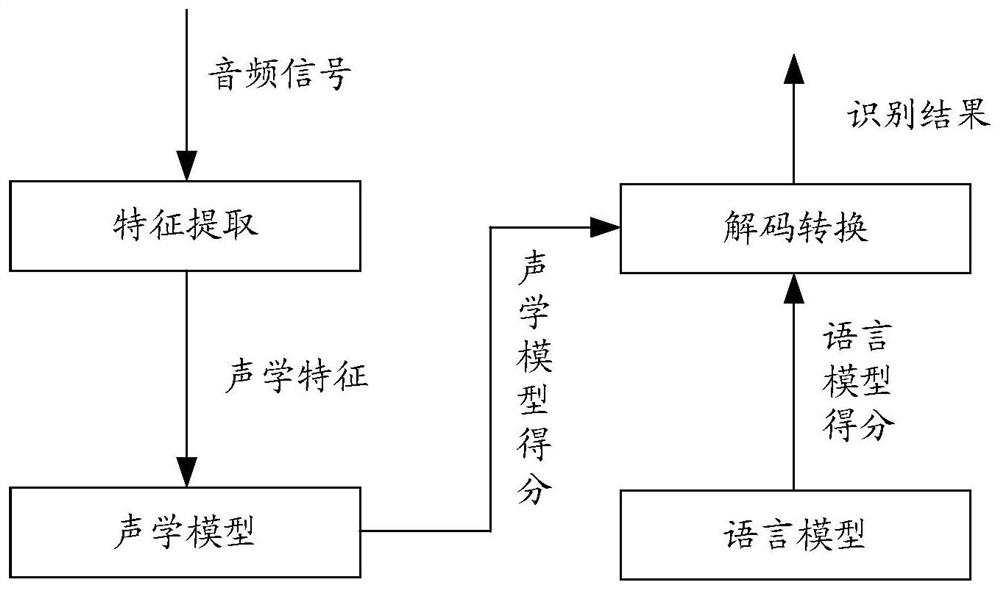

Speech recognition method, device, electronic device and storage medium

Active | CN110689876B | Make full use of computing resources | Make full use of resources | Speech recognition | Code conversion | Speech sound

The present invention provides a speech recognition method, device, electronic equipment and storage medium. The method includes: acquiring the acoustic features of a plurality of speech frames of the speech signal to be recognized; performing coding conversion on the acoustic features to obtain the state corresponding to each speech frame; classifying and combining, through the central processing unit, the states corresponding to the speech frames to obtain a phoneme sequence corresponding to the speech signal to be recognized; and decoding and converting the phoneme sequence to obtain a text sequence corresponding to the speech signal to be recognized. In this way, speech recognition efficiency and resource utilization can be improved.

Owner:TENCENT TECH (SHENZHEN) CO LTD
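
The chain of steps in the abstract (frame features to per-frame states, states to phonemes, phonemes to text) can be illustrated with a toy pipeline. This is a sketch under stated assumptions, not the patented method. Per-frame state scores are assumed to come from an acoustic model already. States are combined CTC-style by collapsing repeats and dropping a blank state `0`. Decoding is a greedy longest-match lookup in a hypothetical lexicon. All function names and the state and lexicon tables are illustrative only.

```python
from itertools import groupby
from typing import Dict, List, Sequence, Tuple


def frames_to_states(frame_scores: Sequence[Sequence[float]]) -> List[int]:
    """Stand-in for the coding step: pick the highest-scoring state per frame."""
    return [max(range(len(s)), key=s.__getitem__) for s in frame_scores]


def states_to_phonemes(states: Sequence[int], state_to_phoneme: Dict[int, str], blank: int = 0) -> List[str]:
    """Combine states: merge consecutive repeats and drop the blank state (CTC-style assumption)."""
    return [state_to_phoneme[s] for s, _ in groupby(states) if s != blank]


def phonemes_to_text(phonemes: Sequence[str], lexicon: Dict[Tuple[str, ...], str]) -> str:
    """Greedy longest-match decoding against a hypothetical phoneme-to-word lexicon."""
    words, i = [], 0
    while i < len(phonemes):
        for j in range(len(phonemes), i, -1):  # try the longest span first
            key = tuple(phonemes[i:j])
            if key in lexicon:
                words.append(lexicon[key])
                i = j
                break
        else:
            raise ValueError(f"no lexicon entry for phonemes starting at index {i}")
    return " ".join(words)
```

For instance, frames whose best states are `1, 1, 0, 2, 2, 3, 0, 4`, with `state_to_phoneme = {1: "n", 2: "i", 3: "h", 4: "ao"}` and a lexicon mapping `("n", "i")` to `"ni"` and `("h", "ao")` to `"hao"`, decode to `"ni hao"`. Production systems use beam search with a language model rather than greedy lookup.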

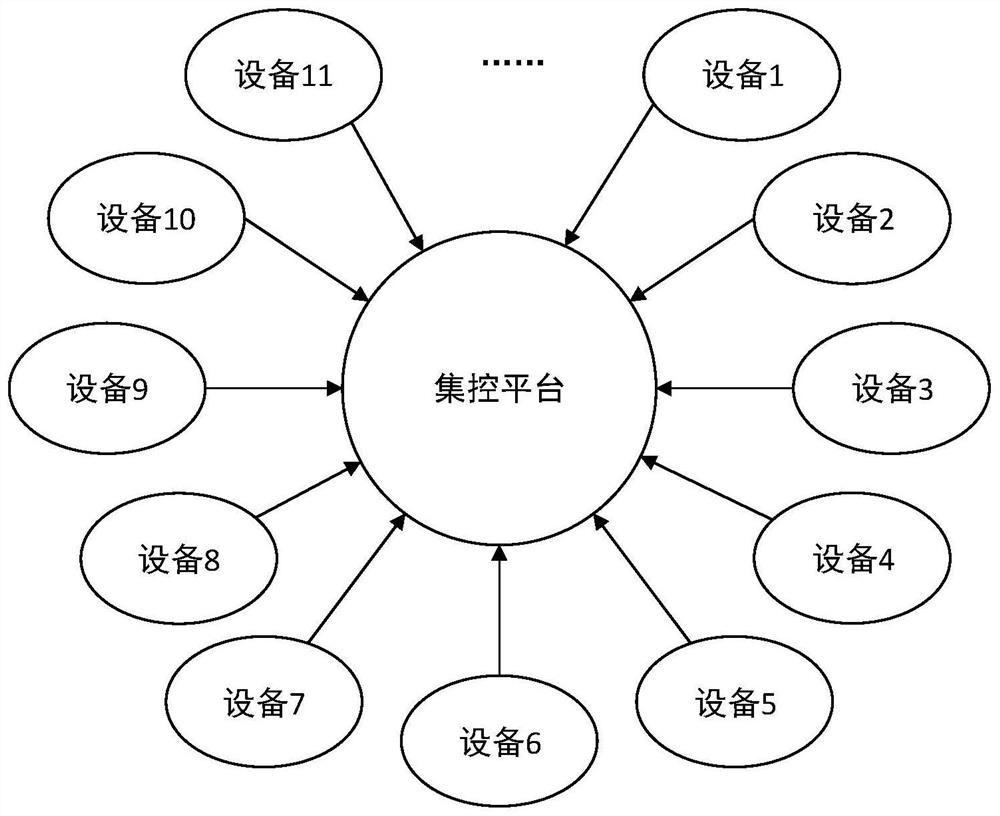

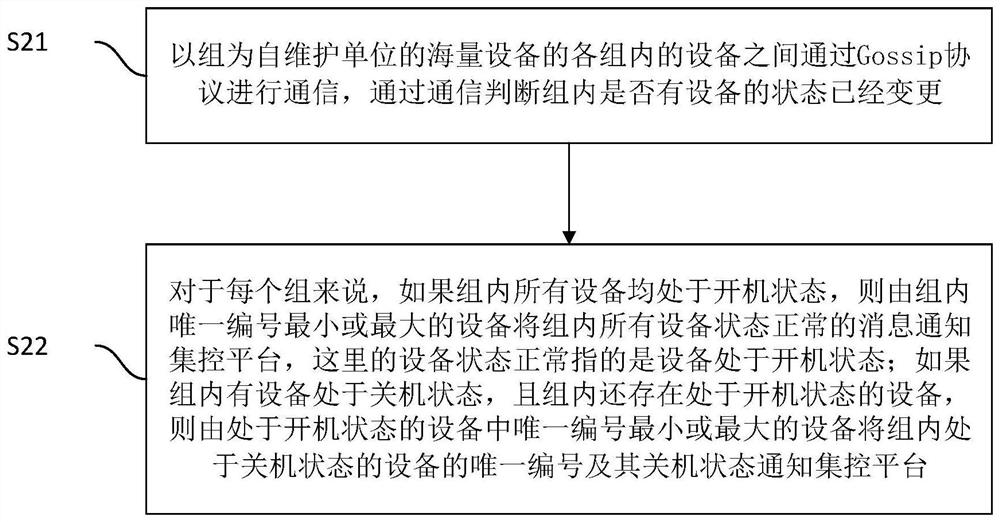

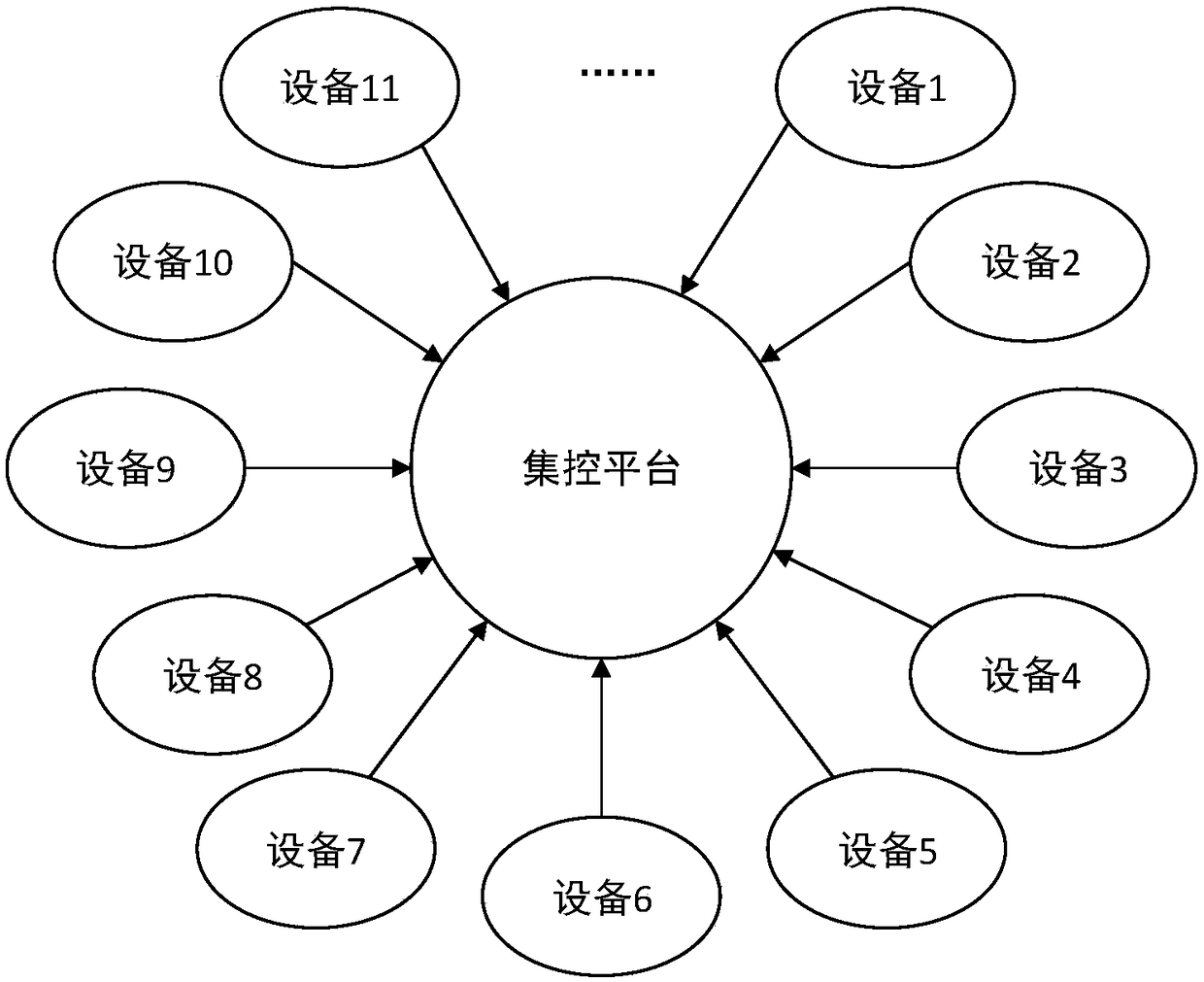

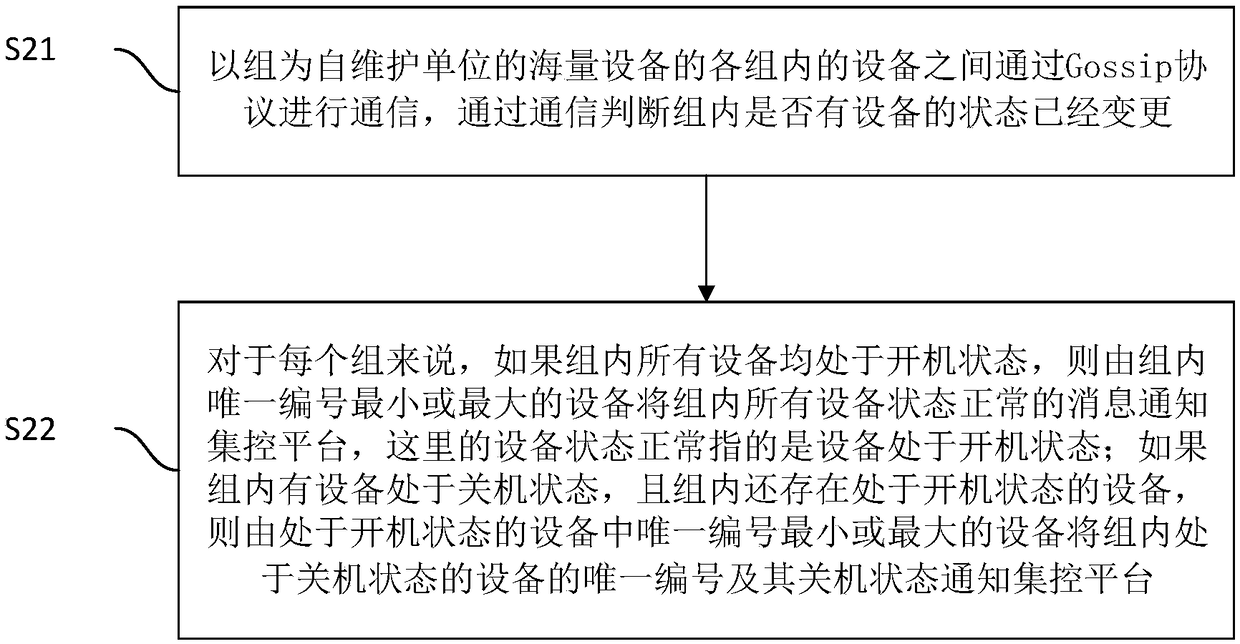

A self-maintenance method for mass equipment status and its device and system

Active | CN108494853B | Reduce load pressure | Make full use of computing resources | Data switching networks | Shut down | Device status

A method, device and system for self-maintenance of the status of massive numbers of devices. In the method, each group of the massive devices serves as a self-maintenance unit: the devices in a group communicate with one another through the Gossip protocol and determine whether any device in the group is powered off, where each device is assigned a unique number. For each group, if all devices in the group are powered on, the device with the smallest or largest unique number in the group notifies the centralized control platform that all devices in the group are in a normal state. If some devices in the group are powered off while others are still powered on, the device with the smallest or largest unique number among the powered-on devices notifies the centralized control platform of the unique numbers and powered-off state of the powered-off devices. The technical solution proposed by the embodiments of the present invention greatly reduces the load on the centralized control platform, fully utilizes the computing resources of each device, and improves computing efficiency.

Owner:GUANGZHOU SHIYUAN ELECTRONICS CO LTD +1

Mass equipment state self-maintenance method and device and system thereof

Active | CN108494853A | Reduce load pressure | Make full use of computing resources | Data switching networks | Self maintenance | Embedded system

The invention discloses a mass equipment state self-maintenance method, device and system. The method comprises the following steps: taking a group as the self-maintenance unit, the equipment in each group of the mass equipment communicates through a Gossip protocol to judge whether any equipment in the group is in the off state, wherein each set of equipment in the mass equipment is assigned a unique serial number; for each group, if all equipment in the group is in the on state, the equipment with the minimum or maximum unique serial number in the group notifies an integrated control platform that all equipment states in the group are normal; if equipment in the off state and equipment in the on state both exist in the group, the equipment with the minimum or maximum unique serial number among the equipment in the on state notifies the integrated control platform of the unique serial numbers and off state of the equipment in the off state. The technical scheme proposed by the embodiment of the invention greatly relieves the load pressure on the integrated control platform, makes full use of the computing resources of each set of equipment, and improves computing efficiency.

Owner:GUANGZHOU SHIYUAN ELECTRONICS CO LTD +1
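
The per-group reporting rule described in the two abstracts above can be written as a small pure function in which a single designated device per group reports to the control platform. The sketch assumes gossip has already converged, so the reporting device holds a complete view mapping each unique device number to its power state. The function name `group_report` and the message format are hypothetical. The abstracts do not say what happens when every device in a group is off, so the sketch returns `None` in that case.

```python
from typing import Dict, Optional, Tuple

Report = Tuple[int, dict]


def group_report(states: Dict[int, bool], use_largest: bool = False) -> Optional[Report]:
    """Return (reporter_number, message) for one self-maintenance group.

    `states` maps each device's unique number to True (powered on) or
    False (powered off), assumed to have been learned through gossip.
    """
    on = [num for num, powered in states.items() if powered]
    if not on:
        return None  # no powered-on device left to report (not covered by the abstract)
    # The powered-on device with the smallest (or largest) unique number reports.
    reporter = max(on) if use_largest else min(on)
    off = sorted(num for num, powered in states.items() if not powered)
    if not off:
        return reporter, {"status": "all_normal"}
    return reporter, {"status": "devices_off", "off": off}
```

For example, `group_report({3: True, 1: False, 2: True})` returns `(2, {"status": "devices_off", "off": [1]})`. Device 1 has the smallest number overall but is off, so device 2 reports on its behalf. Only one message per group reaches the platform, which is where the claimed load reduction comes from.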

D2D-based multi-access edge computing task offloading method in the Internet of Vehicles environment

Active | CN111132077B | Make full use of computing resources | Satisfy the delay reliability condition | Power management | Particular environment based services | The Internet | Majorization minimization

The present invention discloses a D2D-based multi-access edge computing task offloading method in the Internet of Vehicles environment. The method models the task offloading strategy, transmission power and channel resource allocation as a mixed-integer nonlinear programming problem whose objective is to maximize the sum of the delay and energy-consumption benefits of all terminals (CUEs) that communicate with the base station in the Internet of Vehicles system. The method has low time complexity, makes effective use of the channel resources of the Internet of Vehicles system, and guarantees the delay reliability of the terminals (DUEs) that exchange local V2V data through D2D communication, thereby meeting low-latency and high-reliability requirements. The invention is verified on a simulation platform: the total delay and energy consumption of the CUEs are nearly minimized, and the method shows performance advantages over traditional task offloading methods.

Owner:SOUTH CHINA UNIV OF TECH
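
The abstract solves a joint offloading, power and channel-allocation problem with a low-complexity method that it does not spell out. The toy sketch below illustrates only the structure of the offloading decision. It enumerates binary offload/local choices for a handful of CUE tasks under an assumed cost model: weighted delay plus energy, with the edge server's CPU shared equally among offloaded tasks. Transmit power, channel allocation and the DUE reliability constraint are omitted, and every field name is hypothetical. If benefit is measured as cost saved relative to all-local execution, minimizing total cost here is equivalent to maximizing total benefit. Exhaustive search grows as 2^n, so it is only a reference for tiny instances.

```python
from itertools import product
from typing import Dict, List, Optional, Tuple

Task = Dict[str, float]  # hypothetical fields: cycles, f_local, kappa, bits, rate, p_tx


def local_cost(task: Task, w: float) -> float:
    delay = task["cycles"] / task["f_local"]
    energy = task["kappa"] * task["f_local"] ** 2 * task["cycles"]  # dynamic CPU energy model
    return w * delay + (1 - w) * energy


def offload_cost(task: Task, n_offloaded: int, f_edge: float, w: float) -> float:
    tx_time = task["bits"] / task["rate"]
    # assumption: the edge CPU is shared equally among all offloaded tasks
    delay = tx_time + task["cycles"] / (f_edge / n_offloaded)
    energy = task["p_tx"] * tx_time
    return w * delay + (1 - w) * energy


def best_offloading(tasks: List[Task], f_edge: float, w: float = 0.5) -> Tuple[Tuple[int, ...], float]:
    """Exhaustively search offload (1) / local (0) decisions for the lowest total cost."""
    best: Optional[Tuple[Tuple[int, ...], float]] = None
    for decision in product((0, 1), repeat=len(tasks)):
        n = sum(decision)
        cost = sum(
            offload_cost(t, n, f_edge, w) if d else local_cost(t, w)
            for t, d in zip(tasks, decision)
        )
        if best is None or cost < best[1]:
            best = (decision, cost)
    assert best is not None
    return best
```

In a two-task instance where task A is compute-heavy with little data and task B is light on compute but data-heavy, the search offloads A and runs B locally. Both tasks running on the edge is worse here because the shared edge CPU and B's long transmission time raise the combined cost.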

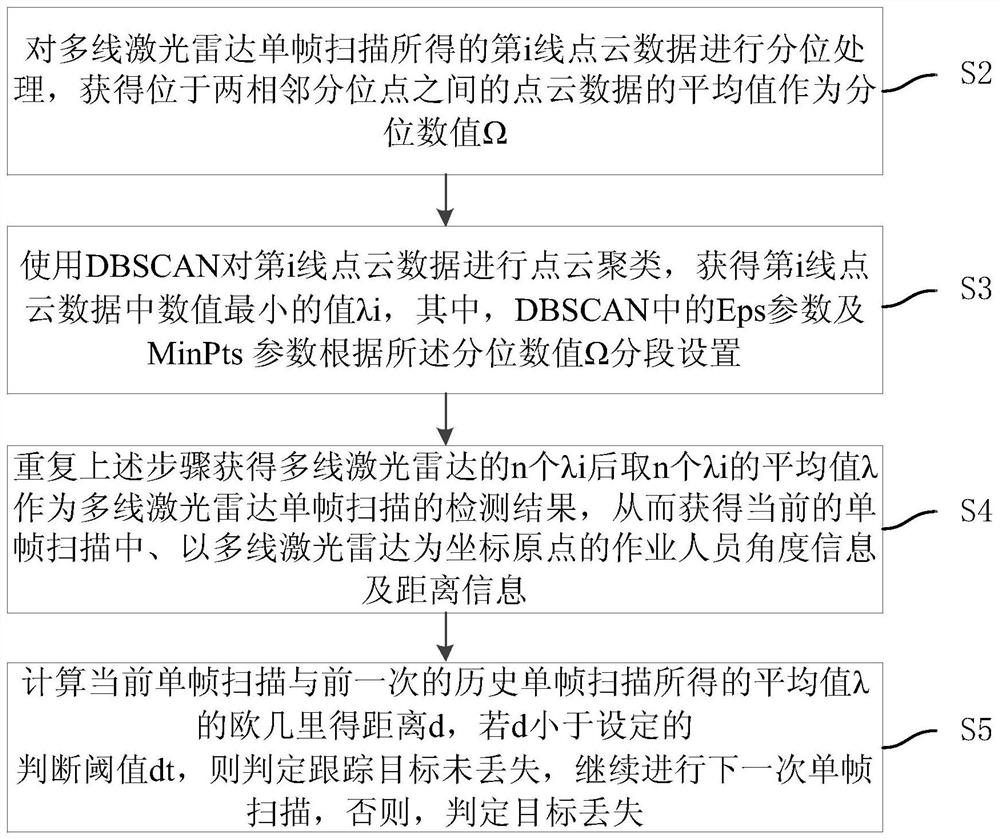

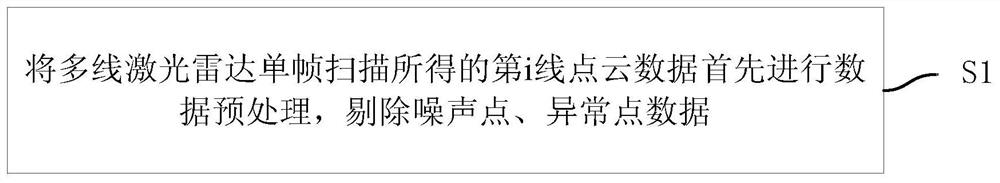

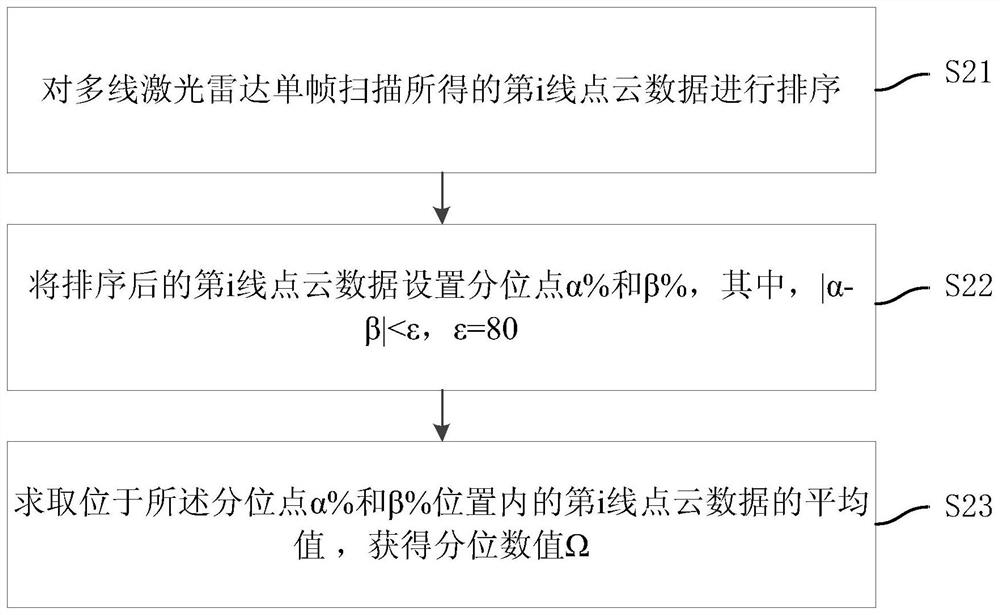

A robot intelligent self-following method, device, medium, and electronic equipment

Active | CN112493928B | High range resolution | Improve angular resolution | Image enhancement | Image analysis | Point cloud | Engineering

The invention discloses a robot intelligent self-following method, device, medium and electronic equipment. The self-following method includes the steps of: performing quantile processing on the i-th line point cloud data obtained from a single-frame scan of a multi-line lidar, and taking the average value of the point cloud data located between two adjacent quantile points as the quantile value Ω; performing point cloud clustering on the i-th line point cloud data using DBSCAN to obtain the minimum value λi of the i-th line point cloud data; repeating the above steps to obtain n values λi for the multi-line lidar, and taking the average λ of the n values λi as the detection result of the single-frame scan; and calculating the Euclidean distance d between the average values λ of the current single-frame scan and the previous historical single-frame scan to judge whether the target is lost. The invention is applicable both day and night and is little affected by the environment. During following, the tracking result for the operator is obtained with the multi-line lidar, so detection accuracy is high, and tracking accuracy is high when combined with the historical track.

Owner:广东盈峰智能环卫科技有限公司 (Guangdong Yingfeng Intelligent Environmental Sanitation Technology Co., Ltd.) +1
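
The following is a rough sketch of the per-frame detection and target-loss check. It relies on assumptions about details the abstract leaves open:

- Each lidar line is reduced to 1-D range values.
- λi is the smallest range among the points that DBSCAN does not mark as noise.
- λ is the mean of the λi values.
- The target counts as lost when the Euclidean distance between consecutive λ values exceeds a hypothetical threshold `max_jump`. For scalars, that distance is the absolute difference.

The quantile value Ω is left out because the abstract does not say how it feeds into the later steps. A small hand-written DBSCAN keeps the example dependency-free. `sklearn.cluster.DBSCAN` implements the same algorithm.

```python
from typing import List, Optional, Sequence


def dbscan_1d(values: Sequence[float], eps: float, min_samples: int) -> List[int]:
    """Plain DBSCAN on scalar values; returns a cluster label per point, -1 for noise."""
    labels: List[Optional[int]] = [None] * len(values)  # None = not yet visited

    def neighbors(i: int) -> List[int]:
        # includes the point itself, as in the usual min_samples convention
        return [j for j in range(len(values)) if abs(values[j] - values[i]) <= eps]

    cluster = 0
    for i in range(len(values)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # noise (may later be relabeled as a border point)
            continue
        labels[i] = cluster
        while seeds:
            j = seeds.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins the cluster but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_samples:  # core point: keep expanding the cluster
                seeds.extend(nbrs)
        cluster += 1
    return [lab for lab in labels if lab is not None]


def line_lambda(ranges: Sequence[float], eps: float = 0.2, min_samples: int = 3) -> Optional[float]:
    """Hypothetical λi: smallest range among the non-noise points of one lidar line."""
    labels = dbscan_1d(ranges, eps, min_samples)
    return min((r for r, lab in zip(ranges, labels) if lab != -1), default=None)


def frame_lambda(lines: Sequence[Sequence[float]]) -> float:
    """λ: mean of the per-line λi, skipping lines where every point was noise."""
    lams = [lam for lam in map(line_lambda, lines) if lam is not None]
    if not lams:
        raise ValueError("no line produced a cluster in this frame")
    return sum(lams) / len(lams)


def target_lost(current: float, previous: float, max_jump: float) -> bool:
    """Flag the target as lost when λ jumps by more than max_jump between frames."""
    return abs(current - previous) > max_jump
```

In this sketch an isolated near return, such as a single stray point at 0.2 m, is discarded as noise instead of being mistaken for the operator. That is the role clustering plays before the minimum is taken.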

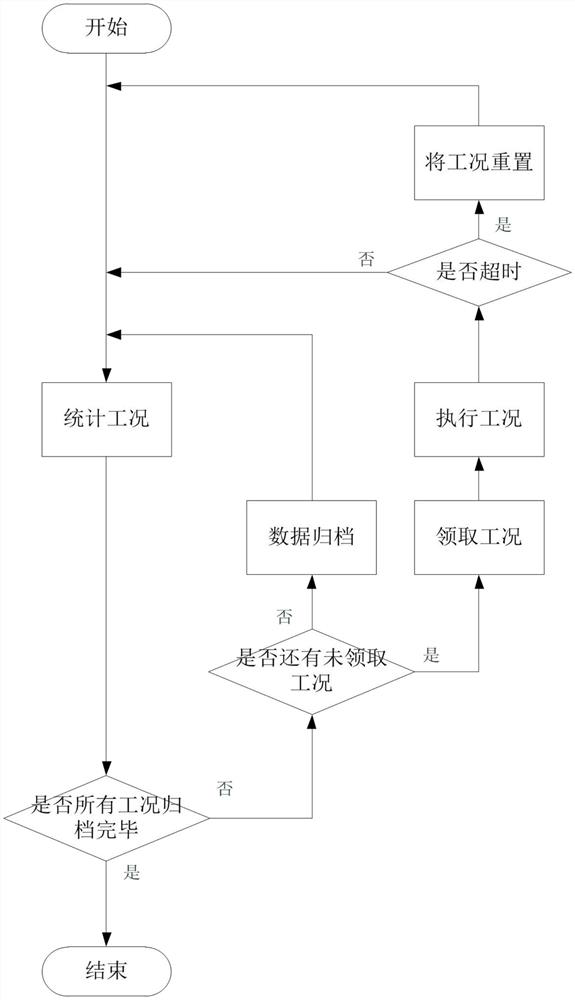

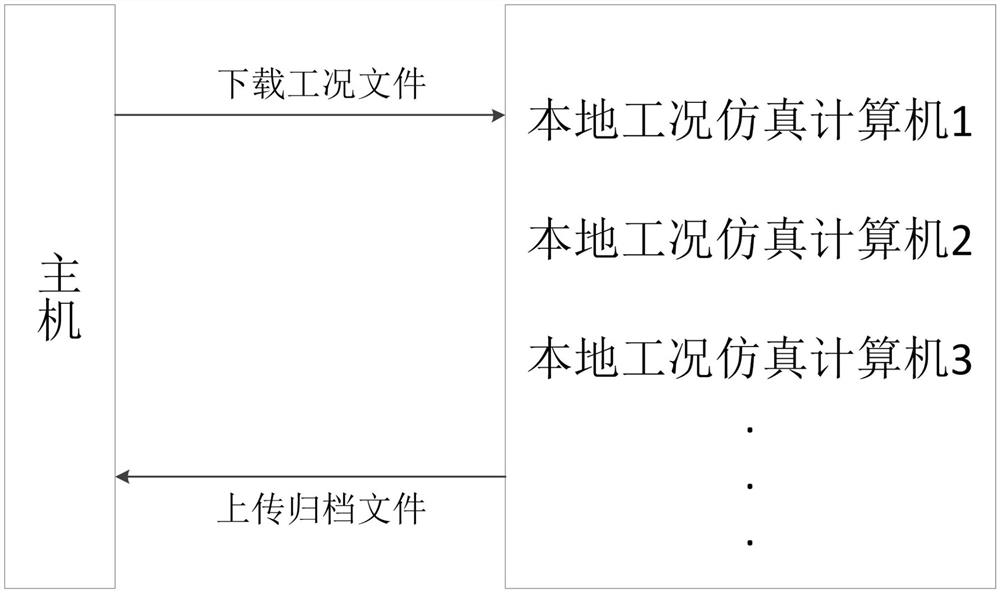

Artificial intelligence processing method and system for batch simulation of digital aircraft working condition set

Active | CN108334675B | Reduce workload | Make full use of computing resources | Design optimisation/simulation | File system administration | Batch processing | Flight vehicle

The invention discloses an artificial intelligence processing method and system for batch simulation of a digital aircraft working condition set. The method includes the following steps: accessing the digital aircraft simulation working condition set and storing the working condition information it contains in a batch processing task database; receiving at least one piece of working condition information from the batch task database and recording its receipt; downloading the configuration file corresponding to each received piece of working condition information and starting the digital aircraft simulation program to perform digital simulation according to the configuration file; and archiving all simulation data generated by the digital simulation to a batch archive database. The invention realizes artificial intelligence processing for batch simulation of digital aircraft working conditions. It can automatically simulate and archive data for a large number of working conditions and reduces human workload. When a large number of working conditions must be processed, it enables multi-machine parallel simulation, making full use of computing resources and saving simulation time.

Owner:BEIHANG UNIV
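
The receive, simulate and archive loop in the abstract maps naturally onto a shared task queue drained by several workers. The single-machine sketch below rests on several assumptions. Python threads stand in for simulation machines, a `queue.Queue` stands in for the batch task database, and dicts stand in for the receipt record and the archive database. `simulate` is a hypothetical callable representing the digital aircraft simulation program. Downloading the configuration file is folded into the `config` field of each working condition.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List, Tuple


def run_batch(
    conditions: List[Dict[str, Any]],
    simulate: Callable[[Any], Any],
    n_workers: int = 4,
) -> Tuple[Dict[Any, str], Dict[Any, Any]]:
    tasks: queue.Queue = queue.Queue()  # stand-in for the batch task database
    for cond in conditions:
        tasks.put(cond)
    received: Dict[Any, str] = {}  # condition id -> worker that received it
    archive: Dict[Any, Any] = {}   # condition id -> archived simulation result
    lock = threading.Lock()

    def worker(name: str) -> None:
        while True:
            try:
                cond = tasks.get_nowait()  # receive the next working condition
            except queue.Empty:
                return  # no work left
            with lock:
                received[cond["id"]] = name  # record the receipt
            result = simulate(cond["config"])
            with lock:
                archive[cond["id"]] = result  # archive the simulation data

    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        futures = [pool.submit(worker, f"node-{i}") for i in range(n_workers)]
        for f in futures:
            f.result()  # re-raise any exception from a worker
    return received, archive
```

Each worker keeps pulling conditions until the queue is empty, so faster workers simply process more conditions. Across real machines, the in-memory queue would be replaced by a shared database with an atomic claim step.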

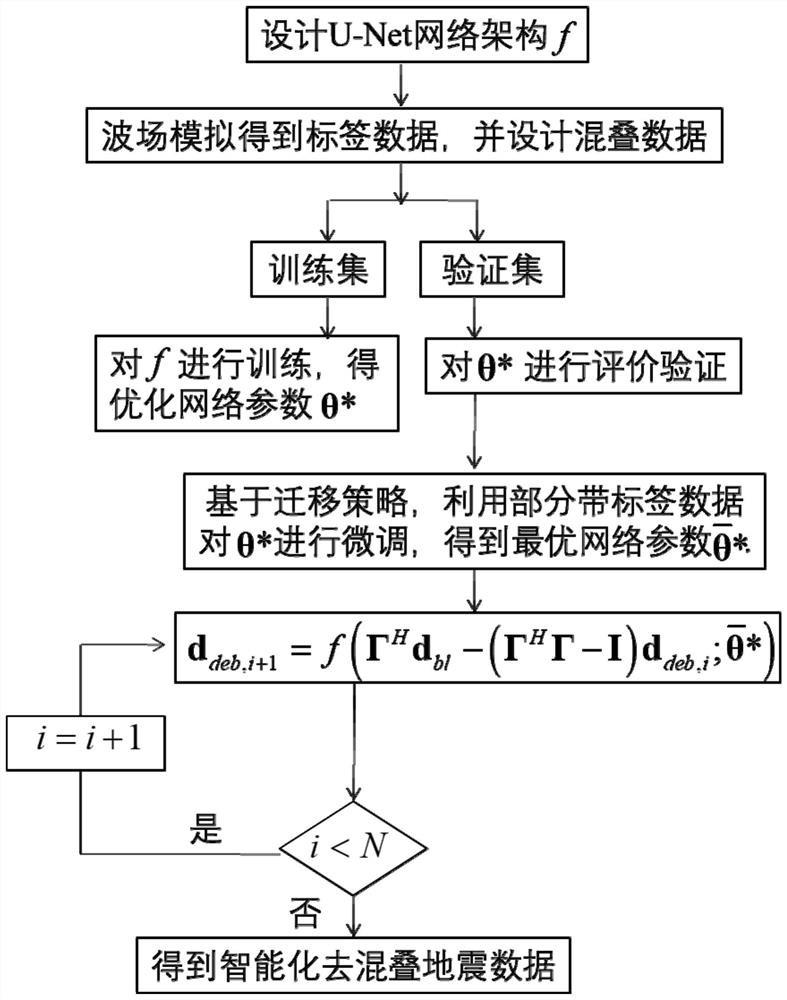

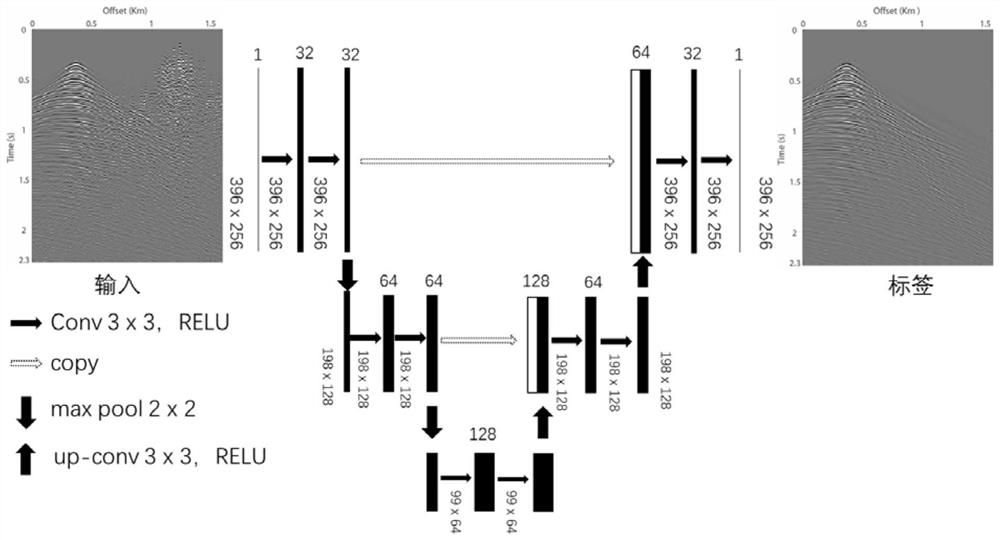

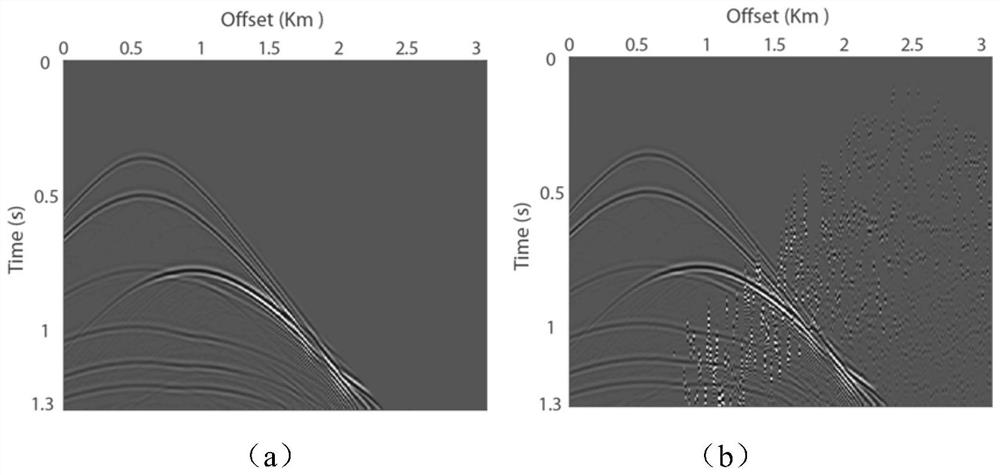

Intelligent seismic data anti-aliasing method and system based on u-net network

Active | CN111273353B | High anti-aliasing processing efficiency | Improve stability | Seismic signal transmission | Seismic signal processing | Engineering | Data mining

The present invention relates to an intelligent seismic data anti-aliasing method and system based on the U-Net network. The method comprises the following steps: (1) building a U-Net network f for seismic data anti-aliasing; (2) obtaining simulated training pairs consisting of simulated non-aliased seismic data and aliased seismic data; (3) training the U-Net network with the simulated aliased seismic data as input and the non-aliased seismic data as training labels to obtain the trained network parameters θ*; (4) based on transfer learning, fine-tuning the trained network parameters θ* with part of the actual aliased seismic data that has labels, to obtain optimized network parameters; and (5) using the optimized U-Net network to perform cyclic iterations on the seismic data to be processed to obtain separated seismic data. Compared with the prior art, the present invention avoids assumptions about data linearity, sparsity and low rank, and offers high anti-aliasing processing efficiency, good stability and high precision.

Owner:TONGJI UNIV

© 2025 PatSnap. All rights reserved.