An image registration method based on a convolutional neural network

This image registration technique based on a convolutional neural network applies to the field of image processing. It addresses problems such as distortion that cannot be corrected and the limited generalization of neural networks, while ensuring registration accuracy and robustness.


Examples

Embodiment 1

[0054] An embodiment of the present invention provides an image registration method based on a convolutional neural network (see Figure 1 and Figure 2). The method includes the following steps:

[0055] 101: Use the VGG-16 convolutional network to extract feature points from the reference image and from the moving image, generating a reference feature point set and a moving feature point set, respectively;

[0056] 102: When the distance matrix of the feature points satisfies both the first and the second constraint conditions, perform a pre-matching operation, i.e. the feature point x in the reference feature point set and the feature point y in the moving feature point set are declared matching points;

[0057] 103: Set a threshold and, over successive iterations, dynamically select the inliers among the pre-matched feature points, filter out the final feature points, and obtain the prior probability matrix;

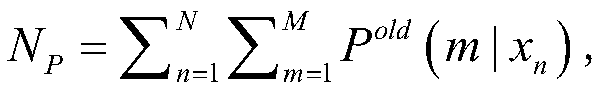

[0058] 104: Find the optimal parameters according t...
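Steps 102 and 103 can be sketched as follows. The source does not state what the first and second constraint conditions are, nor how the threshold changes across iterations, so everything specific below is an assumption for illustration: Euclidean descriptor distance, a mutual-nearest-neighbour test as "constraint 1", a nearest/second-nearest ratio test (hypothetical `ratio` parameter) as "constraint 2", a threshold relaxed by a hypothetical `step` per iteration, and a prior matrix that puts a hypothetical `confidence` mass on each selected match. None of these names come from the patent.

```python
import math
from typing import List, Sequence, Tuple

Descriptor = Sequence[float]
Pair = Tuple[int, int]


def distance_matrix(ref: List[Descriptor], mov: List[Descriptor]) -> List[List[float]]:
    """Euclidean distance: rows are reference descriptors, columns are moving descriptors."""
    return [[math.dist(x, y) for y in mov] for x in ref]


def pre_match(d: List[List[float]], ratio: float = 0.8) -> List[Pair]:
    """Step 102 sketch: keep (i, j) pairs that satisfy two ASSUMED constraints."""
    pairs = []
    for i, row in enumerate(d):
        if not row:
            continue
        j = min(range(len(row)), key=row.__getitem__)
        # Assumed constraint 1: x_i and y_j are mutual nearest neighbours.
        column = [d[k][j] for k in range(len(d))]
        if min(range(len(column)), key=column.__getitem__) != i:
            continue
        # Assumed constraint 2: the best distance is clearly smaller than the second best.
        second = sorted(row)[1] if len(row) > 1 else math.inf
        if row[j] < ratio * second:
            pairs.append((i, j))
    return pairs


def select_inliers(d, pairs, threshold, iteration, step=0.1):
    """Step 103 sketch: keep pairs under a threshold that is relaxed at each iteration."""
    limit = threshold * (1.0 + step * iteration)  # hypothetical schedule
    return [(i, j) for i, j in pairs if d[i][j] <= limit]


def prior_matrix(n_ref, n_mov, inliers, confidence=0.9):
    """Rows with a selected inlier put `confidence` on the matched column; other rows are uniform."""
    p = [[1.0 / n_mov] * n_mov for _ in range(n_ref)]
    for i, j in inliers:
        if n_mov == 1:
            p[i] = [1.0]
            continue
        rest = (1.0 - confidence) / (n_mov - 1)
        p[i] = [rest] * n_mov
        p[i][j] = confidence
    return p
```

Every row of the resulting matrix sums to 1, so it can be read as a per-point distribution over candidate matches; how the patent itself builds this matrix is not visible in this excerpt.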

Embodiment 2

[0065] The scheme of Embodiment 1 is described further below with reference to specific calculation formulas, examples, and Figures 1-2:

[0066] 201: Use the VGG-16 convolutional network to extract all feature points from the reference image I_X and generate the reference feature point set X; likewise, use the VGG-16 convolutional network to extract all feature points from the moving image I_Y and generate the moving feature point set Y;

[0067] In a specific implementation, the reference image I_X and the moving image I_Y are both resized to 224×224 (width × height), which gives a receptive field of suitable size (a term known in the art) and reduces the amount of computation.
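Because both images are resized independently along each axis, feature point coordinates found in the 224×224 images have to be scaled back before any transformation is applied to the original images. The source does not describe this step; the helpers below (`to_resized`, `to_original`, both hypothetical names) are a minimal sketch assuming simple per-axis scaling with no cropping or padding.

```python
from typing import Tuple

TARGET = 224  # side length used in the source for both I_X and I_Y


def to_resized(point: Tuple[float, float], width: int, height: int,
               target: int = TARGET) -> Tuple[float, float]:
    """Map an (x, y) location in the original image into the target x target image."""
    x, y = point
    return x * target / width, y * target / height


def to_original(point: Tuple[float, float], width: int, height: int,
                target: int = TARGET) -> Tuple[float, float]:
    """Map an (x, y) location in the resized image back to the original image."""
    x, y = point
    return x * width / target, y * height / target
```

For a 448×672 original, the centre of the resized image (112, 112) maps back to (224.0, 336.0); the two axes scale by different factors because the aspect ratio is not preserved.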

[0068] The VGG-16 convolutional network consists of five convolution blocks, each containing 2-3 convolutional layers, and each block is connected to a max-pooling layer at the en...
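The block structure described above determines the feature map sizes the later steps rely on. The sketch below encodes the standard VGG-16 configuration (2, 2, 3, 3, 3 convolutional layers with 64, 128, 256, 512, 512 channels, 3×3 convolutions with padding 1, and a 2×2 stride-2 max pool after each block) and computes the output shape of each pooling layer for a 224×224 input. `VGG16_BLOCKS` and `vgg16_pool_shapes` are illustrative names, not part of any library.

```python
# (number of 3x3 conv layers, output channels) for each of the five VGG-16 blocks
VGG16_BLOCKS = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]


def vgg16_pool_shapes(size: int = 224):
    """Return (pool name, height, width, channels) after each block's max-pooling layer."""
    shapes = []
    for index, (_n_convs, channels) in enumerate(VGG16_BLOCKS, start=1):
        # 3x3 convolutions with padding 1 keep the spatial size;
        # the 2x2, stride-2 max pooling at the end of the block halves it.
        size //= 2
        shapes.append((f"pool{index}", size, size, channels))
    return shapes


conv_layers = sum(n for n, _ in VGG16_BLOCKS)  # 13 convolutional layers in total
```

For a 224×224 input, pool3 yields a 28×28×256 feature map, which matches the 28×28 grid and 256-d features mentioned in Embodiment 3.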

Embodiment 3

[0119] The schemes of Embodiments 1 and 2 are described further below with reference to specific examples and calculation formulas:

[0120] 301: Extract feature points:

[0121] Use the VGG-16 convolutional network to extract feature points from the reference image I_X to generate the reference feature point set X, and from the moving image I_Y to generate the moving feature point set Y. The network construction is explained further with reference to Figure 1:

[0122] 1) The reference image I_X and the moving image I_Y are resized to 224×224 to obtain a receptive field of suitable size and to reduce the amount of computation.

[0123] 2) The VGG-16 convolutional network comprises five convolution stages. A 28×28 grid is used to segment the reference image I_X and the moving image I_Y. The output of the pooling layer pool3 is a 256-d feature map, and a feature descriptor is generate...
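With a 224×224 input, each cell of the 28×28 grid covers an 8×8 pixel patch, and each cell has a 256-d vector in the pool3 output. The excerpt is cut off before it says exactly how the descriptor is formed, so the sketch below assumes the simplest reading: one feature point per grid cell, placed at the cell centre, with the raw pool3 vector as its descriptor. `grid_feature_points` is a hypothetical helper, and the feature map is passed as a nested H × W × C list so the example needs no deep-learning library.

```python
from typing import List, Sequence, Tuple

FeaturePoint = Tuple[float, float, List[float]]


def grid_feature_points(feature_map: Sequence[Sequence[Sequence[float]]],
                        image_size: int = 224) -> List[FeaturePoint]:
    """Turn an H x W x C feature map into (x, y, descriptor) feature points.

    Assumption: each feature-map cell corresponds to one grid cell of the
    resized image, and the feature point sits at that cell's centre.
    """
    rows = len(feature_map)
    cols = len(feature_map[0])
    step_y = image_size / rows  # 224 / 28 = 8 pixels per cell for pool3
    step_x = image_size / cols
    points = []
    for r, row in enumerate(feature_map):
        for c, descriptor in enumerate(row):
            x = (c + 0.5) * step_x
            y = (r + 0.5) * step_y
            points.append((x, y, list(descriptor)))
    return points
```

For a 28×28 pool3 map this produces 784 feature points per image, centred at (4, 4), (12, 4), ..., (220, 220) in resized-image coordinates.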

