Behavior recognition method based on long-term fast and slow network fusion of posture joint points

A behavior recognition method based on long-term fast and slow network fusion. It addresses the problems that existing methods are unsuitable for long-video recognition, neglect spatial structure features, and express features insufficiently. Its stated effects are avoiding the loss of compensation information, improving the recognition rate and robustness, and reducing the data volume.

Embodiment Construction

[0015] The technical solution of the present invention is further described below in conjunction with the accompanying drawings. It should be understood that the embodiments provided below are intended only to disclose the present invention in detail and completely, and to fully convey its technical concept to those skilled in the art. The present invention can also be implemented in many different forms and is not limited to the embodiments described herein. The terms used in the exemplary embodiments shown in the drawings do not limit the present invention.

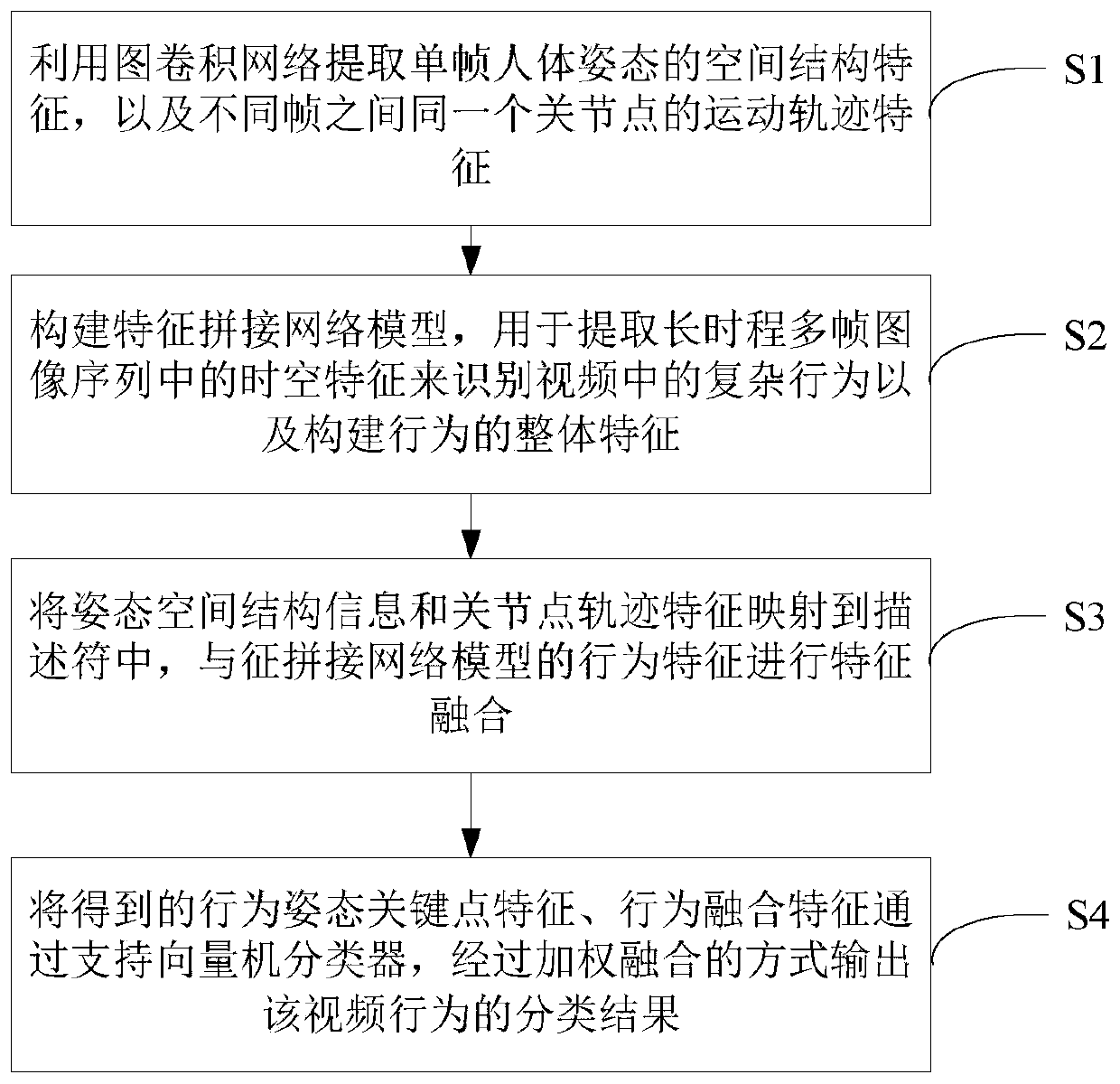

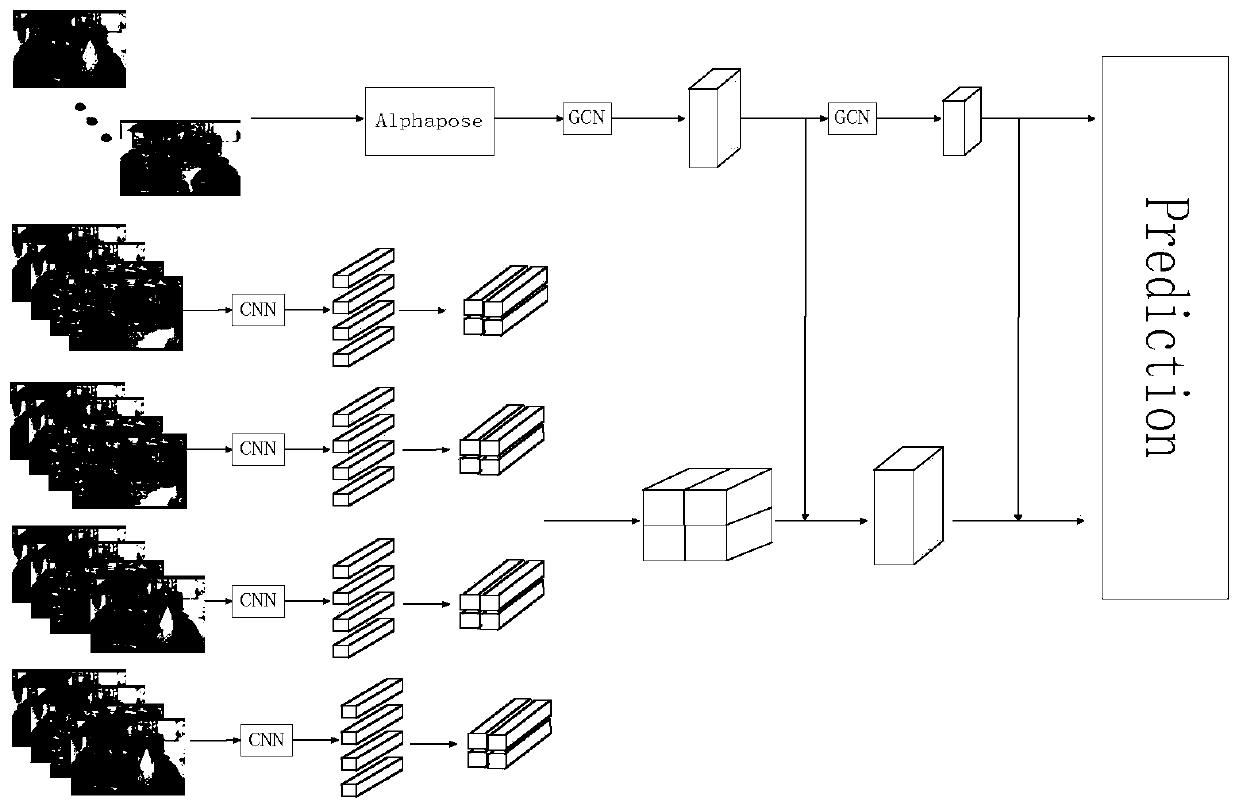

[0016] Figure 1 shows the flow chart of the behavior recognition method of the present invention, which is based on long-term fast and slow network fusion of posture joint points. Figure 2 is a schematic diagram of the fast and slow network fusion. Alphapose in the figures is the name of the algorithm used to locate and extract the pose joint points of people in RGB images. The extracted results ...
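The excerpt above does not say how the fast and slow networks consume the extracted joint points. As a rough illustration only, the sketch below assumes a two-rate scheme in which the slow stream sees sparsely sampled frames and the fast stream sees densely sampled ones. The helper `sample_pathways`, the stride values, and the toy joint data are all hypothetical and are not taken from the patent.

```python
from typing import List, Sequence, Tuple

Joint = Tuple[float, float]   # (x, y) position of one pose joint
Frame = List[Joint]           # all joints detected in one video frame


def sample_pathways(
    frames: Sequence[Frame],
    slow_stride: int = 8,     # assumed value, not from the patent
    fast_stride: int = 2,     # assumed value, not from the patent
) -> Tuple[List[Frame], List[Frame]]:
    """Split a per-frame joint sequence into a slow and a fast stream.

    Hypothetical helper: the slow stream keeps every `slow_stride`-th
    frame, the fast stream keeps every `fast_stride`-th frame.
    """
    if slow_stride <= 0 or fast_stride <= 0:
        raise ValueError("strides must be positive")
    slow = list(frames[::slow_stride])
    fast = list(frames[::fast_stride])
    return slow, fast


# Toy input standing in for pose-estimator output: 16 frames, 3 joints each.
frames = [[(float(t), float(j)) for j in range(3)] for t in range(16)]
slow, fast = sample_pathways(frames)
print(len(slow), len(fast))
```

In a real pipeline the two streams would feed separate networks whose features are later fused; how that fusion is done here is described only in the figures and the truncated text, so it is left out of the sketch.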
