Convolutional neural network hardware accelerator with novel convolution operation acceleration module

A convolutional neural network hardware accelerator, applied in the fields of electronic information and deep learning. It addresses problems such as data bus bit width, design goals that cannot be accommodated together, and a large number of additions, and it achieves low data transmission bandwidth and reduced power consumption.

Embodiment Construction

[0033] The present invention will be further described below in combination with specific embodiments.

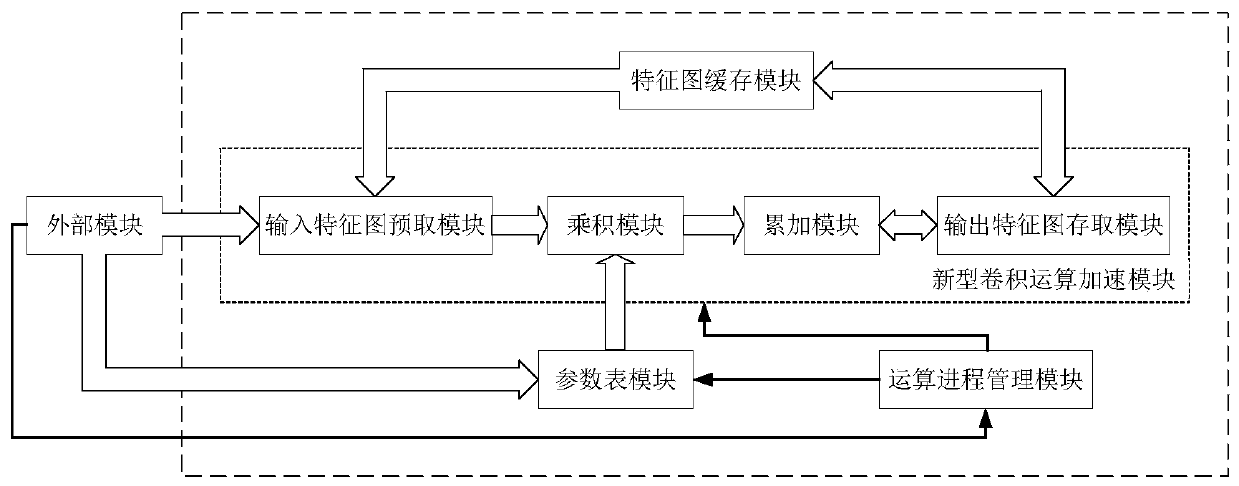

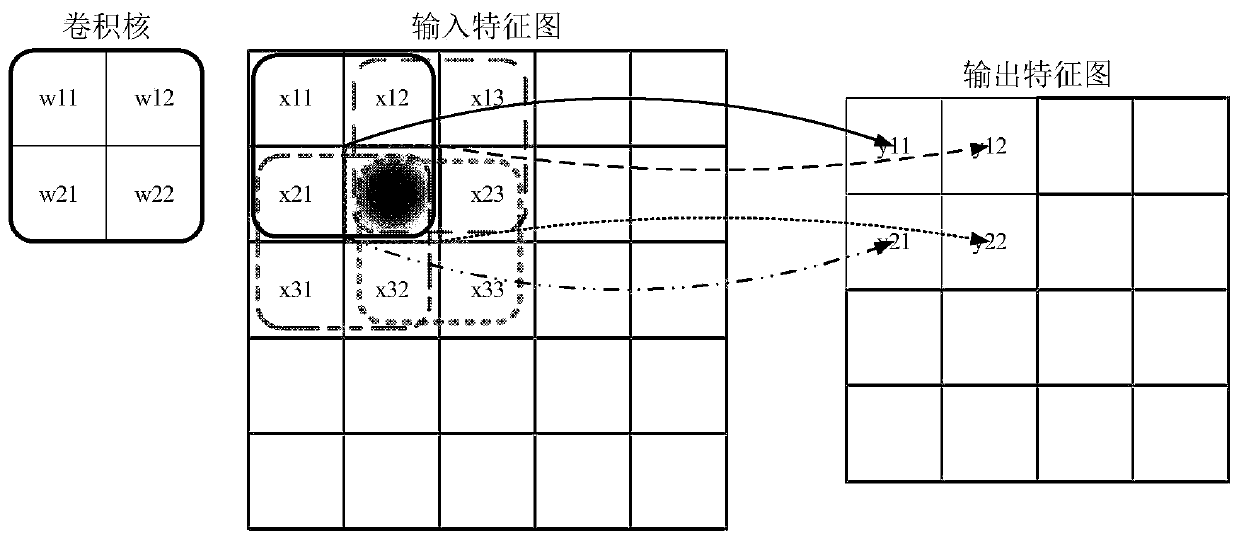

[0034] When a convolution kernel is applied to an input feature map to produce an output feature map, each convolution operation yields one pixel of the output feature map. Sliding the kernel to another region and performing the same convolution operation yields further output pixels, and traversing the entire input feature map with the kernel yields the complete output feature map. Computing the output feature map therefore amounts to repeating the same convolution operation many times, each time with a different input. The present invention follows this idea: once hardware that performs a single convolution operation has been designed, reusing that hardware over time computes the complete output feature map. Invent the process of performing a convolution operat...
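The reuse idea in paragraph [0034] can be illustrated in software. The following is a minimal sketch, not the patent's hardware design: the names `conv_once` and `conv2d` are hypothetical, and the sketch assumes a single channel, a stride of 1, no padding, and no kernel flip (the cross-correlation form commonly used in CNNs). `conv_once` stands in for the single convolution unit, and `conv2d` calls it once per output pixel, each time with a different input window.

```python
def conv_once(window, kernel):
    """One convolution operation: multiply-accumulate a window with the kernel.

    Hypothetical stand-in for the single hardware convolution unit.
    """
    return sum(
        w * k
        for w_row, k_row in zip(window, kernel)
        for w, k in zip(w_row, k_row)
    )


def conv2d(feature_map, kernel):
    """Slide the kernel over the feature map, reusing conv_once for each pixel.

    Assumes stride 1 and no padding (illustrative choices, not from the source).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(feature_map) - kh + 1
    out_w = len(feature_map[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Extract the input region currently covered by the kernel.
            window = [feature_map[r + i][c:c + kw] for i in range(kh)]
            # Same operation every time; only the input window changes.
            row.append(conv_once(window, kernel))
        output.append(row)
    return output


feature_map = [
    [1, 2, 3],
    [4, 5, 6],
    [7, 8, 9],
]
kernel = [
    [1, 0],
    [0, 1],
]
result = conv2d(feature_map, kernel)
print(result)  # [[6, 8], [12, 14]]
```

In this sketch the nested loops play the role of time multiplexing: a 3x3 input and a 2x2 kernel require four invocations of the same unit to fill the 2x2 output.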
